Information is the driving force of modern business and is now considered the most valuable strategic asset of any enterprise. The volume of information grows exponentially with the growth of global networks and the development of e-commerce. To succeed in the information war, you need an effective strategy for storing, protecting, sharing, and managing your most important digital property, data, both today and in the near future.

Managing storage resources has become one of the most pressing strategic issues facing IT staff. Because of the development of the Internet and fundamental changes in business processes, information is accumulating at an unprecedented rate. Besides the urgent problem of keeping up with the constant growth in stored data, the problems of reliable storage and uninterrupted access to information are no less acute. For many companies, data access "24 hours a day, 7 days a week, 365 days a year" has become the norm.

For a single PC, the storage system can be understood as an individual internal hard disk drive (HDD) or a set of disks. For corporate storage, three technologies are traditionally distinguished: Direct Attached Storage (DAS), Network Attached Storage (NAS), and Storage Area Network (SAN).

Direct Attached Storage (DAS)

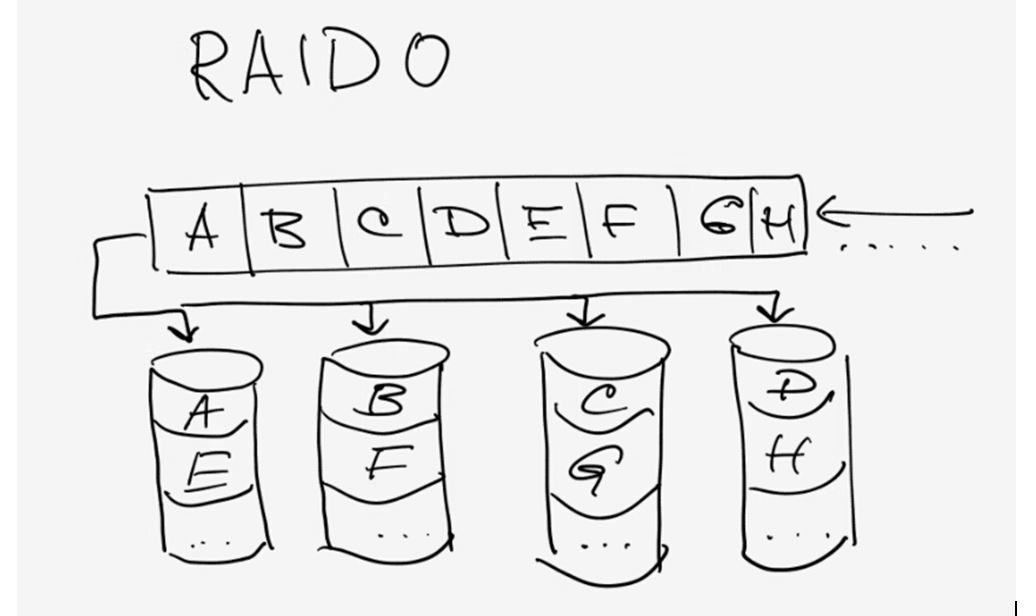

DAS technology implies a direct connection of drives to a server or PC. The drives (hard disks, tape drives) can be either internal or external. The simplest DAS system is a single disk inside a server or PC. An internal RAID array built with a RAID controller can also be classed as a DAS system.

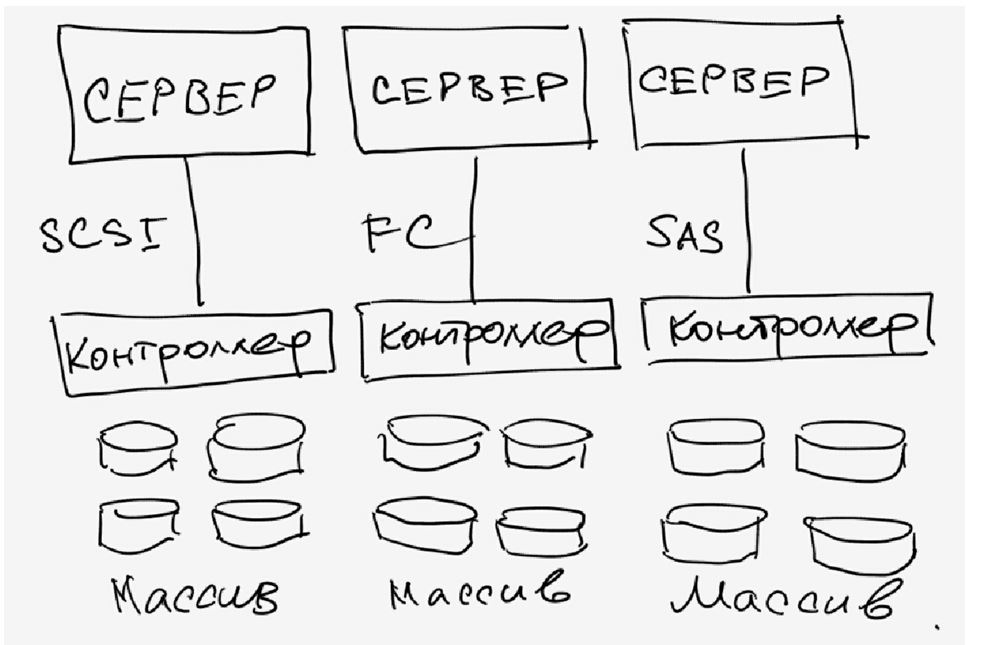

Although the term DAS can formally be applied to a single disk or an internal disk array, it is customary to understand a DAS system as an external rack or disk enclosure that can be regarded as an autonomous storage system (Figure 1). Besides an independent power supply, such stand-alone DAS systems have a specialized controller (processor) that manages the drive array. For example, a RAID controller capable of building RAID arrays of different levels can serve as this controller.

Fig. 1. Example of DAS-storage system

Stand-alone DAS systems can have several external I/O channels, which lets several computers connect to the same DAS system simultaneously.

Drives (internal or external) in a DAS system can be connected through SCSI (Small Computer System Interface), SATA, PATA, or Fiber Channel interfaces. SCSI, SATA, and PATA are used primarily for internal drives, whereas Fiber Channel is used exclusively for external drives and stand-alone storage systems. The advantage of Fiber Channel is that it has no strict cable-length limit, so it can be used when the server or PC is located far from the DAS system. SCSI and SATA can also connect external storage (for SATA, the external variant is called eSATA), but both impose a strict limit on the length of the cable between the DAS system and the server.

The main advantages of DAS systems are their low cost (compared with other storage solutions), ease of deployment and administration, and high data transfer speed between the storage system and the server. These qualities have made them very popular in small offices and small corporate networks. DAS systems also have drawbacks: poor manageability and suboptimal use of resources, since each DAS system requires a dedicated server.

DAS systems currently hold the leading position, but their share of sales is steadily decreasing. They are gradually being replaced either by universal solutions that allow smooth migration to NAS, or by systems that can be used as DAS, NAS, and even SAN systems.

DAS systems are appropriate when you need to expand the disk space of a single server and move that storage outside the server chassis. They can also be recommended for workstations that process large amounts of data (for example, non-linear video editing stations).

Network Attached Storage (NAS)

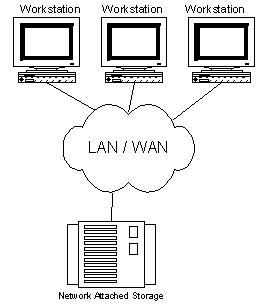

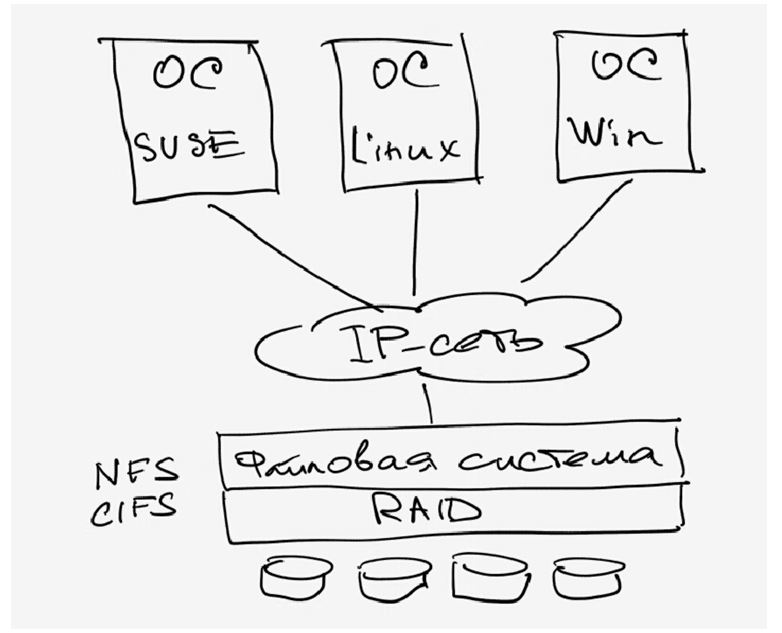

NAS systems are storage systems connected directly to the network in the same way as a network print server, a router, or any other network device (Figure 2). NAS systems are in effect an evolution of file servers: the difference between a traditional file server and a NAS device is about the same as that between a hardware network router and a software router running on a dedicated server.

Fig. 2. Example of NAS-storage system

To understand the difference between a traditional file server and a NAS device, recall that a traditional file server is a dedicated computer (server) that stores information available to network users. The information can be stored on hard disks installed in the server (usually in special drive cages), or DAS devices can be attached to the server. The file server is administered through the server operating system. This approach is currently the most popular one in small local networks, but it has a significant drawback: a general-purpose server (especially together with a server operating system) is far from cheap, while most of the functionality of a general-purpose server is simply never used in a file server. The idea, then, is to build an optimized file server with an optimized operating system and a balanced configuration. The NAS device embodies exactly this concept. In this sense, NAS devices can be regarded as "thin" file servers, or filers, as they are also called.

Besides an optimized OS, stripped of every function unrelated to file system maintenance and data I/O, NAS systems have a file system optimized for access speed. NAS systems are designed so that all their computing power is devoted solely to serving and storing files. The operating system resides in flash memory and is pre-installed by the manufacturer. When a new OS version is released, users can of course reflash the system themselves. Connecting a NAS device to the network and configuring it is a fairly simple task that any experienced user can handle, not to mention a system administrator.

Thus, compared with traditional file servers, NAS devices deliver higher performance at lower cost. At present, almost all NAS devices are intended for Ethernet networks (Fast Ethernet, Gigabit Ethernet) based on TCP/IP. NAS devices are accessed through special file access protocols, the most common of which are CIFS, NFS, and DAFS.

CIFS (Common Internet File System) is a protocol that provides access to files and services on remote computers (including over the Internet) and uses a client-server model. The client sends the server a request for access to files; the server executes the request and returns the result. CIFS is traditionally used on Windows local networks for file access and uses TCP/IP for data transport. It provides functionality similar to FTP (File Transfer Protocol) but gives clients finer control over files. It also lets clients share access to files by means of locking, and it automatically restores the connection to the server after a network failure.

NFS (Network File System) is traditionally used on UNIX platforms and combines a distributed file system with a network protocol. It also uses a client-server model and provides access to files on a remote host (server) as if they were on the user's own computer. NFS uses TCP/IP to transport data. The WebNFS protocol was developed so that NFS could work over the Internet.

DAFS (Direct Access File System) is a standard file access protocol based on NFS. It lets applications transfer data directly to the transport resources, bypassing the operating system and its buffers. DAFS delivers high file I/O speeds and lowers CPU load by significantly reducing the number of operations and interrupts normally required to process network protocols.

DAFS was designed for clustered and server environments running databases and a variety of Internet applications that must operate continuously. It provides the lowest latency of access to shared file resources and data, and it supports intelligent mechanisms for system and data recovery, which makes it attractive for NAS systems.

To summarize, NAS systems can be recommended for multiplatform networks where network access to files is required and where ease of installation and administration of the storage system matters. A good example is a NAS device used as the file server in a small company's office.

Storage Area Network (SAN)

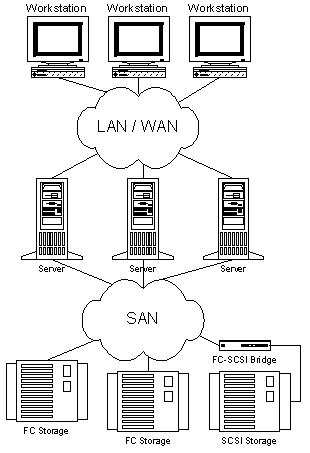

A SAN is not a single device but a complete solution: a specialized network infrastructure for data storage. Storage networks are integrated as separate, specialized subnets into a local area network (LAN) or wide area network (WAN).

A SAN connects one or more servers (SAN servers) with one or more storage devices. It lets any SAN server access any storage device without loading other servers or the local area network. In addition, storage devices can exchange data with one another without involving servers. As a result, a SAN allows a very large number of users to keep information in one place (with fast centralized access) and share it. The storage devices can be RAID arrays, various libraries (tape, magneto-optical, and so on), and JBOD systems (disk arrays not combined into a RAID).

Storage networks began to develop intensively and to be deployed only in 1999.

Just as local networks can in principle be built on different technologies and standards, so can SANs. But just as Ethernet (Fast Ethernet, Gigabit Ethernet) has become the de facto standard for local networks, Fiber Channel (FC) dominates storage networks. Indeed, it was the development of the Fiber Channel standard that gave rise to the SAN concept itself. At the same time, the iSCSI standard, which can also serve as the basis for a SAN, is becoming increasingly popular.

Along with speed, the most important advantages of Fiber Channel are its ability to work over long distances and its topological flexibility. A storage network topology is built on the same principles as a traditional local network based on switches and routers, which greatly simplifies the construction of multi-node configurations.

The Fiber Channel standard uses both fiber-optic and copper cables to transmit data. Access to geographically remote sites up to 10 km away is provided with standard equipment and single-mode fiber. If the nodes are farther apart (tens or even hundreds of kilometers), special amplifiers are used.

SAN network topology

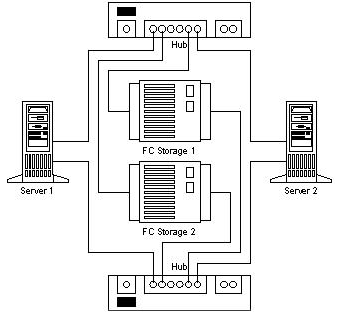

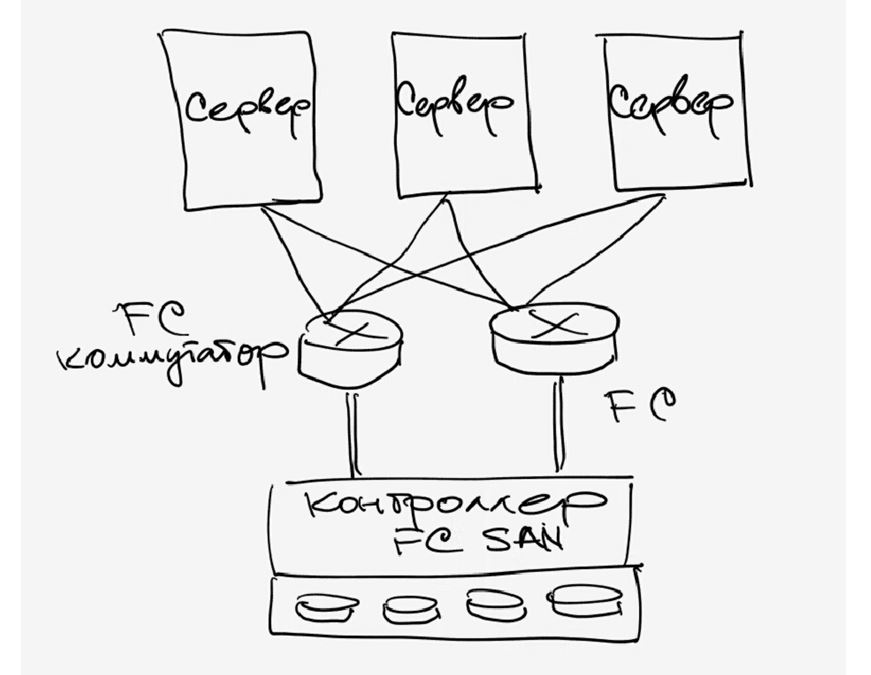

A typical Fiber Channel SAN is shown in Fig. 3. Its infrastructure consists of storage devices with a Fiber Channel interface, SAN servers (servers connected both to the local network through an Ethernet interface and to the SAN through a Fiber Channel interface), and the switching fabric (Fiber Channel Fabric), which is built from Fiber Channel switches (hubs) and is optimized for transferring large blocks of data. Network users access the storage system through the SAN servers. Importantly, traffic inside the SAN is separated from the IP traffic of the local network, which reduces the load on the local network.

Fig. 3. A typical SAN layout

Advantages of SANs

The main advantages of SAN technology are high performance, high data availability, excellent scalability and manageability, and the ability to consolidate and virtualize data.

Fiber Channel switching fabrics with a non-blocking architecture allow multiple SAN servers to access storage devices simultaneously.

In a SAN architecture, data can easily be moved from one storage device to another, which makes it possible to optimize data placement. This is especially important when several SAN servers need simultaneous access to the same storage devices. Note that data consolidation of this kind is impossible with other technologies, for example with DAS devices, that is, storage devices connected directly to servers.

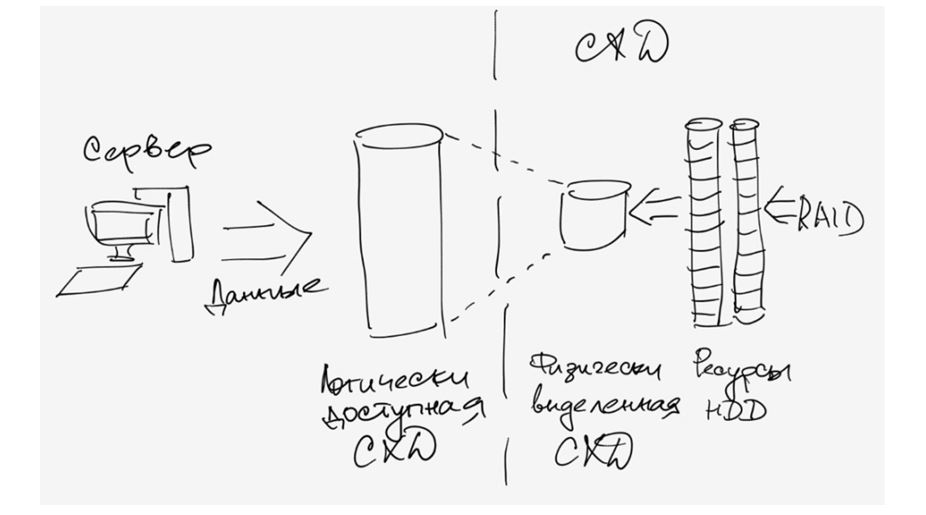

Another capability of the SAN architecture is data virtualization. The idea of virtualization is to give SAN servers access to resources rather than to individual storage devices. That is, servers should "see" not physical storage devices but virtual resources. In practice, a special virtualization device can be placed between the SAN servers and the disk devices, with the storage devices connected on one side and the SAN servers on the other. In addition, many modern FC switches and HBAs can implement virtualization themselves.
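The mapping that a virtualization layer performs can be sketched in a few lines. This is only an illustration under simple assumptions: the `VirtualVolume` class, the device names, and the plain concatenation of extents are hypothetical and are not taken from any particular product.

```python
class VirtualVolume:
    """Toy virtual resource: concatenates extents from several physical devices."""

    def __init__(self, extents):
        # extents: list of (device_name, size_in_blocks) pairs (hypothetical names).
        self.extents = list(extents)

    @property
    def size(self):
        # Total number of blocks the server "sees" as one resource.
        return sum(size for _, size in self.extents)

    def locate(self, virtual_block):
        """Translate a virtual block number to (device_name, block_on_device)."""
        if virtual_block < 0:
            raise IndexError("negative block number")
        offset = virtual_block
        for device, size in self.extents:
            if offset < size:
                return device, offset
            offset -= size
        raise IndexError("block beyond end of virtual volume")


volume = VirtualVolume([("array-a", 100), ("array-b", 50)])
print(volume.size, volume.locate(120))
```

The server addresses a single 150-block resource, while the layer decides which physical device actually holds each block.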

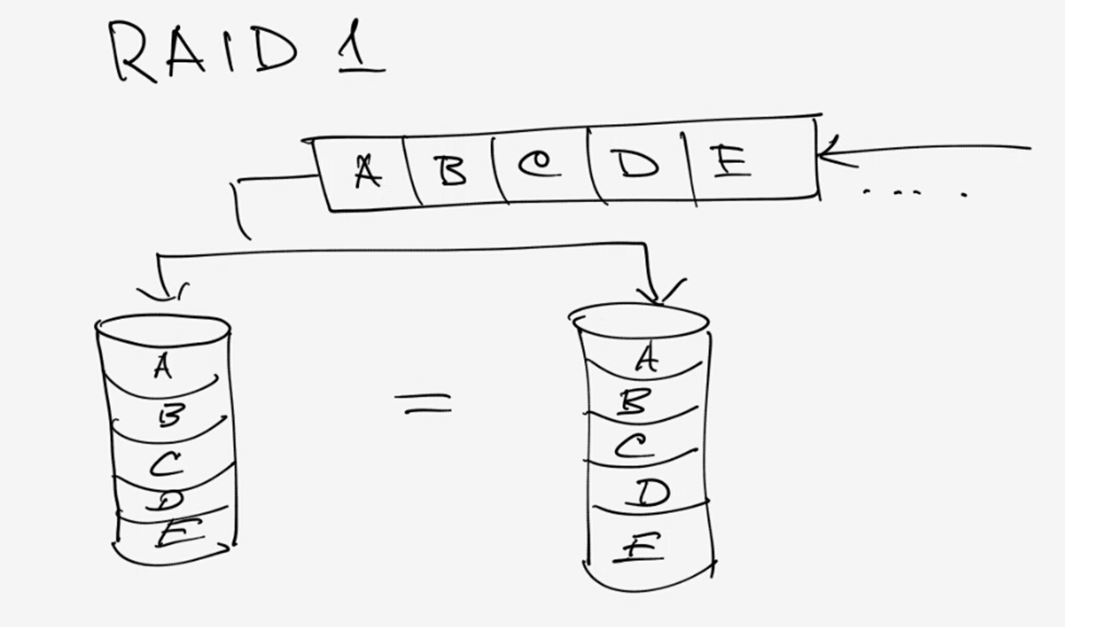

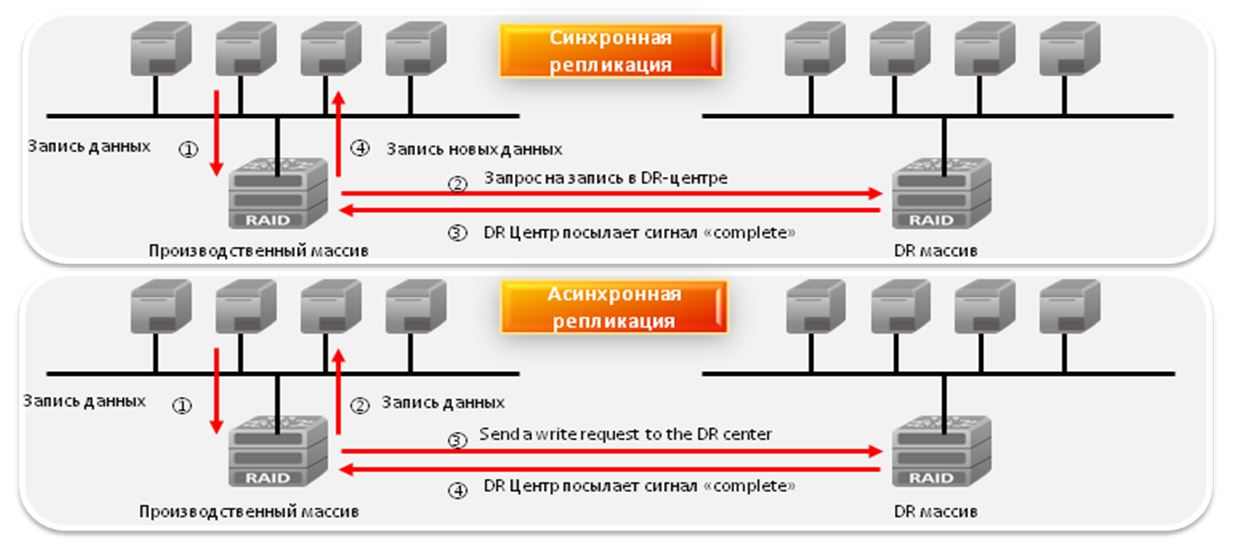

A further capability of SANs is remote data mirroring. Mirroring means duplicating information on multiple media, which increases the reliability of storage. The simplest example is combining two disks into a RAID level 1 array, in which the same information is written to both disks simultaneously. The disadvantage of this method is that both disks are in the same place (as a rule, in the same enclosure or rack). SANs overcome this drawback: they make it possible to mirror not just individual storage devices but entire SANs, which can be hundreds of kilometers apart.
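The mirroring principle can be illustrated with a minimal sketch. The `MirroredVolume` class and its in-memory "disks" are hypothetical stand-ins for real block devices; the sketch only shows that every write lands on all copies and that a read can be served from a surviving copy.

```python
class MirroredVolume:
    """Toy RAID 1 mirror: each block is written to every healthy disk."""

    def __init__(self, disk_count=2):
        # Each "disk" is a dict mapping block number -> bytes.
        self.disks = [dict() for _ in range(disk_count)]
        self.failed = set()

    def write(self, block, data):
        # Duplicate the block on every disk that has not failed.
        for index, disk in enumerate(self.disks):
            if index not in self.failed:
                disk[block] = data

    def fail(self, index):
        # Simulate the loss of one disk and everything stored on it.
        self.failed.add(index)
        self.disks[index].clear()

    def read(self, block):
        # Serve the read from the first healthy disk that holds the block.
        for index, disk in enumerate(self.disks):
            if index not in self.failed and block in disk:
                return disk[block]
        raise IOError("block unavailable on all mirrors")


volume = MirroredVolume()
volume.write(0, b"payload")
volume.fail(0)
print(volume.read(0))
```

Remote mirroring over a SAN follows the same logic, except that the copies sit in different locations rather than in one enclosure.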

Another advantage of SANs is how simple they make data backup. Traditional backup technology, used in most local networks, requires a dedicated backup server and, most importantly, dedicated network bandwidth. In effect, the server itself becomes unavailable to local network users during the backup operation. That is why backups are usually performed at night.

The SAN architecture allows a fundamentally different approach to backup. The backup server is an integral part of the SAN and is connected directly to the switching fabric, so backup traffic is isolated from LAN traffic.

Equipment used to build SANs

As already mentioned, deploying a SAN requires storage devices, SAN servers, and equipment for building the switching fabric. The switching fabric includes physical-layer components (cables, connectors), interconnect devices that link SAN nodes to one another, and translation devices that convert the Fiber Channel (FC) protocol to other protocols, for example SCSI, FCP, FICON, Ethernet, ATM, or SONET.

Cables

As already noted, the Fiber Channel standard allows both fiber-optic and copper cables for connecting SAN devices, and different cable types can be used within one SAN. Copper cable is used for short distances (up to 30 m), while fiber-optic cable is used for both short and long distances, up to 10 km and more. Both multimode and single-mode fiber-optic cables are used: multimode for distances up to 2 km, and single-mode for longer distances.
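The distance limits quoted above can be turned into a simple selection rule. The sketch below is an illustration only: the `choose_cable` function is hypothetical, and it assumes that the first medium able to cover the distance is preferred, whereas a real design would also weigh cost, speed, and connector types.

```python
def choose_cable(distance_m):
    """Pick a cable type using the distance limits quoted in the text."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= 30:
        return "copper"              # short runs, up to 30 m
    if distance_m <= 2_000:
        return "multimode fiber"     # up to 2 km
    return "single-mode fiber"       # longer distances


for distance in (10, 500, 10_000):
    print(distance, "m ->", choose_cable(distance))
```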

Different cable types can coexist within a single SAN thanks to special interface converters: GBIC (Gigabit Interface Converter) and MIA (Media Interface Adapter).

The Fiber Channel standard provides for several possible transmission rates (see table). Currently the most common FC devices follow the 1GFC, 2GFC, and 4GFC standards. Faster devices are backward compatible with slower ones; that is, a 4GFC device automatically supports connections to 1GFC and 2GFC devices.
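Backward compatibility means that two connected ports settle on the fastest rate both support. The sketch below models this in a deliberately simplified way; the `negotiate_speed` function and the speed tuples are assumptions made for illustration and do not reproduce the actual Fiber Channel speed negotiation procedure.

```python
def negotiate_speed(port_a_speeds, port_b_speeds):
    """Return the highest speed (in GFC units) supported by both ports."""
    common = set(port_a_speeds) & set(port_b_speeds)
    if not common:
        raise ValueError("ports share no common speed")
    return max(common)


# Hypothetical capability sets: a 4GFC port also accepts 1GFC and 2GFC links.
PORT_4GFC = (1, 2, 4)
PORT_2GFC = (1, 2)
PORT_1GFC = (1,)

print(negotiate_speed(PORT_4GFC, PORT_2GFC))
print(negotiate_speed(PORT_4GFC, PORT_1GFC))
```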

Interconnect Devices

The Fiber Channel standard allows several network topologies for connecting devices: point-to-point, arbitrated loop (FC-AL), and switched fabric.

A point-to-point topology can be used to connect a server to a dedicated storage system. In this case, the data is not shared with the SAN-network servers. In fact, this topology is a variant of the DAS-system.

To implement a point-to-point topology, at a minimum, you need a server equipped with a Fiber Channel adapter and a storage device with a Fiber Channel interface.

The arbitrated loop topology (FC-AL) is a connection scheme in which data travels around a logically closed loop. In an FC-AL loop, either hubs or Fiber Channel switches can act as the connection devices. With hubs, the bandwidth is shared among all nodes of the loop, whereas each switch port gives its node the full protocol bandwidth.

Figure 4 shows an example of a Fiber Channel arbitrated loop.
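The difference between shared and dedicated bandwidth is easy to quantify. The sketch below is an idealized estimate: the `per_node_bandwidth` function is hypothetical, the 100 MB/s link rate is just an example value, and the hub case assumes that all nodes transmit at once and share the loop evenly.

```python
def per_node_bandwidth(link_mb_s, nodes, device):
    """Estimate the bandwidth available to each node, in MB/s."""
    if nodes < 1:
        raise ValueError("at least one node is required")
    if device == "hub":
        # All nodes on the loop share one link's bandwidth.
        return link_mb_s / nodes
    if device == "switch":
        # Every switch port offers the full link bandwidth.
        return float(link_mb_s)
    raise ValueError("device must be 'hub' or 'switch'")


for device in ("hub", "switch"):
    print(device, per_node_bandwidth(100, 4, device))
```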

Fig. 4. Example of a Fiber Channel arbitrated loop

This configuration resembles the physical star and logical ring used in Token Ring local networks. As in Token Ring networks, data moves around the loop in one direction; unlike Token Ring, however, a device can request the right to transmit rather than wait for an empty token. A Fiber Channel arbitrated loop can address up to 127 ports, but in practice typical FC-AL loops contain up to 12 nodes, and performance degrades catastrophically once 50 nodes are connected.

The switched fabric topology (Fiber Channel switched fabric) is built from Fiber Channel switches. In this topology, each device has a logical connection to every other device. Fiber Channel switches in a switched fabric perform the same functions as traditional Ethernet switches. Recall that, unlike a hub, a switch is a high-speed device that connects every port to every other port and handles several simultaneous connections. Any node connected to a Fiber Channel switch receives the full protocol bandwidth.

In most cases, when building large SAN-networks, a mixed topology is used. At the lower level, FC-AL rings are used, connected to low-performance switches, which in turn are connected to high-speed switches providing the highest possible throughput. Multiple switches can be connected to each other.

Translation devices

Translation devices are intermediate devices that convert the Fiber Channel protocol to higher-level protocols. They connect a Fiber Channel network to an external WAN or a local network, and they connect various devices and servers to the Fiber Channel network. These devices include bridges, Fiber Channel host bus adapters (HBAs), routers, gateways, and network adapters. The classification of translation devices is shown in Figure 5.

Fig. 5. Classification of translation devices

The most common translation devices are HBAs with a PCI interface, which connect servers to the Fiber Channel network. Network adapters connect local Ethernet networks to Fiber Channel networks. Bridges connect storage devices with a SCSI interface to a Fiber Channel network. Note that nearly all recent storage devices intended for SANs have a built-in Fiber Channel interface and do not need bridges.

Storage Devices

Both hard drives and tape drives can serve as storage devices in a SAN. Hard disks can be configured either as JBOD arrays or as RAID arrays. Traditionally, storage devices for SANs are built as external racks or enclosures equipped with a specialized RAID controller. Unlike NAS or DAS devices, devices for SANs are equipped with a Fiber Channel interface, while the disks themselves can have either SCSI or SATA interfaces.

In addition to storage devices based on hard disks, tape drives and libraries are widely used in SAN-networks.

SAN servers

SAN servers differ from conventional application servers in only one respect. In addition to the Ethernet adapter that connects the server to the local network, they are equipped with an HBA, which connects them to a Fiber Channel SAN.

Intel Storage Systems

Next, we'll look at a few specific examples of Intel storage devices. Strictly speaking, Intel does not produce complete solutions; it develops and manufactures platforms and individual components for building storage systems. Many companies (including a number of Russian ones) build finished solutions on these platforms and sell them under their own brands.

Intel Entry Storage System SS4000-E

The Intel Entry Storage System SS4000-E is a NAS device designed for small and medium-sized offices and multiplatform local area networks. It gives clients on Windows, Linux, and Macintosh platforms shared network access to data. In addition, the Intel Entry Storage System SS4000-E can act as both a DHCP server and a DHCP client.

The Intel Entry Storage System SS4000-E is a compact external rack with the ability to install up to four drives with a SATA interface (Figure 6). Thus, the maximum capacity of the system can be 2 TB when using drives with a capacity of 500 GB.

Fig. 6. Intel Entry Storage System SS4000-E

The Intel Entry Storage System SS4000-E uses a SATA RAID controller that supports RAID levels 1, 5, and 10. Because this system is a NAS device, that is, essentially a "thin" file server, it must have its own processor, memory, and an embedded operating system. The processor is an Intel 80219 with a clock speed of 400 MHz. The system also has 256 MB of DDR memory and 32 MB of flash memory for storing the operating system, which is based on the Linux 2.6 kernel.
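The RAID level chosen determines how much of the raw capacity is actually usable. The sketch below applies the textbook capacity rules to the four-bay, 500 GB configuration mentioned earlier; the `usable_capacity_gb` function is hypothetical, the RAID 1 case assumes all drives mirror one another, and real controllers reserve some additional space for metadata.

```python
def usable_capacity_gb(level, drives, drive_gb):
    """Usable capacity of an array of identical drives, by RAID level."""
    if level == 1:
        if drives < 2:
            raise ValueError("RAID 1 needs at least 2 drives")
        return drive_gb                      # every drive holds the same data
    if level == 5:
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * drive_gb       # one drive's worth of parity
    if level == 10:
        if drives < 4 or drives % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        return (drives // 2) * drive_gb      # striped mirrored pairs
    raise ValueError("unsupported RAID level")


for level in (5, 10):
    print("RAID", level, usable_capacity_gb(level, 4, 500), "GB")
```

With four 500 GB drives, the 2 TB of raw capacity becomes 1500 GB under RAID 5 and 1000 GB under RAID 10 in this model.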

To connect to a local network, the system has a dual-channel Gigabit LAN controller. In addition, there are also two USB ports.

The Intel Entry Storage System SS4000-E supports the CIFS/SMB, NFS, and FTP protocols, and the device is configured through a web interface.

For Windows clients (Windows 2000, 2003, and XP are supported), data backup and recovery are also available.

Intel Storage System SSR212CC

The Intel Storage System SSR212CC is a universal platform for building DAS, NAS, and SAN storage systems. It comes in a 2U chassis designed for mounting in a standard 19-inch rack (Figure 7). The Intel Storage System SSR212CC supports up to 12 hot-pluggable SATA or SATA II drives, which allows the capacity to grow to 6 TB with 500 GB drives.

Fig. 7. Intel Storage System SSR212CC

The Intel Storage System SSR212CC is in fact a full-fledged high-performance server that runs Red Hat Enterprise Linux 4.0, Microsoft Windows Storage Server 2003, Microsoft Windows Server 2003 Enterprise Edition, or Microsoft Windows Server 2003 Standard Edition.

The basis of the server is the Intel Xeon processor with a clock frequency of 2.8 GHz (FSB 800 MHz, L2 cache size 1 MB). The system supports the use of SDRAM DDR2-400 memory with ECC up to a maximum of 12 GB (six DIMM slots are available for installing memory modules).

The Intel Storage System SSR212CC is equipped with two Intel RAID Controller SRCS28X units, which can create RAID arrays of levels 0, 1, 10, 5, and 50. In addition, the Intel Storage System SSR212CC has a dual-channel Gigabit LAN controller.

Intel Storage System SSR212MA

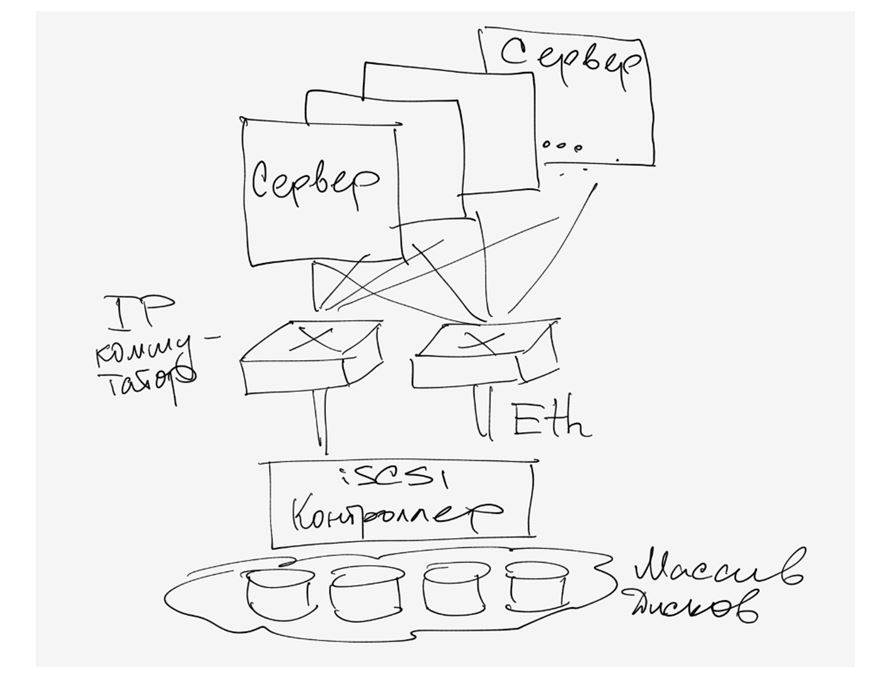

Intel Storage System SSR212MA is a platform for creating storage systems in IP SAN-networks based on iSCSI.

This system comes in a 2U chassis designed for mounting in a standard 19-inch rack. The Intel Storage System SSR212MA supports up to 12 hot-pluggable SATA drives, which allows the capacity to grow to 6 TB with 500 GB drives.

By its hardware configuration, the Intel Storage System SSR212MA system does not differ from the Intel Storage System SSR212CC.

SAS, NAS, SAN: a step toward storage networks

Introduction

As networked computer systems and global corporate solutions grew more complex by the day, the world began to demand technologies that would give new impetus to corporate storage systems. Now a single technology offers unparalleled performance, tremendous scalability, and exceptional total cost of ownership. The conditions created by the introduction of the FC-AL (Fiber Channel Arbitrated Loop) standard and of the SAN (Storage Area Network), which builds on it, promise a revolution in data-centric computing.

"The most significant development in storage we've seen in 15 years"

Data Communications International, March 21, 1998

The formal definition of a SAN as given by the Storage Network Industry Association (SNIA):

"A network whose primary purpose is the transfer of data between computer systems and storage elements and among storage elements. A SAN consists of a communication infrastructure, which provides physical connections, and a management layer, which organizes the connections, storage elements, and computer systems so that data transfer is secure and robust."

SNIA Technical Dictionary, copyright Storage Network Industry Association, 2000

Options for organizing access to storage systems

There are three main options for organizing access to storage systems:

- SAS (Server Attached Storage), storage connected to a server;

- NAS (Network Attached Storage), storage connected to the network;

- SAN (Storage Area Network), a storage network.

Let us consider the topologies of these systems and their features.

SAS

A storage system attached to a server. This is the familiar, traditional approach: the storage system is connected to a high-speed interface in the server, usually parallel SCSI.

Figure 1. Server Attached Storage

Within the SAS topology, a separate enclosure for the storage system is not mandatory.

The main advantage of server-attached storage over the other options is low price and high speed when one storage system serves one server. This topology is optimal when a single server provides access to the data set. Nevertheless, it has a number of problems that prompted designers to look for other ways of organizing access to storage systems.

The features of SAS include:

- Access to the data depends on the OS and the file system (in the general case);

- The complexity of organizing systems with high availability;

- Low cost;

- High performance within a single node;

- Slower response when the server that serves the storage is under load.

NAS

A storage system connected to the network. This way of organizing access appeared relatively recently. Its main advantage is the convenience of integrating an additional storage system into existing networks, but in itself it brings no radical improvement to storage architecture. In effect, a NAS is a pure file server, and today there are many new NAS implementations based on thin server technology.

Figure 2. Network Attached Storage.

Features of NAS:

- Dedicated file server;

- Access to data is independent of OS and platform;

- Convenience of administration;

- Maximum ease of installation;

- Low scalability;

- Conflict with LAN / WAN traffic.

Storage built on NAS technology is an ideal option for low-cost servers with a minimal set of functions.

SAN

Storage networks began to develop intensively and to be deployed only in 1999. The basis of a SAN is a network, separate from the LAN/WAN, that gives the servers and workstations that process data direct access to it. This network is built on the Fiber Channel standard, which gives storage systems the advantages of LAN/WAN technologies and makes it possible to build standard platforms for systems with high availability and a high request rate. Almost the only disadvantage of a SAN today is the relatively high price of its components, but the total cost of ownership of enterprise systems built with storage area network technology is quite low.

Figure 3. Storage Area Network.

Almost all features of a SAN can be counted among its main advantages:

- Independence of the SAN topology from storage systems and servers;

- Convenient centralized management;

- No conflict with LAN / WAN traffic;

- Convenient backup of data without loading the local network and servers;

- High speed;

- High scalability;

- High flexibility;

- High availability and fault tolerance.

It should also be noted that this technology is still quite young and will soon have to undergo many improvements in the standardization of management and in the ways SAN subnets interact. One can hope, however, that for the pioneers this means only additional opportunities to take the lead.

FC as a basis for building a SAN

Like a LAN, a SAN can be created using different topologies and media. A SAN can be built on a parallel SCSI interface, on Fiber Channel, or, say, on SCI (Scalable Coherent Interface), but the SAN owes its growing popularity to Fiber Channel. The specialists who designed this interface had considerable experience in developing both channel and network interfaces, and they managed to combine the important strengths of both technologies to produce something truly revolutionary. What exactly?

The key features of channels:

- Low latency

- High speeds

- High reliability

- Point-to-point topology

- Short distance between nodes

- Dependence on platform

The key features of network interfaces:

- Multipoint topologies

- Large distances

- High scalability

- Low speed

- Long delays

Fiber Channel combines them:

- High speeds

- Independence from the protocol (levels 0-3)

- Large distances

- Low latency

- High reliability

- High scalability

- Multipoint topologies

Traditionally, interfaces (whatever sits between the host and the storage devices) have been an obstacle to growth in the performance and capacity of storage systems. At the same time, applications demand a significant increase in hardware capacity, which in turn requires more bandwidth on the interfaces to storage. It is precisely the problem of building flexible, high-speed access to data that Fiber Channel helps to solve.

The Fiber Channel standard was finalized over the last few years (from 1997 to 1999), during which a tremendous effort was made to harmonize the interoperability of components from different manufacturers, and everything necessary was done to turn Fiber Channel from a purely conceptual technology into a real one, backed by installations in laboratories and computer centers. In 1997, the first commercial samples of the cornerstone components for building FC-based SANs, such as adapters, hubs, switches and bridges, were designed. Thus, since 1998, FC has been used commercially in business, in manufacturing and in large-scale projects implementing failure-critical systems.

Fiber Channel is an open industry standard for a high-speed serial interface. It connects servers and storage systems at distances of up to 10 km (using standard equipment) at a speed of 100 MB / s. At the CeBIT 2000 exhibition, sample products were shown that use the new Fiber Channel standard with a speed of 200 MB / s per ring, and an implementation of the new standard at 400 MB / s, or 800 MB / s with a dual ring, is already running in the laboratory. (At the time of publication, a number of manufacturers had already begun shipping 200 MB / s FC network cards and switches.) Fiber Channel simultaneously supports a number of standard protocols (including TCP / IP and SCSI-3) over a single physical medium, which potentially simplifies the network infrastructure and also reduces installation and maintenance costs. Nevertheless, using separate subnets for LAN / WAN and SAN has several advantages and is recommended by default.

One of the most important advantages of Fiber Channel, along with its speed (which, incidentally, is not always the main concern for SAN users and can be achieved with other technologies), is the ability to work over long distances and the topological flexibility that the new standard inherited from network technologies. The storage network topology is therefore built on the same principles as traditional networks, usually around hubs and switches, which prevent speed from dropping as the number of nodes grows and make it easy to build systems without a single point of failure.

For a better understanding of the advantages and features of this interface, we give the comparative characteristics of FC and Parallel SCSI in the form of a table.

Table 1. Comparison of Fiber Channel and Parallel SCSI Technologies

The Fiber Channel standard allows a variety of topologies: point-to-point, ring or FC-AL hub (Loop or Hub FC-AL), and switched fabric (Fabric / Switch).

The point-to-point topology is used to connect a single stand-alone system to the server.

The loop (or FC-AL hub) topology is used to connect multiple devices to multiple hosts. With a dual ring, both the speed and the fault tolerance of the system increase.

The switches are used to provide maximum speed and fault tolerance for complex, large and branched systems.

The network flexibility of a SAN brings with it an extremely important feature: a convenient way to build fault-tolerant systems.

By offering alternative routes to storage and the ability to duplicate hardware, a SAN helps protect hardware and software systems from hardware failures. To demonstrate, here is an example of a two-node system with no single point of failure.

Figure 4. No Single Point of Failure.

Systems with three or more nodes are built simply by adding servers to the FC network and connecting them to both hubs / switches.
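The idea of "no single point of failure" can be checked with a small graph model. The sketch below is only an illustration: the component names and the cabling in `LINKS` are hypothetical (they are not taken from Figure 4), and the storage array is treated as one node, ignoring its internal redundancy.

```python
from collections import deque

# Hypothetical two-node layout: every server and the storage array
# is cabled to both FC hubs / switches.
LINKS = [
    ("server1", "switch_a"), ("server1", "switch_b"),
    ("server2", "switch_a"), ("server2", "switch_b"),
    ("switch_a", "storage"), ("switch_b", "storage"),
]


def reachable(links, start, goal, failed=None):
    """Breadth-first search from start to goal, skipping the failed component."""
    if failed in (start, goal):
        return False
    graph = {}
    for a, b in links:
        if failed in (a, b):
            continue  # links touching the failed component are unusable
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def single_points_of_failure(links, servers, storage):
    """Intermediate components whose loss cuts every server off from storage."""
    components = {n for link in links for n in link} - set(servers) - {storage}
    return sorted(
        c for c in components
        if not any(reachable(links, s, storage, failed=c) for s in servers)
    )
```

With the dual-switch layout the function returns an empty list; with a single switch between server and storage, that switch is reported as a single point of failure.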

With FC, building disaster-tolerant systems becomes straightforward. Network links for both the storage network and the local network can be run over optical fiber (up to 10 km, or more with signal amplifiers) as the physical medium for FC, while standard equipment is used, which significantly reduces the cost of such systems.

Because all SAN components can be accessed from any point in it, we get an extremely flexible, manageable data network. It should also be noted that a SAN provides transparency (visibility) of all components, right down to the disks in the storage systems. This has prompted component manufacturers to apply their considerable experience in building LAN / WAN management systems and to build broad monitoring and management capabilities into all SAN components. These capabilities include monitoring and managing individual nodes, storage components, enclosures, network devices and network substructures.

The SAN management and monitoring system uses such open standards as:

- SCSI command set

- SCSI Enclosure Services (SES)

- SCSI Self Monitoring Analysis and Reporting Technology (S.M.A.R.T.)

- SAF-TE (SCSI Accessed Fault-Tolerant Enclosures)

- Simple Network Management Protocol (SNMP)

- Web-Based Enterprise Management (WBEM)

Systems built using SAN technologies not only provide the administrator with the ability to monitor the development and status of resources, but also provide opportunities for monitoring and controlling traffic. Thanks to such resources, SAN management software implements the most effective scheduling schemes for the volume of workloads and balancing the load on system components.

Storage networks integrate well into existing information infrastructures. Deploying them requires no changes to existing LAN and WAN networks; it only extends the capabilities of existing systems by relieving them of tasks that involve transferring large amounts of data. Moreover, when integrating and administering a SAN, it is very important that the key network elements support hot swapping and hot installation, with dynamic configuration. The administrator can then add or replace a component without shutting down the system, and the entire integration process can be displayed visually in the graphical SAN management system.

Given the advantages above, several key points directly affect one of the main advantages of the Storage Area Network - its Total Cost of Ownership.

Its scalability allows an enterprise that uses a SAN to invest in servers and storage as needed, and to preserve its investment in already installed equipment as technology generations change. Each new server gets high-speed access to storage, and every additional gigabyte of storage is available to all servers on the subnet at the administrator's command.

Excellent opportunities for building fault-tolerant systems can bring a direct commercial benefit by minimizing downtime and can save the system in the event of a natural disaster or other catastrophe.

The manageability of components and the transparency of the system make it possible to centralize administration of all resources, which in turn significantly reduces the cost of supporting them, a cost that, as a rule, exceeds 50% of the cost of the equipment.

The impact of SAN on applied tasks

To make it clearer to our readers how useful the technologies discussed in this article are, here are some examples of applied tasks that, without storage networks, would be solved inefficiently, would require huge financial investments or could not be solved by standard methods at all.

Data Backup and Recovery

Using the traditional SCSI interface, the user, when building backup and recovery systems, faces a number of complex problems that can be solved very simply using SAN and FC technologies.

Thus, the use of SANs brings the solution of the backup and restore task to a new level and provides the ability to backup several times faster than before, without loading the local network and servers with data backup.

Server Clustering

One of the typical tasks for which a SAN is used effectively is server clustering. Since access to storage is one of the key points in organizing high-speed clusters that work with data, with the advent of SAN building multi-node clusters at the hardware level comes down to adding a server with a connection to the SAN (this can be done without even shutting down the system, since FC switches support hot-plugging). With a parallel SCSI interface, whose connectivity and scalability are significantly worse than those of FC, data-oriented clusters would be hard to build with more than two nodes. Parallel SCSI switches are very complex and expensive devices, whereas for FC a switch is a standard component. To create a cluster without a single point of failure, it is enough to integrate a mirrored SAN (DUAL Path technology) into the system.

Within clustering, RAIS (Redundant Array of Inexpensive Servers) looks particularly attractive for building powerful, scalable Internet commerce systems and other tasks with high performance requirements. According to Alistair A. Croll, co-founder of Networkshop Inc, the use of RAIS is quite effective: "For example, for $12,000-15,000 you can buy about six inexpensive one- or two-processor (Pentium III) Linux / Apache servers. The power, scalability and fault tolerance of such a system will be significantly higher than, for example, those of a single four-processor server based on Xeon processors, and the cost is the same."

Concurrent video streaming and data sharing

Imagine that you need to edit video on several (say, > 5) stations or simply work with huge data sets. Transferring a 100 GB file over the LAN will take you a few minutes, and working on it jointly will be very difficult. With a SAN, each workstation and network server accesses the file at a speed equivalent to that of a local high-speed disk. If you need another station / server for data processing, you can add it to the SAN without shutting down the network, simply by connecting the station to the SAN switch and granting it access rights to the storage. If you are not satisfied with the performance of the data subsystem, you can simply add one more storage system and, using data striping (for example, RAID 0), get twice the speed.
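To illustrate why striping across two storage systems can roughly double sequential speed, here is a minimal sketch of RAID 0 style block placement. The function names and the `stripe_blocks` parameter are illustrative assumptions, and the throughput figure is an idealized upper bound, not a measurement.

```python
def stripe_location(logical_block, num_disks, stripe_blocks=1):
    """Map a logical block number to (disk index, block offset on that disk)."""
    stripe_number, within = divmod(logical_block, stripe_blocks)
    disk = stripe_number % num_disks  # stripes rotate across the disks
    offset = (stripe_number // num_disks) * stripe_blocks + within
    return disk, offset


def ideal_throughput(per_device_mb_s, num_devices):
    """Idealized sequential throughput; real arrays deliver less."""
    return per_device_mb_s * num_devices
```

Consecutive blocks land on alternating devices, so a long sequential read keeps both devices busy at once; that is where the "twice the speed" estimate comes from.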

Basic SAN components

Medium

Copper and optical cables are used to connect components within the Fiber Channel standard. Both types can be used at the same time when building a SAN. Interfaces are converted using GBIC (Gigabit Interface Converter) and MIA (Media Interface Adapter) modules. Both cable types currently provide the same data rate. Copper cable is used for short distances (up to 30 meters); optical cable is used for both short distances and distances of up to 10 km and more. Both multimode and single-mode optical cables are used. Multimode cable is used for short distances (up to 2 km). The core diameter of multimode fiber is 62.5 or 50 microns. To sustain a transfer rate of 100 MB / s (200 MB / s in duplex) over multimode fiber, the cable should be no longer than 200 meters. Single-mode cable is used for long distances. Its length is limited by the power of the laser used in the signal transmitter. The core diameter of single-mode fiber is 7 or 9 microns, which allows only a single beam to pass.
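The distance limits quoted above can be turned into a simple lookup. This is only a sketch based on the figures in this article (30 m for copper, 200 m for multimode fiber at the full 100 MB / s rate); the function name is hypothetical, and real limits depend on the specific cable and transceiver.

```python
COPPER_MAX_M = 30               # copper limit quoted in the article
MULTIMODE_FULL_RATE_MAX_M = 200  # multimode limit at 100 MB/s quoted in the article


def suggest_media(distance_m):
    """Return the media types that the article's figures allow for a link length."""
    options = []
    if distance_m <= COPPER_MAX_M:
        options.append("copper")
    if distance_m <= MULTIMODE_FULL_RATE_MAX_M:
        options.append("multimode fiber")
    # Single-mode reach depends on laser power, so no upper bound is modeled.
    options.append("single-mode fiber")
    return options
```

For example, a 10-meter link can use any of the three media, while a 5 km link leaves only single-mode fiber.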

Connectors, adapters

Copper cables are connected using DB-9 or HSSD connectors. HSSD is considered more reliable, but DB-9 is used just as often because it is simpler and cheaper. The standard (most common) connector for optical cables is the SC connector, which provides a good, clean connection. Multimode SC connectors are used for a typical connection, and single-mode connectors for a single-mode connection. Microport connectors are used in multiport adapters.

The most common FC adapters are made for the 64-bit PCI bus. Many FC adapters are also produced for the S-BUS, and special adapters for MCA, EISA, GIO, HIO, PMC and Compact PCI are available for specialized use. Single-port cards are the most popular, but there are also two- and four-port cards. PCI adapters usually use DB-9, HSSD or SC connectors. GBIC-based adapters are also common and come both with and without GBIC modules. Fiber Channel adapters differ in the classes they support and in a variety of other features. To understand the differences, let's look at a comparison table of adapters manufactured by QLogic.

Fiber Channel Host Bus Adapter Family Chart

| SANblade | 64 Bit | FCAL Publ. Pvt Loop | FL Port | Class 3 | F Port | Class 2 | Point to Point | IP / SCSI | Full Duplex | FC Tape | PCI 1.0 Hot Plug Spec | Solaris Dynamic Reconfig | VIB | 2Gb |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2100 Series | 33 & 66MHz PCI | X | X | X | | | | | | | | | | |
| 2200 Series | 33 & 66MHz PCI | X | X | X | X | X | X | X | X | X | | | | |
| | 33MHz PCI | X | X | X | X | X | X | X | X | X | X | | | |
| | 25 MHz Sbus | X | X | X | X | X | X | X | X | X | X | | | |
| 2300 Series | 66 MHz PCI / 133 MHz PCI-X | X | X | X | X | X | X | X | X | X | X | X | | |

Hubs

Fiber Channel hubs (concentrators) are used to connect nodes to an FC ring (FC Loop) and are structured much like Token Ring hubs. Since a break in the ring can bring the network down, modern FC hubs use port bypass circuits (PBC) that automatically open / close the ring (connecting / disconnecting the systems attached to the hub). FC hubs usually support up to 10 connections and can be stacked up to 127 ports per ring. All devices connected to a hub share a common bandwidth.

Switches

Fiber Channel switches have the same functions as the familiar LAN switches. They provide a full-speed, non-blocking connection between nodes. Any node attached to an FC switch receives the full bandwidth (which scales). As the number of ports in the switched network grows, its aggregate throughput grows too. Switches can be used together with hubs (which serve segments that do not need dedicated bandwidth for each node) to achieve the best price / performance ratio. By cascading, switches can potentially be used to build FC networks with an address range of 2^24 (over 16 million).
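The "over 16 million" figure follows directly from a 24-bit address. The sketch below computes it and, as an assumption not stated in this article, splits an address into three 8-bit fields (commonly called domain, area and port in switched fabrics).

```python
ADDRESS_BITS = 24


def address_space_size(bits=ADDRESS_BITS):
    """Number of distinct addresses in a bits-wide address space."""
    return 2 ** bits


def split_fc_address(address):
    """Split a 24-bit address into three 8-bit fields (domain, area, port).

    The field names are an assumption made for this illustration.
    """
    if not 0 <= address < 2 ** ADDRESS_BITS:
        raise ValueError("address must fit in 24 bits")
    return (address >> 16) & 0xFF, (address >> 8) & 0xFF, address & 0xFF


print(address_space_size())  # 16777216
```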

Bridges

FC bridges (or multiplexers) are used to connect devices with a parallel SCSI interface to an FC-based network. They translate SCSI packets between Fiber Channel and parallel SCSI devices, such as Solid State Disks (SSD) or tape libraries. It should be noted that lately almost all devices that can be used within a SAN are shipped by manufacturers with a built-in FC interface for direct connection to storage networks.

Servers and Storage

Although servers and storage systems are far from the least important components of a SAN, we will not dwell on them, since we are sure that all our readers are well acquainted with them.

Finally, I would like to add that this article is only a first step toward storage area networks. For a complete understanding of the topic, the reader should pay close attention to how SAN manufacturers implement their components and to management software, since without them a Storage Area Network is just a set of elements for interconnecting storage systems and will not bring you the full benefits of a storage area network.

Conclusion

Today, the Storage Area Network is a fairly new technology that will soon become widespread among corporate customers. In Europe and the US, businesses with a fairly large installed base of storage systems are already beginning to migrate to storage networks in order to organize storage with the best total cost of ownership.

According to analysts' forecasts, in 2005 a significant number of mid-range and high-end servers will ship with a pre-installed Fiber Channel interface (this trend can already be seen today), and the parallel SCSI interface will be used only for internal disk connections in servers. Already today, when building storage systems and purchasing mid-range and high-end servers, one should pay attention to this promising technology, especially since it already makes it possible to solve a number of tasks much more cheaply than specialized solutions. In addition, by investing in SAN technology today you will not lose your investment tomorrow, because the features of Fiber Channel make it possible to keep using today's investments in the future.

P.S.

The previous version of this article was written in June 2000, but because of the lack of mass interest in storage area network technology, publication was postponed. That future has now arrived, and I hope this article will prompt the reader to recognize the need to move to storage network technology as an advanced way of building storage systems and organizing access to data.

The problem of storing files has never been more acute than it is today.

The appearance of hard disks of 3 or even 4 TB, Blu-ray discs of 25 to 50 GB and cloud storage does not solve the problem. We are surrounded by more and more devices that generate heavy content: photo and video cameras, smartphones, HDTV and video, game consoles, etc. We generate and consume (mostly from the Internet) hundreds and thousands of gigabytes.

As a result, an average user's computer stores a huge number of files, hundreds of gigabytes: a photo archive, a collection of favorite movies, games, programs, working documents, etc.

All of this needs not just to be stored, but also to be protected from failures and other threats.

Pseudo-solution of the problem

You can equip your computer with a high-capacity hard drive. But then the question arises: how and where do you back up, say, the data from a 3-terabyte disk?

You can install two disks and use them in RAID "mirror" mode or simply perform a regular backup from one to the other. This is not the best option either. Suppose the computer is attacked by viruses: most likely, they will infect the data on both disks.

You can store important data on optical discs, organizing a home Blu-ray archive. But it will be extremely inconvenient to use.

Network storage is the solution! Partly ...

Network attached storage (NAS) - network file storage. But it can be explained even easier:

Suppose you have two or three computers at home. Most likely, they are connected to a local network (wired or wireless) and to the Internet. Network storage is a specialized computer that is built into your home network and connected to the Internet.

As a result, the NAS can store any of your data, and you can access it from any home PC or laptop. Looking ahead, it is worth saying that the local network must be modern enough to let you move tens and hundreds of gigabytes between the server and the computers quickly and without problems. But more about this later.

Where can I get a NAS?

Method one: purchase. A more or less decent NAS for 2 or 4 hard drives can be bought for 500-800 dollars. Such a server will be packed into a small case and ready to work, as they say, out of the box.

However, the cost of hard drives is added ON TOP of these 500-800 dollars, since a NAS is usually sold without them.

Pros: you get a finished device and spend a minimum of time.

The disadvantages of this solution: a NAS is similar to a desktop computer but has incomparably fewer capabilities. In fact, it is just an external network disk for a lot of money: you pay a considerable sum for a limited, poor-value set of features.

My solution: self-assembly!

This is much cheaper than buying a separate NAS, although it takes a little longer (because you are building the machine yourself). However, you get a full-fledged home server, which, if desired, can be used to the full extent of its capabilities.

ATTENTION! I strongly advise against assembling a home server from an old computer or from old, worn-out components. Do not forget that the file server is the store of your data. Do not skimp on making it as reliable as possible, so that one day all your files do not "burn" along with the hard disks, for example, because of a failure in the power circuit of the motherboard ...

So, we have decided to build a home file server: a computer whose hard drives are available for use over the home network. Accordingly, this computer needs to be economical in power consumption, quiet and compact, to emit little heat and to have sufficient performance.

Given these requirements, the ideal solution is a compact motherboard with a built-in processor and passive cooling.

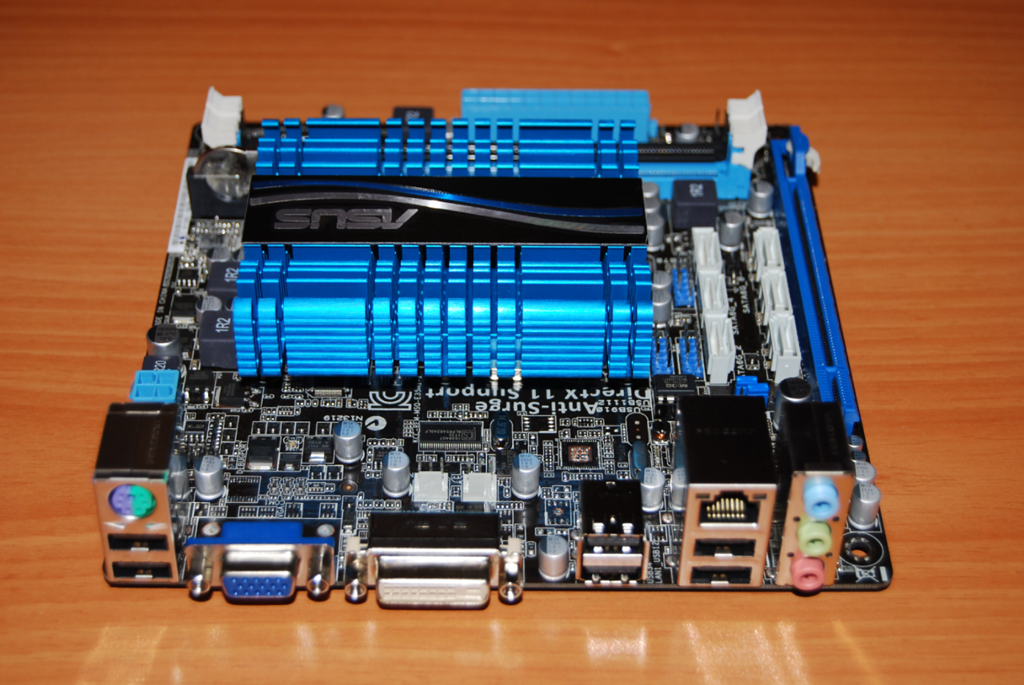

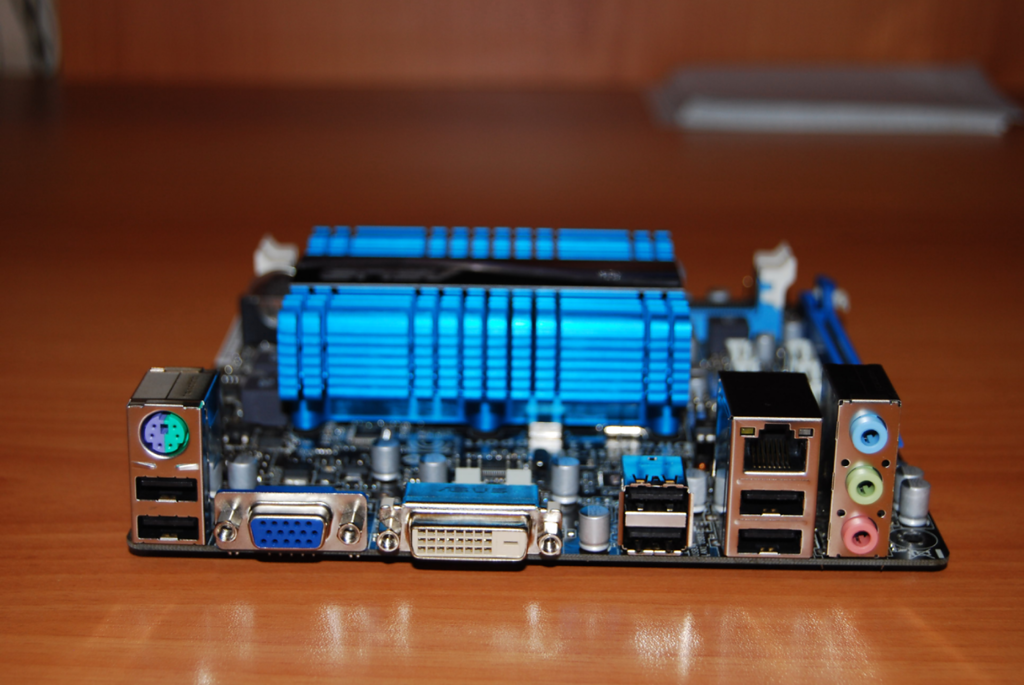

I chose the motherboard ASUS C-60M1-I . It was bought from the online store dostavka.ru:

The package includes a high-quality user manual, a driver disk, a sticker for the case, 2 SATA cables and a back panel for the case:

ASUS, as always, equipped the board very generously. You can find the full specifications of the board here: http://www.asus.com/Motherboard/C60M1I/#specifications. I will mention only a few important points.

At a cost of only 3300 rubles, it provides 80% of everything we need for the server.

On board there is a dual-core AMD C-60 processor with a built-in graphics chip. The processor runs at 1 GHz (and can automatically increase to 1.3 GHz). Today it is installed in some netbooks and even laptops. It is in the same class as the Intel Atom D2700. But everyone knows that Atom has problems with parallel computing, which often reduces its performance to nothing. The C-60 does not have this drawback and, in addition, is equipped with graphics that are fairly powerful for this class.

There are two DDR3-1066 memory slots, which can take up to 8 GB of memory.

The board has 6 SATA 6 Gbps ports. This lets you connect as many as 6 disks (!) to the system, not just 4 as in a regular home NAS.

Most importantly, the board is built on UEFI rather than the usual BIOS. This means that the system can work normally with hard disks larger than 2.2 TB and "sees" their entire capacity. BIOS motherboards cannot work with hard disks larger than 2.2 TB without special "crutch" utilities. Of course, using such utilities is unacceptable when we are talking about the reliability of data storage and servers.
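The 2.2 TB figure is not arbitrary. It matches the largest capacity that can be addressed with 32-bit sector numbers and 512-byte sectors, which is the scheme used by the classic MBR partition table that BIOS-based systems typically boot from. The article itself only names the limit, so treat the calculation below as a supporting sketch.

```python
SECTOR_BYTES = 512   # traditional hard disk sector size
ADDRESS_BITS = 32    # width of a sector number in the MBR partition table


def max_addressable_bytes(address_bits=ADDRESS_BITS, sector_bytes=SECTOR_BYTES):
    """Largest capacity reachable with the given sector addressing."""
    return (2 ** address_bits) * sector_bytes


limit = max_addressable_bytes()
print(limit)                      # 2199023255552
print(round(limit / 10**12, 2))   # 2.2 (decimal terabytes)
```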

The C-60 is a rather cool-running processor, so an aluminum heatsink alone is enough to cool it. Even under full load the CPU temperature does not rise above 50-55 degrees, which is normal.

The set of ports is quite standard; the only disappointment is the lack of the new USB 3.0. The presence of a full gigabit network port deserves special mention:

On this board I installed 2 modules of 2 GB DDR3-1333 from Patriot:

Windows 7 Ultimate was installed on a WD 500GB Green hard drive, and for the data I purchased a 3 TB Hitachi-Toshiba HDD:

All this equipment is powered by a 400 W FSP power supply, which, of course, leaves plenty of margin.

The final stage was assembling all this equipment in a mini-ATX case.

Immediately after assembly I installed Windows 7 Ultimate on the computer (installation took about 2 hours, which is normal given the low processor speed).

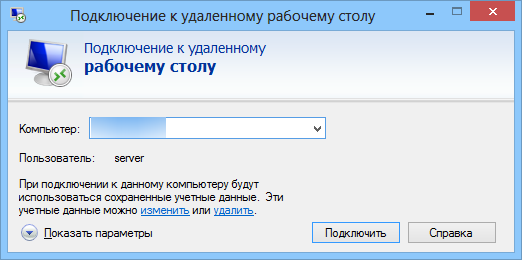

After all this, I disconnected the keyboard, mouse and monitor from the computer. What remains is a single system unit connected to the local network by cable.

It is enough to remember this PC's local IP address to connect to it from any machine through the standard Windows utility "Remote Desktop Connection":

I deliberately did not install a specialized OS for file storage, such as FreeNAS. After all, in that case there would not be much sense in assembling a separate PC for these needs. You could just buy a NAS.

But a separate home server that can be loaded with work and left running overnight is more interesting. In addition, the familiar Windows 7 interface is convenient to manage.

The total cost of the home server WITHOUT hard drives was 6,000 rubles.

Important addition

When using any network storage, network bandwidth is very important. Even an ordinary 100 Megabit wired network is nothing to get excited about when, for example, you are backing up from your computer to your home server. Sending 100 GB over a 100 Megabit network takes several hours.

Wi-Fi is even worse. If you use Wi-Fi 802.11n, the network speed stays at around 100 Megabit. But what if the standard is 802.11g, where the speed rarely exceeds 30 megabits? That is very, very little.

The ideal case is when interaction with the server goes over a wired Gigabit Ethernet network. Then it is really fast.
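The "several hours" estimate is easy to verify. The sketch below assumes decimal units (1 GB = 8000 megabits) and, by default, that the link runs at its full nominal speed, which real networks rarely do; the `efficiency` parameter is a hypothetical knob for modeling that.

```python
def transfer_hours(size_gb, link_mbit_s, efficiency=1.0):
    """Hours needed to move size_gb gigabytes over a link of link_mbit_s."""
    size_mbit = size_gb * 1000 * 8  # decimal gigabytes to megabits
    return size_mbit / (link_mbit_s * efficiency) / 3600


for name, speed in [
    ("802.11g (about 30 Mbit/s)", 30),
    ("100 Megabit Ethernet", 100),
    ("Gigabit Ethernet", 1000),
]:
    print(f"{name}: {transfer_hours(100, speed):.1f} h")
```

For 100 GB this gives roughly 7.4 hours at 30 Mbit/s, 2.2 hours at 100 Mbit/s and about 0.2 hours (13 minutes) at 1 Gbit/s.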

But how to create such a network quickly and at minimal expense is a topic for a separate article.

A storage area network (SAN) consists of, among other things, storage devices, the data transfer medium and the servers connected to it. It is usually used by fairly large companies with a developed IT infrastructure for reliable data storage and high-speed access to it.

Put simply, a SAN is a system that lets you hand out to servers reliable, fast disks of variable capacity carved from different storage devices.

A bit of theory.

You can connect the server to the data store in several ways.

The first and simplest is DAS, Direct Attached Storage: we put drives into the server, or attach an array to the server's adapter, and get many gigabytes of disk space with relatively fast access and, when using a RAID array, sufficient reliability, although the arguments about reliability have been going on for a long time.

However, this use of disk space is not optimal: one server runs out of space while another still has plenty. The solution to this problem is NAS, Network Attached Storage. But for all the advantages of this solution - flexibility and centralized management - there is one significant drawback: access speed, since not all organizations have deployed a 10-gigabit network yet. And so we come to the storage area network.

The main difference between SAN and NAS (besides the order of the letters in the abbreviations) is how the connected resources appear to the server. Whereas NAS resources are attached over the NFS or SMB protocols, in a SAN we get a connection to a disk that we can work with at the level of block I / O operations, which is much faster than a network connection (plus an array controller with a large cache speeds up many operations).

Using a SAN, we combine the advantages of DAS (speed and simplicity) and NAS (flexibility and manageability). On top of that, we can scale storage systems for as long as the money lasts, while killing a few more birds with one stone that are not immediately obvious:

* we remove the distance limit on connecting SCSI devices, which are usually limited to a 12-meter cable,

* we reduce backup time,

* we can boot from the SAN,

* if we give up NAS, we offload the local network,

* we get a high I / O speed thanks to optimization on the storage system side,

* we can connect several servers to one resource, which gives us the following two birds with one stone:

- we make full use of VMWare capabilities, for example VMotion (virtual machine migration between physical machines) and others like it,

- we can build fault-tolerant clusters and organize geographically distributed networks.

What does it give?

Besides spending the budget on optimizing the storage system, we get, in addition to what I wrote above:

* Increased performance, load balancing and high availability of storage systems thanks to several access paths to the arrays;

* Savings on disks thanks to optimized placement of information;

* Faster disaster recovery - you can create temporary resources, deploy backups onto them, connect servers to them and restore information without haste, or move resources to other servers and calmly deal with the dead hardware;

* Reduced backup time - thanks to the high transfer speed, you can back up to a tape library faster, or even take a snapshot of the file system and calmly archive it;

* Disk space on demand - when we need it, we can always add a couple of shelves to the storage system;

* Reduced cost of storing a megabyte of information - of course, there is a certain threshold above which these systems become cost-effective;

* A reliable place to store mission-critical and business-critical data (without which the organization cannot exist and work normally);

* I want to mention VMWare separately - all of its features, such as migrating virtual machines from server to server and other goodies, are available only on a SAN.

What does it consist of?

As I wrote above, the storage system consists of storage devices, a transmission medium and connected servers. Let's consider them in order:

Storage systems usually consist of hard disks and controllers; in a self-respecting system there are usually two of everything: 2 controllers, 2 paths to each drive, 2 interfaces, 2 power supplies, 2 administrators. Among the most respected system manufacturers, HP, IBM, EMC and Hitachi deserve mention. Here I will quote one EMC representative at a seminar: "HP makes excellent printers. Let them keep making them!" I suspect that HP is just as fond of EMC. Competition between manufacturers is serious, as it is everywhere. The consequences of competition are sometimes sane prices per megabyte of storage, and also problems with compatibility and with support for competitors' standards, especially on old equipment.

Communication environment.

A SAN is usually built on optical fiber, which currently gives a speed of 4, and in places 8, gigabits per channel. Specialized hubs used to be used for this; now it is mostly switches, mainly from Qlogic, Brocade, McData and Cisco (I have never once seen the last two on site). The cables are the traditional ones for optical networks, single-mode and multimode, with single-mode having the longer reach.

Inside, the transport protocol FCP (Fiber Channel Protocol) is used. Typically, classic SCSI runs on top of it, while FCP provides addressing and delivery. There is also the option of connecting over a conventional network with iSCSI, but that usually uses (and heavily loads) the local network rather than a dedicated data network, requires adapters that support iSCSI and, well, is slower than optics.

There is also the clever word "topology", which is found in all SAN textbooks. There are several topologies. The simplest is point-to-point, where we connect two systems to each other. This is not DAS, but rather an idealized "spherical horse in a vacuum", the simplest possible version of a SAN. Next comes the arbitrated loop (FC-AL), which works on a "pass it on" principle: the transmitter of each device is connected to the receiver of the next one, and the devices are closed into a ring. Long chains tend to take a long time to initialize.

Finally, there is the switched fabric (Fabric), which is built using switches. The connection layout is designed around the number of ports to be connected, just as when building a local network. The basic design principle is that all paths and connections are duplicated, which means that there are at least 2 different paths to each device in the network. The word topology is used here too, in the sense of how the devices are connected and how the switches are interconnected. As a rule, the switches are configured so that servers see nothing except the resources assigned to them. This is achieved by creating virtual networks and is called zoning; the closest analogy is a VLAN. Each device on the network is assigned an analog of the Ethernet MAC address, called the WWN (World Wide Name). It is assigned to each interface and each resource (LUN) of the storage systems. Arrays and switches can restrict server access by WWN.
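Zoning and WWN-based access control can be pictured as simple set membership checks. The sketch below is a toy model, not a switch or array configuration: the WWN values, zone names and LUN numbers are all hypothetical.

```python
# Hypothetical zones: each zone is a set of WWNs allowed to see each other.
ZONES = {
    "zone_db": {"10:00:00:00:c9:00:00:01", "50:06:01:60:00:00:00:01"},
    "zone_files": {"10:00:00:00:c9:00:00:02", "50:06:01:60:00:00:00:02"},
}

# Hypothetical access list on the array: which server WWNs may use which LUN.
LUN_MASKS = {
    0: {"10:00:00:00:c9:00:00:01"},
    1: {"10:00:00:00:c9:00:00:02"},
}


def can_communicate(wwn_a, wwn_b, zones=ZONES):
    """Two ports see each other only if at least one zone contains both."""
    return any(wwn_a in members and wwn_b in members for members in zones.values())


def visible_luns(server_wwn, masks=LUN_MASKS):
    """LUNs that the array presents to the given server WWN."""
    return sorted(lun for lun, allowed in masks.items() if server_wwn in allowed)
```

In this model the database server's adapter can reach only the array port in its own zone and sees only LUN 0, which mirrors the statement that servers see nothing except the resources assigned to them.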

Servers connect to the storage through an HBA (Host Bus Adapter). By analogy with network cards, there are one-, two- and four-port adapters. Seasoned practitioners recommend installing two adapters per server, which gives both load balancing and redundancy.

Then resources are carved out on the storage systems, that is, disks (LUNs) for each server, with some space left in reserve. Everything is powered on, the system installers configure the topology and chase glitches in the switch settings and access rights, everything starts up, and everyone lives happily ever after *.

I am deliberately not covering the different types of ports in an optical network: those who need them already know them or will read up, and for those who do not, it would only clutter their heads. But as usual, with the wrong port type, nothing will work.

From experience.

Usually, when creating a SAN, arrays are ordered with several types of disks: FC for high-speed applications, and SATA or SAS for less demanding ones. This gives two disk groups with a different cost per megabyte: expensive and fast, and cheap, slow and sad. The fast group usually hosts all the databases and other applications with active, fast I/O; the slow group hosts file resources and everything else.

If a SAN is built from scratch, it makes sense to base it on solutions from a single vendor. Despite declared compliance with standards, there are hidden pitfalls of equipment compatibility, and it is not a given that a particular set of equipment will work together without some dancing with a tambourine and consultations with the manufacturers. To deal with such problems, it is usually easier to call in an integrator and pay them than to talk to manufacturers who keep passing the buck to one another.

If the SAN is built on top of existing infrastructure, things can get complicated, especially if there are old SCSI arrays and a zoo of old equipment from different manufacturers. In that case it makes sense to call for help from that fearsome beast, the integrator, who will untangle the compatibility problems and earn a third villa in the Canaries.

Often, when building storage systems, firms do not order vendor support for the system. This is usually justified if the firm has a staff of competent admins (who have already called me a noob 100 times) and enough capital to buy spare parts in the required quantities. However, competent administrators tend to get poached by integrators (I have seen it myself), money for spares is not allocated, and after a failure a circus begins with shouts of "I'll fire everyone!" instead of a call to support and the arrival of an engineer with a spare part.

Support usually comes down to replacing dead drives and controllers, and to adding disk shelves and new servers to the system. A lot of trouble follows unplanned preventive maintenance carried out by local specialists, especially after a complete shutdown and a disassembly and reassembly of the system (and this does happen).

About VMware. As far as I know (virtualization specialists, correct me), only VMware and Hyper-V have functionality that allows virtual machines to be moved between physical servers "on the fly". For this to work, all the servers between which a virtual machine moves must be connected to the same disk.

About clusters. As with VMware, the systems I know for building fault-tolerant clusters (Sun Cluster, Veritas Cluster Server) require storage that is connected to all the systems.

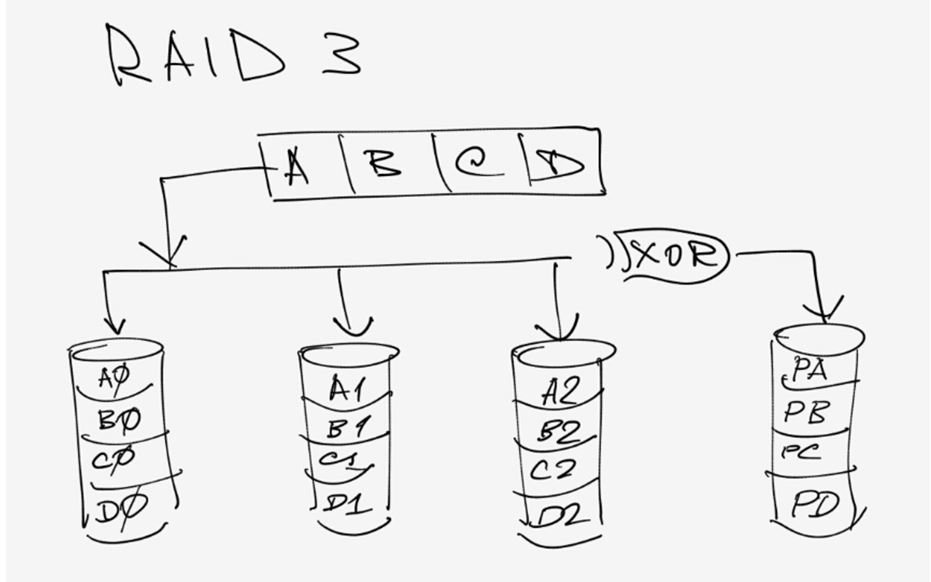

While I was writing this article, I was asked which RAID disks are usually combined into.

In my practice, I usually either build RAID 1+0 on each disk shelf with FC disks, leaving 1 spare disk (Hot Spare) and carving LUNs for specific tasks out of that group, or build RAID 5 from slow disks, again leaving 1 disk as a spare. But the question is complex, and the way disks are organized in an array is usually chosen and justified for each situation. EMC, for example, goes even further and offers additional array tuning for the applications that work with it (for example, for OLTP or OLAP). I have not dug as deep with other vendors, but I would guess that every one of them has fine tuning.
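To make the trade-off concrete, here is a rough usable-capacity calculation for the two layouts mentioned above. It is a sketch under simplifying assumptions: identical disks, one hot spare per shelf, and no formatting or vendor overhead. The shelf size (15 disks) and the disk sizes are hypothetical example values, and `usable_capacity` is an illustrative helper, not part of any vendor tool.

```python
def usable_capacity(disks: int, disk_size_gb: float, level: str, hot_spares: int = 1) -> float:
    """Approximate usable capacity in GB of one RAID group.

    Simplifying assumptions: all disks are identical, hot spares hold no data,
    and controller/formatting overhead is ignored.
    """
    data_disks = disks - hot_spares
    if level == "10":
        # RAID 1+0 mirrors pairs of disks, so only half of them hold unique data.
        if data_disks < 4 or data_disks % 2:
            raise ValueError("RAID 1+0 needs an even number of disks, at least 4")
        return data_disks / 2 * disk_size_gb
    if level == "5":
        # RAID 5 spends the equivalent of one disk on parity.
        if data_disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return (data_disks - 1) * disk_size_gb
    raise ValueError(f"unsupported RAID level: {level}")


# Hypothetical shelf of 15 disks with one hot spare in each case.
fast = usable_capacity(15, 300, "10")   # 14 data disks -> 7 * 300 GB
slow = usable_capacity(15, 1000, "5")   # 14 data disks -> 13 * 1000 GB
print(fast, slow)
```

With these example numbers the mirrored fast group keeps half of its raw space, while the RAID 5 group loses only one disk to parity, which is one reason RAID 5 tends to be chosen for the cheap, slow tier.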

* Until the first major failure, after which support is usually purchased from the manufacturer or supplier of the system.

So, issue number 1: "Data storage systems."

Storage systems.

In English they are called by one word, storage, which is very convenient. In Russian this word translates rather clumsily, as "storehouse". In IT slang people often say "storazh" (a Russian transcription of the English word) or use other slang coinages, but that is really bad form. Therefore we will use the term "data storage systems", or simply "storage systems" for short.

Data storage devices include any devices for recording data: so-called "flash drives", removable disks (CDs, DVDs, ZIP), tape drives (Tape), hard disks (also called by the old nickname "Winchesters", after the 19th-century rifle of the same name), and so on. Hard drives are used not only inside computers but also as external USB storage devices; even one of the first iPod models, for example, is essentially a small 1.8-inch hard drive with a headphone output and a built-in screen.

Recently, so-called "solid-state" storage, the SSD (Solid State Disk, or Solid State Drive), has been gaining popularity. In principle it is similar to the "flash drive" of a camera or smartphone, except that it has a controller and a larger capacity. Unlike a hard drive, an SSD has no mechanically moving parts. Prices for such storage are still quite high but are falling rapidly.

All of these are consumer devices. Among industrial systems, the first to single out are hardware storage systems: arrays of hard drives, the so-called RAID controllers for them, and tape storage systems for long-term data storage. A separate class is controllers for storage systems, which manage data backup, create snapshots in the storage system for later recovery, replicate data, and so on. Storage systems also include network devices (HBAs, Fiber Channel switches, FC/SAS cables, etc.). Finally, there are large-scale solutions for data storage, archiving, data recovery and disaster recovery.

Where does the data to be stored come from? From us, our dear selves, the users; from application programs and email; and from various equipment such as file servers and database servers. Another supplier of large amounts of data is so-called M2M (Machine-to-Machine communication): all sorts of sensors, cameras, etc.

By how frequently the stored data is used, storage can be divided into short-term storage (online storage), medium-term storage (near-line storage) and long-term storage (offline storage).

The first category can include the hard disk (or SSD) of any personal computer. The second and third are external DAS (Direct Attached Storage) systems, which can be an array of disks external to the computer (Disk Array). These, in turn, can be divided into "just a bunch of disks", JBOD (Just a Bunch Of Disks), and arrays with a controller, iDAS (intelligent disk array storage).

There are three types of external storage systems: DAS (Direct Attached Storage), SAN (Storage Area Network) and NAS (Network Attached Storage). Unfortunately, even many experienced IT people cannot explain the difference between SAN and NAS, saying that there used to be a difference but now there supposedly is none. In fact, there is a difference, and a significant one (see Figure 1).

Figure 1. The difference between SAN and NAS.

In a SAN, the servers themselves are connected to the storage system through the storage area network. In the case of NAS, servers are connected over the local area network (LAN) to a RAID with a shared file system.

The basic protocols of storage connection

The SCSI protocol (Small Computer System Interface, pronounced "scuzzy") was developed in the mid-1980s to connect external devices to minicomputers. Its SCSI-3 version is the basis for all storage communication protocols, which share a common SCSI command set. Its main advantages are independence from the server used, the ability to operate several devices in parallel, and high data transfer speed. Its disadvantages are the limited number of connected devices and a very limited connection distance.

The FC protocol (Fiber Channel) is the internal protocol between the server and the shared storage, controller and disks. It is a widely used serial communication protocol running at speeds of 4 or 8 gigabits per second (Gbps). As its name suggests, it runs over fiber, but it can also run over copper. Fiber Channel is the main protocol for FC SAN storage systems.

The iSCSI protocol (Internet Small Computer System Interface) is a standard protocol for transferring data blocks over the well-known TCP/IP protocol: "SCSI over IP". iSCSI can be seen as a high-speed, inexpensive solution for storage systems that are connected remotely over the Internet. It encapsulates SCSI commands in TCP/IP packets for transmission over an IP network.
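The encapsulation idea can be shown with a small sketch. It builds a standard 10-byte SCSI READ(10) command block and then wraps it in a header for transport. The wrapper is deliberately made up: `wrap_for_ip` and its 4-byte header are assumptions for illustration and do not follow the real iSCSI PDU layout.

```python
import struct


def build_read10_cdb(lba: int, blocks: int) -> bytes:
    """Build a 10-byte SCSI READ(10) command descriptor block."""
    # Fields: opcode 0x28, flags, 32-bit LBA, group number,
    # 16-bit transfer length, control byte (all big-endian).
    return struct.pack(">BBIBHB", 0x28, 0, lba, 0, blocks, 0)


def wrap_for_ip(cdb: bytes, lun: int) -> bytes:
    """Prefix the CDB with a made-up 4-byte header (LUN, CDB length).

    This is NOT the real iSCSI PDU layout; it only shows the idea of carrying
    a SCSI command as the payload of a message sent over a TCP connection.
    """
    return struct.pack(">HH", lun, len(cdb)) + cdb


cdb = build_read10_cdb(lba=2048, blocks=8)
message = wrap_for_ip(cdb, lun=0)
print(len(cdb), len(message))  # 10 14
```

The point is only that the SCSI command travels unchanged as payload, so the server and the array still speak SCSI to each other while the IP network just delivers the bytes.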

The SAS protocol (Serial Attached SCSI) uses serial data transfer and is compatible with SATA hard disks. Currently, SAS can transfer data at 3 Gbps or 6 Gbps and supports full-duplex mode, i.e. it can transmit data in both directions at the same speed.

Types of storage systems.

You can distinguish three main types of storage systems:

- DAS (Direct Attached Storage)

- NAS (Network Attached Storage)

- SAN (Storage Area Network)

DAS, storage with directly attached disks, was developed at the end of the 1970s in response to the explosive growth of user data, which simply no longer fit physically into the internal long-term memory of computers (for younger readers: this is not about personal computers, which did not exist yet, but about big computers, the so-called mainframes). The data transfer speed in DAS was not very high, from 20 to 80 Mbit/s, but for the needs of the time it was quite enough.

Figure 2. DAS

NAS, network attached storage, appeared in the early 1990s. The reason was the rapid development of networks and the critical need to share large data sets within an enterprise or operator network. NAS used a special network file system, CIFS (Windows) or NFS (Linux), so different servers of different users could read the same file from the NAS at the same time. The data transfer rate was already higher: 1 - 10 Gbps.

Figure 3. NAS

In the mid-1990s, FC SAN networks for connecting storage devices appeared. Their development was driven by the need to organize data scattered across the network. A single storage device in a SAN can be divided into several smaller units called LUNs (Logical Unit Number), each of which belongs to a single server. The data transfer rate increased to 2 - 8 Gbps. Such storage systems could also provide technologies for protecting data against loss (snapshot, backup).

Figure 4. FC SAN
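The carving of one array into LUNs can be pictured with a small bookkeeping sketch. It is only an illustration: the `Array` class, the server names and the sizes are hypothetical, and real arrays expose this through their own management tools.

```python
from dataclasses import dataclass, field


@dataclass
class Array:
    """Toy storage array that hands out capacity as numbered LUNs."""
    capacity_gb: int
    luns: dict[int, tuple[str, int]] = field(default_factory=dict)  # LUN id -> (server, size)

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - sum(size for _, size in self.luns.values())

    def create_lun(self, server: str, size_gb: int) -> int:
        """Carve a LUN for exactly one server and return its number."""
        if size_gb > self.free_gb:
            raise ValueError("not enough free capacity")
        lun_id = len(self.luns)
        self.luns[lun_id] = (server, size_gb)
        return lun_id

    def luns_for(self, server: str) -> list[int]:
        """List the LUN numbers presented to a given server."""
        return [lun for lun, (owner, _) in self.luns.items() if owner == server]


array = Array(capacity_gb=2000)
array.create_lun("db-server", 800)
array.create_lun("file-server", 500)
array.create_lun("db-server", 200)
print(array.luns_for("db-server"), array.free_gb)  # [0, 2] 500
```

Each LUN has one owner, and whatever is not carved out stays in reserve for later growth.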

Another type of SAN is the IP SAN (IP Storage Area Network), developed in the early 2000s. FC SAN systems were expensive and difficult to manage, while IP-based networks were at the peak of their development, which is why this standard appeared. Storage systems were connected to servers through an iSCSI controller and IP switches. Data transfer rate: 1 - 10 Gbps.

Figure 5. IP SAN

The table shows some of the comparative characteristics of all the storage systems discussed:

| | DAS | NAS | SAN (FC SAN) | SAN (IP SAN) |
| --- | --- | --- | --- | --- |
| Transmission type | SCSI, FC, SAS | IP | FC | IP |
| Data type | Data block | File | Data block | Data block |
| Typical application | Any | File server | Database | CCTV |
| Advantages | Ease of understanding, excellent compatibility | Easy installation, low cost | Good scalability | Good scalability |
| Disadvantages | Difficulty of management, inefficient use of resources, poor scalability | Low performance, not applicable for some applications | High price, configuration difficulty | Low performance |

In short, SANs are designed to transfer massive blocks of data to storage, while NAS provides access to data at the file level. Combining SAN and NAS gives a high degree of data integration, high-performance access and file sharing. Such systems are called unified storage.
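The block-versus-file distinction can be illustrated with a toy example. Below, a byte buffer stands in for a LUN addressed by block number (what a SAN exposes), while a dictionary of named files stands in for a NAS share. The 512-byte block size, the names `read_block` and `read_file`, and the sample data are assumptions made for the illustration.

```python
BLOCK_SIZE = 512  # assumed block size for this sketch

# "SAN" view: a raw device is just numbered blocks; the server's own
# file system decides what the bytes mean.
lun = bytearray(BLOCK_SIZE * 4)
lun[BLOCK_SIZE * 2 : BLOCK_SIZE * 2 + 5] = b"hello"


def read_block(device: bytearray, lba: int) -> bytes:
    """Return one block by its logical block address."""
    start = lba * BLOCK_SIZE
    if start < 0 or start + BLOCK_SIZE > len(device):
        raise IndexError("block address out of range")
    return bytes(device[start : start + BLOCK_SIZE])


# "NAS" view: the storage side owns the file system and serves whole files by name.
share = {"/reports/q1.txt": b"quarterly numbers"}


def read_file(files: dict[str, bytes], path: str) -> bytes:
    """Return the contents of a named file."""
    return files[path]


print(read_block(lun, 2)[:5])               # b'hello'
print(read_file(share, "/reports/q1.txt"))  # b'quarterly numbers'
```

In the block case the client must know where its data lives on the device; in the file case it only needs a path, which is why several servers can share the same files on a NAS.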

Unified storage systems: an architecture of networked storage that supports both a file-oriented NAS system and a block-oriented SAN system. Such systems were developed in the early 2000s to solve the administration problems and the high total cost of ownership of separate systems within one enterprise. A unified storage system supports almost all protocols: FC, iSCSI, FCoE, NFS, CIFS.

Hard disks

All hard drives can be divided into two main types: HDD (Hard Disk Drive, which indeed translates as "hard disk") and SSD (Solid State Drive). That is, both are "hard". Is there, then, such a thing as a "soft disk"? Yes, in the past there were "floppy disks" (so nicknamed because the disk inside is flexible). Drives for them can still be seen in the system units of old computers that survive in some state institutions. However, with the best will in the world, such magnetic disks can hardly be called a storage SYSTEM. They were a kind of analog of today's "flash drive".