One of the basic concepts of the design methodology for automated information systems (AIS) is the software life cycle (SLC). The software life cycle is a continuous process that begins when a decision is made that the software needs to be created and ends when the software is completely withdrawn from service. The structure of the software life cycle is based on three groups of processes:

- the main software life cycle processes (acquisition, supply, development, operation, maintenance);

- supporting processes that ensure the execution of the main processes (documentation, configuration management, quality assurance, verification, validation, assessment, audit, problem resolution);

- organizational processes (project management, creation of project infrastructure, definition, assessment and improvement of the life cycle itself, training).

Development covers all work on creating the software and its components in accordance with the specified requirements, including preparing the design and operational documentation, preparing the materials needed to check that the software products work and are of adequate quality, preparing the materials needed to train personnel, etc. Software development usually includes analysis, design and implementation (programming).

Operation includes putting software components into service (configuring databases and user workstations, providing operational documentation, training personnel, etc.) and the operation itself, which includes localizing problems and eliminating their causes, modifying the software within the established regulations, and preparing proposals for improving, developing and modernizing the system.

Project management is associated with planning and organizing the work, forming development teams, and controlling the schedule and quality of the work performed. Technical and organizational support of the project includes choosing the methods and tools for implementing the project, defining how intermediate states of development are described, developing software testing methods and tools, training personnel, etc. Project quality assurance covers software verification, validation and testing. Verification is the process of determining whether the current state of development achieved at a given stage meets the requirements of that stage. Validation assesses whether the development parameters comply with the original requirements. Validation overlaps with testing, which is concerned with identifying differences between actual and expected results and assessing whether the software characteristics conform to the original requirements. During project implementation, an important place is occupied by identifying, describing and controlling the configuration of individual components and of the system as a whole.
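Testing, in the sense used here, comes down to comparing actual results with expected ones. The minimal Python sketch below shows that idea; the function `parse_price` and its test cases are hypothetical, invented only to have something to test, and the `run_tests` helper is an illustration rather than a real testing framework (in practice one would use `unittest` or a similar tool).

```python
def parse_price(text: str) -> float:
    """Hypothetical unit under test: convert '12.50' or '1,200.00' to a float."""
    return float(text.replace(",", ""))


def run_tests(func, cases):
    """Run func on each input; collect every case where actual != expected."""
    failures = []
    for arg, expected in cases:
        actual = func(arg)
        if actual != expected:
            failures.append((arg, expected, actual))
    return failures


cases = [("12.50", 12.5), ("1,200.00", 1200.0), ("0", 0.0)]
failures = run_tests(parse_price, cases)
print("failures:", failures)  # failures: []
```

An empty list means no differences between actual and expected results were found for these cases; it does not prove the function correct for all inputs.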

Configuration management is one of the supporting processes that serve the main processes of the software life cycle, primarily software development and maintenance. When designing complex ISs made up of many components, each of which may exist in several variants or versions, the problem arises of tracking their relationships and functions, creating a unified structure and ensuring the evolution of the whole system. Configuration management makes it possible to organize, systematically record and control software changes at all stages of the life cycle. General principles and recommendations for configuration accounting, planning and management of software configurations are given in the standard ISO 12207-2.
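To make the idea of recording component versions concrete, here is a minimal sketch, assuming a system is described simply as a mapping from component names to version strings and a baseline is a frozen snapshot of that mapping. The `ConfigurationRegistry` class and the component names are hypothetical; real configuration management relies on version control systems and dedicated tools.

```python
from dataclasses import dataclass, field


@dataclass
class ConfigurationRegistry:
    """Hypothetical registry of component versions and named baselines."""
    current: dict = field(default_factory=dict)    # component -> version
    baselines: dict = field(default_factory=dict)  # baseline name -> snapshot

    def register_change(self, component: str, version: str) -> None:
        """Record that a component has moved to a new version."""
        self.current[component] = version

    def make_baseline(self, name: str) -> None:
        """Freeze the current configuration; copy so later changes don't leak in."""
        self.baselines[name] = dict(self.current)

    def diff(self, old: str, new: str) -> dict:
        """Components whose versions differ between two baselines (None = absent)."""
        a, b = self.baselines[old], self.baselines[new]
        return {c: (a.get(c), b.get(c))
                for c in sorted(set(a) | set(b))
                if a.get(c) != b.get(c)}


reg = ConfigurationRegistry()
reg.register_change("ui", "1.0")
reg.register_change("db-schema", "1.0")
reg.make_baseline("release-1")
reg.register_change("db-schema", "1.1")
reg.register_change("reports", "1.0")
reg.make_baseline("release-2")
print(reg.diff("release-1", "release-2"))
# {'db-schema': ('1.0', '1.1'), 'reports': (None, '1.0')}
```

Comparing baselines this way answers the typical configuration question "what changed between two releases of the system as a whole?"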

Each process is characterized by certain tasks and methods for solving them, by the input data obtained at the previous stage, and by its results. The results of analysis, in particular, are functional models, information models and the corresponding diagrams. The software life cycle is iterative: the results of a later stage often cause changes to design decisions made at earlier stages.

Existing life cycle models determine the order in which stages are executed during development, as well as the criteria for moving from one stage to the next. The three most widespread life cycle models are the following:

- cascade (waterfall) model (1970s-1980s): the transition to the next stage happens only after the work on the previous stage is fully completed;

- phased model with intermediate control (1980-1985): an iterative development model with feedback loops between stages. Its advantage is that inter-stage corrections require less effort than in the waterfall model, but each stage lasts for the entire development period;

- spiral model (1986-1990): focuses on the initial stages of the life cycle, namely requirements analysis, specification design, and preliminary and detailed design. At these stages, the feasibility of technical solutions is checked and justified by building prototypes. Each turn of the spiral corresponds to a step-by-step creation of a fragment or version of the software product; on each turn the goals and characteristics of the project are refined, its quality is assessed, and the work of the next turn is planned. In this way the project details are progressively deepened and refined, and eventually a well-founded option is selected and carried through to implementation. Experts note the following advantages of the spiral model:

- accumulation and reuse of software tools, models and prototypes;

- focus on the development and modification of software in the process of its design;

- analysis of risks and costs in the design process.
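The iterative logic of the spiral model can be illustrated with a toy Python simulation. The assumption here is that each turn (setting objectives, risk analysis with prototyping, evaluation, planning the next turn) lowers the project's residual risk by a fixed fraction, and development proceeds to implementation once the risk is acceptable. The function `spiral` and all numbers are invented for illustration and do not model any real project.

```python
def spiral(initial_risk: float, reduction_per_turn: float,
           acceptable_risk: float, max_turns: int = 10):
    """Toy model: return (turn, residual risk) for each turn of the spiral."""
    log = []
    risk = initial_risk
    for turn in range(1, max_turns + 1):
        # 1. Determine objectives; 2. analyze risks and build a prototype
        #    that resolves the biggest unknowns (modeled as a fixed reduction).
        risk *= 1 - reduction_per_turn
        log.append((turn, round(risk, 3)))
        # 3. Evaluate the result; 4. either plan the next turn or stop and
        #    carry the selected option through to implementation.
        if risk <= acceptable_risk:
            break
    return log


for turn, risk in spiral(0.8, 0.5, 0.15):
    print(f"turn {turn}: residual risk {risk}")
# turn 1: residual risk 0.4
# turn 2: residual risk 0.2
# turn 3: residual risk 0.1
```

The `max_turns` cap stands in for the budget and schedule limits that, in practice, also bound how many turns a project can afford.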

The main feature of software development is that complexity is concentrated in the initial stages of the life cycle (analysis and design), while the later stages are comparatively less complex and labor-intensive. Moreover, unresolved issues and mistakes made during analysis and design give rise to difficult, often unsolvable problems at later stages and ultimately lead to the failure of the entire project.

TRAINING PLAN FOR THE SPECIALTY "1-40 01 73 SOFTWARE OF INFORMATION SYSTEMS"

Technical means of information systems

Arithmetic and logical foundations of information processing, including forms of information representation and the features and limitations associated with bit depth. Physical principles of computer operation; the architecture of modern processors using Intel-compatible models as an example, including caching, pipelining, multicore design and principles of parallel computing. Peripheral devices; principles of collecting, storing and transforming information in information systems.
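As a small illustration of the limitations associated with bit depth, the sketch below emulates an 8-bit two's-complement adder. Python's own integers never overflow, so the fixed register width is simulated with a bit mask; the function names `to_signed` and `add_fixed` are hypothetical.

```python
def to_signed(value: int, bits: int) -> int:
    """Keep the low `bits` bits of value and read them as two's complement."""
    mask = (1 << bits) - 1
    value &= mask
    sign_bit = 1 << (bits - 1)
    return value - (1 << bits) if value & sign_bit else value


def add_fixed(a: int, b: int, bits: int = 8) -> int:
    """Add two integers the way an n-bit two's-complement adder would."""
    return to_signed(a + b, bits)


print(add_fixed(100, 27))   # 127: still fits in the signed 8-bit range
print(add_fixed(100, 28))   # -128: overflow wraps around
print(add_fixed(-128, -1))  # 127: wraps in the other direction
print(0.1 + 0.2 == 0.3)     # False: 0.1 and 0.2 have no exact binary form
```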

Fundamentals of Algorithmization and Programming in High-Level Languages

Theoretical foundations of algorithms and programming: foundations of the theory of algorithms and of programming technology. General characteristics of a high-level programming language: program structure, data types, operations and expressions, data input and output, control-flow statements, subroutines. Additional features of the language being studied (dynamic memory allocation, pointers, etc.). Programming and debugging branching and cyclic algorithms. The course is based on C++ (Visual Studio).

Operating system architecture

Concept, purpose and functions of the operating system (OS). The concept of a resource; the OS as a resource management system. Classification and characteristics of modern operating systems. Principles of OS construction and architecture (kernel and auxiliary modules; monolithic, layered, microkernel-based and other kernel architectures). Organization of the user interface. The concept and implementation of the application programming interface. Compatibility and application software environments. The Java virtual machine. Architecture of the .NET managed software environment. The concepts of process and thread. Process and thread management; CPU scheduling algorithms. Interprocess communication, race conditions, synchronization, the deadlock problem. Memory management. Virtual memory, address translation, virtual memory management algorithms. Virtual memory and data exchange between processes. I/O control; the multilayer structure of the I/O subsystem. The concept, organization and tasks of the file system. Logical structure of files and file operations. Physical organization of files. Windows and UNIX file systems. Mapping files into the address space. Access control and data protection. Organization of modern operating systems of the Unix, Linux and Windows families.

Object-Oriented Programming

Object-oriented programming paradigms. Classes. Objects. Constructors and destructors. Methods. Inheritance. Virtual methods. The virtual method call mechanism. Access control for object members. Pointers to object methods (delegates). Virtual constructors. Run-time type information. The course is based on C# (Visual Studio).

Computer networks

The current state of network technologies; the basics of building computer networks; network hardware and networking software. The OSI model and network protocols; internetworking and routing; the TCP/IP protocol stack. Principles of network administration, account management and access to network resources; basics of network security. Network services in the corporate network; terminal services and thin clients. Virtualization of enterprise network infrastructure and cloud computing. Building network applications.

System Programming

Using system calls to implement the application interface. Programming keyboard and mouse input. The Graphics Device Interface (GDI); programming output in an application with a graphical interface. Accessing system resources from a program through the API. Kernel objects. Process and thread management. Multithreaded programming, synchronization and elimination of race conditions, system synchronization facilities. Synchronous and asynchronous file operations. Virtual memory management, dynamically allocated memory areas, memory-mapped files. Development and use of dynamic-link libraries. Structured exception handling.
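Race conditions and their elimination can be shown in a few lines. The course itself works with the Windows API and its synchronization objects; this sketch instead uses Python's `threading.Lock` as a stand-in for a critical section or mutex, so it illustrates the principle, not the Win32 calls. The `worker` function and the iteration counts are hypothetical.

```python
import threading

counter = 0
lock = threading.Lock()


def worker(iterations: int) -> None:
    """Increment the shared counter; the lock makes each update atomic."""
    global counter
    for _ in range(iterations):
        # `counter += 1` is a read-modify-write sequence, not one atomic step;
        # holding the lock turns it into a critical section.
        with lock:
            counter += 1


threads = [threading.Thread(target=worker, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait until every thread has finished
print(counter)  # 40000
```

Without the lock, concurrent updates can interleave and some increments may be lost, which is exactly the race the course material refers to.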

Component programming technologies

Component programming concept. Evolution of programming technologies and application architecture. Comparison of procedural, object-oriented and component programming. The Component Object Model (COM) and technologies based on it. The concept of a component; its requirements and properties. The basic COM hierarchy: server / class / interface / method. COM interfaces. The COM library. COM servers. OLE and ActiveX technologies. Automation and dispatch interfaces. Type libraries, late binding. IDL. The ATL library. Threading models and synchronization. Error handling and exceptions. Collections and enumerators. Callback (outgoing) interfaces, event handling. Containers. Overview of COM+ technology, .NET-based component programming, CORBA and the OMA, ORB, GIOP and IIOP specifications.

Visual Application Development Tools

The concept of visual software design. Elements and technology for creating software applications in a visual environment. Compilation tools for building working versions of programs in the visual environment. Main methods of the library for developing software applications. Main classes of the base library, their purpose and ways to use them effectively in applications. Effective methods for developing applications in a specific domain. Visual components for presenting data. Methods and tools for implementing these concepts in the environment being studied. Organization of input/output and information processing; saving and restoring object state. Linking and embedding technologies. Containers and servers and their use in applications. Organizing access to and work with databases. Application programming strategies for different database architecture models (remote server and active server). Principles of processing messages from server programs and database server errors in database applications. The course is based on C# Windows Forms (Visual Studio).

Web technologies

Distinctive features of a Web application. The HTTP protocol. Introduction to HTML, CSS and Bootstrap. Introduction to ASP.NET MVC applications. Models, controllers and views in ASP.NET MVC. The Razor language. The @Html and @Url helper methods. Layout pages and partial views. Passing data from a controller to a view. The data (model) binding mechanism. Routing. Data annotations and validation. Dependency injection. Bundles. File transfer. Introduction to Web API. AJAX technology. Working with JSON. Unit testing ASP.NET MVC applications. Authentication and authorization. Deploying a Web application. Overview of ASP.NET Core.

Database Organization and Design

The course is based on T-SQL for MS SQL Server, with a look at the specific features of Oracle and MySQL. Principles of working with data in various types of information systems. Database management systems, their main functions and their architecture according to the ANSI standard. Data models and their classification. The relational data model, used in more than 80% of DBMSs, is considered in detail; its core is relational algebra. Logical and physical organization of a database, data integrity, indexes and security systems. SQL. A hands-on study of data management, indexes and security in T-SQL.
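The basic operations of relational algebra can be sketched in Python, assuming a relation is represented as a list of dictionaries that all share the same keys. The relations `employees` and `departments` are invented sample data, and the functions are illustrative; a DBMS stores and evaluates relations very differently.

```python
def select(relation, predicate):
    """Selection (sigma): keep the tuples that satisfy the predicate."""
    return [row for row in relation if predicate(row)]


def project(relation, attributes):
    """Projection (pi): keep the listed attributes and drop duplicate tuples."""
    seen, result = set(), []
    for row in relation:
        key = tuple(row[a] for a in attributes)
        if key not in seen:
            seen.add(key)
            result.append(dict(zip(attributes, key)))
    return result


def natural_join(r, s):
    """Natural join: combine tuples that agree on all common attributes."""
    common = set(r[0]) & set(s[0]) if r and s else set()
    return [{**a, **b} for a in r for b in s
            if all(a[c] == b[c] for c in common)]


employees = [
    {"emp_id": 1, "name": "Ivanov", "dept_id": 10},
    {"emp_id": 2, "name": "Petrova", "dept_id": 20},
    {"emp_id": 3, "name": "Sidorov", "dept_id": 10},
]
departments = [
    {"dept_id": 10, "dept": "Accounting"},
    {"dept_id": 20, "dept": "IT"},
]

# Roughly: SELECT DISTINCT name FROM employees NATURAL JOIN departments
#          WHERE dept = 'Accounting'
accounting = project(
    select(natural_join(employees, departments),
           lambda t: t["dept"] == "Accounting"),
    ["name"],
)
print(accounting)  # [{'name': 'Ivanov'}, {'name': 'Sidorov'}]
```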

Transactions and transaction models; a hands-on study of transaction management in T-SQL. The transaction log. Problems of concurrent transaction execution. Locks and lock types; a hands-on study of lock management in T-SQL. Database architecture models. Database programming; a hands-on study of writing stored procedures, triggers, user-defined functions and cursors.
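The atomicity of a transaction can be demonstrated with Python's built-in `sqlite3` module, used here only as a stand-in for T-SQL and MS SQL Server (SQL Server's syntax and locking behavior differ). The `account` table and the `transfer` function are hypothetical: if any statement inside the transaction fails, all of its changes are rolled back.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE account (id INTEGER PRIMARY KEY, "
             "balance INTEGER NOT NULL CHECK (balance >= 0))")
conn.executemany("INSERT INTO account VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move money between accounts as one atomic transaction."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            # Credit first, so a failed debit shows that the credit is undone.
            conn.execute("UPDATE account SET balance = balance + ? WHERE id = ?",
                         (amount, dst))
            conn.execute("UPDATE account SET balance = balance - ? WHERE id = ?",
                         (amount, src))
        return True
    except sqlite3.IntegrityError:  # the CHECK constraint was violated
        return False


def balances(conn):
    return dict(conn.execute("SELECT id, balance FROM account ORDER BY id"))


print(transfer(conn, 1, 2, 30), balances(conn))   # True {1: 70, 2: 80}
print(transfer(conn, 1, 2, 500), balances(conn))  # False {1: 70, 2: 80}
```

The second call fails on the debit, and the credit made earlier in the same transaction is rolled back, so the balances stay consistent.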

Relational database design: methodology and stages of database design. Database anomalies and their elimination through normalization of relations. Practical use of CASE tools for database design.

Information systems software design technologies

Models of the life cycle (LC) of software tools (ST): software development strategies; life cycle models that implement these strategies; choosing a life cycle model for a specific project. The structured approach to software design. Classical software design technologies. Evaluating the effectiveness of the structural decomposition of software into modules. Modern structured technologies for developing software systems. Methodologies and notations for structured analysis and design of software systems. Introduction to software development automation: principles of automation; classification of CASE tools. The object-oriented approach to designing software systems. An object-oriented modeling language (for example, the Unified Modeling Language, UML). Building applications, generating program code and modeling data in an object-oriented development environment. The course is based on UML (Rational Rose).

Software Testing

Basic concepts and definitions. Reliability indicators of computer systems. Analysis of the causes of software errors. Standardization of software reliability assessment in the Republic of Belarus and abroad: current standards, software reliability models. Software testing: basic concepts, principles of organizing testing, test case design, structural and functional methods of assembly (integration) testing, testing the correctness of the final software product. System testing and its types. Regression testing. Automation of the software testing process. Software verification.

Software is a necessary component of computer information systems (IS). Software is a set of programs designed to solve particular problems on a computer. Without appropriate software, even a perfectly designed system cannot function and loses its purpose. Depending on the functions it performs, software can be divided into the following groups: 1) system software; 2) application software; 3) tool software (instrumental systems).

Software (SW):

- system software
  - operating systems
  - service systems
    - maintenance systems
    - shells and environments
    - utilities
- application software
  - user application programs
  - application packages (APPs)
    - general-purpose
    - method-oriented
    - problem-oriented
    - integrated
- tool software
  - programming systems
  - tool environments
  - simulation systems

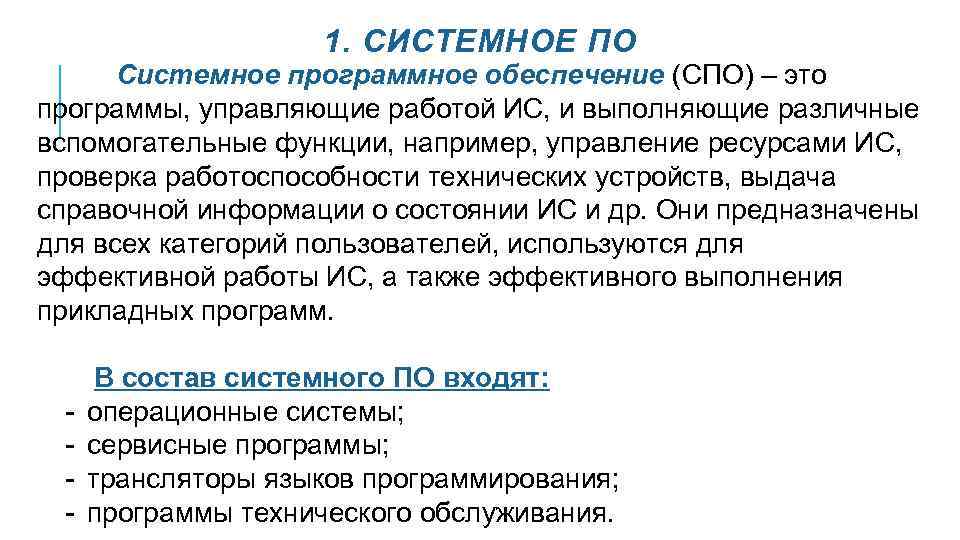

1. SYSTEM SOFTWARE

System software (SSW) consists of programs that control the operation of the IS and perform various auxiliary functions, for example, managing IS resources, checking that hardware devices are operational, and providing reference information on the state of the IS. These programs are intended for all categories of users and serve both the efficient operation of the IS and the efficient execution of application programs. System software includes: operating systems; service programs; programming language translators; maintenance programs.

OPERATING SYSTEM

An operating system (OS) is a set of programs that controls the computer hardware and its resources (RAM, disk space), launches and runs application programs, and automates input/output. Without an operating system, the computer cannot be used. The OS is loaded when the computer is turned on.

SERVICE SYSTEMS

Service systems extend the capabilities of the OS for system maintenance and make work more convenient for the user.

1) Maintenance systems are sets of software tools that perform control, testing and diagnostics; they are used to check the functioning of computer devices and to detect malfunctions while the computer is running.

2) Operating system shells are programs that let the user manage computer resources by means other than those provided by the OS, which are more understandable and efficient (for example, Norton Commander (Symantec) and FAR (File and ARchive manager)).

3) Utilities are auxiliary programs that give the user a number of additional services for frequently performed tasks or make work more convenient and comfortable: packers (archivers), antivirus programs, programs for optimizing and checking the quality of disk space, data recovery, formatting and data protection programs, CD burning software, and driver programs.

2. APPLICATION SOFTWARE

Application software is intended for solving specific user problems and for organizing the computing process of the information system as a whole. It allows the user to develop and run tasks (applications) for accounting, personnel management, etc. Application software runs under the control of system software, in particular the operating system. Application software includes:

- general-purpose application packages (APPs);
- functional application packages.

GENERAL-PURPOSE APPs

1) General-purpose APPs are universal software products designed to automate the development and operation of functional tasks of the user and of information systems in general. This class of software packages includes:

- text editors (word processors) and graphics editors;
- spreadsheets;
- database management systems (DBMS);
- integrated packages;
- CASE technologies;
- expert system shells and artificial intelligence systems.

FUNCTIONAL APPs

2) Functional APPs are software products focused on automating user functions in a specific area of economic activity. This class includes packages for accounting, technical and economic planning, development of investment projects, drawing up a business plan for an enterprise, personnel management, and automated management of an enterprise as a whole.

3. TOOL SOFTWARE

Tool software includes systems for developing new programs: programming systems such as C++, Pascal and Basic; tool environments for application development such as C++ Builder, Delphi, Visual Basic and Java, which include visual programming tools; and modeling systems, for example the MATLAB simulation system and the BPwin (business process modeling) and ERwin (database modeling) systems. Today, software is developed mainly in development environments.

INTEGRATED APPLICATION PACKAGES

Integrated APPs include a set of tools (components), each of which is functionally equivalent to a problem-oriented package. For example, the integrated Microsoft Office package includes applications that can function autonomously, independently of each other (a word processor, Excel spreadsheets, the Access DBMS, etc.). Such packages also contain system components that provide switching between applications, their interaction, and conflict-free use of shared data.

REFERENCE MODELS OF THE ENVIRONMENT AND INTERCONNECTION OF OPEN SYSTEMS

The requirement for portability and interoperability of applications led to the development of the POSIX (Portable Operating System Interface) family of standards and of communication standards. However, these standards do not cover the full range of needs, even within their intended scope. The development of IT standardization and of the open systems principle found expression in the creation of the functional Open Systems Environment (OSE) and the construction of a corresponding model that covers the standards and specifications for providing IT capabilities.

The model is aimed at heads of IT services and project managers responsible for the acquisition (development), implementation, operation and evolution of information systems built from heterogeneous software, hardware and communication tools. OSE applications can include real-time systems (RTS) and embedded systems (ES); transaction processing systems (TPS); database management systems (DBMS); various decision support systems (DSS); management ISs for administrative (Executive Information System, EIS) and production (Enterprise Resource Planning, ERP) purposes; geographic ISs (Geographic Information System, GIS); and other specialized systems in which the specifications recommended by international organizations can be applied.

From the point of view of manufacturers and users, OSE is a fairly universal functional infrastructure that regulates and facilitates the development or acquisition, operation and maintenance of secure application systems that:

- run on any platform used by the supplier or user;
- use any operating system;
- provide database access and data management;
- exchange data and interact over the networks of any suppliers and within the consumers' local networks;
- interact with users through standard interfaces in a common user-computer interface system.

OSE supports portable, scalable and interoperable application programs through standardized functionality, interfaces, data formats, and exchange and access protocols. The standards may be international or national standards or other publicly available specifications and agreements. They are available to any developer, vendor and user of computing and communications software and hardware when building systems and facilities that meet the OSE criteria.

OSE applications and tools are portable when they are implemented on standard platforms and written in standardized programming languages. They use standard interfaces to connect to the computing environment, read and create data in standard formats, and transmit data according to standard protocols supported in various computing environments. OSE applications and tools are scalable across multiple platforms and network configurations, from PCs to powerful servers and from local parallel computing systems to large grid systems. A user may notice differences in the computing resources available on a given platform only through indirect signs, such as the speed of the application, and never through system failures.

OSE applications and tools are interoperable if they provide services to the user through standard protocols, data exchange formats and interfaces for collaborative or distributed data processing aimed at the targeted use of information. Transferring information from one platform to another over a local area network (LAN) or any combination of networks, up to global ones, must be fully transparent to applications and users and must not cause technical difficulties in use. The location of other platforms, operating systems, databases, programs and users should not matter to the application in use.

The model description uses the following elements:

1) Logical objects:

a) application software (APP);

b) the application platform, a set of software and hardware components that implement the system services used by the application software. The concept of an application platform does not imply any specific implementation of its functionality: a platform can be, for example, a single processor shared by several applications or a large distributed system;

c) the external environment of the platform, consisting of elements external to both the application software and the application platform (workstations, external peripherals for data collection, processing and transmission, communication infrastructure objects, services of other platforms, operating systems or network devices).

2) Interfaces:

a) the Application Program Interface (API), the interface between the application software and the application platform. The main function of the API is to support the portability of application software. APIs are classified by the type of service they implement: user-computer interaction, information exchange between applications, internal system services, and communication services;

b) the External Environment Interface (EEI), which provides information transfer between the application platform and the external environment, as well as between applications running on the same platform.

There are thus three classes of logical objects and two classes of interfaces. In the OSE reference model, application software comprises the program code itself together with data, documentation, testing and support aids, and training materials. The OSE reference model (OSE RM) implements and regulates the provider-user relationship: the logical objects of the application platform and the external environment are the service providers, and the application software is the user. They communicate through the set of API and EEI interfaces defined by the model.

The EEI comprises three interfaces, each with characteristics determined by the external device it serves:

1) the Communication Service Interface (CSI) provides services for interaction with external systems. Interaction is achieved by standardizing protocols and the data formats exchanged using those protocols;

2) the Human Computer Interface (HCI) is the interface through which the physical interaction between the user and the software system takes place;

3) the Information Service Interface (ISI) is the boundary of interaction with external memory for long-term data storage, achieved by standardizing the formats and syntax of data presentation.

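To make the provider-user relationship concrete, the following Python sketch models it under stated assumptions: the class and method names are hypothetical, and each API method is tagged with the EEI component (HCI, ISI or CSI) through which the platform would reach the external environment. The application function is written against the abstract platform only, so it runs unchanged on two different platform implementations, which is the portability the API is meant to provide.

```python
from abc import ABC, abstractmethod
from enum import Enum
from typing import Dict, List, Tuple


class Eei(Enum):
    """The three EEI components named in the OSE model."""
    CSI = "Communication Service Interface"
    HCI = "Human Computer Interface"
    ISI = "Information Service Interface"


class ApplicationPlatform(ABC):
    """Service provider: the application may use only these API methods."""

    def __init__(self) -> None:
        self.eei_log: List[Eei] = []  # EEI component used by each API call

    @abstractmethod
    def display(self, text: str) -> None:
        """User-computer interaction, served through the HCI."""

    @abstractmethod
    def save(self, key: str, value: str) -> None:
        """Long-term storage in external memory, served through the ISI."""

    @abstractmethod
    def send(self, peer: str, message: str) -> None:
        """Exchange with an external system, served through the CSI."""


class SingleHostPlatform(ApplicationPlatform):
    """Hypothetical platform: one processor, all resources local."""

    def __init__(self) -> None:
        super().__init__()
        self.screen: List[str] = []
        self.disk: Dict[str, str] = {}
        self.outbox: List[Tuple[str, str]] = []

    def display(self, text: str) -> None:
        self.eei_log.append(Eei.HCI)
        self.screen.append(text)

    def save(self, key: str, value: str) -> None:
        self.eei_log.append(Eei.ISI)
        self.disk[key] = value

    def send(self, peer: str, message: str) -> None:
        self.eei_log.append(Eei.CSI)
        self.outbox.append((peer, message))


class DistributedPlatform(SingleHostPlatform):
    """Hypothetical platform that spreads storage over several nodes.
    display() and send() are inherited unchanged."""

    def __init__(self, nodes: int = 3) -> None:
        super().__init__()
        self.nodes: List[Dict[str, str]] = [{} for _ in range(nodes)]

    def save(self, key: str, value: str) -> None:
        self.eei_log.append(Eei.ISI)
        # Deterministic node choice; the application never sees this detail.
        self.nodes[sum(key.encode()) % len(self.nodes)][key] = value


def application(platform: ApplicationPlatform) -> None:
    """Service user: calls only the API, so it is portable across platforms."""
    platform.save("report", "Q1 totals")
    platform.display("Report saved")
    platform.send("accounting", "report ready")


if __name__ == "__main__":
    for p in (SingleHostPlatform(), DistributedPlatform()):
        application(p)
        print(type(p).__name__, [e.name for e in p.eei_log])
```

Running `application()` on either platform records the same sequence of EEI components; only the platform's internal storage layout differs, and the application code does not change.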

The application platform provides its services through both of its main interfaces (API and EEI). OSE supports the operation of application software through defined rules, components, methods of interfacing system elements (Plug Compatibility) and a modular approach to the development of software and information systems. The advantages of the model are that it treats the external environment as an independent element with its own functions and interface, and that it can be used to describe systems built on a client-server architecture. A relative disadvantage is that not all of the required specifications are yet available as internationally harmonized standards.

CRITERIA FOR SELECTING THE SOFTWARE

§ stability of the product and the company;

§ price / budget;

§ ability to integrate with other programs;

§ features provided;

§ availability and efficiency of customer service;

§ the number of figures and symbols available in the database;

§ your purpose, needs and intended use of the software;

§ the volume and complexity of the data to be processed;

§ compatibility with Macintosh or Windows platforms;

§ availability of additional programs that extend the software's capabilities.

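The criteria above mix hard constraints (budget, platform compatibility) with qualities that are usually rated and weighed against each other. One common way to combine them, offered here only as a hedged sketch and not as a method prescribed by the text, is to discard candidates that violate a hard constraint and rank the rest by a weighted score. The candidate names, ratings and weights below are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Candidate:
    name: str
    price: float          # hard constraint: compared with the budget
    platforms: Set[str]   # hard constraint: e.g. {"Windows", "Macintosh"}
    ratings: Dict[str, int] = field(default_factory=dict)  # soft criteria, 1..5


# Hypothetical weights for the rated criteria (they sum to 1.0).
WEIGHTS: Dict[str, float] = {
    "stability": 0.25,    # product and company stability
    "integration": 0.20,  # ability to integrate with other programs
    "features": 0.25,     # features provided
    "support": 0.15,      # customer service
    "extensions": 0.15,   # additional programs
}


def weighted_score(c: Candidate) -> float:
    """Sum of weight * rating; a missing rating counts as 0."""
    return sum(w * c.ratings.get(k, 0) for k, w in WEIGHTS.items())


def shortlist(cands: List[Candidate], budget: float, platform: str) -> List[Candidate]:
    """Drop candidates that break a hard constraint, then rank the rest."""
    eligible = [c for c in cands if c.price <= budget and platform in c.platforms]
    return sorted(eligible, key=weighted_score, reverse=True)


if __name__ == "__main__":
    demo = [
        Candidate("A", 300, {"Windows", "Macintosh"},
                  {"stability": 4, "integration": 3, "features": 5, "support": 4, "extensions": 2}),
        Candidate("B", 200, {"Windows"},
                  {"stability": 5, "integration": 4, "features": 3, "support": 3, "extensions": 4}),
        Candidate("C", 900, {"Windows"}, {k: 5 for k in WEIGHTS}),
    ]
    for c in shortlist(demo, budget=500, platform="Windows"):
        print(c.name, round(weighted_score(c), 2))
```

Separating the hard constraints from the weighted score keeps a product that is over budget (candidate "C" here) from winning merely because it rates well on everything else.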

THE MAIN TRENDS IN SOFTWARE DEVELOPMENT ARE:

- standardization of both individual software components and the interfaces between them, which allows an application to be used on different hardware platforms and under different operating systems, and to interact with a wide range of other applications;

- a focus on object-oriented design and programming of software tools, which, together with their standardization, enables a transition to a new technology: "assembling" applications from ready-made components;

- intellectualization of the user interface: making it intuitive and non-procedural, and bringing the language of communication with the computer closer to the user's professional language; customizing the interface to the characteristics and needs of a particular user in their dialogue with the computer; using multimedia in the user interface;

- intellectualization of programs and software systems: artificial intelligence methods are increasingly used in application design, making applications smarter and able to solve increasingly complex, poorly formalized problems;

- universalization of individual components (modules) of application programs and the gradual transition of these components, and then of the programs themselves, from specialized application software to universal application software. Word processors followed the same path: at one time they belonged to specialized application software;

- orientation toward joint, group work of users solving a problem with software tools; accordingly, software development pays increasing attention to communication components;

- embedding of software in the hardware of mass-market consumer goods such as televisions and telephones. On the one hand, this raises the requirements for the reliability of the software and its user interface; on the other, it requires users to have a somewhat fuller knowledge of basic software concepts (files, folders, etc.) and of typical actions in a software environment;

- gradual transition of software components typical of specialized application software into universal application software. Tools that were previously available only to specialists in a specific problem area are becoming available to a wide range of users: 15-20 years ago, text editors were used mainly by employees of publishing departments.

BUSINESS INFO

History. The company was founded on May 14, 2001, to meet the demand for legal information resources. Professional Legal Systems LLC is one of Vladimir Grevtsov's companies. Today it is one of the leaders in distributing legal information in electronic form in the Republic of Belarus.

Products. Professional Legal Systems LLC produces and distributes the analytical legal system Business-Info. Until 2008, the company was represented on the market by the reference and analytical system "Glavbuh-Info", which was discontinued when APS "Business-Info" entered the market.

Our clients. The number of organizations that have chosen APS "Business-Info" as their source of legal information is growing steadily and currently stands at about 10,000.

INFORMATION SEARCH SYSTEM "ETALON"

The Standard Databank of Legal Information of the Republic of Belarus with the information retrieval system "ETALON" version 6.1 (EBDPI) is the main state legal information resource. It is a set of data banks, formed and maintained on an ongoing basis, that includes "Legislation of the Republic of Belarus", "Decisions of local government and self-government bodies" and "International agreements". EBDPI is distributed as an electronic copy (IRS "ETALON"). IRS "ETALON" includes from 3 to 6 data banks, including: Legislation of the Republic of Belarus; International agreements; Decisions of local government and self-government bodies; Orders of the President and the Head of the Presidential Administration of the Republic of Belarus (provided by agreement with the Administration of the President of the Republic of Belarus); Orders of the Government and the Prime Minister of the Republic of Belarus; Arbitration court practice; Law enforcement practice.

CONSULTANT PLUS

Consultant is a reference legal system developed for lawyers and accountants in the Republic of Belarus. It includes documents of the following types: regulatory legal acts of the Republic of Belarus; comments and explanations to documents; comments on specific situations from legal and accounting practice; informational articles from periodicals; books and collections on accounting and legal topics; analytical reviews; reference information (exchange rates in the Republic of Belarus, the refinancing rate, calendars, etc.); approved document forms; correspondence of accounts; useful analytical materials for specialists in various fields, and others. Consultant is an excellent solution and a big plus for your business in the Republic of Belarus.

MICROSOFT VISIO

Microsoft Visio is a vector graphics editor for creating diagrams and flowcharts on Windows. It is available in three editions: Standard, Professional and Pro for Office. Visio was originally developed and sold by Visio Corporation. Microsoft acquired the company in 2000, when the product was called Visio 2000, and later rebranded it as a Microsoft Office application. Visio supports an extensive set of templates: business flowcharts, network diagrams, workflow diagrams, database models and software diagrams. They can be used to visualize and streamline business processes, track project progress and resource utilization, optimize systems, and draw organizational charts, network maps and building plans.

Information system (IS) software includes:

· basic software: operating systems (OS) and database management systems (DBMS);

· software tools for modeling and designing IS;

· IS implementation tools: programming languages;

· application software that provides automated execution of tasks in the subject area.

6.1 Comparative analysis of operating systems

The operating system determines the efficiency of application execution, performance, the degree of data protection and network reliability, the possibility of using equipment from different manufacturers, and the possibility of applying and developing modern information technologies.

The choice of OS is made based on the following requirements:

1. Cost / performance ratio.

2. Functionality.

3. Reliability of functioning.

4. Data protection.

5. The ability to generate a kernel for a specific hardware configuration.

6. Operating features and modes of the OS that allow the assigned tasks to be solved.

7. All modern operating systems support network operation, but the requirements for the server and for workstations may differ in the following parameters:

a) the required amount of RAM;

b) the required amount of disk memory;

c) compatibility with other systems.

8. Support for remote terminal access.

9. Prospects for the development of the entire computing system.

10. Support for standards.

11. Ease of administration and installation.
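Two of these requirements lend themselves to a simple calculation: the cost/performance ratio (requirement 1) and the role-dependent RAM and disk requirements for servers and workstations (requirement 7). The sketch below checks that a candidate OS fits every planned machine and then compares total licence cost per unit of benchmark performance. The OS names, prices, benchmark figures and hardware sizes are invented for illustration, and counting only licence cost is a simplifying assumption.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class OsCandidate:
    name: str
    licence_cost: float          # cost per installation (assumed: licences only)
    benchmark: float             # relative performance, higher is better
    min_ram_mb: Dict[str, int]   # requirement 7a, per role
    min_disk_mb: Dict[str, int]  # requirement 7b, per role


@dataclass
class Machine:
    role: str      # "server" or "workstation"
    ram_mb: int
    disk_mb: int


def fits(os_: OsCandidate, m: Machine) -> bool:
    """RAM and disk needs depend on whether the machine is a server or a workstation."""
    return m.ram_mb >= os_.min_ram_mb[m.role] and m.disk_mb >= os_.min_disk_mb[m.role]


def cost_per_performance(os_: OsCandidate, machines: List[Machine]) -> Optional[float]:
    """Total licence cost divided by benchmark score (lower is better),
    or None if the OS cannot run on every planned machine."""
    if not all(fits(os_, m) for m in machines):
        return None
    return os_.licence_cost * len(machines) / os_.benchmark


def best_os(cands: List[OsCandidate], machines: List[Machine]) -> Optional[str]:
    """Name of the fitting candidate with the lowest cost/performance ratio."""
    rated = []
    for c in cands:
        ratio = cost_per_performance(c, machines)
        if ratio is not None:
            rated.append((ratio, c.name))
    return min(rated)[1] if rated else None


if __name__ == "__main__":
    network = [Machine("server", 4096, 100_000)] + [Machine("workstation", 1024, 20_000)] * 2
    candidates = [
        OsCandidate("OS-A", 100, 50, {"server": 2048, "workstation": 512},
                    {"server": 20_000, "workstation": 5_000}),
        OsCandidate("OS-B", 50, 40, {"server": 8192, "workstation": 256},
                    {"server": 10_000, "workstation": 2_000}),
        OsCandidate("OS-C", 60, 45, {"server": 2048, "workstation": 512},
                    {"server": 20_000, "workstation": 5_000}),
    ]
    for c in candidates:
        print(c.name, cost_per_performance(c, network))
    print("best:", best_os(candidates, network))
```

Here "OS-B" is cheapest per licence but is excluded because the planned server has too little RAM for it, which is exactly why requirement 7 is checked per role before costs are compared.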

Based on the above requirements, the comparative analysis covers the currently popular Windows and UNIX operating systems, which are designed for direct network operation and represent two competing directions.

Conclusion.

For application servers (SQL servers), it is advisable to use UNIX; other operating systems are less efficient in this role.

Any of the modern operating systems can be used to implement a file server; however, Windows NT requires the most hardware resources. When the bandwidth of the communication channels is low, UNIX can optimize access by routing packets.

For remote access servers, it is advisable to use UNIX, since it does not require the installation of any additional packages. Windows NT is very expensive, requires a lot of hardware resources and is not designed for remote access servers working over low-speed connections.

In terms of cost, performance, functionality, data protection and development prospects, the most efficient operating systems are those of the UNIX family.

Basic requirements for operating the OS in network mode

When a multi-user database is used not only on one specialist's workstation but also on the workstations of other specialists, the network operating system must be able to provide a file server. In addition, server-based network operating systems must deliver high performance in networks with a large number of users.

When building a server-based LAN, reliability is the key requirement, followed by workstation support and performance. For reliability, the most important factor is effective memory management: without it, a large number of users can lead to situations in which workstations lose communication with each other and file servers stop working. Reliability also covers compatibility: the network OS should work well with all common multi-user applications and standard software. Reliability further means that the server and workstations run smoothly on the network, applications run correctly, and the network operating system protects data from hardware failures. This requires a complete set of error-protection tools, data protection at the level of individual database records, effective memory management and reliable multitasking mechanisms. Workstation support requirements are also important: if network drivers occupy too much memory on each PC, the workstation's application software and memory-resident utilities may fail to run.

Performance is especially important for multi-user software packages, because it determines how efficiently SQL queries are executed and how many additional users the system can serve before a second server has to be purchased.

After performance, the next most important factor is management tools. Flexible administration tools reduce the time needed to set up and configure the network. The network operating system should also provide flexible options for sharing LAN resources such as printers, modems and external storage.

Choosing a DBMS

The choice of a DBMS depends on how the local and network databases (DB) are organized, on cost, on the specifics of the tasks being solved, and on functional features (integrity support, level of data protection, speed, the amount of data that can be processed efficiently, network support, availability of a development environment, and interaction with other applications, including Internet applications).

The following ways of organizing a network database should be considered:

1. The database is stored centrally on the server and accessed from workstations over the network;

2. The database is distributed among the workstations, with a rigidly fixed allocation of data to computers.
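The practical difference between the two methodologies is where a given piece of data lives. A minimal sketch, assuming a hypothetical static table that assigns each data area to one workstation: under the centralized scheme every lookup resolves to the server, while under the fixed distributed scheme the placement table is rigid and an unassigned table is an error rather than something the system relocates.

```python
from typing import Dict

# Hypothetical fixed allocation for methodology 2: each data area is
# permanently assigned to one workstation.
FIXED_PLACEMENT: Dict[str, str] = {
    "payroll": "ws-accounting",
    "inventory": "ws-warehouse",
    "orders": "ws-sales",
}


def locate(table: str, scheme: str) -> str:
    """Return the computer that holds `table` under the given scheme."""
    if scheme == "centralized":
        # Methodology 1: one database on the server, reached over the network.
        return "server"
    if scheme == "fixed":
        # Methodology 2: placement is rigid, so an unassigned table is an
        # error rather than something the system relocates on its own.
        if table not in FIXED_PLACEMENT:
            raise LookupError(f"no workstation is assigned to {table!r}")
        return FIXED_PLACEMENT[table]
    raise ValueError(f"unknown scheme {scheme!r}")


if __name__ == "__main__":
    for scheme in ("centralized", "fixed"):
        print(scheme, {t: locate(t, scheme) for t in FIXED_PLACEMENT})
```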

Choosing a network protocol (ODBC, Microsoft, Novell).

The network protocol is used to access data in a remote database and makes it possible to integrate heterogeneous databases.

The choice is made in accordance with the international ISO standard (the seven-layer OSI model) and is determined by the following criteria:

1. Performance and efficiency sufficient to ensure the required speed of processing requests and responses.

2. The possibility of implementing it with existing software using available system modules. For example, if SQL servers of the same type are installed on the network, their own network protocol can be used without additional software implementing the standard protocol (ODBC).

The network protocol must comply with the international ISO standard. Such protocols include ODBC, which can be used to work with any DBMS that provides an ODBC driver.
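The benefit of a standard access interface is that application code does not depend on a particular DBMS. ODBC itself is a C-level API, so as a stand-in this sketch uses Python's DB-API 2.0 (PEP 249), a comparable standard call-level interface: the function below uses only `cursor()`, `execute()` and `fetchall()`, so it would work unchanged with any DB-API driver that accepts the same SQL (the third-party `pyodbc` package, for example, exposes ODBC data sources through this interface). The `entries` table is hypothetical; the built-in `sqlite3` module is used only to run the example.

```python
import sqlite3


def total_by_account(conn) -> dict:
    """Application logic that relies only on the standard DB-API calls
    cursor(), execute() and fetchall(), not on a particular DBMS."""
    cur = conn.cursor()
    cur.execute(
        "SELECT account, SUM(amount) FROM entries GROUP BY account ORDER BY account"
    )
    return {account: total for account, total in cur.fetchall()}


def demo_connection() -> sqlite3.Connection:
    """Build a throwaway in-memory SQLite database with the hypothetical table."""
    conn = sqlite3.connect(":memory:")
    cur = conn.cursor()
    cur.execute("CREATE TABLE entries (account TEXT, amount INTEGER)")
    cur.executemany(
        "INSERT INTO entries VALUES (?, ?)",
        [("cash", 100), ("bank", 250), ("cash", -30)],
    )
    conn.commit()
    return conn


if __name__ == "__main__":
    print(total_by_account(demo_connection()))  # {'bank': 250, 'cash': 70}
```

Only `demo_connection()` is SQLite-specific; swapping it for another driver's `connect()` leaves `total_by_account()` untouched.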

User authorization can be organized in two ways:

1. Under the ISO standard, the list of users and their registered rights is stored together with the main database, and authorization is performed by the DBMS.

2. The list of users is stored not in the database itself but in the operating system. In this case, user authorization is network-based and is performed at the OS level.
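Under the first option the rights table is simply part of the database and is checked on each request. The sketch below imitates this with a hypothetical `user_rights` table in SQLite; SQLite has no built-in user accounts, so here the check is done by application code rather than by the DBMS itself. Under the second option, the `user` argument would instead be a name already authenticated by the operating system, and the list of rights would be kept by the OS rather than in this table.

```python
import sqlite3


def init_rights(conn: sqlite3.Connection) -> None:
    """Option 1: store the user list and rights in the database itself."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS user_rights ("
        " name TEXT NOT NULL, privilege TEXT NOT NULL,"
        " PRIMARY KEY (name, privilege))"
    )


def grant(conn: sqlite3.Connection, user: str, privilege: str) -> None:
    """Register a right; granting the same right twice is harmless."""
    conn.execute("INSERT OR IGNORE INTO user_rights VALUES (?, ?)", (user, privilege))
    conn.commit()


def authorized(conn: sqlite3.Connection, user: str, privilege: str) -> bool:
    """True if `user` holds `privilege` according to the in-database list."""
    row = conn.execute(
        "SELECT 1 FROM user_rights WHERE name = ? AND privilege = ?",
        (user, privilege),
    ).fetchone()
    return row is not None


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    init_rights(db)
    grant(db, "alice", "read")
    print(authorized(db, "alice", "read"), authorized(db, "alice", "write"))
```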

Database backup.

To ensure reliable data storage, a backup copy of the database must be created. Centralized databases are usually backed up on the server. For distributed databases, there are different strategies:

1) creating a backup copy of the database on the workstation itself or on any other workstation on the network;

2) creating a backup on a backup server: a special backup program automatically creates a mirror copy of the database on any network computer with sufficient capacity, which then serves as the backup server.
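For a file-based DBMS, the second strategy can be approximated with an online copy to a location on the backup computer. The sketch below uses Python's built-in `sqlite3` module, whose `Connection.backup()` method copies a database while it remains in use, and first checks that the target has enough free space, reflecting the "sufficient capacity" condition above. The target path is hypothetical, and a real mirroring setup would run this on a schedule or use the DBMS's own replication tools.

```python
import os
import shutil
import sqlite3
import tempfile


def database_size(conn: sqlite3.Connection) -> int:
    """Approximate size of the database in bytes (page count x page size)."""
    pages = conn.execute("PRAGMA page_count").fetchone()[0]
    page_size = conn.execute("PRAGMA page_size").fetchone()[0]
    return pages * page_size


def backup_database(source: sqlite3.Connection, target_path: str) -> None:
    """Copy `source` into `target_path` (e.g. a hypothetical share on the
    backup server) after checking that the target has enough free space."""
    target_dir = os.path.dirname(os.path.abspath(target_path))
    if shutil.disk_usage(target_dir).free < database_size(source):
        raise OSError(f"not enough free space in {target_dir} for the backup")
    target = sqlite3.connect(target_path)
    try:
        source.backup(target)  # online copy; `source` stays usable meanwhile
    finally:
        target.close()


if __name__ == "__main__":
    src = sqlite3.connect(":memory:")
    src.execute("CREATE TABLE t (x INTEGER)")
    src.executemany("INSERT INTO t VALUES (?)", [(i,) for i in range(10)])
    src.commit()
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "mirror.db")
        backup_database(src, path)
        copy = sqlite3.connect(path)
        print(copy.execute("SELECT COUNT(*) FROM t").fetchone()[0])
        copy.close()
```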