Long ago, back in the last millennium, the main job of a video card was to output images from computer memory to the display. Among device manufacturers there was confusion and disarray, and games shipped with their own sets of drivers for a variety of devices (including video cards). Then it arrived: the S3 Trio64 V+, which became a kind of standard. An almost exact copy, the S3 Trio64 V2, was released a little later. Later still came the S3 ViRGE, already equipped with 4 MB of video memory.

S3 Trio64 V2 with 1 MB of video memory on board. As you can see, the card can be upgraded to 2 MB by adding two more 512 KB SOJ EDO DRAM chips.

1 MB of video memory is enough for 3 buffers at a screen resolution of 640 × 480 pixels with 256 colors. Heroes 3 required 2 MB because it ran at 800 × 600 pixels with 65,536 colors.
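The arithmetic behind these figures can be sketched as follows. This is only an illustration, not a description of how any particular game allocated memory: the helper name `framebuffer_bytes` is hypothetical, and double buffering for the 800 × 600 case is an assumption.

```python
def framebuffer_bytes(width, height, bits_per_pixel, buffers=1):
    """Bytes needed for `buffers` full-screen buffers (hypothetical helper)."""
    return width * height * bits_per_pixel // 8 * buffers


MB = 1024 * 1024

# 640 x 480 with 256 colors (8 bits per pixel), three buffers
vga_triple = framebuffer_bytes(640, 480, 8, buffers=3)

# 800 x 600 with 65,536 colors (16 bits per pixel), two buffers (assumed)
svga_double = framebuffer_bytes(800, 600, 16, buffers=2)

print(vga_triple, vga_triple <= 1 * MB)
print(svga_double, svga_double <= 1 * MB, svga_double <= 2 * MB)
```

Three 8-bit buffers take 921,600 bytes, just under 1 MB. Two 16-bit buffers take 1,920,000 bytes, which no longer fits in 1 MB but does fit in 2 MB.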

And everything was simple and clear: the processor did the calculations and sent data to memory, and the video card sent the picture to the display. And then 3dfx literally burst into the computer world with its revolutionary Voodoo technology.

In fairness, it must be said that other technologies existed before the 3dfx solutions appeared, in particular the NVIDIA NV1 chip (which failed).

So what was Voodoo? It was a so-called 3D accelerator: an add-in board for producing high-quality, high-performance graphics. One important difference from modern solutions is that this accelerator required a conventional (2D) video card to be present. For its cards, 3dfx developed an API, Glide, a convenient tool for working with Voodoo (for game developers in the first place).


3D graphics accelerator based on the 3dfx Voodoo 2. This chipset introduced SLI technology: two accelerators could be connected to work in tandem, one processing the even lines of the image and the other the odd lines, which in theory doubled performance.
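The even/odd split described in the caption can be illustrated with a toy model. This is a sketch of the idea only, not of the Voodoo 2 hardware or the Glide API: the function names and the `render_line` callback are hypothetical.

```python
def split_scanlines(height):
    """Return (even_rows, odd_rows) for a frame with `height` lines."""
    return list(range(0, height, 2)), list(range(1, height, 2))


def render_frame_interleaved(height, render_line):
    """Assemble one frame from two 'cards', each rendering alternate lines."""
    even_rows, odd_rows = split_scanlines(height)
    frame = [None] * height
    for y in even_rows:
        frame[y] = render_line(y, card=0)  # first accelerator: even lines
    for y in odd_rows:
        frame[y] = render_line(y, card=1)  # second accelerator: odd lines
    return frame


def demo_line(y, card):
    # Stand-in for real rendering work (hypothetical)
    return f"line {y} from card {card}"


frame = render_frame_interleaved(6, demo_line)
for row in frame:
    print(row)
```

Each card handles half of the lines, which is where the theoretical twofold speedup comes from.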

Seeing the potential of the new market, other big players joined the fight for the consumer with products of their own. The main ones were 3D RAGE from ATI, RIVA from NVIDIA, and Millennium from Matrox, a name spoken in a reverent whisper. Software developers added fuel to the fire in a noble effort to standardize the functions used (and so free programmers from having to port their code to video cards from different manufacturers): Microsoft released Direct3D (part of the DirectX library) and Silicon Graphics released OpenGL.

Perhaps 3dfx's last success was the Voodoo3. At the time this card was the fastest in 3D graphics, but NVIDIA's RIVA TNT2 was hot on its heels. In an effort to outperform the competition, 3dfx threw all its efforts into developing a new chipset. Then NVIDIA released the GeForce 256, which in effect drove the last nail into the coffin of the rivalry: the new 3dfx chipset was no match for the GeForce 256. To regain the lead, 3dfx decided to release a multi-processor configuration of the updated chipset, the VSA-100: the Voodoo5. But time had been lost. By the time the new 3dfx-based accelerators appeared, competitors had cut prices on their previous products and soon introduced new ones that were not only cheaper but also faster than the Voodoo 5.

For the former leader in 3D accelerators it was all over. Most of its assets were acquired by NVIDIA, after which everything associated with 3dfx was phased out, including the recognizable names Voodoo and Glide API. From that moment on there were, in effect, only two manufacturers of high-performance solutions for home users: ATI and NVIDIA.

In 2000, ATI released a cult next-generation processor, the Radeon. The brand proved so popular that it is still in use. This graphics processor was the first solution with full DirectX 7 support. Earlier, NVIDIA had introduced its own cult chipset, which pinned Voodoo5 to the mat and launched the GeForce brand. Both names are firmly entrenched in users' minds, and the question "What is your video card?" may well be answered with "Radeon" or "GeForce".

At different times the lead has passed from one company to the other. It is impossible to say unequivocally which chipset makes the better video card: much depends on the video card manufacturers. But let's leave the "holy wars" aside and briefly go over the main product lines.

So, the year 2000. ATI produced the 7000 series, Radeon 7000 and 7200, and a year later the 7500, made on a finer manufacturing process. The cards supported DirectX 7.0 and OpenGL 1.3.

NVIDIA brought GeForce 2 and GeForce 3 to market, supporting DirectX 7 (DirectX 8 in the case of GeForce 3) and OpenGL 1.2.

ATI's next generation consisted of the 8000 series (the Radeon 8500 and its light version, the Radeon 8500 LE) and the first 9000-series cards (Radeon 9000, 9100, 9200, 9250 with additional suffixes), supporting DirectX 8.1 and OpenGL 1.4. In 2002 the regular 9000 series appeared, Radeon 9500 to Radeon 9800, as well as the start of the X series: X300 to X850 and X1050 to X1950. Supported APIs: DirectX 9.0b and OpenGL 2.0.

NVIDIA released the GeForce4 in MX versions for entry-level systems and Ti (Titanium) versions for mid-range and top-end systems, supporting DirectX 8.1 and OpenGL 1.3. The next line, GeForce FX, brought a small scandal. The company released chipsets with support for shader version 2.0a and promoted the technology actively. But, as tests showed, the shaders ran much slower than on the competitor's (ATI's) cards. To salvage the situation, NVIDIA changed its driver code so that in popular benchmarks the shader code was replaced with its own, faster version.

Be that as it may, the next line returned to numeric naming: GeForce 6, whose video processors supported DirectX 9.0c and OpenGL 2.0. The seventh generation, GeForce 7, was a direct successor to the previous one and brought nothing new.

In 2006, ATI was acquired by AMD. The old brand was kept, however, and products continued to be labeled ATI Radeon. The product naming changed once again: the X series gave way to the HD series (which, incidentally, is still being released today). The new HD2000 cards support DirectX 10.0 and OpenGL 3.3. The HD3000 series is essentially no different (except that some models support DirectX 10.1). The next line, HD4000, also built on the previous solutions: a finer process technology reduced heat generation and allowed a painless increase in the number of stream processors responsible for graphics processing. Most top-end solutions received GDDR5 memory (lower models use GDDR3).

But NVIDIA made a breakthrough in computing with the eighth generation of its product, GeForce 8. In addition to DirectX 10 and OpenGL 3.3, it supports CUDA technology, which lets you offload some calculations from the CPU to the graphics card's processors. Alongside it, another technology was introduced, PhysX, which can run its calculations either on the CPU or on a CUDA-capable graphics processor.

Zotac 9800GT video card with 512 MB of memory. An extremely unsuccessful model in terms of heat dissipation: I had to install an additional cooler for better cooling.

The ninth generation, GeForce 9, essentially built on previous successes. In the course of development the designation was changed: the new line was labeled GeForce 100.

In the tenth generation, GeForce 200, CUDA was updated to version 2.0. Hardware support for H.264 and MPEG-2 was also added. Some models began to be equipped with fast GDDR5 memory.

At the end of 2009, ATI updated its lineup with the HD5000. In addition to a newer process technology, the cards gained support for DirectX 11 and OpenGL 4.1.

In 2010, AMD decided to drop the ATI brand from future cards. The new series, HD6000, came out under the AMD Radeon name. 3D video output also became available.

NVIDIA also moved to a new process technology and introduced new graphics processors, the GeForce 400, supporting DirectX 11 and OpenGL 4.1. A huge amount of work was done on the chip, and MIMD made it possible to increase performance significantly.

The next step in the line, the GeForce 500, traditionally offered lower power consumption (thanks to optimization) and improved performance.

The present day

In 2012, both corporations presented updated lines of their products: GeForce 600 from NVIDIA and HD7000 from AMD.

NVIDIA GPUs received new internals based on the Kepler architecture, resulting in higher performance and lower heat dissipation. The 28 nm process technology also helped (entry-level cards use 40 nm). Supported technologies are DirectX 11.1 and OpenGL 4.3. The model number gives a good indication of which card is faster; look at the last 2 digits of the series:

- GT 610-640 - so-called office cards with mediocre performance (less than $100);

- GTX 650 and GTX 650 Ti - entry-level gaming cards (less than $150);

- GTX 660 - mid-range gaming cards (less than $250);

- GTX 660 Ti, GTX 670-690 - high-performance gaming cards and top-end video cards (the cost can reach $1000 and more);

- GTX Titan, GTX 780 - top-end gaming cards.

The fastest dual-processor AMD video card, the Radeon HD 7990. At full load it consumes 500 watts, which on the one hand requires a powerful power supply and on the other effectively rules out buying a second card for CrossFire mode.

AMD chipsets received the Graphics Core Next architecture and are manufactured on a 28 nm process. Supported technologies are DirectX 11.1 and OpenGL 4.2. The purpose of a video card can be determined from the last 3 digits:

- HD77x0 - entry-level gaming video cards (less than $200);

- HD78x0 - mid-range gaming video cards (less than $300);

- HD79x0 - advanced and top-end gaming video cards (the cost can reach $1000 and more).

On the Russian market, the "x" in the model names stands for the digits 5, 7 and 9. The digit 5 corresponds to the lowest performance in the class, but also the lowest price. Conversely, 9 marks the fastest card in its class.

It would seem that the model naming is simple and clear, and you can be sure that a card on an "older" chipset will always be faster than its "younger" sibling. Unfortunately, this is not always the case. Even cards built on the same chipset can differ in performance by 20% (at almost the same cost). Why does this happen? Some manufacturers decide to save on components, in particular by installing cheaper memory or using a narrower memory bus. Others, on the contrary, rely on quality components and overclock and test their cards. Do not forget the cooling system either: a fast card with a buzzing fan can take all the fun out of gaming (especially at night). So do not be lazy: visit authoritative websites and read test reviews to find a card that is not only fast but also quiet and well cooled. Good resources: ixbt, ferra, easycom.

Both companies, AMD and NVIDIA, offer solutions that can satisfy almost any user. Both have a solution for 3D support in games and movies: AMD HD3D and NVIDIA 3D Vision. Their video cards can drive several monitors, to the point that you can use several monitors for a single game. Both AMD and NVIDIA also have technologies for combining several video cards, CrossFire and SLI respectively, thanks to which performance in some games practically doubles (and even triples with 3 cards installed). It is a very good way to increase the performance of your video system in the future.

Gaming on 3 screens with NVIDIA® 3D Vision™ technology. The field of view is significantly wider.

It only remains to decide which features you need and how much money you are willing to spend. For 3D, for example, you need a suitable monitor and glasses. PhysX is an NVIDIA technology, and shifting some tasks from the CPU to the graphics processor can have a positive effect on overall performance.

You surely have a burning question: which video card is currently the fastest? It is the dual-processor flagship Radeon HD 7990, built on two AMD Radeon HD 7970 graphics processors.

And how do you choose a card to fit your budget without losing out? A special table that calculates the absolute cost of 1% of "ideal" performance helps answer this question.
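The metric used by such a table is easy to compute yourself. The sketch below is a guess at the calculation, not the table's actual method: `cost_per_percent` is a hypothetical helper, and the card names, prices and performance percentages are made up for illustration.

```python
def cost_per_percent(price, performance_percent):
    """Price of one percent of 'ideal' performance (hypothetical helper)."""
    if performance_percent <= 0:
        raise ValueError("performance_percent must be positive")
    return price / performance_percent


# Made-up example data: name -> (price in dollars, % of 'ideal' performance)
cards = {
    "Card A": (150.0, 40.0),
    "Card B": (300.0, 75.0),
    "Card C": (1000.0, 100.0),
}

# Cheapest percent of performance first
ranking = sorted(cards, key=lambda name: cost_per_percent(*cards[name]))

for name in ranking:
    print(name, cost_per_percent(*cards[name]))
```

With these invented numbers the fastest card is also the worst value, which is the kind of trade-off the table is meant to expose.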

Open the video card performance table (in a new window)

Appendix 1

NVIDIA has released a new series of video cards, the 7xx. Most of the boards in this series fill the gaps (both in price and in performance) that inevitably arise as a lineup develops. The GTX 690 is very fast, and the GTX Titan is an extremely quiet card and the fastest single-GPU one, but both pulled far ahead of the GTX 680. The GTX 770 and GTX 780 are meant to close that performance gap. The GTX 760 was announced recently. The main feature of the new series is higher automatic overclocking frequencies than on previous cards.

Appendix 2

AMD has released a new monster, the dual-chip Radeon R9 295X2. It differs from its single-chip "parent", the Radeon R9 290X, in a slightly higher core frequency and doubled specifications elsewhere: 2 × 512-bit memory buses and double the heat dissipation, as much as 500 watts! Cooling is handled by a water cooler with an external unit. The announced price of this "toy" is $1500, more than 2.5 times that of the single-chip card. The price is high, of course, but the performance is off the charts: at the moment there are simply no competitors. It is the only card capable of delivering over 60 FPS in Metro: Last Light at Full HD resolution and maximum settings, 71 FPS to be precise (for comparison, the GeForce GTX 780 Ti delivers less than 53 FPS).

The GeForce GTX TITAN Z, a dual-chip card from NVIDIA, is meant to "fix" the situation; so far it is known that it will be released. The announced price is $3000. Most likely the card will be faster than its AMD competitor, but note that even now a pair of GeForce GTX 780 Ti cards costs less than the Radeon R9 295X2. In that light, buying NVIDIA's flagship makes sense only if you have a spare 100,000 rubles and a burning desire to personally "try out" the novelty.

Most smartphones use the ARM processor architecture. It was created by the company of the same name, which also maintains it. Its designs are used in the bulk of the chipsets found in mobile devices.

However, approaches vary. Some companies license ready-made solutions, while others create their own, using ARM's designs as a basis. For this reason, the market sees a contest between stock and custom architectures for both graphics and central processors.

Which is better: Qualcomm or ARM?

The stock solutions created by ARM include processor cores and Mali graphics. They are used by chip makers such as Spreadtrum, Nvidia, Samsung and MediaTek.

Qualcomm takes a different approach. Its top-end chipsets use Kryo cores, and Snapdragon chips are equipped with Adreno graphics, developed by the company's own specialists. The existence of different architectures raises the question: which should you prefer, Qualcomm or ARM?

It is very difficult to give an unambiguous answer to this question, just as it is to decide whose graphics chips deserve first place. It depends not only on the situation but also on the specific tasks at hand, and the scales can tip one way or the other accordingly. This article is intended to help those who want to understand the issue fully.

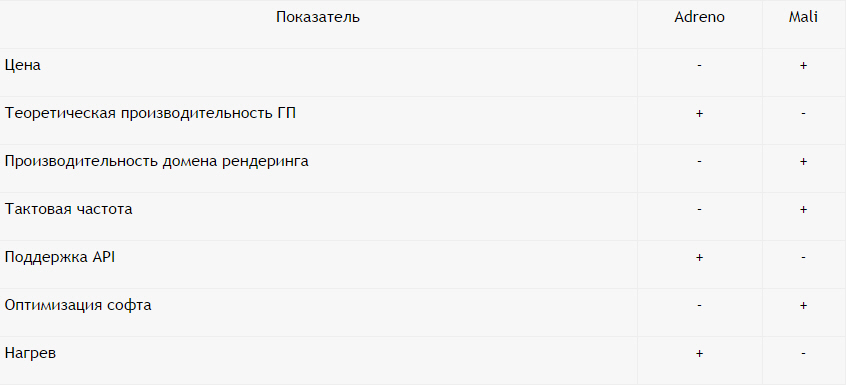

The positive and negative sides of Adreno

Let's start with the pluses:

High performance. Theoretical calculations point to a higher peak speed for Adreno graphics relative to Mali, provided the chipsets are of the same class. For the Snapdragon 625, for example, the Adreno 506 delivers about 130 GFLOPS (billions of floating-point operations per second). Its competitor, the MTK Helio P10 with a Mali T860 MP2 GPU, delivers 47 GFLOPS.

Support for more advanced APIs. The latest generation of Adreno chips supports a larger set of APIs (software tools for development), and in newer versions. The Adreno 500 series, for example, has been out for a year already and supports OpenGL ES 3.2, DirectX 12, OpenCL 2.0 and Vulkan. Mali does not support DirectX 12, and OpenCL is available only on the G series of 2016, which appeared relatively recently.

Less overheating. Adreno GPUs are less prone to overheating than Mali. That said, Qualcomm did have some processors with a tendency to throttle. But those were high-power processors whose CPU cores also ran hot. Once in reduced-performance mode, they worked almost on a par with competitors.

Now about the disadvantages:

Fairly high cost. Qualcomm has to spend more on developing its own graphics than competitors spend on licensing ARM Mali. For this reason, chipsets from the American manufacturer cost more than, say, MTK's.

Software is less well optimized. Other chip makers use Mali graphics: Huawei also uses stock ARM GPUs in its Kirin models, and MediaTek uses ARM graphics exclusively. The result is a large share of Mali in the global market, so game developers give Mali priority when optimizing their products. As a result, despite having fewer GFLOPS, Mali in mid-range and budget chips is only slightly worse in games than Adreno.

Lower fill rate in rendering. Adreno chips have a relatively weak texturing domain, the part responsible for forming the final image. The Adreno 530 can draw about six hundred million of the triangles that make up a 3D image per second; the Mali G71, 850 million.

Positive and negative aspects of Mali

Here, too, we begin with the positives:

High prevalence. Because Mali is the reference graphics solution for smartphone chipsets, games are better optimized for it than for Adreno.

Low price threshold. A license to produce chipsets with Mali is fairly cheap. This lets even small companies that cannot make multi-million investments produce chips with Mali. That in turn encourages competition and pushes ARM to develop new solutions. In addition, buyers of devices with Mali graphics end up spending less.

High clock rates. Mali graphics processors reach frequencies of 1 GHz, while the competition does not exceed 650 MHz. The higher frequency lets Mali chips do better in games that handle multithreaded 3D processing poorly.

Powerful rendering domain. The top Mali G71 GPU can render about eight hundred and fifty million triangles per second, which is equivalent to twenty-seven billion pixels, whereas the Adreno 530 can handle only 8 billion. It is therefore the better choice for working with high-resolution HD textures.

Now about the disadvantages:

Fewer shader cores. Mali graphics processors have fewer shader cores than competitors' products. Mali is also worse in terms of peak performance in GFLOPS. In addition, these GPUs are less suited to games that can parallelize the load on the GPU effectively.

Limited configurations. The actual gap between Mali and Adreno graphics processors is small. In practice, however, manufacturers prefer simple ready-made solutions with a very small number of compute clusters. The Mali T720, for example, supports up to eight blocks, yet the most widespread variant is the Mali T720 MP2, which has only two clusters.

Tendency to overheat. The high clock speed makes Mali solutions more versatile, but as a side effect they tend to overheat. This is why a large number of graphics clusters cannot be integrated into the chipset.

Adreno or Mali: which is better?

Based on the above, Adreno has more powerful compute blocks, better support for new technologies and no overheating problems. Mali, for its part, has popularity, affordability, a powerful rendering domain and a high clock frequency on its side and, as a consequence, priority in software optimization for these GPUs.

But these are only theoretical considerations. In reality, Mali is preferred in budget and lower mid-range models, while Adreno is the preserve of mid-range and flagship models. This is quite natural, because today graphics cannot be considered separately from the chipset.


Technological progress in the market for computers and computer components has slowed down, as it has in many science-intensive fields. As a result, video card manufacturers rename slightly improved old solutions and release them to the market. In recent years neither AMD nor NVIDIA has been shy about doing so.

That is why in this article we would like to dwell on the general principles of choosing a video card for a modern computer, so that the material stays relevant for many years: the principles by which the video card market develops have changed very little over the past decade. Most of the criteria were discussed by users of our site, MegaObzor, on the forum. So, let's begin.

How to choose a video card?

Criterion one: "Before you buy, decide what is the video card for?"

Many of us dreamed in childhood of a fast computer with a powerful video card. Once they start earning money, most people want to make those childhood dreams come true, but after buying a fast and expensive video card they either cannot find the time to make full use of it in computer games, or they keep playing the familiar Counter-Strike and World of Tanks, which are essentially undemanding of computer hardware. As a result, the money invested in the video card depreciates by 30% immediately after purchase and by a full 60-70% after a year of sitting idle, since nobody will pay more for a once-new video card on the secondary market.

That is why you should buy a video card according to your needs. If you play the old games you are used to and are not very interested in new releases, the integrated graphics cores in Intel Core i3 / Intel Core i5 / Intel Core i7 processors or the various AMD APUs are enough. The latter are significantly inferior to the Intel processors listed in CPU core performance and power consumption, so, despite the significant difference in cost, we recommend the Intel parts.

Modern motherboards can fully realize the potential of processor-integrated graphics cores; the set of video output ports soldered onto them depends on the particular model, so you will not feel short-changed.

If you like to play modern computer games, and at high resolution and detail at that, integrated graphics is out of the question: you should look straight at discrete video cards, which often cost as much as the CPU and frequently more.

Criterion number two: "At what price should I buy a graphics card for modern games?"

The video card market can be roughly divided into three categories: cards costing up to $150, cards from $150 to $300, and cards over $300.

Experience shows that under $150 you are offered either video cards from forgotten eras or low-performance models that are comparable or inferior to many modern integrated graphics cores in Intel Core i3 / Intel Core i5 / Intel Core i7 processors and AMD APUs. They are therefore worth buying only to replace the video card in an old computer, not to build a new gaming computer.

Video cards in the $150 to $300 range can be considered gaming cards. Solutions priced closer to $300 and based on the latest graphics cores can deliver acceptable performance in modern games at medium image quality settings.

Video cards costing more than $300 and based on the latest generations of graphics cores can be considered full-fledged gaming cards. As a card's technical characteristics improve, its cost grows too. When choosing such a video card, remember that your CPU must be able to unlock its full potential. This is the subject of the next criterion.

Criterion number three: "What processor is needed for a modern gaming video card?"

Since the "head" of any computer is the central processor, which sends commands to the graphics card, the performance of the graphics subsystem depends on the CPU's performance. If you buy a top-end video card but have a slow processor, you will never unlock its potential. That is why, before buying a video card, you should either buy a fast modern processor or use reviews on the Internet to find the minimum processor that is enough to unlock its potential. In any case, spare CPU performance is always good, whereas spare video card performance is money thrown to the wind.
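The bottleneck argument can be made concrete with a deliberately simplified model: assume the delivered frame rate is limited by whichever of the two components is slower. The function names and the numbers are hypothetical, and real games behave less neatly than this.

```python
def delivered_fps(cpu_fps_limit, gpu_fps_limit):
    """Simplified model: the slower component sets the frame rate."""
    return min(cpu_fps_limit, gpu_fps_limit)


def unused_gpu_fraction(cpu_fps_limit, gpu_fps_limit):
    """Share of the video card's potential that the CPU cannot feed."""
    return 1 - delivered_fps(cpu_fps_limit, gpu_fps_limit) / gpu_fps_limit


# Hypothetical pairing: a slow CPU (60 FPS ceiling) with a fast card (120 FPS)
print(delivered_fps(60, 120))        # frame rate the user actually sees
print(unused_gpu_fraction(60, 120))  # half of the card's potential is wasted

# With a fast CPU the card becomes the limit and nothing is wasted
print(unused_gpu_fraction(200, 120))
```

In this model a CPU performance margin costs nothing in delivered frames, while a video card margin is simply never used, which is the point of the paragraph above.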

Criterion number four: "Which video card manufacturer should I choose?"

Most video cards are built to the reference design, and only the various overclocked versions have a custom design. A custom design is needed to run the card at higher operating frequencies, often at higher voltage, and accordingly with more efficient cooling. Virtually all ASUS video cards have a custom design, while the other manufacturers offer clones of the reference version.

If you do not plan to overclock the graphics card, the manufacturers' names (ASUS, EVGA, Gigabyte, XFX, Palit, MSI) should mean nothing more to you than differences in the following parameters:

- price;

- the complete set of delivery (a box, disks, games, adapters, covers, labels, etc.);

- cooling system;

- presence or absence of factory overclocking;

- the warranty period from the manufacturer.

When choosing, first settle the last four points, then pick the card with the lowest price.

If you plan to overclock the card and see yourself as an overclocker, the following requirements come to the fore:

- layout of the components of the video card on the printed circuit board;

- the principle of building a core power system, video memory;

- operating temperatures of the video card with a cooling system installed from the factory;

- the presence of any selection of chips by the manufacturer;

- the availability of examples of successful modification of the video card on the Internet.

Bear in mind that overclocking is, as a rule, truly enjoyable when you have bought a video card at minimum cost and overclocked it to the level of a more expensive one. Successful overclockers gain 100% or more of the price difference when measured as the ratio of performance to purchase cost.

Criterion number five: "Which technical characteristics of a video card should you look at?"

The technical characteristics of video cards from any manufacturer and generation are easy to compare, since their basic design has not changed for many years. We list them:

- type of core architecture;

- the number of core computational units (universal shaders and raster units);

- the number of transistors used in the core;

- the fineness of the core manufacturing process;

- the core frequency;

- width of the memory bus;

- type of video memory;

- frequency of video memory operation;

- the amount of video memory;

- power consumption / heat generation;

- price.

All these data are given in the product descriptions of online stores and on the RuNet price aggregator Yandex.Market. Just open the "Specifications" section and you will get comprehensive information.

Let us dwell on these characteristics in more detail.

1. Core architecture type. This parameter determines how many operations the compute core performs per clock cycle, that is, per 1 Hz of operating frequency. Graphics cores of different architectures running at the same frequency will always show different performance, and the difference can sometimes reach 100%. The newer a manufacturer's core architecture, the more advanced it is and the more operations it performs per clock.

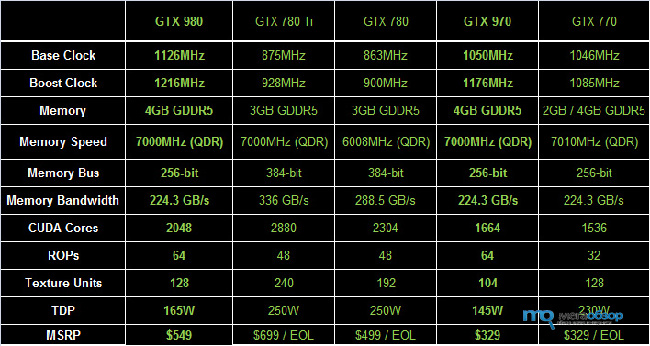

2. The number of core computational units (universal shaders and raster units) significantly affects the performance of the video card. Video cards in different price categories can be built on the same core but have a different number of active compute units. For clarity, compare the GeForce GTX 970 and GeForce GTX 980 below:

Both video cards are built on the same GM204 core, but the lower model has its number of shader units cut from 2048 to 1664. Compute units are cut for one of two reasons: either the chip came out with defective block(s), which were disabled so that cheaper cards could be built on it, or the more expensive cards are not selling, so fully working chips are cut down to make cheaper cards. For many years this let overclockers turn cheap video cards into more expensive ones by unlocking the disabled blocks. Unfortunately, recent generations of video cards rarely grant their owners such luck.
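To put the cut in proportion, the shader counts quoted above can be turned into a fraction. This is a back-of-the-envelope sketch: `enabled_fraction` is a hypothetical helper, and treating shader throughput as proportional to unit count at equal clocks is a simplifying assumption.

```python
def enabled_fraction(active_units, full_units):
    """Share of the full chip's compute units that remain active."""
    return active_units / full_units


FULL_GM204_SHADERS = 2048  # GeForce GTX 980, as quoted in the text
GTX_970_SHADERS = 1664     # GeForce GTX 970, as quoted in the text

fraction = enabled_fraction(GTX_970_SHADERS, FULL_GM204_SHADERS)
disabled = FULL_GM204_SHADERS - GTX_970_SHADERS

print(fraction)  # share of shader units left enabled
print(disabled)  # number of shader units switched off
```

The cut-down card keeps 81.25% of the shader units (384 are disabled), so at equal clocks its theoretical shader throughput would be lower by roughly the same proportion.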

3. Number of transistors used in the manufacture of the core. Another important parameter of the graphic core of the video card. This is due to the fact that the more transistors used in the production of a video card, the more it consumes electricity at the time of peak loads.

Let's return to the graphics core of the GM204 GeForce GTX 970 and GeForce GTX 980 graphics cards. As you can see, the cores of both video cards are based on the same number of transistors, in spite of the fact that the GeForce GTX 970 has a smaller number of computational units. Often, the disabled units still continue to consume electricity at a certain minimum level, so the average operating temperature at equal operating frequencies for video cards will be comparable. The latter circumstance makes the trimmed graphics card always less energy efficient than the older one when comparing the performance / power consumption ratio of electricity.

4. The fineness of the manufacturing process of the core. The modern technological process of manufacturing the cores of video cards has reached 28 nanometers. Intel's CPUs have become more sophisticated. The smaller the process, the less the core consumes electricity and the more transistors can be placed on the silicon wafer, thereby reducing the cost of production. But the latest generations of nuclei show that a decrease in the technological process increases the average operating temperature, as increasing the density of transistors leads to an increase in heat release per unit area. In any case, if adequate cooling is provided, the thinner the technological process of making the core, the better for the end user.

5. Core frequency. For identical graphics cores, the higher the operating frequency, the better, since it gives a higher level of performance.

6. Memory bus width. The wider the video card's data bus, the better. But remember that this holds only if the video cards have the same type of video memory and the same operating frequency. A striking example is the pair of top-end cards from the two competitors, the GeForce GTX 980 and the Radeon R9 290X: despite the latter's data bus being twice as wide, the GeForce GTX 980 delivers greater performance thanks to a different core architecture and higher operating frequencies.

7. Video memory type. The more modern the type of video memory, the higher the frequency it can run at and the higher its peak performance. Choose video cards with the same type of video memory as in the reference version announced by the manufacturer, NVIDIA or AMD.

8. Frequency of video memory operation. The higher the operating frequency of video memory, the better. This statement is valid when comparing two video cards with the same width of the data bus.

9. Video memory capacity. Manufacturers often blatantly mislead customers by offering, at the same price, two video cards with a larger and a smaller amount of soldered video memory. The comparable cost is usually achieved by using a more primitive type of video memory: for example, GDDR3 is soldered on instead of GDDR5. Do not fall for this trick under any circumstances: the extra video memory will give you little, while slower video memory will cause a significant loss of graphics performance.

10. Power consumption / heat dissipation. Everything is clear here: the lower the power consumption and heat output, the better. When comparing video cards, pay attention to the number of unpopulated positions on the PCB and to the quality of the components. The fewer empty pads left for capacitors and voltage regulators, the more stably the graphics card will cope with voltage drops from the power supply.

11. Price - should be minimal for the product that meets the above-listed requirements.
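Points 6 to 8 (bus width, memory type, memory frequency) can be tied together with one number: peak memory bandwidth, which is the bus width in bytes multiplied by the effective data rate per pin. Below is a minimal sketch. The function name is made up for illustration, and the inputs (256-bit at 7 Gbps for the GeForce GTX 980, 512-bit at 5 Gbps for the Radeon R9 290X) are assumed reference specifications, not figures taken from this article.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width in bytes times effective Gbit/s per pin."""
    return bus_width_bits / 8 * data_rate_gbps


# Assumed reference specifications (not from the article itself).
gtx_980 = memory_bandwidth_gb_s(256, 7.0)
r9_290x = memory_bandwidth_gb_s(512, 5.0)

print(f"GeForce GTX 980: {gtx_980:.0f} GB/s")
print(f"Radeon R9 290X:  {r9_290x:.0f} GB/s")
```

Under these assumptions the wider bus does give the Radeon more raw bandwidth, which is consistent with the point made above: bandwidth alone does not decide performance, since core architecture and operating frequencies matter as well.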

Criterion number six: "Are the PCI-Express 16x 3.0 / PCI-Express 16x 2.0 / PCI-Express 16x 1.0 video card interfaces compatible or not?"

Yes, the interfaces are backward compatible. But, naturally, the more modern the interface your motherboard supports, the better. The key factor is the number of active PCI-Express lanes - 16x or 8x. With the number of lanes cut to 8x, top-end video cards will show lower performance than in a full-fledged PCI-Express 16x 3.0 or PCI-Express 16x 2.0 slot.

Criterion number seven: "Do I need SLI / CrossFire support?"

This option is needed if you are either very rich or at risk of becoming poor in the future. In the first case, if the motherboard supports two or more video cards, you will get extreme performance by combining their computing power.

In the second case, a few years later you can buy a second identical video card for next to nothing on the secondary market, combine it with the existing one, and gain around 40-70% in performance depending on the game. How relevant this will be by then is unclear, since in recent years performance has almost doubled with every two generations of graphics solutions.

Criterion number eight: "Which is better - NVIDIA or AMD?"

Each manufacturer has its own core architecture, printed circuit board design and list of supported technologies. As a consequence, their cards differ in performance, power consumption, heat output and the ability to perform related functions.

For example, many video converters can accelerate conversion using the computational capabilities of the video card's graphics chip. The degree of acceleration depends on the performance of the core and is quite significant in some conversion modes. A pleasant bonus, you will agree.

Criterion number nine: "What else should you pay attention to when buying a new video card?"

First, the size of the video card you are buying. Today's market offers both dual-slot and single-slot video cards. Some models are quite short and fit easily into many micro-ITX cases. If you have such a case, take a video card of minimum size; if you have a full "tower", take a standard one - you will gain in cooling efficiency for the power components, graphics core and video memory.

Second, the power of the installed power supply. Most modern gaming graphics cards require additional external 12-volt power. The power supply must therefore have several 12-volt lines and the required number of PCI-Express auxiliary power plugs, or at least you need adapters for them. The maximum power of the power supply should be 20-25% higher than your system consumes at maximum load - then it will provide maximum efficiency when converting 220 volts into 12, 5 and 3.3 volts for the consumers inside the computer.
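The 20-25% headroom rule for the power supply is simple arithmetic and can be sketched as follows. The function name and the 400 W peak load are hypothetical values chosen only for illustration; measure or estimate the real consumption of your own system.

```python
def recommended_psu_watts(peak_load_watts: float, headroom: float) -> float:
    """Return the PSU rating that leaves the given fractional headroom over peak load."""
    return peak_load_watts * (1 + headroom)


# Hypothetical system drawing 400 W at maximum load.
peak = 400
low = recommended_psu_watts(peak, 0.20)
high = recommended_psu_watts(peak, 0.25)
print(f"Look for a power supply rated at roughly {low:.0f}-{high:.0f} W")
```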

Criterion number ten: "What do Retail (RTL) and OEM mean in video card names?"

These labels describe what is included with the product. OEM video cards are supplied to computer manufacturers, so they come without the usual box, with a minimal bundle, and reach the retail market as if "by accident". Typically this is a video card in a bag with a driver disc. In return, you get the same card as the "boxed" version at the lowest price.

Example of an OEM video card package

The abbreviation Retail or RTL means that the video card is "boxed": it is intended for retail sale and its package includes everything stated on the manufacturer's website.

These are perhaps all the general points you need to know when choosing a video card for a gaming computer.

When reading the specifications of smartphones and tablets, most users first of all pay attention to the characteristics of the CPU, random access memory, screen size, built-in storage and camera. At the same time, they sometimes forget about such an important component of the device as the graphics processor (GPU). Typically, a given company's graphics processor is paired with a specific CPU. For example, the well-known Qualcomm Snapdragon processors are always integrated with Adreno graphics chips. The Taiwanese company MediaTek usually supplied its chipsets with PowerVR graphics processors from Imagination Technologies, and more recently with ARM Mali.

The Chinese Allwinner processors usually come with Mali graphics processors. Broadcom CPUs work together with VideoCore graphics processors. Intel uses PowerVR and NVIDIA graphics with its mobile processors. The resource s-smartphone.com has compiled a rating of the thirty best graphics processors, in terms of their parameters, designed for use in smartphones and tablets. Every modern user should know about it.

1. Qualcomm Adreno 430, used in the smartphone and;

3. PowerVR GX6450;

4. Qualcomm Adreno 420;

7. Qualcomm Adreno 330;

8. PowerVR G6200;

9. ARM Mali-T628;

10. PowerVR SGX544 MP4;

11. ARM Mali-T604;

12. NVIDIA GeForce Tegra 4;

13. PowerVR SGX543 MP4;

14. Qualcomm Adreno 320;

15. PowerVR SGX543 MP2;

16. PowerVR SGX545;

17. PowerVR SGX544;

18. Qualcomm Adreno 305;

19. Qualcomm Adreno 225;

20. ARM Mali-400 MP4;

21. NVIDIA GeForce ULP (Tegra 3);

22. Broadcom VideoCore IV;

23. Qualcomm Adreno 220;

24. ARM Mali-400 MP2;

25. NVIDIA GeForce ULP (Tegra 2);

26. PowerVR SGX540;

27. Qualcomm Adreno 205;

28. Qualcomm Adreno 203;

29. PowerVR SGX531;

30. Qualcomm Adreno 200.

The graphics processor is an essential component of a smartphone. Graphics performance depends on its technical capabilities, above all in the most graphically intensive applications - games. Since the rating was compiled in the first half of the year, some changes may have occurred since then. What do you think: does the position of the processors in this rating match their real performance?

"Why is this insert needed? Give us more cores, megahertz and cache!" - the average computer user asks and exclaims. Indeed, when a computer uses a discrete graphics card, there is no need for integrated graphics. I admit I was being a little sly in saying that a CPU without built-in video is now harder to find than one with it. Such platforms do exist: LGA2011-v3 for Intel chips and AM3+ for AMD "stones". In both cases we are talking about top-end solutions, and you have to pay for them. On the mainstream platforms, such as Intel LGA1151 / 1150 and AMD FM2+, all processors come with integrated graphics. In notebooks the "built-in" is indispensable, if only because mobile computers run longer on battery in 2D mode. In desktops, integrated video makes sense in office builds and so-called HTPCs. First, we save on components. Second, we save on power consumption. Nevertheless, AMD and Intel have recently been claiming in earnest that their built-in graphics is graphics to beat all graphics, suitable even for gaming. This is what we will check.

300% increase

For the first time, graphics integrated into the processor (iGPU) appeared in Intel Clarkdale solutions (first-generation Core architecture) in 2010. Integrated into the processor, specifically: this is an important qualification, since the very concept of "embedded video" took shape much earlier. Intel introduced it back in 1999, with the release of the 810 chipset for Pentium II / III. In Clarkdale, the integrated HD Graphics was implemented as a separate die located under the processor's heat spreader. The graphics was made on the 45 nm process, already old at the time, while the main computational part was built to 32 nm standards. The first Intel solutions in which the HD Graphics unit "settled" on a single die together with the rest of the components were the Sandy Bridge processors.

Since then, graphics integrated into the "stone" has become the de facto standard for the mainstream LGA115x platforms. The Ivy Bridge, Haswell, Broadwell and Skylake generations all received integrated video.

Graphics embedded in the processor appeared 6 years ago

Unlike the computational part, the "built-in" in Intel solutions is progressing noticeably. HD Graphics 3000 in the desktop Sandy Bridge K-series processors has 12 execution units. HD Graphics 4000 in Ivy Bridge has 16, HD Graphics 4600 in Haswell has 20, and HD Graphics 530 in Skylake has 25. The frequencies of both the GPU and the RAM keep growing. As a result, the performance of the built-in video has increased 3-4 times in four years! And there is also the much more powerful Iris Pro series of "built-ins", used in certain Intel processors. A 300% gain over four generations.
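To put these figures in perspective, here is a small sketch that compares the growth in unit count with the quoted performance gain. The unit counts are the ones given in the paragraph above; the helper names are made up for illustration. It also shows how a "times" factor maps to a percentage: a 4x result corresponds to a 300% increase.

```python
# Unit counts as quoted in the text above.
unit_counts = {
    "HD Graphics 3000 (Sandy Bridge)": 12,
    "HD Graphics 4000 (Ivy Bridge)": 16,
    "HD Graphics 4600 (Haswell)": 20,
    "HD Graphics 530 (Skylake)": 25,
}


def growth_factor(old: float, new: float) -> float:
    """How many times larger the new value is than the old one."""
    return new / old


def percent_increase(old: float, new: float) -> float:
    """Increase over the old value, in percent (a 4x factor is +300%)."""
    return (growth_factor(old, new) - 1) * 100


first = unit_counts["HD Graphics 3000 (Sandy Bridge)"]
last = unit_counts["HD Graphics 530 (Skylake)"]
print(f"Unit count grew {growth_factor(first, last):.2f}x "
      f"(+{percent_increase(first, last):.0f}%)")
print(f"A 4x performance gain equals +{percent_increase(1, 4):.0f}%")
```

The unit count alone roughly doubles, so the rest of the quoted 3-4x gain has to come from the other factors the text mentions, such as higher GPU and RAM frequencies.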

Graphics built into the processor is the segment in which Intel has to catch up with AMD. In most cases the "red" solutions are faster. There is nothing surprising in this: AMD develops powerful gaming video cards, and the integrated graphics of its desktop processors uses the same architecture and the same technology: GCN (Graphics Core Next) and 28 nanometers.

AMD's hybrid chips debuted in 2011. The Llano family was the first in which integrated graphics was combined with the computational part on a single die. AMD's marketers realized that competing with Intel on its terms would not work, so they introduced the term APU (Accelerated Processing Unit, a processor with a video accelerator), although the "reds" had been nurturing the idea since 2006. Llano was followed by three more generations of "hybrids": Trinity, Richland and Kaveri (Godavari). As I said before, in modern chips the built-in video is architecturally no different from the graphics used in discrete Radeon 3D accelerators. As a result, in the chips of 2015-2016 half of the transistor budget is spent on the iGPU.

The most interesting thing is that the development of APUs influenced the future of... game consoles. Both the PlayStation 4 and the Xbox One use an AMD Jaguar chip: eight cores, with graphics on the GCN architecture. Below is a table with characteristics. Radeon R7 is the most powerful integrated video the "reds" have to date. The block is used in AMD A10 hybrid processors. Radeon R7 360 is a discrete entry-level video card that, in 2016, can be considered a gaming card. As you can see, the modern "built-in" is only slightly inferior to the low-end adapter in terms of characteristics. Nor can it be said that the graphics of game consoles have outstanding characteristics.

The very appearance of processors with integrated graphics in many cases removes the need to buy a discrete entry-level adapter. Today, however, the integrated video of AMD and Intel is already encroaching on the sacred: the gaming segment. For example, there exists a quad-core Core i7-6770HQ (2.6 / 3.5 GHz) on the Skylake architecture. It uses Iris Pro 580 integrated graphics and 128 MB of eDRAM as a fourth-level cache. The integrated video consists of 72 execution units running at 950 MHz. This is more powerful than the Iris Pro 6200 graphics, which has 48 execution units. As a result, the Iris Pro 580 is faster than discrete graphics cards such as the Radeon R7 360 and GeForce GTX 750, and in some cases competes with the GeForce GTX 750 Ti and Radeon R7 370. There is more to come when AMD moves its APUs to a 16-nanometer process and both manufacturers eventually start using memory together with the built-in graphics.

Testing

To test modern built-in graphics, I took four processors: two each from AMD and Intel. All the chips have different iGPUs. In the AMD A8 hybrids (plus the A10-7700K), the Radeon R7 video comes with 384 unified processors. The older series, A10, has 128 blocks more. The flagship also has a higher frequency. There is also the A6 series, where graphics potential is quite poor, because it uses the "built-in" Radeon R5 with 256 unified processors. I did not consider it for Full HD games.

The most powerful integrated graphics are AMD A10 and Intel Broadwell

As for Intel products, the HD Graphics 530 module is used in the most popular Skylake Core i3 / i5 / i7 chips for the LGA1151 platform. As I said, it contains 25 execution units: 5 more than the HD Graphics 4600 (Haswell), but 23 fewer than the Iris Pro 6200 (Broadwell). The junior quad-core Core i5-6400 was used in the test.

| | AMD A8-7670K | AMD A10-7890K | Intel Core i5-6400 | Intel Core i5-5675C |
|---|---|---|---|---|
| Process technology | | | | |
| Generation | Kaveri (Godavari) | Kaveri (Godavari) | | |
| Platform | | | | |
| Number of cores / threads | | | | |
| Clock frequency | 3.6 (3.9) GHz | 4.1 (4.3) GHz | 2.7 (3.3) GHz | 3.1 (3.6) GHz |
| Level 3 cache | | | | |
| Built-in graphics | Radeon R7, 757 MHz | Radeon R7, 866 MHz | HD Graphics 530, 950 MHz | Iris Pro 6200, 1100 MHz |
| Memory controller | DDR3-2133, dual-channel | DDR3-2133, dual-channel | DDR4-2133, DDR3L-1333/1600, dual-channel | DDR3-1600, dual-channel |
| TDP | | | | |

Below are the configurations of all test benches. When it comes to the performance of built-in video, the choice of RAM deserves due attention, since it also affects how many FPS the integrated graphics will deliver. In my case, DDR3 / DDR4 kits operating at an effective frequency of 2400 MHz were used.

Test stands

These kits were chosen for a reason. Officially, the built-in memory controller of Kaveri processors works with DDR3-2133 memory, but motherboards based on the A88X chipset also support DDR3-2400 thanks to an additional divider. Intel chips paired with the flagship Z170 / Z97 Express logic also work with faster memory, and the BIOS offers many more presets. As for the test stands, the LGA1151 platform used a dual-channel Kingston Savage HX428C14SB2K2/16 kit, which overclocks to 3000 MHz without problems. The other systems used ADATA AX3U2400W8G11-DGV memory.

A little experiment. For Core i3 / i5 / i7 processors on the LGA1151 platform, using faster memory to speed up graphics is not always rational. For example, for the Core i5-6400 (HD Graphics 530), replacing the DDR4-2400 kit with DDR4-3000 added only 1.3 FPS in Bioshock Infinite. In other words, with the graphics quality settings I chose, performance was limited by the graphics subsystem itself.

With hybrid AMD processors the situation looks better. Raising the RAM speed gives a more impressive FPS gain: across the 1866-2400 MHz range we see an increase of 2-4 frames per second. I think using RAM with an effective frequency of 2400 MHz in all test benches is a rational solution, and one closer to reality.
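Integrated graphics has no memory of its own and shares system RAM with the CPU, which is why RAM speed matters here. The theoretical peak bandwidth can be sketched as below, assuming a standard 64-bit (8-byte) channel for DDR3 and DDR4; the function name is made up for illustration, and real throughput will be lower than these peak figures.

```python
def ram_bandwidth_gb_s(transfers_mt_s: int, channels: int = 2,
                       channel_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s for the given effective rate in MT/s.

    Assumes each channel is 64 bits (8 bytes) wide.
    """
    return transfers_mt_s * channel_bytes * channels / 1000


# Effective frequencies mentioned in the text, in dual-channel mode.
for rate in (1866, 2133, 2400, 3000):
    print(f"{rate} MT/s, dual-channel: {ram_bandwidth_gb_s(rate):.1f} GB/s")
```

Going from 1866 to 2400 MT/s raises the theoretical peak from about 29.9 to 38.4 GB/s, which is in line with the observation that faster memory helps only as long as the graphics core itself is not the limiting factor.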

The speed of the integrated graphics will be judged by the results in thirteen games. I conditionally divided them into four categories. The first includes popular but undemanding PC hits. They are played by millions, so such games ("tanks", World of Warcraft, League of Legends, Minecraft belong here) have no right to be demanding. We can expect a comfortable FPS level at high graphics quality settings in Full HD resolution. The remaining categories were simply split into three time periods: games of 2013/14, 2015 and 2016.

The performance of the integrated graphics depends on the frequency of the RAM

The graphics quality was selected individually for each program. For undemanding games, these are predominantly high settings. For the other applications (with the exception of Bioshock Infinite, Battlefield 4 and DiRT Rally), low graphics quality. After all, we are testing integrated graphics in Full HD resolution. Screenshots describing all the graphics quality settings are located in the same name. We will treat 25 fps as the playable threshold.

HD

The main purpose of the test is to study the performance of integrated graphics in Full HD, but we will start with the lower HD resolution. The Radeon R7 iGPUs (in both the A8 and the A10) and the Iris Pro 6200 felt quite comfortable in these conditions. But the HD Graphics 530 with its 25 execution units in some cases produced a completely unplayable picture, specifically in five games out of thirteen: in Rise of the Tomb Raider, Far Cry 4, The Witcher 3: Wild Hunt, Need for Speed and XCOM 2 there is no room left to lower the graphics quality. Obviously, in Full HD the integrated video of the Skylake chip is headed for complete failure.

HD Graphics 530 already gives up at 720p

The Radeon R7 graphics in the A8-7670K failed to cope with three games, the Iris Pro 6200 with two, and the A10-7890K with one.

Interestingly, there are games in which the integrated video of the Core i5-5675C seriously outpaces the Radeon R7: for example, Diablo III, StarCraft II, Battlefield 4 and GTA V. At low resolution, the result is affected not only by the 48 execution units but also by CPU dependence, as well as by the fourth-level cache. At the same time, the A10-7890K beat its opponent in the more demanding Rise of the Tomb Raider, Far Cry 4, The Witcher 3 and DiRT Rally. The GCN architecture shows itself well in modern (and not so modern) hits.

Full HD

For greater clarity, let's add to the built-in graphics tests a bundle of an FX-4300 processor and a discrete GeForce GTX 750 Ti video card, as something comparable. The cost is easy to calculate: the chip costs 4000 rubles, the adapter 8500 rubles. The duo is 1000 rubles more expensive than the A10-7890K, 5500 rubles more expensive than the A8-7670K and 5000 rubles more expensive than the A10-7800, but 7500 rubles cheaper than the Core i5-5675C.
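The price comparison can be worked through explicitly. The sketch below uses only the ruble figures quoted in the paragraph above and derives the implied price of each processor from its stated difference to the bundle; the variable names are illustrative.

```python
# Prices quoted in the text, in rubles.
bundle_price = 4000 + 8500  # FX-4300 plus GeForce GTX 750 Ti

# How much more the bundle costs than each chip (negative means the bundle is cheaper).
bundle_premium = {
    "A10-7890K": 1000,
    "A8-7670K": 5500,
    "A10-7800": 5000,
    "Core i5-5675C": -7500,
}

# Implied price of each processor on its own.
implied_price = {chip: bundle_price - diff for chip, diff in bundle_premium.items()}

print(f"Bundle: {bundle_price} rubles")
for chip, price in implied_price.items():
    print(f"{chip}: {price} rubles")
```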

In Full HD with maximum graphics quality settings, none of the chips showed a playable FPS in Dota 2. Serious drops are observed, and in MOBA games they will seriously affect the final result. The solution is simple: lower the graphics quality settings from "High" to "Medium". In Diablo III and StarCraft II you can play with the parameters we set, except that the HD Graphics 530 shows a drop in minimum FPS in StarCraft II.

Among the games released in 2015, only GTA V is really comfortable to play, and there is enough headroom to raise the graphics quality settings. As I said, as the load on the built-in video increases, the FPS levels of the A10-7890K and Core i5-5675C in the game about grand auto thefts become almost equal. The Witcher 3: Wild Hunt and Fallout 4 are not playable; you have to reduce the resolution to 1600x900 pixels, for example.

With the games released in 2016, it is better not to bother with integrated graphics at all. Here you cannot do without a discrete video card, even an inexpensive gaming one.

Let's sum up with my favorite device, a dry statement of facts: the HD Graphics 530 of the Core i5-6400 processor showed a playable FPS in two games out of thirteen, and the remaining chips in only four. Lowering the graphics quality can really raise the frame count only in DiRT Rally, Bioshock Infinite and Dota 2. In other cases you will have to change the resolution. At the same time, the "FX-4300 + GeForce GTX 750 Ti" bundle coped with all the games.

Finally

The miracle did not happen. If we are talking about gaming in Full HD, the integrated graphics of modern CPUs can cope only with undemanding multiplayer games and popular hits of the past. Neither the Radeon R7 nor the Iris Pro 6200 can cope with the modern AAA games released this year, and the rest even less so. Therefore, to rely on a hybrid processor with a powerful iGPU makes sense only. In any other situation, it is better to buy a more capable discrete graphics card. It's trite, but it's true.

The integrated graphics of CPUs will continue to progress rapidly. Intel already has the Iris Pro 580 core. Let's see whether something similar appears in the desktop segment. AMD will soon release its first 16-nanometer chips. There are no specific dates, but I am sure that the "red" APUs will continue to evolve. As they say, wait and see.

Yes, in some cases the Iris Pro 6200 integrated graphics is faster than the Radeon R7, but the Core i5-5675C itself is much more expensive than any existing APU for the FM2+ platform. If you need a system unit for undemanding games, it is still better (cheaper) to take a hybrid AMD chip.