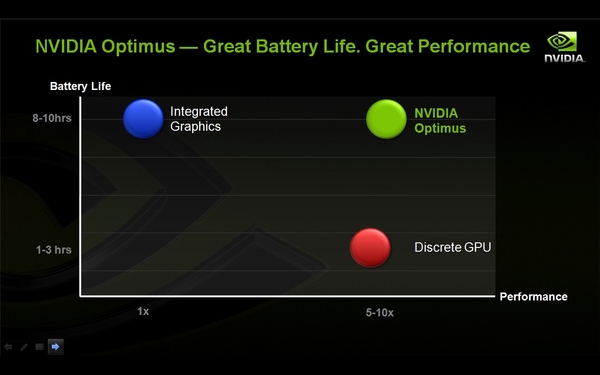

NVIDIA Optimus technology is designed to combine high laptop performance with long battery life.

The technology's slogan is simple: performance + battery life.

That is, NVIDIA's main message is that a laptop can deliver high performance without sacrificing battery life.

The laptop has an integrated graphics card and a discrete one.

The idea is that you use the first for "office" work and the second whenever hardware acceleration is needed: games, HD video (including Full HD in browsers), multimedia work and so on.

Previously, switching between graphics cards required your participation: it was done manually.

Above the keyboard is a power button.

Switching required either rebooting the laptop or restarting the OS; in the best case nothing was needed, but some programs could refuse to work and start asking for a reboot.

In any case, the process took 15 seconds or more.

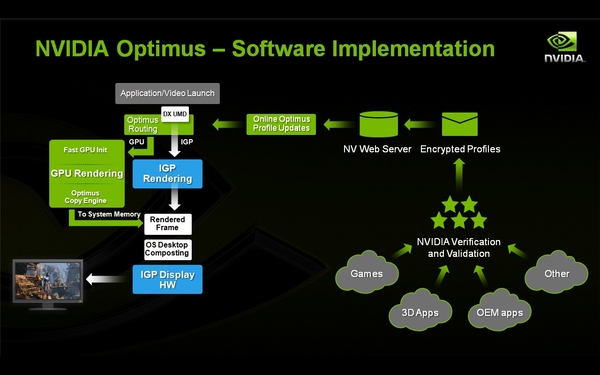

Optimus works differently: when hardware acceleration is needed, the system turns on the discrete graphics by itself.

It happens in literally a second.

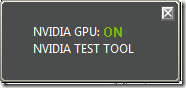

NVIDIA has a special indicator that shows when the discrete graphics are working and when they are not.

When you work with text, the integrated graphics card is used; open a browser page that plays Full HD video, and the discrete graphics turn on immediately.

Switch to another window that contains only text, and the system turns the discrete graphics card off again.

We will not go deep into the technology, but the company's representative assures us that while the discrete card is shut down, its power supply is cut off completely.

That is, you can literally pull out the card. The experiment was carried out: a card with a PCI Express interface was simply pulled out of the test stand, and the system did not complain or notice anything, continuing to work on the integrated graphics.

Optimus works only with Intel processors.

NVIDIA says there are no barriers on its side: AMD was invited to participate in the technology but declined.

As a result, Optimus can be found in notebooks with Intel Core 2 Duo, Core i7, Core i5, Core i3 and Atom N4xx processors.

The discrete graphics can be a next-generation GeForce M, GeForce 300M or GeForce 200M card, as well as the new generation of ION.

Optimus technology applies to all classes of laptops: mainstream, gaming, thin and light, netbooks.

A question may arise about how the technology works: can the degree of shutdown be adjusted, for example by deactivating only some of the graphics processing units in the video card?

The answer is no: Optimus works strictly on an on/off basis.

System requirements for Total War: Warhammer 2

Total War: Warhammer 2 goes on sale on September 28, which means fans of the series need to know how powerful a computer is required to play the new release comfortably and without a "cinematic" frame rate.

Minimum requirements (25-35 frames per second in the campaign, duels and battles of 20 units against 20, on low graphics settings at a resolution of 1280x720) are as follows:

- processor: Intel Core 2 Duo with a clock speed of 3.0 GHz;

- 4 GB of RAM, or 5 GB when using an integrated graphics processor;

- graphics card: NVIDIA GeForce GTX 460 or AMD Radeon HD 5770 or Intel HD 4000;

- 64-bit operating system Windows 7.

- processor: Intel Core i5-4570 with a clock speed of 3.2 GHz;

- 8 GB of RAM;

- graphics card: NVIDIA GeForce GTX 760 or AMD Radeon R9 270X;

- 60 GB of free space on the drive;

Optimum requirements (60 frames per second in the campaign, duels and battles of 20 units against 20, on ultra-high graphics settings at 1920x1080 resolution):

- processor: Intel Core i7-4790K with a clock speed of 4.0 GHz;

- 8 GB of RAM;

- graphics card: NVIDIA GeForce GTX 1070;

- 60 GB of free space on the drive;

- 64-bit operating system, Windows 7 or newer.

Fall Creators Update for Windows 10 will bring a reworked Game Mode

Microsoft recently announced the release of another major update for the Windows 10 operating system, called Fall Creators Update.

It will be available on October 17. The main innovations in the upcoming update are support for the Windows Mixed Reality platform, on which companies such as ASUS, Dell, Acer, HP and Lenovo are developing a number of VR headsets, and a redesigned Game Mode.

"In the Fall Creators Update, we have redesigned Game Mode, which will now allow games to use all the computing power of your device in the same way as if it were an Xbox game console," the official blog says.

Unfortunately, Microsoft did not disclose details about how the new Game Mode will work or how much better it will be than the game mode that appeared in Windows 10 with the spring update.

The latter had no real effect on frame rates in games and, according to many gamers, is a completely useless feature.

YouTube: new design and logo, changes in functionality

Google introduced an updated YouTube video service, changing not only the logo but also the functionality of the mobile application and the web version.

As for the logo, the signature white play button on a red background now sits next to the YouTube name.

The new YouTube design follows the Material Design concept, simplifying the interface and removing everything unnecessary.

The web version of YouTube has gained a night mode, which should reduce eye strain in the dark.

Notably, YouTube has added support for vertical video, which will now play on mobile devices without black bars at the edges.

By double-tapping the screen you can fast-forward; in addition, the mobile version lets you change the playback speed.

All these and other changes will be rolled out gradually; a full transition will take some time.

Patch 9.20 for World of Tanks adds the "General Battle" mode

Wargaming.net has released a new update for the multiplayer tank action game World of Tanks.

Patch 9.20 adds the "General Battle" mode with 30-versus-30 battles, as well as a new branch of Chinese tank destroyers, whose main advantages are combat versatility, a large view range and good camouflage.

The "General Battle" mode is the developers' response to players' requests for massive battles in the game.

In it, teams of 30 players compete on the new Nebelburg map, whose area is twice that of a standard map - 2 km².

Only tier 10 vehicles can take part in general battles, and the reward for victory includes bonds, a new currency that buys pre-battle directives and improved equipment to further strengthen your tank.

For a long time now, laptops have often had two video cards. One, weak (integrated), may be part of the chipset or the processor and is meant for saving energy when high performance is not required; the second, more powerful (discrete, NVIDIA or Radeon), is used for games and heavy applications that need more serious performance.

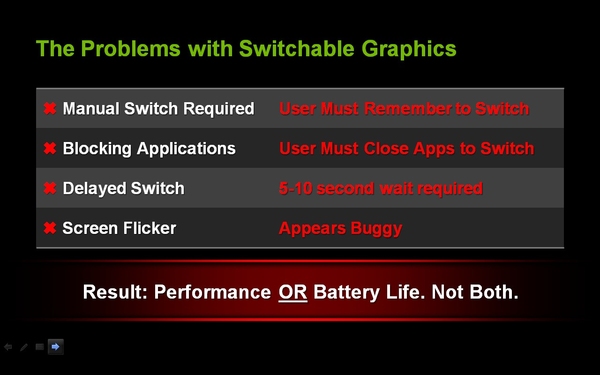

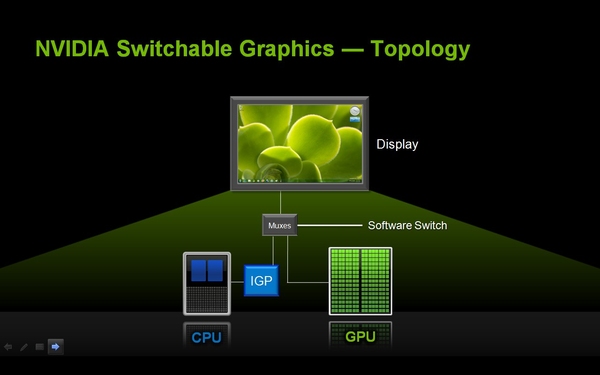

Only the implementation of this approach has changed. At first, switching video cards required rebooting the laptop; later it was enough to press a dedicated button on the case or in a program, although the image could glitch, up to a reboot, because the driver was switched and the frame buffer was transferred to the other card.

The latest trend is automatic switching of video cards according to load. For NVIDIA graphics, this technology is called Optimus.

A technology designed to simplify working with the laptop and automate video card switching often turns out to be problematic in practice: the powerful discrete video card sometimes does not turn on when it is needed, and determining which card is working at the moment and which applications it serves (i.e. when it turns on) can be quite difficult. The point is that in this case the image (even when the powerful discrete card is rendering) is output through the integrated Intel video. This is a necessity and a feature of the implementation: it removes the need to switch the driver completely and to transfer images from one video card to another.

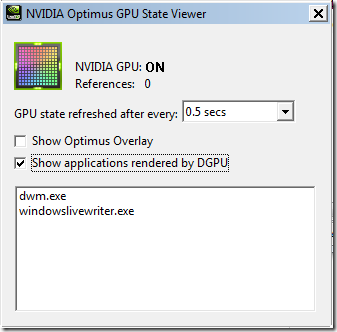

For the system this approach is ideal: it sees one working graphics card, with no headaches over detecting switched hardware or transferring the image buffer. From a purely user's point of view it is also a great option: why would you need to know which card is working? It works, and works well. But when you do need to know, it is a problem. Until recently there were no built-in tools for determining which video processor is rendering right now and in which program. In new versions of the NVIDIA driver it is easy to see whether the discrete card is in use: an icon near the clock shows it. However, this is not always available, especially on older laptops. Therefore, to see which video card is in use and, more importantly, the list of applications rendered on it, the NVIDIA Optimus Tools are used.

You can download it from here (letitbit) or from here (turbobit). Maybe I'm a fool, but I did not find the utility on the official site, so I cannot give a direct link. The archive contains two folders, one for 32-bit systems and one for 64-bit, plus GPU-Z, an information utility that gives full details about your video card.

NVGPUStateViewer.exe (left) shows the current status of the NVIDIA GPU: enabled or disabled.

NvOptimusTestViewer.exe (right): check the "Show applications rendered by DGPU" checkbox to see the list of programs rendered on the NVIDIA chip.

Two more points: NVIDIA Optimus is NOT SUPPORTED in Windows XP and Linux. For XP it never will be; the most you can do is disable the technology in the BIOS (not all laptops allow this; it is a rarity, more likely found on older models where Optimus is not "pureblood"). For Linux there is a chance that an Optimus solution will appear, but so far it is rather small. There is the "Bumblebee" project, which gives Linux users some hope, but for now it is an inferior analog of Optimus, a surrogate with a bunch of problems and caveats.

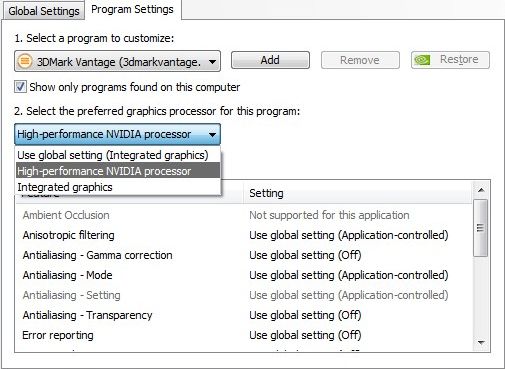

There are two ways to run a game on a particular video card. The first: right-click the game icon, choose "run with a graphics processor", then "High-performance Nvidia processor". The game will now start on the NVIDIA video card.

So that you do not have to select the video card manually at every start, choose "change the graphics processor by default". The NVIDIA settings will open, where you select the game's executable (.exe) from the game folder. Keep in mind that some games need not just one executable added but also accompanying ones. For Skyrim, for example, you need to add both TESV.exe and skyrimlauncher.exe.

One more thing: want 3D on the TV? No luck. Optimus does not support 3D output to external displays, because the image is always output through the Intel card. This is also the reason the integrated card cannot be disabled.

As an additional bonus, an Optimus FAQ from NVIDIA.

Question: Is Optimus technology available for discrete graphics cards?

Answer: No. Optimus is offered only for complete systems, such as laptops and all-in-one PCs.

Question: Do NVIDIA Optimus graphics solutions lose performance because the image has to be output through the Intel IGP?

Answer: The loss is insignificant, within ±3%.

Question: Will NVIDIA produce mixed driver packages for Optimus GPU and Intel IGP?

Answer: No, the Optimus graphics driver is a separate package, like the standard NVIDIA driver package. Intel GPUs use the Intel driver; the Intel and NVIDIA drivers can be updated independently.

Question: Will Optimus support 3D Vision technology on both the NVIDIA GPU and the Intel IGP?

Answer: No, 3D Vision is only available for displays directly connected to a discrete NVIDIA graphics processor.

Question: Does Optimus support SLI?

Answer: No, the Optimus + SLI combination is currently not supported.

Question: Do the Intel IGP and the NVIDIA GPU work simultaneously in Optimus systems? Can they be used for 3D rendering together?

Answer: The Intel IGP is always active; at minimum it is responsible for the desktop image. Beyond that, the Optimus driver decides for each application separately which graphics processor renders it. So it is possible for some 3D applications to run on the GPU and others on the IGP.

Question: How can I determine the current state of the GPU (active / disabled) in an Optimus system?

Answer: From the user's point of view, there are no special indicators of the GPU state. To the operating system, the GPU looks available all the time. If the GPU is not used for rendering and the displays connected to it are inactive, the Optimus software turns the GPU off.
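The shutdown rule in this answer can be written down as a small sketch. This is only an illustration of the stated condition, not NVIDIA's driver logic; the `GpuStatus` type and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GpuStatus:
    """Hypothetical snapshot of what the discrete GPU is being used for."""
    rendering_apps: int       # applications currently rendered on the discrete GPU
    active_gpu_displays: int  # active displays wired directly to the discrete GPU


def gpu_should_be_powered(status: GpuStatus) -> bool:
    # Per the FAQ: the GPU is turned off only when it renders nothing
    # AND no display connected to it is active.
    return status.rendering_apps > 0 or status.active_gpu_displays > 0


if __name__ == "__main__":
    print(gpu_should_be_powered(GpuStatus(rendering_apps=0, active_gpu_displays=0)))
    print(gpu_should_be_powered(GpuStatus(rendering_apps=1, active_gpu_displays=0)))
```

Note that an active display on a GPU output keeps the chip powered even when no application is rendering on it.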

Question: How can I determine whether the GPU or the IGP is rendering the current application in an Optimus system?

Answer: From the user's point of view, there are no special indicators of which graphics processor performs the rendering. In the NVIDIA control panel, you can set preferences for a specific application, and also enable a special interface for selecting a graphics adapter manually. For displays connected directly to the GPU, the rendering is always performed by the GPU.

Question: What do the terms "IGP rendering" and "GPU rendering" mean for applications in Optimus?

Answer: In Optimus systems, an application's graphics or general-purpose computations can be performed on one graphics processor while the result is displayed through another. For example, a display connected to the IGP can show both a picture computed by the IGP and a picture computed by the GPU. The choice is made for each application separately and is completely hidden from the user, although it can be configured in the NVIDIA control panel. For displays connected directly to the GPU, rendering is always performed by the GPU.

Question: What do the terms "IGP display" and "GPU display" mean in Optimus?

Answer: An IGP display, as the name suggests, is connected to the Intel IGP. Most displays in Optimus systems, including laptop panels, are connected to the IGP. The IGP displays both IGP-rendered and GPU-rendered images, depending on which processor Optimus selected to render the application. GPU displays are connected directly to the outputs of the discrete NVIDIA GPU and may be supported by some systems, for example for HDMI output on the PineTrail platform. Images of applications shown on GPU displays are always prepared on the GPU.

Question: Is there a "master switch" in the Optimus platform to force all applications to render on the IGP, or all on the GPU?

Answer: Some settings are provided only for specific cases and should not be used without reason. The GPU always turns on when it is needed. Forcing rendering on the IGP can have serious consequences for performance (for example, some video content will not play) and will confuse the user with application behavior that differs from launch to launch. The main advantage of Optimus is precisely that it hides the transitions from the user.

Question: Is HDCP content protection supported in Optimus systems?

Answer: The HDCP link is established between the display and the graphics processor to which it is directly connected. For IGP displays, the process is managed by the IGP itself; NVIDIA is not involved. For GPU displays, everything is handled by the standard mechanism for storing encryption keys, as in conventional NVIDIA solutions.

Question: How is audio transmission [to the display] supported in the Optimus platform?

Answer: Audio is handled by the graphics adapter to which the display is directly connected. The NVIDIA audio driver is not used for IGP connections; for connections to the GPU, the driver works as in any system with multiple graphics adapters.

Question: How is video playback handled in the Optimus platform?

Answer: The video capabilities of the Intel IGP differ between chipsets. While Arrandale supports full HD video acceleration without outside help, PineTrail cannot boast of this. When the NVIDIA GPU can provide benefits in performance or power consumption, the Optimus driver uses the GPU to improve the user experience. For displays connected to the IGP, SD video is always processed by the IGP, as is HD on most chipsets, except PineTrail, where the GPU is brought in. If the video is copy-protected (for example, Blu-ray playback) and decoded on the GPU, quality may be reduced to SD on a display connected to the IGP. Video output to displays connected directly to the GPU is always processed by the GPU.

Question: Does connecting displays to the GPU outputs affect its performance for applications output through the IGP in the Optimus platform?

Answer: Optimus technology works regardless of whether displays are connected to the GPU.

Question: What display combinations are possible between the GPU and the IGP in the Optimus system?

Answer: The configuration of the IGP displays is determined entirely by the IGP itself and its drivers. From zero to two displays connected to the IGP can be used at any time. If the system implements GPU outputs, one GPU display is supported in addition. In total, the two display adapters support up to three displays simultaneously. Windows does not support clone mode for displays connected to different graphics adapters, only for displays on one adapter, so across adapters only extended desktop mode is available.
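The display limits from this answer can be checked with a few lines of code. The sketch below only encodes the numbers quoted above; the function names and the adapter labels `"igp"` and `"gpu"` are made up for illustration.

```python
MAX_IGP_DISPLAYS = 2  # "from zero to two displays" on the IGP
MAX_GPU_DISPLAYS = 1  # one additional display if GPU outputs exist


def is_supported(igp_displays: int, gpu_displays: int, has_gpu_outputs: bool) -> bool:
    """Return True if the display combination fits the limits from the FAQ."""
    if igp_displays < 0 or gpu_displays < 0:
        return False
    if gpu_displays > 0 and not has_gpu_outputs:
        return False  # no GPU outputs are wired on this system
    return igp_displays <= MAX_IGP_DISPLAYS and gpu_displays <= MAX_GPU_DISPLAYS


def clone_allowed(adapter_a: str, adapter_b: str) -> bool:
    # Clone mode works only between displays on the same adapter;
    # displays on different adapters can only extend the desktop.
    return adapter_a == adapter_b


if __name__ == "__main__":
    print(is_supported(2, 1, has_gpu_outputs=True))   # the three-display maximum
    print(clone_allowed("igp", "gpu"))
```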

Question: How are display connection and video mode setting controlled in Optimus systems?

Answer: If no displays are connected directly to the GPU, all display handling is done by the Intel IGP driver. If there are GPU displays, functions of the NVIDIA driver are called as well.

Question: Which display outputs are supported for the GPU in the Optimus platform? How is a display connected to them detected?

Answer: Currently only HDMI is supported, with connection detection as defined in the HDMI specification. Support for DisplayPort, DVI and VGA is planned in the future, but may require special hardware adaptations (DDC MUX).

Question: Does Optimus support display outputs shared between graphics processors, as Hybrid does?

Answer: No, in Optimus each output is connected to only one graphics processor. However, as with Hybrid, the platform must provide display connection detection common to the IGP and the GPU, since the GPU can be turned off.

NVIDIA Optimus technology: automatic graphics switching in laptops - and is it as good as it seems?

Part 1

Today we will talk about an interesting and topical novelty: NVIDIA Optimus technology. The fanfare has already died down, so it is time to take a sober look: what is it, and do we need it at all? That last question stuck with me during SSD testing, and I now apply it to every laptop and gadget that comes my way. I have had to give up buying so many things - it is simply a horror!

However, any innovation has both pluses and minuses. Therefore, in addition to the traditional descriptions and reflections, I will also try to analyze critically whether this technology is needed and how much it will help users. I decided to set these comments in italics, so readers who are not interested in personal opinion can skip those passages.

On the one hand, the usefulness of Optimus cannot be denied. This technology simplifies the user's life, and that is its main and most significant plus. Even with the existing limitations of the implementation, it is a huge step forward from the operational point of view.

The fact is that with its arrival, laptops with switchable graphics learned to switch between video adapters fully automatically, guided by the performance level the system needs and without requiring user intervention. In theory, therefore, the laptop will work more efficiently and will not distract the user, reacting on its own to changed performance requirements.

On the other hand, to me Optimus looks less like a technological novelty than a marketing one. In my opinion, this is a typical development in product promotion generally, when the idea "let's give them what they want" grows into "let's give them what we will make them think they want." That is, first there is demand for something, then manufacturers try to satisfy it, realize that something does not quite fit, and try to reshape the demand to suit themselves: instead of creating a technology that meets users' wishes, they make users think they wanted exactly what they were offered. As a result, things get worse from the technological, technical and operational points of view; only comfort increases for those users who do not want to understand the details (the overwhelming majority) and who are ready to sacrifice efficiency for apparent comfort.

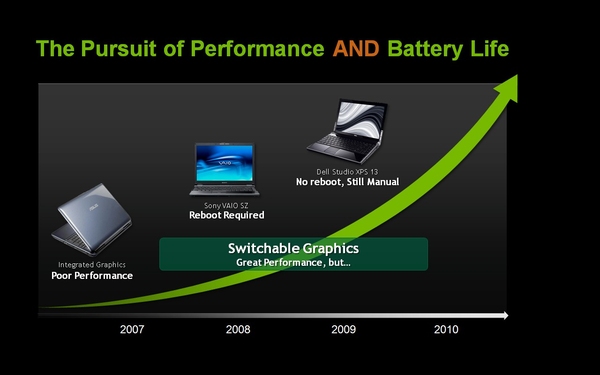

The development of the idea of switchable graphics

At first, absolutely all laptops had integrated graphics and could do nothing in 3D. This was not required of them, however: the laptop was considered a niche product for professionals with very specific performance requirements. Gradually, though, 3D conquered the world (with NVIDIA's active participation), and laptops became an increasingly mass-market and, importantly, universal product. Accordingly, they came to be used for the widest range of tasks, including multimedia, and users very quickly became dissatisfied with the graphics performance (or rather, its complete absence). Responding to demand, manufacturers began to strengthen the graphics subsystem of laptops; gaming laptops and even professional mobile graphics workstations appeared. They still lagged behind desktops in performance, but could already cope with most tasks of ordinary users.

And then a split appeared: a laptop was either powerful but large, heavy and with shamefully short battery life, or small and light with good battery life but shameful performance, especially in 3D.

The integrated core should take up little space, consume very little power and not heat up. Therefore it runs at low frequencies, has the minimum necessary number of units and a small die, and has no video memory of its own, making do with ordinary RAM (this also saves energy). A dedicated graphics chip is another matter: although it too should consume little and not heat up, it is still expected to provide more or less adequate 2D and 3D performance, the ability to work with digital video, and so on. As a rule, mobile chips are heavily cut-down versions of desktop ones, also running at lower frequencies. So although these are compromise designs, in operational characteristics, primarily power consumption and heat, they lose significantly to integrated solutions.

Between models with integrated and dedicated graphics there was clearly a gap. And manufacturers began to think hard about how to combine powerful graphics and low power consumption, thereby greatly increasing the versatility of laptops.

In theory, you can create a video chip that manages its frequencies depending on load (this is simple and already implemented) and dynamically switches off unneeded processor blocks and memory, reducing idle consumption (this too is already implemented to some extent). But this is a long and difficult road, and it may well be that everything that could be done has already been built into modern chips. There is a simpler solution, however: put both graphics chips in the system and switch between them.

One original and interesting solution was the nearly stillborn XGP technology from ATI / AMD (I'll say it right away, because I really liked the idea, but ... alas). An integrated chip sits inside the laptop, while a powerful one is moved to an external box and connects to the laptop via a fast PCI-E x16 interface (not x1). The huge advantage of this solution is that at home you get a powerful workstation, and on the road a long-running laptop. Plus an excellent output to an external monitor and a convenient docking station... In general, the technology had a lot of advantages, but it needed to be developed and popularized. However, AMD, famous for its tradition of killing geese that lay golden eggs, stayed true to form: it locked the technology behind a patent and, despite its meager share of the notebook market, decided to proudly promote XGP on its own, "to spite Intel". The results we see ... or rather, do not see. A pity; the idea, I repeat, was excellent.

The shrewder NVIDIA learned back in the days of its fight with 3Dfx the truth that performance, quality and so on are nothing next to public opinion. This correct approach allows the company to hold a leading position in the market regardless of the performance and quality of the mobile solutions it releases (I will not speak for desktops).

So NVIDIA moved, and pushed ATI, toward creating switchable graphics. The essence is that the laptop has two chips at once (which removes the competition between Intel and NVIDIA as makers of laptop graphics), between which you can switch as needed. In theory everything is simple: when a demanding task requires high performance, the powerful discrete graphics processor is used; when nothing special is happening, it turns off and the integrated adapter takes over, which is of course weaker but consumes much less energy.

The first generation implemented "manual switching". For example, I had one of the first Sony Z series laptops, where it was done with a large switch on the case. By moving the slider you could enable either the NVIDIA graphics or the integrated Intel chip. You flip it, and the driver suggests closing all applications before switching the graphics chip, because otherwise the laptop may hang (and it really did hang, losing data from open applications). Great fun to work with, especially when you have thirty applications open and cannot close them; it would be easier not to go to work at all. At the same time, the benefit of switching was doubtful: the laptop was equipped with a GeForce 9400, whose 3D capabilities are very modest; the only thing is that HDMI works only through it. And this, by the way, was not the very first implementation of the platform; that one required a system reboot. But these are all old and no longer needed solutions. What happened next?

Modern switchable graphics and NVIDIA Optimus

I now have two ASUS notebooks on test, one with NVIDIA Optimus technology and one without. Yet both have switchable graphics, and automatically switched at that! So what is the difference between them?

First, the "older" technology. Modern ASUS laptops have no button to switch between graphics chips; everything is "automatic". How? Through power schemes. Each scheme prescribes which adapter to use under which conditions. For example, set "high performance" and you get the high-performance chip (if the G210M can be called that) both on mains power and on battery. Set "balanced" and you get the discrete chip on mains power, while on battery the system switches to Intel's integrated graphics. Set "energy savings" and the integrated graphics always work. Thus the choice of graphics chip depends on two parameters: the selected power scheme and whether the laptop runs on mains power or battery.
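The rules described for these ASUS laptops amount to a lookup table keyed by power scheme and power source. Here is a minimal sketch of that table; the scheme names follow the text, while the function name and the `"ac"` / `"battery"` labels are assumptions made for the example.

```python
DISCRETE = "discrete"
INTEGRATED = "integrated"

# (power scheme, power source) -> graphics adapter, as described in the text
POLICY = {
    ("high performance", "ac"): DISCRETE,
    ("high performance", "battery"): DISCRETE,
    ("balanced", "ac"): DISCRETE,
    ("balanced", "battery"): INTEGRATED,
    ("energy savings", "ac"): INTEGRATED,
    ("energy savings", "battery"): INTEGRATED,
}


def select_adapter(scheme: str, power_source: str) -> str:
    """Pick the adapter from the power scheme and mains/battery state only."""
    return POLICY[(scheme.lower(), power_source.lower())]


if __name__ == "__main__":
    for scheme, source in POLICY:
        print(f"{scheme:17} {source:8} -> {select_adapter(scheme, source)}")
```

The table makes the weak point visible: nothing in the key says what the user is actually running, so a game started on battery under the balanced scheme still lands on the integrated chip.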

In real life, however, switching is not as smooth as described above. Often the graphics do not switch, on the grounds that certain running applications are in the way and must be closed. That is not always convenient, for example when the switch is blocked by an application you are working in, or the system asks you to stop the movie you are watching. As a result, the whole point of switchable graphics is lost. In addition, "manual" switching has a number of other drawbacks.

Even if everything worked flawlessly on the technical side, plenty of other things keep this scheme from being optimal. For example, running on mains power by no means always requires high performance, and vice versa. This flaw affects both the inexperienced user (who simply does not know how switching works) and the advanced one, who has to switch manually all the time through power schemes, and it is very easy to forget to do so. Even users who understand these schemes and are sometimes ready to control the laptop's parameters by hand would still like a proper automatic mode. It is simply more convenient and frees your head for work. With manual control you can easily forget to switch and quickly drain the battery.

So the "newer technology" appeared, that is, Optimus. Millions of hamsters ... sorry, casual gamers on laptops (it sounds scary, but there are a lot of them) do not want to understand such subtleties as power schemes or even the presence of a video card inside the laptop, believing that the main speed indicator of a laptop is the sticker next to the keyboard. So they do not always manage to use the right chip in games, hence complaints to tech support along the lines of "I bought a gaming laptop, and the graphics (the poor integrated Intel) can't cope." Again, even knowledgeable users are not always ready to put up with the quirks of the switching process. For example, when I see a request to close applications I need, I simply do not switch to the other adapter. And that is bad for either speed or battery life.

So a technology was developed that approaches the problem from the other end: when deciding to switch, the driver looks not at the power scheme but at the applications being launched. Started Word? The integrated Intel is enough for you. Launched a modern game? Here is your discrete high-performance graphics. Closed the game and returned to Word? Everything switches back, with no action on your part. It would seem very convenient and casual-friendly, and technologically very advanced besides.

And after spending only ten minutes, I managed to configure one application ...

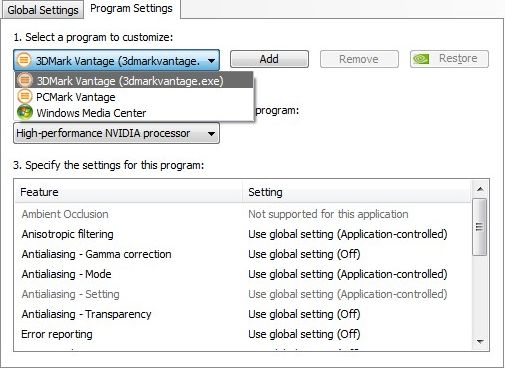

The subtle point of the technology is that Optimus reacts to the executable file of the program. That is, I made the setting for pcmark.exe - and the graphics will switch, you should run this file. However, if you run the pcmark-2.exe file, then the smart computer will not switch, because you did not receive such a command.

So this system obviously requires a one-time but much more serious setup than the previous version, and few casual users (and we are talking primarily about them) will bother with it. Anticipating that users will not struggle with creating profiles in the control panel (or do anything at all), NVIDIA takes a proactive step and offers online settings: a database of applications with graphics settings registered for each, which is downloaded to the laptop (with the user's permission, of course). Thus the Optimus management utility already "knows" a large number of applications and can determine which chip and which graphics settings to use, according to the recommendations "from above".

That said, the driver also implements a mechanism that engages the NVIDIA graphics card whenever an application makes a call to Direct3D or DXVA (video playback acceleration). So the dependence on per-application profiles is not absolute.

So, NVIDIA Optimus technology is just a software patch over a technologically old hardware solution?

Of course, I am being sly, because a great deal has changed at the hardware level too. The built-in and external graphics no longer work separately ("only one of us can work!") but cooperate, switching dynamically between themselves. The external chip uses some functions of the integrated one (the frame buffer) and is completely switched off during idle minutes, while the image stays on the screen thanks to the built-in graphics. Convenient? Yes! Economical? Yes! Ergonomic? Yes again!

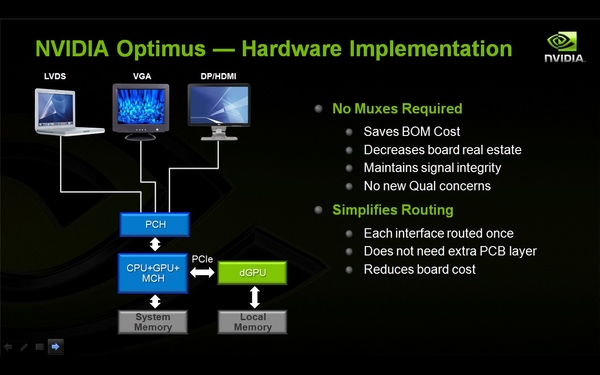

Hardware implementation of NVIDIA Optimus technology

Let's take a closer look at how the technology is implemented from the hardware point of view.

Here's how it was before it was implemented:

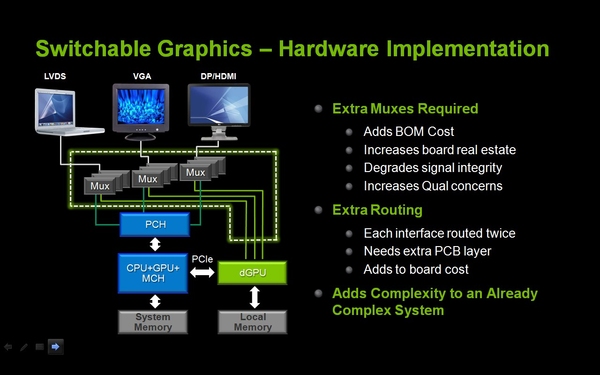

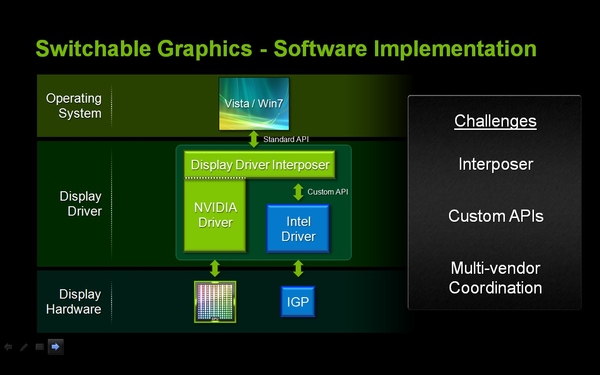

The system contained two independent video adapters. When switching from one to the other, the system effectively stopped, and the adapter being disconnected started to "wind up its affairs": it freed its memory. Then it was switched off and the new one was switched on (hence the screen flicker), and for some time the system "came to its senses" while the new adapter established interaction with it. Moreover, the Intel-NVIDIA pairing is such that data cannot always be transferred seamlessly from one adapter to the other; at the very least, the architectures differ. Accordingly, while three-dimensional applications or applications using an overlay are running (perhaps other types as well, I am not sure here), the graphics cannot be switched; they have to be closed first. In addition, the system needed an extra chip, a multiplexer (labeled Muxes on the diagram), which routes data from the selected video card to the display device. One such multiplexer is needed for each output device (internal display, external monitor, and so on).

Another aspect is that the OS previously allowed only one video driver in the system, so software layers also had to be written to let different drivers run for the integrated and discrete graphics; now this problem is solved at the OS level.

As you can see, the diagram shows a Display Driver Interposer. This is a program that controls switching of the graphics chip and makes the appropriate driver visible to the OS. So this layer is required for things to work, plus a software layer between the interposer and the second chip, and, well, all of it has to be agreed with the manufacturer. By the way, if I understand correctly, Windows 7 allows two active video adapters with different drivers in the system, so this problem has been more or less solved with Microsoft's help.

As we remember, in the new generation of platforms Intel has substantially changed how the components interact. In particular, a good half of the graphics chip has moved into the same package as the processor, and only the part directly responsible for outputting the image has remained "outside".

Hence the new interaction scheme. The NVIDIA chip is no longer connected in parallel and independently; it now works together with the IGP over the PCI Express bus. The board layout is simpler in this case: no multiplexers are needed, and the external chip is connected via a common bus. However, the system has many other peculiarities, which we will discuss below. Let's see how a platform with Optimus works.

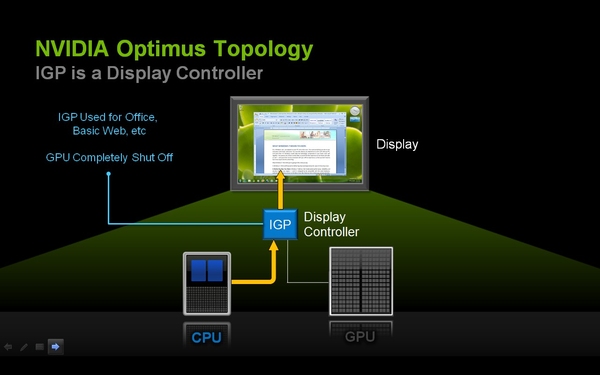

If you are not running applications that need powerful dedicated graphics, the integrated core is used. In this case the built-in chip handles image output, while the external NVIDIA chip is completely switched off and consumes no power at all.

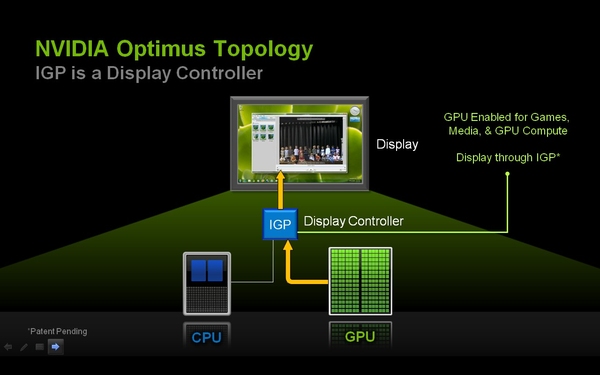

When you run an application that requires an external video card, this chip is turned on.

However, Optimus is implemented so that the integrated video adapter keeps displaying the image on the screen. In other words, two graphics controllers work at once: one prepares the picture, the other puts it on the screen. Thanks to this, switching is instantaneous and invisible to the user.

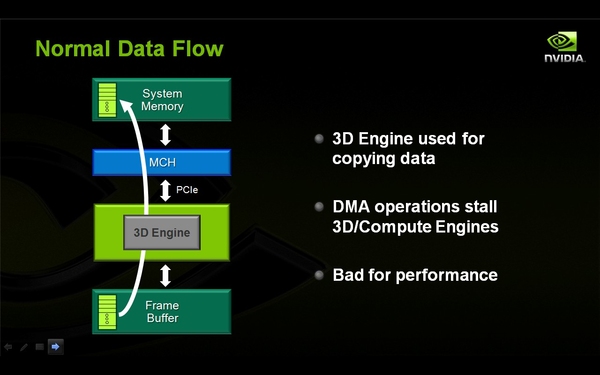

And here we hit the next bottleneck that has to be overcome. Remember how, in the traditional scheme, the chip "handed over" its data when switching? Well, after rendering a frame the external NVIDIA card has to transfer it to RAM, from where the integrated graphics takes it and displays it on the screen.

But if the chip itself has to upload data from its frame buffer to system memory, it will be busy with that alone instead of its direct duty, computing the next frame. Performance would then be extremely low.

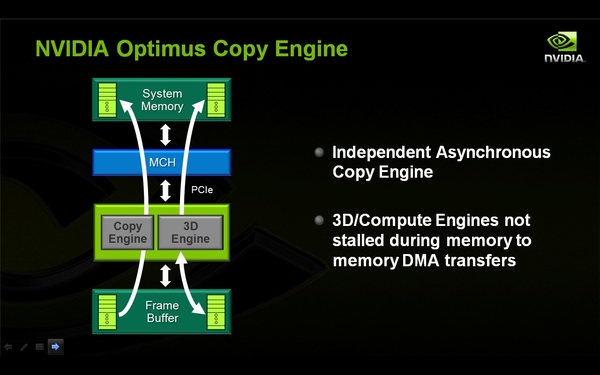

To solve this problem, the company developed the Optimus Copy Engine technology.

This is an independent copy mechanism that does nothing but move data to system memory, without tying up the resources of the main chip. Technically, then, the Copy Engine is a separate (this is important) unit that takes the finished frame from the NVIDIA adapter's local memory and sends it to RAM, from where the integrated controller picks it up and displays it. The question remains: what is the overhead?
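One part of that overhead, the raw volume of frame data crossing the bus, can be estimated with simple arithmetic. The sketch below is only a rough estimate: the resolutions, 4 bytes per pixel, and 60 frames per second are assumptions chosen for illustration and are not taken from the tested laptops, and the figure says nothing about latency or bus contention.

```python
def frame_copy_rate_mb_s(width, height, bytes_per_pixel=4, fps=60):
    """Raw data rate (in MB/s, 1 MB = 10**6 bytes) needed to copy every
    rendered frame from video memory to system memory."""
    return width * height * bytes_per_pixel * fps / 1e6


# Assumed example resolutions (not stated in the article).
for width, height in [(1366, 768), (1920, 1080)]:
    rate = frame_copy_rate_mb_s(width, height)
    print(f"{width}x{height}: {rate:.1f} MB/s")
```

Under these assumptions the copy traffic is on the order of a few hundred megabytes per second, so the interesting question is less the volume itself than who performs the copy, which is what the separate copy engine addresses.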

The software part of NVIDIA Optimus

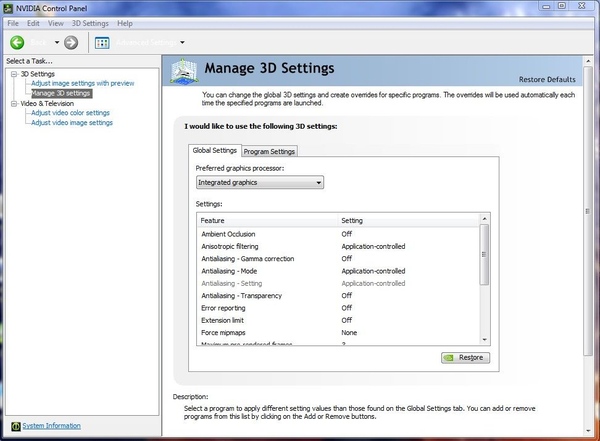

From the software point of view, it is all quite simple: we have the same utility that any owner of a laptop with an NVIDIA adapter can see. It just has one more tab now, where the data for applications is registered.

Please note that you can select which video card is the default.

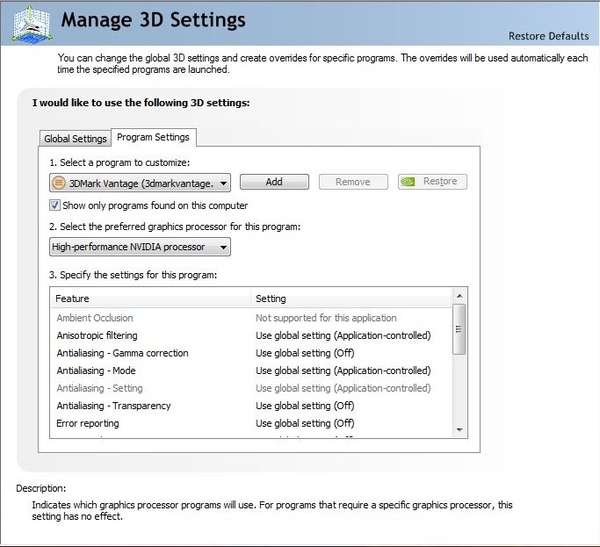

Now let's go to the second tab, where the profiles for different applications are actually specified. As already mentioned, the driver switches the graphics chip in two situations.

The first is when the application requests a special function. This includes DXVA (hardware video acceleration through DirectX), DirectX itself and, apparently, CUDA. If the application wants to use one of these functions, the driver immediately switches it to the more capable NVIDIA adapter.

The second option is the application profile. For each application (and it is identified by the executable file), a profile is created, including which graphics adapter and what settings it should use. If the driver sees that this application is running, it switches the graphics settings according to those specified in the profile, including selecting the video adapter.
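The two triggers described above can be summarized in a short sketch. Everything here is hypothetical: the names `Profile`, `choose_adapter`, and `TRIGGER_APIS`, the order in which the two checks run, and the shape of the settings are assumptions for illustration, not the driver's real logic.

```python
from dataclasses import dataclass

# APIs the text names as switch triggers; CUDA is mentioned there only tentatively.
TRIGGER_APIS = frozenset({"Direct3D", "DXVA", "CUDA"})


@dataclass(frozen=True)
class Profile:
    adapter: str    # "integrated" or "discrete"
    settings: dict  # hypothetical per-application graphics settings


def choose_adapter(exe_name, apis_requested, profiles, default="integrated"):
    """Return (adapter, reason) for a launched application."""
    # Trigger 1: the application asks for an accelerated API.
    # Assumption: this check takes precedence over profiles.
    hit = TRIGGER_APIS.intersection(apis_requested)
    if hit:
        return "discrete", "api:" + sorted(hit)[0]
    # Trigger 2: a profile exists for this executable name.
    profile = profiles.get(exe_name.lower())
    if profile is not None:
        return profile.adapter, "profile"
    return default, "default"


profiles = {"game.exe": Profile("discrete", {"vsync": True})}
print(choose_adapter("winword.exe", [], profiles))
print(choose_adapter("Game.exe", [], profiles))
print(choose_adapter("player.exe", ["DXVA"], profiles))
```

Returning the reason alongside the adapter makes it easier to see which of the two mechanisms fired, which is the kind of visibility that would have helped with the DXVA oddity described next.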

In words, everything is simple. In reality, there are rough edges: for example, before the reinstall the NVIDIA card was activated when DXVA was enabled, and after it the card no longer was, although the player still reported that DXVA was in use. After a clean reinstallation of the system, we will check again whether the video card switches or not.

Marketing, marketing ...

Well, now that we have a rough idea of the theoretical aspects, and before we look at the practical ones, I would like to draw attention to the following.

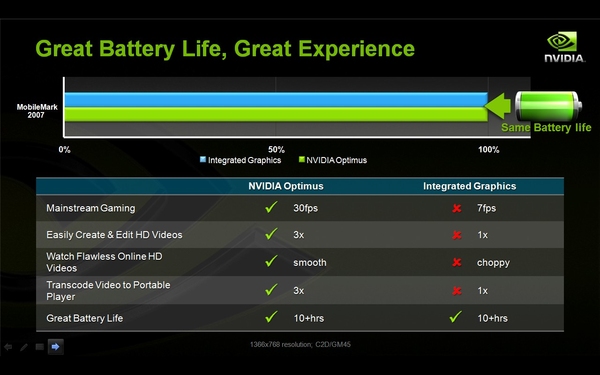

Technically speaking, NVIDIA Optimus does not bring anything new; it just lets you use resources more sensibly. Imagine a scale with performance at one end and power consumption at the other. Optimus lets you shift the balance dynamically. Previously, the laptop was either powerful or economical. Now nothing has changed, except that the laptop has learned to choose the optimal position on this scale a little better.

However, the marketing materials suggest that the laptop has become both faster and more economical, i.e., that both indicators have gone up.

As you can see, the slide implies that both parameters improve at the same time, and even to a level that the system without switchable graphics never reached. Clearly, that is not how it actually works out.

On this upbeat note, let's start testing the laptops and see how things stand in reality.

Practical implementation

NVIDIA kindly provided two laptops for testing: an ordinary one with automatically switched graphics, and a second one with Optimus technology. I must say that, as very often happens, the laptops arrived with a cluttered, poorly working system. After the first tests I tried to update the driver, but that did not have the desired effect. Tests on a clean system with a new driver will be described in the second part of our review.

Nevertheless, we managed to run the main tests.

| PCMark Vantage | ASUS UL50Vt (non-Optimus), NVIDIA | ASUS UL50Vt (non-Optimus), Intel | ASUS UL50Vf (Optimus), NVIDIA | ASUS UL50Vf (Optimus), Intel |
|---|---|---|---|---|
| PCMark Score | 3870 | 3853 | 3128 | 3019 |
| Memories Score | 2659 | 2098 | 2246 | 1810 |
| TV and Movies Score | 2612 | 2582 | 1487 | 2202 |
| Gaming Score | 2941 | 1893 | 1763 | 1689 |
| Music Score | 4099 | 4075 | 2043 | 3352 |
| Communications Score | 3592 | 3695 | 1683 | 2870 |
| Productivity Score | 3116 | 3266 | 1437 | 2589 |
| HDD Score | 3340 | 3325 | 3167 | 3306 |

The results are, to put it mildly, discouraging. The results of the system without Optimus are sensible enough: they show that the laptop's graphics subsystem affects just one test out of all of them (there is also a difference in the memory subsystem test, but it is unclear why). The results of the Optimus laptop, however, are a mess. Even assuming that I mixed up the columns (which I did not), it is still very hard to explain why this laptop's Gaming score is nearly the same on both adapters, while in most of the other tests the results are higher in Intel mode.

I had to reinstall the system and drivers, including a new NVIDIA Optimus driver from the manufacturer (version 189.42). The update seems to have succeeded, although after the reinstall Optimus refused to save any settings other than the defaults. I had to improvise a workaround; however, this should not have affected the test results.

So, let's see what happened after cleaning the system and installing the new system on the Optimus platform.

| PCMark Vantage | Non-Optimus, new | Non-Optimus, old | Optimus, new | Optimus, old |
|---|---|---|---|---|
| PCMark Score | 3740 | 3870 | 2890 | 3128 |
| Memories Score | 2496 | 2659 | 2247 | 2246 |
| TV and Movies Score | 2529 | 2612 | 1372 | 1487 |
| Gaming Score | 2899 | 2941 | 2443 | 1763 |
| Music Score | 3982 | 4099 | 3274 | 2043 |
| Communications Score | 3495 | 3592 | 2933 | 1683 |
| Productivity Score | 3232 | 3116 | 2628 | 1437 |
| HDD Score | 3258 | 3340 | 3215 | 3167 |

The results have improved slightly and stabilized, but the Optimus system lags behind in every test, and this lag cannot be put down to measurement error (although the error there is sizable: just compare the new and old data for the system without Optimus, where the drivers were NOT changed and the tests were simply rerun a week later). Since it is unclear which hardware characteristics affect which test and how, one could speculate for a long time. We will not do that and will try to double-check the data instead. Let's see what the installed system itself reports.
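To put numbers on the lag and on the retest spread, the table above can be turned into percentages. This is a small sketch using only the scores from the table; the helper name `percent_change` and the dictionary layout are my own.

```python
# Scores from the table: (non-Optimus new, non-Optimus old, Optimus new, Optimus old)
SCORES = {
    "PCMark Score": (3740, 3870, 2890, 3128),
    "Memories Score": (2496, 2659, 2247, 2246),
    "TV and Movies Score": (2529, 2612, 1372, 1487),
    "Gaming Score": (2899, 2941, 2443, 1763),
    "Music Score": (3982, 4099, 3274, 2043),
    "Communications Score": (3495, 3592, 2933, 1683),
    "Productivity Score": (3232, 3116, 2628, 1437),
    "HDD Score": (3258, 3340, 3215, 3167),
}


def percent_change(value, base):
    """Relative difference of value against base, in percent."""
    return (value - base) / base * 100.0


# Optimus (new run) relative to non-Optimus (new run).
optimus_lag = {t: percent_change(s[2], s[0]) for t, s in SCORES.items()}
# Non-Optimus new run relative to its old run: a rough measure of retest spread.
retest_spread = {t: percent_change(s[0], s[1]) for t, s in SCORES.items()}

for test in SCORES:
    print(f"{test:22s} lag {optimus_lag[test]:6.1f}%   retest {retest_spread[test]:6.1f}%")
```

With these figures the overall PCMark gap is about 23% and the TV and Movies gap about 46%, far outside the spread between the two non-Optimus runs (at most about 6%), while the HDD gap of about 1.3% is smaller than that spread.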

The system with Optimus was allowed to switch graphics automatically (in fact, with a poorly working driver there was no other way), so there is a single result for it.

Interestingly, the Windows 7 rating data generally confirm the conclusions above. First, the results for the laptop with ordinary switchable graphics show roughly how the ratings of the integrated Intel and the external NVIDIA G210M differ. Second, the Optimus system lags consistently even in the OS tests, which are isolated as far as possible from the driver and software situation.

And the results, as usual, can only be called strange. First, the lag in the CPU score is unexplained. The laptops have ASUS Turbo 33 technology, but it is disabled (judging by the switch on the desktop). It is unlikely that it was active on one laptop and not on the other. And the discrepancy is very significant.

The graphics scores differ considerably, but they suggest that the system ran on the integrated video card while the "graphics" parameter was measured and moved to the external one for "gaming graphics". During the tests you could see when the laptop switched adapters: it happened during the Direct3D capability test (i.e., automatic switching worked in this case).

Thus, at this stage, with the sample and operating system provided (many reservations are needed here), the performance of the NVIDIA Optimus system falls short of the same laptop equipped with ordinary switchable graphics. I want to stress once more that the processors, the amount of RAM, and the video subsystems of the tested laptops are identical. For this reason, in the second part of the material we will test the laptops once more on a clean system.

Battery life

Improved battery life is one of the main declared advantages of NVIDIA Optimus technology. Let's see, maybe the new technology is more efficient here?

The battery capacity is approximately 79114 mWh for the Optimus laptop and 80514 mWh for the non-Optimus one. In practice, this difference has almost no effect on the results. As usual, the system ran on the adaptive power scheme with default settings.

| Mode | Non-Optimus, NVIDIA | Non-Optimus, Intel | Optimus, NVIDIA | Optimus, Intel |
|---|---|---|---|---|
| Reader | 8 hours 20 minutes | 9 hours 08 minutes | 7 hours 02 minutes | 8 hours 15 minutes |
| Video | 4 hours 04 minutes | 6 hours 40 minutes | 3 hours 07 minutes | 4 hours 57 minutes |

The first thing I would like to note from the test results is the impressive absolute running time and very good optimization of energy consumption (the greater the difference between running time at idle and under load, the better).

The second is an interesting difference in running time depending on the adapter used. As you can see, at idle the NVIDIA chip takes away about an hour of battery life. Under load, however (and the load is specific: video playback), this difference becomes not smaller but larger. So with a light load it is still worth using the built-in adapter, and you are unlikely to gain anything in battery life with the NVIDIA adapter.

Finally, the third and most interesting conclusion for us: under any conditions of use, the laptop with NVIDIA Optimus loses to the regular laptop by about an hour (from 53 minutes to 1 hour 43 minutes in the table above).
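The battery figures can be checked with a few lines of arithmetic. The sketch below uses the running times and capacities quoted above; the average power estimate additionally assumes that the full stated capacity is consumed during a run, which is a simplification of mine.

```python
def minutes(hours, mins):
    return hours * 60 + mins


# (mode, adapter): (non-Optimus runtime, Optimus runtime), in minutes
RUNTIME = {
    ("Reader", "NVIDIA"): (minutes(8, 20), minutes(7, 2)),
    ("Reader", "Intel"): (minutes(9, 8), minutes(8, 15)),
    ("Video", "NVIDIA"): (minutes(4, 4), minutes(3, 7)),
    ("Video", "Intel"): (minutes(6, 40), minutes(4, 57)),
}

# How many minutes the Optimus laptop loses in each scenario.
loss = {key: regular - optimus for key, (regular, optimus) in RUNTIME.items()}


def avg_watts(capacity_mwh, runtime_min):
    """Average power draw, assuming the whole capacity is used up (simplification)."""
    return capacity_mwh / 1000.0 / (runtime_min / 60.0)


for key, lost in loss.items():
    print(key, f"Optimus loses {lost} min")

print(f"Reader/Intel, Optimus:     {avg_watts(79114, minutes(8, 15)):.2f} W")
print(f"Reader/Intel, non-Optimus: {avg_watts(80514, minutes(9, 8)):.2f} W")
```

Expressed as average power, the reading test on Intel graphics works out to roughly 9.6 W for the Optimus sample against roughly 8.8 W for the regular laptop under this simplification.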

After cleaning up the drivers, I ran the test once more to see what would happen. The regular system showed 9 hours 18 minutes; the Optimus system (with a reinstalled OS and a new driver) showed 7 hours 55 minutes.

Thus, there is no gain in battery life either. What confuses me most is that the Optimus system loses in reading mode and on Intel graphics. Such a stable loss of an hour (or even a little more) of battery life seems extremely strange to me.

Conclusion

Based on the tests above, it was decided to install a clean system on both laptops, install the drivers, and carry out objective testing on clean systems. It will be covered in the review devoted to the new ASUS UL50V series laptops. It still will not be completely objective, though, because our Optimus laptop is only a sample.

In the meantime, if you set the test results aside, Optimus offers a good solution for those who do not want to manage the system manually. After all, fans of manual gearboxes in cars are also becoming fewer; most people prefer an automatic for its comfort and are willing to put up with its shortcomings for that.

By the way, it is a good analogy. An automatic transmission has many drawbacks and limitations: it is more complex, more delicate in operation, loses more in transmitting torque (worse fuel economy), and does not always shift gears appropriately. But it adds a great deal of comfort: you do not have to rack your brain over gear changes or make a lot of unnecessary movements.

The same can be said about Optimus. It does not always switch appropriately, it has its own limitations, and its efficiency is lower. But Optimus gives you comfort: you do not need to dig through settings, everything seems to happen "by itself". So for users who previously could not use switchable graphics (could not drive a manual), Optimus is a definite plus. Now they will be able to use the computer more efficiently (they can drive). For those who are familiar with switchable graphics but too lazy to dig through settings, it is also a plus: it is easier to adapt to the quirks and limitations of Optimus (just as it is easy to adapt to the sluggishness and shift logic of an automatic) than to keep switching graphics manually. That leaves only those who are used to fully controlling every chip in the computer, but even they can find benefits in Optimus: fast graphics switching and no dependence on running applications (like driving an automatic in manual mode).

The price for this is performance and battery life (although this still needs to be clarified).

If you look "from the wrong side," Optimus is a set of crutches that gives the user the impression that "everything is fine", but by what internal means this is achieved! And if you look closely, crutches are everywhere. In hardware, one chip is connected to the other instead of driving the display directly. The generated frame has to be pulled out of one chip's memory and put into the other's, which required a separate path for copying data from video memory to system memory and back. The software side rests on a mighty crutch: the name of the executable file. In ancient times, drivers keyed on the name of a benchmark package to enable "optimizations" that produced a "better" result with no real advantage; now things have gone a step further, and an entire external chip is switched on this way. True, there is also a more progressive mode that switches on Direct3D and DXVA use, but if the laptop switches to the external graphics for a movie, that is minus an hour of battery life; in other words, you cannot simply watch a film. Is such "acceleration" necessary? And since the target audience obviously will not bother with the settings, a set of universal recipes will be delivered from the Internet: how to make things better for the user, in the manufacturer's opinion.

This opinion is borne out by the performance results; if they do not improve on a clean system, it will only be reinforced. Optimus offers gains in neither performance nor battery life. All it offers is more convenience for those who do not like to configure their system themselves, and the chance for manufacturers to leave the graphics switch button off the case, at the cost of lower performance and battery life. And the next step, obviously, will be yet another increase in computing resources, to hide their decline caused by "improved convenience."