After AMD Radeon, it is Nvidia's turn. Its GeForce graphics processors are no less popular among gamers than the competitor's products. Both companies keep pace in the market, offering customers solutions that are similar in performance and price. It would therefore be unfair to devote an article to building a CrossFire configuration while ignoring the similar capabilities of Nvidia products.

A bit of history

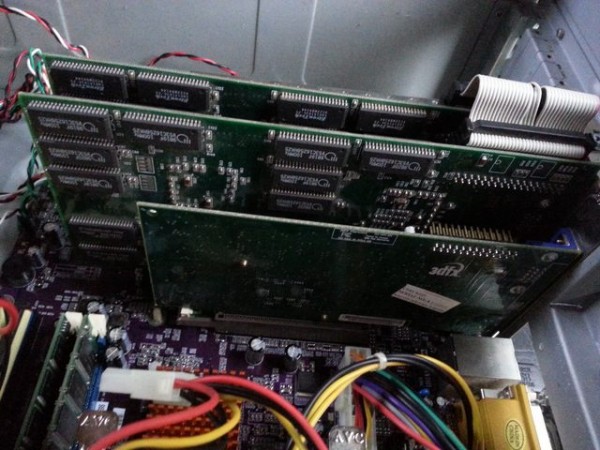

The SLI era can be considered to have begun in 1998, when 3dfx first managed to combine several video cards to work on a single task. However, the arrival of the AGP interface slowed progress in this direction, since motherboards of that time had only one slot for a video card.

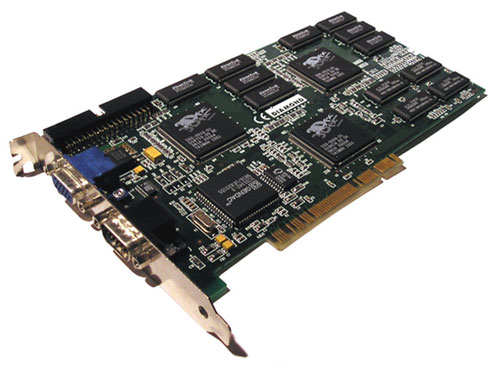

SLI from 3dfx, the "grandfather" of the modern technology

After Nvidia bought 3dfx in 2001, all developments in this area waited for their time, which came only in 2004. It was then, in the GeForce 6000 series (the first video cards designed specifically for the then-new PCI Express interface), that the existing designs were improved and introduced into mass-market products.

SLI of two GeForce 6600GT

At first, you could combine two GeForce 6600 or 6800 video cards. With the release of the 7000 series (which introduced Nvidia's first full-fledged dual-GPU board, the 7900 GX2), it became possible to build a four-GPU configuration from two cards, and later combinations of three or four separate GPUs became possible.

How to combine video cards in SLI: requirements

As with CrossFire, buying two Nvidia video cards is not enough to configure them in SLI. The computer must meet a number of requirements for the pair to work properly.

Which video cards can be combined into SLI

Two video cards can be connected in SLI only if each board has a connector for the SLI bridge. You can try to combine cards in software, but in that case you cannot do without "dancing with a tambourine". It also makes no sense to build a tandem of two GT 610 or GT 720 cards: a single card that is two or three times faster than such a pair will cost less than a set of two office-class cards and a compatible motherboard. In other words, the absence of the SLI interface on entry-level graphics accelerators is not caused by the manufacturer's greed. Nobody fits the bridge connector there, because it would make the card more expensive without bringing any benefit. Thus, connecting two video cards in SLI is possible for mid-range and top-class models. These include GPUs whose model number has a second digit of 5 or higher (GTX 550 Ti, GTX 960, GTX 670, GTX 780, etc.).

The GeForce GT 720 does not have a bridge for SLI

Before connecting two video cards in SLI, make sure that they are built on the same graphics processor. For example, the GeForce GTX 650 and GTX 650 Ti, despite their similar names, are based on completely different GPUs and therefore cannot work in tandem.
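The "second digit of 5 or higher" rule of thumb above can be sketched as a small check. This is only an illustration of the naming heuristic from the text, assuming three-digit model numbers; the function names are hypothetical, and the check says nothing about whether two cards share the same GPU, which still has to be verified in the specifications.

```python
import re
from typing import Optional


def tier_digit(model: str) -> Optional[int]:
    """Return the second digit of a three-digit model number, e.g. 5 for 'GTX 650 Ti'."""
    # Match exactly three consecutive digits (so 'GTX650Ti' works, 'GTX 1070' does not).
    match = re.search(r"(?<!\d)(\d{3})(?!\d)", model)
    if match is None:
        return None
    return int(match.group(1)[1])


def may_have_sli_connector(model: str) -> bool:
    """Heuristic from the text: mid-range and top models have a second digit >= 5."""
    digit = tier_digit(model)
    return digit is not None and digit >= 5


for name in ["GT 610", "GT 720", "GTX 550 Ti", "GTX 960", "GTX 670", "GTX 780"]:
    print(name, may_have_sli_connector(name))
```

The two office-class cards are rejected and the four examples from the text pass. Treat the result as a first filter only; the manufacturer's specification page is the authoritative source.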

Is there any practical benefit from SLI?

Before combining video cards in SLI, it is advisable to learn from other gamers' experience with such configurations and to study the specifications of your cards as well as those of faster single-card solutions in the lineup. Often a two-card configuration wins neither on cost nor on performance. For example, two GTX 950 cards in SLI show results comparable to a single GTX 970. The difference in the price of the cards themselves (about 200 and 400 dollars, respectively) is justified, but once you factor in the additional cost of a powerful PSU, a dual-slot motherboard, and a good, well-ventilated case, the saving looks doubtful.

The situation is completely different if the second card is part of an upgrade to an existing PC bought a couple of years ago. Cards of the GTX 650 Ti or GTX 750 level can still be found on sale at a reasonable price (the price difference from newer models is roughly equivalent to the speed difference), and three years of technical progress have brought nothing revolutionary to graphics cards (except for the HBM memory introduced by AMD, which Nvidia has not yet adopted). Adding a second graphics processor is therefore a very rational step for such players.

SLI: connection of video cards

If the computer meets the requirements for an SLI configuration and a suitable second video card has been purchased, you can proceed to the assembly. Turn off the PC, remove the cover of the system unit, install the second card in the corresponding slot, connect an additional power cable to it (if required), and join the two cards with the bridge that comes in the kit. This completes the hardware connection of two video cards in SLI, and you can proceed to the software configuration.

Power cables connected to an SLI pair

Setting up two video cards in SLI in Windows

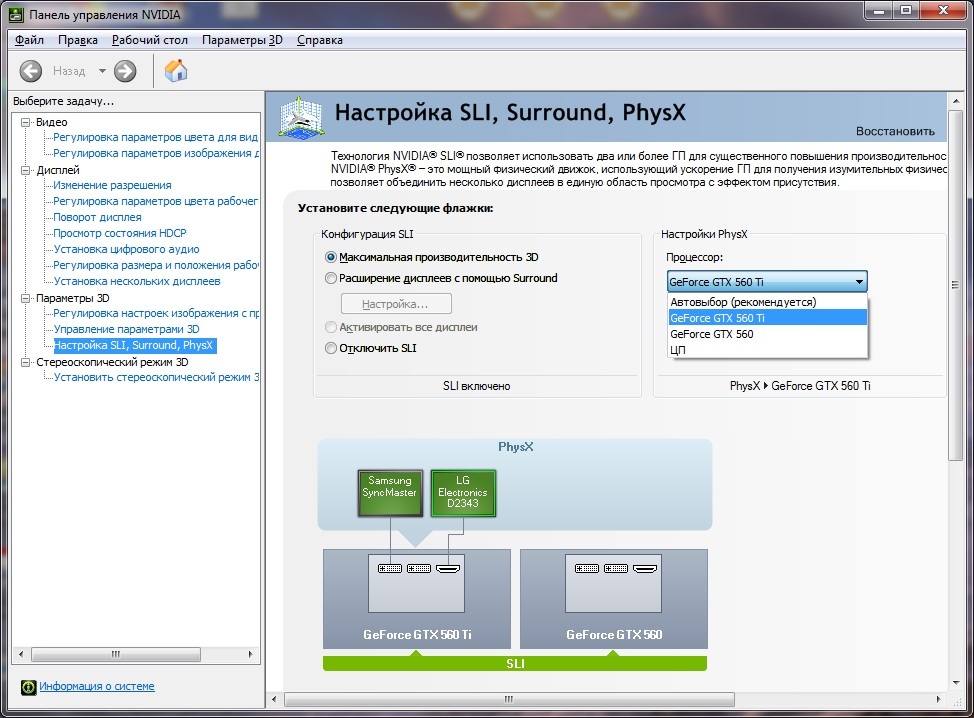

For the pair of GPUs to function properly, the PC must run Windows Vista or newer (7, 8, 8.1, or 10). It is also recommended to download the latest video card driver from the Nvidia website. After that, you can proceed to the setup.

After applying the settings, the pair is ready for use. To configure SLI correctly in some games, you may have to set special parameters for them in the "Program settings" submenu. As a rule, however, most modern software does not need this.

Hello, today we will talk about Nvidia graphics cards working in SLI mode, specifically about their problems and how to solve them. But first, let us look at what SLI mode is. Nvidia SLI is a technology that lets you use several graphics cards simultaneously and thereby significantly improve system performance. One of the main requirements is that the graphics cards use the same graphics processor. The more detailed requirements for building a computer that works with this technology are listed below.

- The motherboard must have two or more connectors that support this technology;

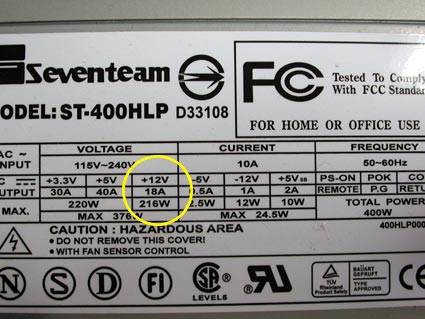

- A good power supply that can power the whole system (SLI-Ready units are recommended);

- Video cards that support this technology;

- A bridge that can combine video cards;

- A processor with a high clock speed that can unlock the potential of the video cards, so that it does not cause a drop in frame rate and overall performance.

From the above, it follows that building an NVIDIA SLI system is not something unattainable; on the contrary, it does not differ much from assembling an ordinary home computer.

Problems

We have covered why SLI is needed and what its advantages are. Now let us turn to the problems and their solutions.

- The first problem is the dependence on drivers: the driver needs to be updated very often, because serious changes and optimizations for specific games or applications are released regularly;

- The second problem is that not all games and applications support this technology, and in some of them you have to force the use of several video cards yourself;

- The third problem lies in those same updates: every time you update the driver, you need to perform some manipulations to get all the video cards working. This is not always convenient, and not all owners are ready to "rummage" in the settings and deal with such problems.

Problem solving:

- The first problem is solved quite simply. Download the GeForce Experience utility from the official NVIDIA site, register to use the program, sign in to your account, and click the "Check for updates" button. If a more recent driver is available, it will be downloaded, and you will be prompted to install it.

- The second problem cannot always be solved, because not all application vendors optimize their applications for this technology. You can still try to fix performance problems with a few simple manipulations in the driver settings for your SLI system.

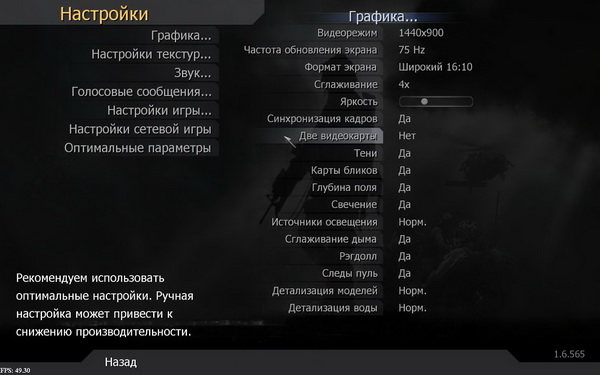

Below is an example of setting up The Sims 2, which is not optimized for SLI mode by default.

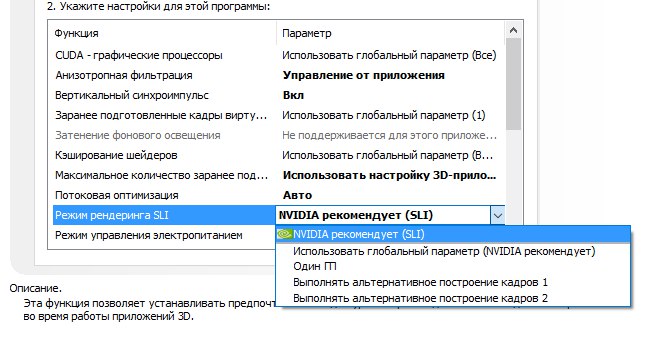

Open the "Nvidia Control Panel", go to "Manage 3D settings", then "Program settings", and click the "Add" button. Specify the path to the file that launches the game. Then look at the second section, "Specify the settings for this program", and find the SLI rendering mode. If the option "NVIDIA recommended (SLI)" is present, select it; if not, choose "Force alternate frame rendering 2".

We also need to configure power management: go to "Power management mode" and select "Prefer maximum performance".

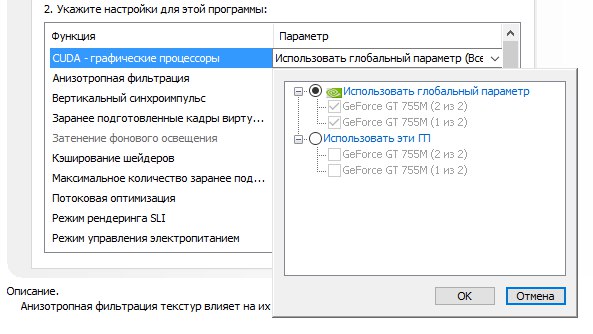

The last item is the "CUDA - GPUs" setting: here, every video card that you want to use in this application should be ticked.

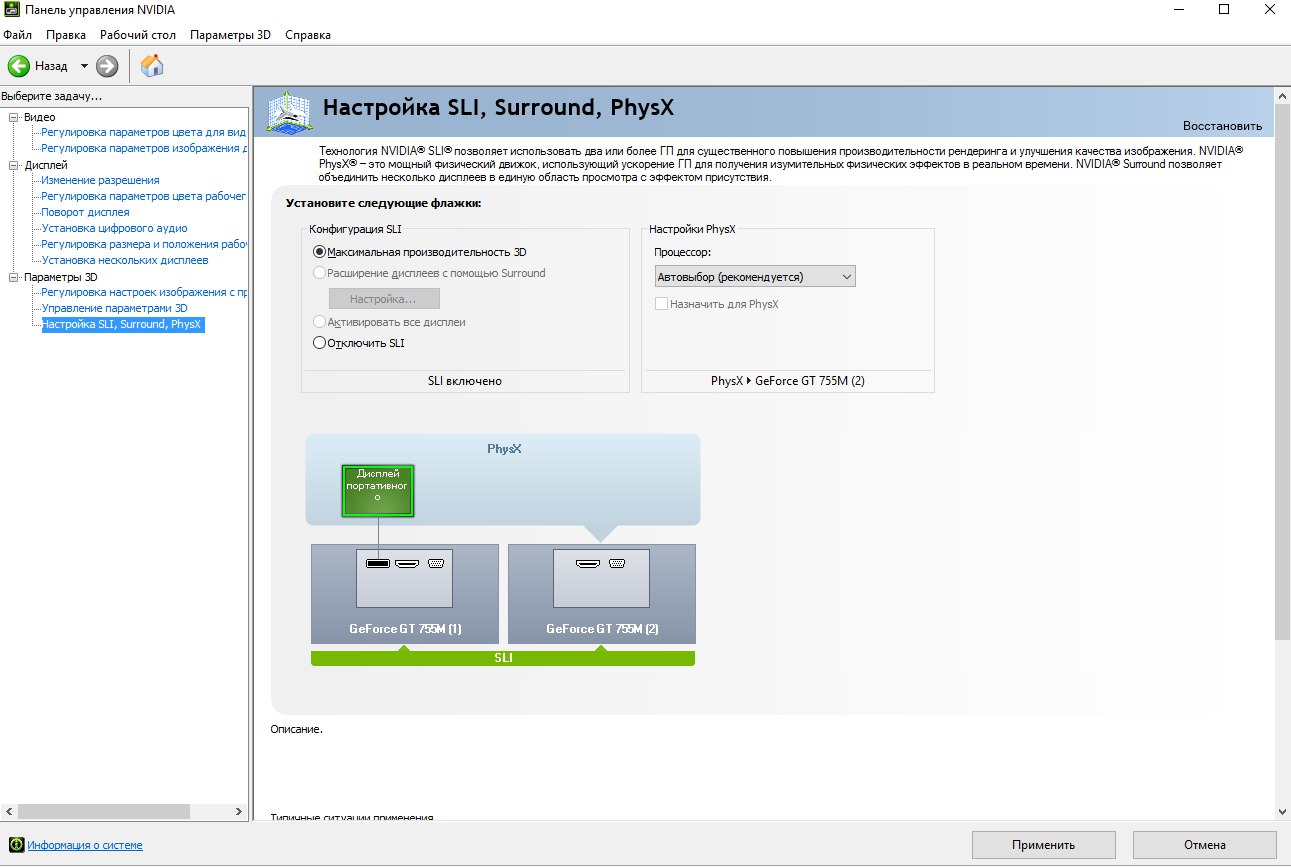

- The third problem is solved quite simply, but it reappears every time the driver is updated and takes a few clicks to fix. While still in the Nvidia Control Panel, open the "Configure SLI, Surround, PhysX" tab, click "Update settings", then click "Apply", and the problem will be solved.

At last, the wait is over! At least for those users for whom a single graphics card is no longer enough. Does it matter how much this solution costs if it delivers unsurpassed speed at the highest resolutions with the maximum level of detail? nVidia has finally presented a working implementation of SLI technology. The concept of SLI has long been familiar: two video cards working in parallel. Remember the old Voodoo2 cards from 3dfx? Although nVidia's technology is also called SLI, its implementation is somewhat different.

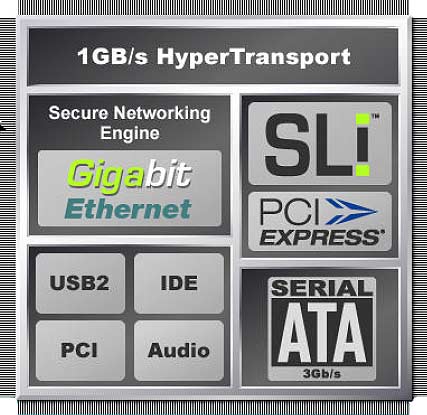

The revival of the principle of using two graphics cards became possible thanks to the PCI Express interface. With the nForce4 chipset, nVidia was the first to introduce a PCI Express platform suited to the task. For more information, see the article "nVidia implements SLI: the power of two GPUs in one computer".

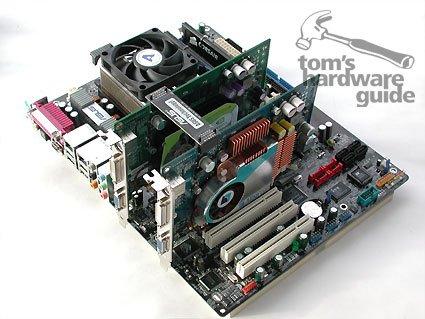

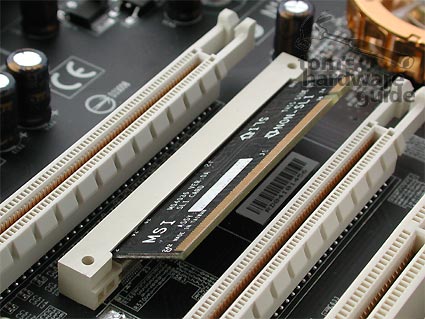

Collecting the components for an SLI system is not difficult: you need two identical nVidia GeForce 6800 Ultra, 6800 GT, or 6600 GT cards with PCI Express support, an SLI-compatible motherboard based on the nForce4 SLI chipset, and a bridge for connecting the two cards. Motherboard manufacturers usually include this bridge in the package. Since the distance between the two x16 slots varies from manufacturer to manufacturer, some bridges are adjustable in length.

Nothing else is needed for SLI, except for the money that all of this will cost. A motherboard with SLI support costs much more than the regular version, not counting the cost of the second video card. You should also get a powerful power supply with a 24-pin plug. Owners of LCD monitors may run into problems as well: most displays have a "native" resolution of 1280x1024, but the performance potential of an SLI system with two GeForce 6800 cards can currently be revealed only at 1600x1200. If you reduce the resolution, the CPU becomes the bottleneck, so you will have to spend money on a more powerful processor.

In general, an SLI system is not cheap. In the following sections, we share our experience and test results with the first SLI motherboards from ASUS and MSI.

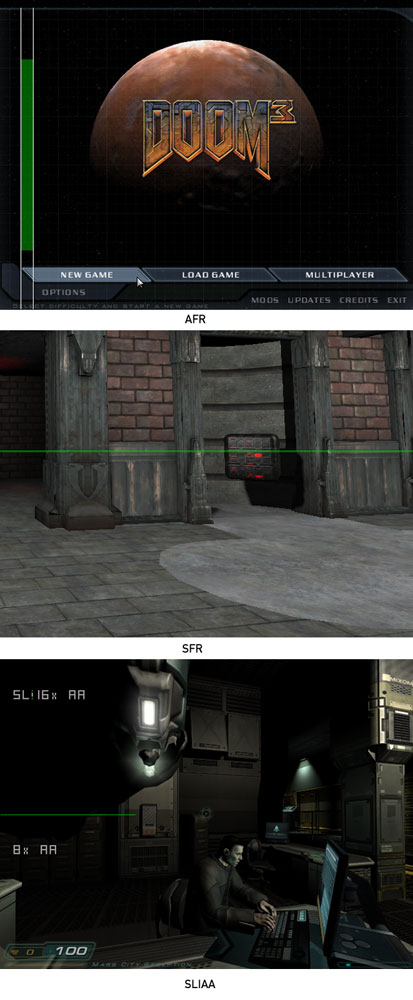

At the moment, nVidia offers three different modes for SLI.

- Compatibility Mode.

- Alternate Frame Rendering (AFR).

- Split Frame Rendering (SFR).

In compatibility mode, only one of the two cards works, and there is no performance gain. In AFR mode, one card renders all even frames and the other renders all odd frames. That is, rendering is divided between the two cards frame by frame.

In SFR mode, the screen is divided into two parts. The first card renders the top of the picture, and the second renders the bottom. Thanks to dynamic load balancing, the driver distributes the load evenly between the two cards.
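The difference between AFR and SFR can be illustrated with a toy model. The sketch below is not the driver's actual algorithm, which the article does not describe; the function names, the 5% balancing step, and the frame timings are assumptions made purely for illustration.

```python
from typing import Tuple


def afr_gpu_for_frame(frame_index: int, num_gpus: int = 2) -> int:
    """AFR: whole frames alternate between GPUs (even frames -> GPU 0, odd -> GPU 1)."""
    return frame_index % num_gpus


def sfr_split(height: int, top_share: float) -> Tuple[range, range]:
    """SFR: GPU 0 renders the top rows of the frame, GPU 1 renders the bottom rows."""
    boundary = round(height * top_share)
    return range(0, boundary), range(boundary, height)


def rebalance(top_share: float, top_ms: float, bottom_ms: float, step: float = 0.05) -> float:
    """Toy load balancer: shrink the part of the screen given to the slower GPU."""
    if top_ms > bottom_ms:
        top_share -= step
    elif bottom_ms > top_ms:
        top_share += step
    # Keep the split within sane limits so neither GPU is left idle.
    return min(max(top_share, 0.1), 0.9)


print([afr_gpu_for_frame(i) for i in range(6)])
top_rows, bottom_rows = sfr_split(1200, 0.5)
print(len(top_rows), len(bottom_rows))
print(rebalance(0.5, top_ms=12.0, bottom_ms=8.0))
```

In this model, AFR needs no balancing because each GPU gets a whole frame, while SFR has to move the dividing line whenever one half of the picture (for example, a detailed ground area versus an empty sky) takes longer to render than the other.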

nVidia explains the specifics of SLI rendering in some detail in two documents (in English): the GPU Programming Guide and the SLI Developer FAQ.

For our SLI test, we received from nVidia two reference GeForce 6800 Ultra and 6800 GT cards with the PCI Express interface. Outwardly, these cards differed only in the size of the heatsink. Both models have two DVI-I outputs (VGA output is provided via an adapter) and a TV-out. As usual for PCI Express cards, the PEG (PCI Express Graphics) power connector is located at the rear of the card. Since only the latest power supplies have such connectors, you may need adapters.

Unfortunately, we could not quickly find two SLI-compatible GeForce 6600 GT cards: the two card models in our lab did not support SLI, despite having suitable connectors.

SLI configuration on nVidia GeForce 6800 Ultra cards.

SLI configuration on nVidia GeForce 6800 GT cards.

The SLI configuration on nVidia GeForce 6600 GT cards did not work.

You cannot do without a bridge for SLI. The distance between the graphics cards is fixed by the motherboard. Each motherboard manufacturer uses a different distance between the x16 slots, so they include a matching bridge in the package. We received two adjustable bridges from nVidia that cover any required distance.

Different SLI bridges.

Without an SLI bridge, the driver issues a warning. SLI mode will still work, but in a very limited way (see the performance analysis).

The first experience of SLI

Hardware

As you know, we were looking forward to the release of SLI. Back in March, when we published an article on the PCI Express bus, we anticipated future multi-card solutions. Naturally, nVidia's subsequent announcement of such a solution captured all our attention.

However, after the first motherboards with SLI support appeared, our enthusiasm quickly faded. Our test configuration failed constantly: the GeForce 6800 and 6600 GT PCIe cards were either not recognized at all, or the computer crashed a few seconds after booting. After many unsuccessful attempts to launch the SLI system, we found the reason: the cards were too "old". Our models were very early nVidia samples, and, according to the manufacturer, they do not work properly with SLI. However, these cards have not been on sale for a long time, so this hardly deserves special attention. The latest 6800 Ultra and GT cards work with SLI without any problems. This, of course, raises the question: can you later buy a second video card for SLI from a different manufacturer? We do not know the answer yet.

We also had considerable problems with the SLI motherboards. The ASUS A8N-SLI board completely reset the BIOS every time we restarted the computer, and this problem was not limited to our test board. Because of limited testing time, we could not exchange the board for another one. As it turned out later, the cause of the failure was a batch of defective BIOS chips. Fortunately, the BIOS chip is socketed, so we were able to replace it quickly. After that, the A8N-SLI Deluxe worked without any problems.

The MSI K8N Diamond, on the other hand, had memory problems in SLI mode. Our Kingston HyperX memory modules running at DDR400 with 2/2/2/6 timings caused constant system crashes. The computer booted and worked with desktop applications and even with Prime95 at 2/2/2/6, but crashed immediately in most games when SLI mode was enabled. With a single card, this problem does not occur. Only after we relaxed the timings to 2.5/3/3/7 did the MSI K8N Diamond work stably in SLI mode. Our test board also had problems with memory bandwidth. Even at 2/2/2/6 timings, the Sandra test reported a memory bandwidth of only 4.9 GB/s, which corresponds to DDR333! For comparison, the ASUS A8N-SLI board delivers slightly less than 6 GB/s.
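To put the Sandra figures in context, the theoretical peak bandwidth of DDR memory can be estimated from the transfer rate. The sketch below assumes a dual-channel configuration with a 64-bit (8-byte) bus per channel, which the article does not state explicitly; the function name is hypothetical.

```python
def ddr_peak_bandwidth_gb_s(transfers_mt_s: float, channels: int = 2, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (10^9 bytes/s) for DDR memory.

    Assumption: `channels` independent channels, each `bus_bytes` wide.
    """
    return transfers_mt_s * 1e6 * bus_bytes * channels / 1e9


ddr400_peak = ddr_peak_bandwidth_gb_s(400)
ddr333_peak = ddr_peak_bandwidth_gb_s(333)

measured_msi = 4.9  # GB/s, Sandra result on the MSI board (from the text)

print(f"DDR400 peak: {ddr400_peak:.2f} GB/s")
print(f"DDR333 peak: {ddr333_peak:.2f} GB/s")
print(f"MSI result as a share of DDR400 peak: {measured_msi / ddr400_peak:.0%}")
```

Under these assumptions, the 4.9 GB/s measured on the MSI board sits below even the DDR333 theoretical peak, which is consistent with the remark that the board behaved as if it were running slower memory.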

We then had to relax the memory timings for SLI on the ASUS board as well, since it also crashed at 2/2/2/6. After switching to 2/3/3/6, the board worked stably. We believe and hope that these problems are due to the pre-production status of the motherboards and will not appear in retail versions. In recent weeks, ASUS and MSI have been in a real race to be the first to release a working SLI board. As the saying goes, haste makes waste, so problems were to be expected. Besides, most of them occur only in SLI mode.

After the hardware problems described above were eliminated, the SLI configuration was immediately recognized by the video card driver. After enabling SLI mode and restarting the computer, everything worked correctly. In a game, SLI operation becomes apparent only when you use the FRAPS frame counter. Image quality was no different from normal mode: we noticed no lines, flicker, or other artifacts. Only by enabling the SLI HUD mode in the driver, which shows how screen areas are distributed for rendering in SLI mode, can you observe SLI at work.

After installing the SLI system, the driver 66.93 correctly recognized everything.

By enabling HUD mode, you can see the load distribution between the two cards.

Nothing special needs to be done with the motherboard, apart from downloading the latest version of the nForce4 drivers.

After several tests, we noticed relatively low performance in two games. After turning on the SLI HUD in the driver, we saw that SLI was not activated in these games. Even forcing SLI in the advanced driver settings did not have the desired effect. After talking with nVidia representatives, we found out the cause: SLI really does not work in some games. nVidia uses so-called SLI profiles for games, which are defined in the driver. The driver recognizes the game through application detection and enables the SLI mode (split or alternate) specified in the profile. If no SLI profile exists for a game, SLI mode is not enabled. You cannot force SLI mode or create your own profile. According to nVidia, however, the driver already contains more than 50 game profiles that enable SLI mode. If a game is completely new, SLI owners will probably have to wait for a new driver release. But even then there is no guarantee that SLI mode will be enabled for any particular game.
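The profile mechanism described above amounts to a lookup keyed by the detected application. The sketch below only illustrates that idea: the executable names, the table contents, and the function name are hypothetical and do not reflect the real profile data in the nVidia driver.

```python
from typing import Optional

# Hypothetical profile table: executable name -> SLI rendering mode.
# The entries are invented for illustration only.
SLI_PROFILES = {
    "shooter.exe": "AFR",  # alternate frame rendering
    "racer.exe": "SFR",    # split frame rendering
}


def select_sli_mode(executable: str) -> Optional[str]:
    """Return the SLI mode from the profile, or None if the game has no profile.

    None models the behavior described in the text: without a profile,
    SLI stays off and only one card renders.
    """
    return SLI_PROFILES.get(executable.lower())


for exe in ["Shooter.exe", "racer.exe", "brand_new_game.exe"]:
    mode = select_sli_mode(exe)
    print(exe, "->", mode if mode else "no profile, single-card rendering")
```

This also shows why a brand-new game gains nothing from the second card until a driver update adds an entry for it.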

According to nVidia, some games are simply incompatible with SLI, for example Microsoft Flight Simulator 9 and Novalogic Joint Operations. At the time of publication, we do not know the exact reason. nVidia indicates only that these games use frame buffer techniques that cause problems with SLI. Overall, two of the 10 games included in our testing were incompatible with SLI.

Theoretically, the PCI Express standard allows an unlimited number of parallel PCI Express lanes, but the new desktop chipsets are limited to 20 lanes or even fewer. Given that the PEG (PCI Express for Graphics) slot already takes 16 lanes, only four remain for all other slots.

nVidia cheated a little with the nForce4 chipset. When working with one video card, all 16 lanes are available to it. But when switching to SLI mode, the 16 lanes are distributed between the two x16 slots, so each PEG slot works in x8 PCI Express mode.

In practice, however, this solution has almost no effect on performance, because the throughput of x8 PCIe corresponds to AGP 8x. As comparative testing of the x8 and x16 PCIe modes shows, this does not lead to tangible differences in performance.
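The lane arithmetic above can be checked with a few lines of code. The per-lane figure of about 250 MB/s per direction (first-generation PCI Express) and the AGP 8x peak of about 2.1 GB/s are commonly cited values that are not given in the article itself; the constant and function names are illustrative.

```python
PCIE1_MB_S_PER_LANE = 250  # assumption: PCIe 1.x, about 250 MB/s per lane, per direction
AGP_8X_MB_S = 2133         # assumption: AGP 8x peak, about 2.1 GB/s


def pcie_bandwidth_mb_s(lanes: int) -> int:
    """Peak one-way bandwidth of a PCIe 1.x link with the given number of lanes."""
    return lanes * PCIE1_MB_S_PER_LANE


chipset_lanes = 20                              # lane budget of the chipset (from the text)
peg_lanes = 16                                  # lanes taken by a single x16 graphics slot
lanes_left_for_other_slots = chipset_lanes - peg_lanes
sli_lanes_per_slot = peg_lanes // 2             # nForce4 SLI splits the 16 lanes in two

print("Lanes left for other slots:", lanes_left_for_other_slots)
print("Lanes per graphics slot in SLI:", sli_lanes_per_slot)
print("x16 link:", pcie_bandwidth_mb_s(16), "MB/s")
print("x8 link: ", pcie_bandwidth_mb_s(sli_lanes_per_slot), "MB/s")
print("AGP 8x:  ", AGP_8X_MB_S, "MB/s")
```

With these figures, an x8 link offers roughly the same peak bandwidth as AGP 8x, which matches the claim in the text.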

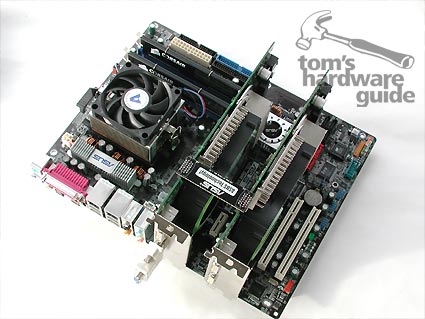

Motherboards with SLI support

ASUS supplies the A8N-SLI with a good set of accessories.

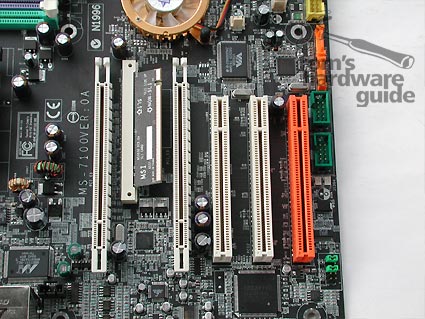

The ASUS A8N-SLI Deluxe board is well equipped. ASUS increased the distance between the x16 PCIe slots by placing two x1 PCIe slots between them. A small card selects operation with one or two graphics cards, depending on which end is inserted. The "EZ Switch" spring clips make the process easier. In SLI mode, you need to connect an additional Molex power plug (EZ Plug). The reason is that the PCI Express specification allows a maximum power of 75 watts per x16 slot. According to ASUS, SLI systems without the additional power connection may have problems with power supplies that have 20-pin ATX plugs. In the manual, ASUS recommends ATX 2.0 power supplies with 24-pin plugs, even when the additional power connector is used. Unlike MSI, ASUS explains that two GeForce 6800 Ultra cards can load the 12 V line of the power supply with up to 14.8 A. If you use an Athlon FX-55, add another 8.6 A. The old 20-pin ATX (1.0) power plugs provide only one 12 V line. As a result, the high continuous load on this line can cause the power supply to fail. New ATX 2.0 power supplies use a 24-pin connector with two 12 V lines, which distributes the load between them. Thanks to the ASUS EZ Plug, the power supply can distribute the load even better. ASUS recommends a power supply of 450 W or more for SLI systems with an Athlon FX-55 and GeForce 6800 Ultra, since these components alone will require at least 281 watts.
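The 281 W figure follows directly from the currents quoted by ASUS. The short calculation below reproduces it using P = U * I on the 12 V line; the variable names are illustrative, and the result covers only the two video cards and the CPU, not the rest of the system.

```python
RAIL_VOLTAGE_V = 12.0

gpu_current_a = 14.8  # two GeForce 6800 Ultra cards on the 12 V line (ASUS figure)
cpu_current_a = 8.6   # Athlon FX-55 on the 12 V line (ASUS figure)

total_current_a = gpu_current_a + cpu_current_a
total_power_w = total_current_a * RAIL_VOLTAGE_V

recommended_psu_w = 450
share_of_psu = total_power_w / recommended_psu_w

print(f"12 V load: {total_current_a:.1f} A")
print(f"Power:     {total_power_w:.0f} W")
print(f"Share of a {recommended_psu_w} W supply: {share_of_psu:.0%}")
```

The combined load of 23.4 A on a single 12 V line is what makes old single-rail ATX 1.0 supplies risky here, and it explains why the recommended 450 W leaves headroom for drives, memory, and the motherboard.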

The only drawback, in our opinion, is the active cooling of the north bridge. Experience shows that such a fan can fail relatively quickly. It would have been better if ASUS had used only a heatsink.

The A8N-SLI motherboard.

This small card switches the motherboard between one-slot and two-slot modes.

ASUS provides two additional x1 PCI Express slots and three PCI slots.

Additional power for the second video card in SLI mode. If you forget to connect it, a red LED lights up when the second card is used.

At first glance, the MSI K8N Diamond board is very similar to the ASUS model. However, MSI placed the two x16 slots closer to each other. Between them there is also a small card for setting the operating mode (one or two video cards), but here it is mounted at an angle. MSI decided not to add extra PCI Express slots. No additional power is provided for the second x16 slot either. For the two-card system to work without problems, a powerful power supply with a 24-pin plug is recommended. According to MSI, a 20-pin ATX plug can also be used if the power supply delivers at least 18 A on the 12 V line. Here the statements of MSI and ASUS differ somewhat, since the latter indicates that an SLI system requires more.

The north bridge fan is located next to the second x16 slot, and the video card covers it. However, the fan is thin, so it does not get in the way of the card.

The K8N Diamond motherboard.

A small card switches the motherboard into a mode with one or two graphics cards.

MSI does not provide additional x1 PCI Express slots, so you'll have to settle for three PCI slots.

MSI recommends a 450 W power supply for SLI systems. When using a 20-pin ATX plug, make sure that the 12 V line delivers at least 18 A.

The lack of additional power can lead to problems with ATX power supplies, equipped with 20-pin plugs.

Test configuration

We tested the SLI configuration with GeForce 6800 Ultra and GT cards on both the ASUS and MSI motherboards. To test the performance of a single video card, we used only the ASUS board. As the processor, we took the Athlon 64 4000+ (it corresponds to the Athlon 64 FX-53) and equipped the system with 1 GB of memory. We used an Antec True Control ATX (1.0) power supply with a 20-pin plug and encountered no problems during testing. We also tried a 760-watt workstation power supply to check whether the stability problems were related to insufficient power.

Due to the stability problems described above, we decided not to run overclocking tests.

| Test system 1 | |
| --- | --- |
| CPU | AMD Athlon 64 4000+ |
| Motherboard | ASUS A8N-SLI Deluxe (nForce4 SLI) |
| Memory | |
| HDD | |
| DVD | Hitachi GD-7000 |
| LAN | Netgear FA-312 |
| Power Supply | Antec True Control 550W |

| Test system 2 | |
| --- | --- |
| CPU | AMD Athlon 64 4000+ |
| Motherboard | MSI K8N Diamond (nForce4 SLI) |
| Memory | 2x Kingston DDR400 KHX3200 / 512, 1024 MB |
| HDD | Seagate Barracuda 7200.7 120GB S-ATA (8 MB) |
| DVD | Hitachi GD-7000 |
| LAN | Netgear FA-312 |
| Power Supply | Antec True Control 550W |

| Drivers and configuration | |
| --- | --- |
| Video Cards | ATi Catalyst v4.11; nVidia v66.93 |
| Chipset | Intel Inf. Update |
| OS | Windows XP Prof. SP1a |
| DirectX | DirectX 9.0c |

| Video cards used for tests | |
| --- | --- |
| ATi | Radeon X800 XT PE PCIe (520/560 MHz, 256 MB) |
| nVidia | GeForce 6800 Ultra PCIe (420/550 MHz, 256 MB); GeForce 6800 GT PCIe (350/500 MHz, 256 MB); GeForce 6600 GT PCIe (420/550 MHz, 128 MB) |

| Tests | |
| --- | --- |
| 3DMark 2005 | Standard Resolution |
| Unreal Tournament 2004 | Custom Timedemo; Max Details / Quality; Map: Assault-FallenCity |
| Call Of Duty v1.4 | Max Details / Quality; Demo: VGA |
| Doom3 | Demo: Demo1; High Quality |
| FarCry v1.3 | Custom Timedemo cooler01; Very High Quality; Flashlight: On |
| Flight Simulator 2004 | Custom Video; Custom Quality; Scenario: Hong Kong at Dusk |
| Battlefield Vietnam v1.2 | FRAPS; Max Details / Quality; Map: Ho Chi Minh Trail |
| The Sims 2 | FRAPS; Max Details / Quality; Custom Scene |
| Half-Life 2 | Three custom Timedemos; Max Details / Quality |

Test results

Unreal Tournament 2004, special timedemo (level of Fallen City, Assault):

Call of Duty, version 1.4, built-in timedemo timedemo1:

Doom 3, built-in timedemo demo1

FarCry, version 1.3, special timedemo cooler01, the flashlight is on

The Sims 2, the selected Goth Family scene (5 seconds with FRAPS)

Flight Simulator 2004, version v9.1, a specially recorded video, the scenario "Hong Kong At Dusk" (FRAPS):

Battlefield Vietnam, version 1.2, the map "Ho Chi Minh Trail", Gameplay Measurement (FRAPS)

Half-Life 2, special timedemo THG2

Half-Life 2, special timedemo THG5

Half-Life 2, special timedemo THG8

Performance Analysis

Our first tests immediately showed that SLI mode requires a very powerful CPU. With the fastest GeForce 6800 GT and Ultra cards in SLI mode, even the fastest processor cannot supply them with data quickly enough. The full power of SLI is noticeable only at high resolutions of 1280x1024 or, better, 1600x1200 with FSAA and anisotropic filtering enabled, that is, in the scenarios that load modern graphics cards the most. At lower resolutions with FSAA and AF disabled, SLI mode provides little benefit. In some games, switching to SLI even caused a slight slowdown (UT2004), which is probably due to the additional computational cost of load distribution that SLI places on the CPU. We also found a performance drop in SLI mode compared to a single card in Flight Simulator 2004, even though FS2004 does not support SLI mode at all. In other words, if only one card works in an SLI system, performance is lower than on a computer where only one card is installed. Not by much, but noticeably.

Below is an example from Unreal Tournament 2004. We used the GeForce 6800 GT at a resolution of 1024x768 without FSAA and AF. Both in SLI mode and in normal mode, the CPU was the bottleneck: it could not keep up with the graphics cards and supply them with enough data. The more powerful the processor, the higher the performance:

- SLI mode is enabled (2 cards): 157.2 FPS;

- SLI mode is off (2 cards): 163.2 FPS;

- one card (1 card): 163.4 FPS.

While this phenomenon can be explained in UT2004 and other games (SLI load distribution probably places additional calculations on the processor), we do not understand why it occurs in games that do not support SLI, where only one card works even with SLI enabled (Flight Simulator 2004). Incidentally, in OpenGL games such as Call of Duty, this problem is less noticeable.

If a game uses shaders heavily (for example, Doom 3 and FarCry), the performance gain from SLI at high resolutions with quality settings enabled compensates for any shortcomings.

The type of game also has a considerable effect on the effectiveness of SLI. Games with older 3D engines, such as Call of Duty, benefit from SLI only at high resolutions with quality settings enabled. But performance there is already very high without SLI, so this causes no problems. Modern games that use shaders heavily (for example, Doom 3 and FarCry), by contrast, show the advantage of SLI even at low resolutions.

To dispel any doubts about the performance degradation observed at low resolutions in SLI, we compared the performance of a single card in x8 and x16 PCIe modes. The results were completely identical.

Finally, our last measurement concerns UT2004 without the SLI bridge connected, at 1600x1200 with 4xAA / 8xAF:

- with the SLI bridge (2 cards): 153.3 FPS;

- without the SLI bridge (2 cards): 133.0 FPS;

- one card (1 card): 101.1 FPS.
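The UT2004 measurements quoted in this section are easier to compare as percentage changes relative to a single card. The snippet below is a simple illustration using only the FPS values from the text; the function and variable names are hypothetical.

```python
def relative_change(fps: float, baseline_fps: float) -> float:
    """Percentage change of fps relative to baseline_fps."""
    return (fps / baseline_fps - 1.0) * 100.0


# UT2004, GeForce 6800 GT, 1024x768, no FSAA/AF (CPU-limited case)
single_low_res = 163.4
sli_low_res = 157.2

# UT2004, 1600x1200, 4xAA / 8xAF (GPU-limited case)
single_high_res = 101.1
sli_with_bridge = 153.3
sli_without_bridge = 133.0

results = [
    ("SLI at 1024x768", sli_low_res, single_low_res),
    ("SLI with bridge at 1600x1200", sli_with_bridge, single_high_res),
    ("SLI without bridge at 1600x1200", sli_without_bridge, single_high_res),
]

for label, fps, baseline in results:
    print(f"{label}: {relative_change(fps, baseline):+.1f}% vs. one card")
```

The numbers work out to a loss of about 4% in the CPU-limited case, a gain of about 52% with the bridge, and about 32% without it at the high-quality settings, which shows both how much the bridge contributes and how far the result is from an ideal doubling.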

Besides standard SLI operation, these motherboards allow two more scenarios. Instead of having the two video cards work together, you can use them to connect up to four monitors to the computer. This mode is not limited to nVidia cards: we had no problems installing an X800 or X700. After booting, Windows recognized the second card and installed the driver.

At the same time, the latest ATi CCC drivers (Catalyst v4.11) have some problems recognizing this mode. The Display Manager Wizard recognizes only the outputs of the first card, although the Display Manager shows three monitors. In either case, you cannot change parameters such as resolution for all monitors: CCC lets you set them only for the first X800 card. When you try to click on a display connected to the other card, CCC immediately jumps back to the first display. On the other hand, adjusting the settings in the Windows Display Manager works without any problems.

Microsoft Flight Simulator 9 ran without problems in two-display and three-display modes on the X800 and X700 cards. However, the "3D span" mode across two ATi cards is not yet possible. We will try to find out the reason from ATi and let you know later.

Now let us move on to nVidia. We installed a GeForce PCX 5750 in the second slot and a GeForce 6800 Ultra in the first. Windows again recognized the second card without any problems. Both cards appeared in the nVidia driver menu, so they can be configured separately. However, the "span" mode for combining three monitors into one large screen (like the Matrox Surround Gaming feature) is impossible with nVidia cards, even if a GeForce 6800 Ultra is installed as the second card. The "span" mode is limited to a single card, which is a pity. For some reason, the nView Display Wizard was unavailable in the nView settings when two cards were used.

To sum up: support for three or four displays through two video cards on SLI motherboards still needs driver work. If the "span" mode were not limited to one card and two displays, we would get a good configuration for surround gaming. After all, with Matrox, which developed Surround Gaming, all three monitors work from a single card.


Finally, we could not deny ourselves the pleasure of one last test. Back in the Voodoo2 days, users could run 3D games either through a standard graphics card or through a separate Voodoo2 card. So why not install a Radeon X800 XT PE together with a GeForce 6800 Ultra and connect both cards to a monitor with two inputs? That is what we did.

Installation of ATi Catalyst v4.11 driver together with nVidia Detonator v66.93 did not cause any problems. After the restart, the ATi driver failed because it could not activate the second display. After we corrected the situation in windows menu Display, we have access to the ATi driver options.

ATi and nVidia in harmony - at least on our computer.

Now the ATi and nVidia icons appear on the taskbar.

On the first card, the GeForce 6800, games launched without problems. After we switched the primary display to the ATi X800, we got a D3D error when starting a game. Restarting the computer did not help.

We then swapped the cards, installing the X800 in the upper slot, and the system did not work at all. After the graphics cards were detected and the system rebooted, the GeForce 6800 driver could not load correctly, although the card was still present in Device Manager.

But we do not give up so easily! We went back to the configuration with the GeForce 6800 Ultra in the first slot and the X800 XT in the second. Again, we set the X800 as the primary monitor. In addition, we turned off the GeForce 6800 display by unchecking the "Extend my Windows desktop onto this monitor" option. We restarted, and now all 3D games ran on the X800 as well!

Below are step-by-step instructions. At first, only the GeForce 6800 Ultra is active. The nVidia and ATi drivers are installed as described above.

3D Mark 2003 runs on the GeForce 6800 Ultra.

In the Windows Display Manager, the Radeon X800 XT card is disabled (display 2).

After clicking on the second display, select the option "Extend my desktop on this monitor" and then the "Use this device as the primary monitor" option and click "Apply". Now switch your monitor to the second VGA input.

Now select the first display with the mouse and turn it off by unchecking the option "Extend my desktop on this monitor".

After you click "Apply", the GeForce 6800 card will be turned off.

Running a 3D application produces an error message - time to reboot!

When the PC is booted, the screen will initially be black, as all boot messages are output through the first card (GF 6800), and our monitor's input is connected to the X800. After loading Windows, a picture appears, and the GeForce 6800 card turns off.

After the reboot: run 3D Mark 2005 - all is well!

Switching back to the GeForce 6800 works exactly the same way. For some reason, though, the GF 6800 first starts with a color depth of only 4 bits! Do not worry: after rebooting the computer, you can set the correct resolution and color depth.

Of course, this mode cannot be called convenient, since it requires a restart, but it works. You can choose between ATi and nVidia cards on one computer!

Conclusion

This could be expected: a computer with SLI support, equipped with two GeForce 6800 Ultra or GT cards, outperforms all the others! The performance reserves of the SLI system are simply superb. Even with the Athlon 64 4000+, performance was almost always limited by the CPU, which simply could not prepare and feed data to the cards fast enough. Only at 1600x1200 with 4x FSAA and 8x anisotropic filtering did the SLI cards get a sufficient load. The type of game also strongly affects the gain that SLI provides. If the game makes heavy use of shaders (for example, Doom 3 and FarCry), the advantage of SLI is noticeable even at low resolutions. In relatively old games such as UT 2004 and Call of Duty, the gain is smaller. By the way, even Half-Life 2 gets a relatively small boost from SLI. Note also that some games will not start at all in SLI mode.
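The CPU limit described above can be pictured with a toy model. The Python sketch below is only an illustration: the function `delivered_fps`, its `scaling` parameter and all frame rates in the usage lines are hypothetical and were not measured in this review. It assumes the frame rate is simply the smaller of what the CPU can feed and what the cards can draw.

```python
def delivered_fps(cpu_fps_limit, single_gpu_fps, gpus=2, scaling=1.0):
    """Toy model: the slower of the CPU and the GPU group sets the frame rate.

    scaling is the assumed extra throughput per added card
    (1.0 = perfect doubling with two cards). All inputs are hypothetical.
    """
    gpu_fps = single_gpu_fps * (1 + (gpus - 1) * scaling)
    return min(cpu_fps_limit, gpu_fps)


# Light settings: one card already nearly saturates the CPU, so SLI adds little.
print(delivered_fps(cpu_fps_limit=100, single_gpu_fps=90))
# Heavy settings (high resolution, FSAA): the cards are the limit, so SLI pays off.
print(delivered_fps(cpu_fps_limit=100, single_gpu_fps=40))
```

In this model the second card helps only when the single card, not the CPU, is the bottleneck, which matches the observation that SLI needed 1600x1200 with FSAA and anisotropic filtering to show its strength.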

The performance of an SLI system with two GeForce 6600 GT cards will be evaluated only once new test cards arrive at our laboratory. One thing is clear right now: an SLI system is not easy to put together.

When buying an SLI system, be aware that not all games support this mode. If the driver does not find a suitable SLI profile for a game, the game runs on one card even with SLI enabled, and performance may even drop compared to a system with only one card installed. This depends largely on the age of the game and the level of 3D technology it uses. Another problem, a financial one, is that retail prices for GeForce 6800 PCIe cards and SLI-compatible motherboards are still unknown. So far, we have heard of a premium of about $50 over standard nForce4 Ultra motherboards.

Among the tested SLI-compatible nForce4 motherboards, our choice fell on the ASUS A8N-SLI Deluxe, and not only because of its better performance. Although MSI will no doubt solve the memory bandwidth issue in the final version of its motherboard, the ASUS model provides two additional x1 PCI Express slots that the MSI board lacks. Another factor is the additional power connector for the second video card on the ASUS motherboard - it looks like the manufacturer took a more thoughtful approach here.

We do not agree with the fact that SLI today works only in games that nVidia has enabled. As with overclocking, it would be nice to have a driver option that forces SLI on, even if this leads to potential errors and crashes. After all, SLI technology is aimed at enthusiasts! Anyone willing to invest $1000 in the graphics subsystem of their computer can accept the risk that a game or the computer will sometimes crash in SLI mode. In our opinion, it is not much fun to wait for the next driver version or to use a leaked driver for a new game. This is hardly the way to treat the target audience that SLI is intended for.

Another use for SLI motherboards is configurations with more than two monitors. And some fanatics can install ATi and nVidia cards in their computer simultaneously.

Judging by tests of modern adapters with two top-end GPUs (Radeon HD 7990, GeForce GTX 690) or similar configurations of separate cards, NVIDIA SLI and AMD CrossFireX have largely outgrown their painful adolescence. In many games, a dual-GPU system shows almost double the performance of a single GPU of the same kind, while in others a gain of 50-70% can be expected. At least this is the case for AAA games. Less popular titles usually escape the attention of IT publications, and we cannot judge how well they are optimized for SLI and CrossFireX. In addition, the generally optimistic picture is spoiled by failures in individual games, where either performance still scales poorly or the developers favor one of the competing technologies and ignore the other.

In general, video cards with two GPUs have already proved their right to exist at the very top of the NVIDIA and AMD lineups. Meanwhile, SLI and CrossFireX connectors are now fitted even to adapters that are weak by gamers' standards, such as the Radeon HD 7770 and GeForce GTX 650 Ti BOOST. Every time another cheap video card with SLI or CrossFireX support falls into our hands, we want to check how a pair of these babies behaves. On the one hand, the interest is purely academic. In terms of the number of functional blocks, the processors in low-end gaming cards are roughly halves of the top-end GPUs. This offers another way to test the effectiveness of technologies that split the computational load between two GPUs: try to get performance from two halves close to 100% of the top processor's result.

Do we need it?

But we will also try to find some practical use in this experiment. In theory, a tandem of weak video cards can be a more profitable purchase than one top-end adapter. AMD's senior models are the long-standing Radeon HD 7970 and its overclocked version, the HD 7970 GHz Edition. Among NVIDIA products, the GeForce GTX 680 and GTX 770 form a similar pair. The Radeon HD 7790 and HD 7850 (for AMD) and the GTX 650 Ti BOOST and GTX 660 (for NVIDIA) serve as their approximate halves.

Compare the retail prices of these models. The Radeon HD 7790 with the standard 1 GB of memory can be bought in Moscow online stores for 4,100 rubles, and the 2 GB HD 7850 for 4,800 rubles. Meanwhile, the Radeon HD 7970 sells for 9,800 rubles, only 200 rubles more than a pair of HD 7850s. The HD 7970 GHz Edition, however, is hard to find for less than 14,000 rubles.
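The price arithmetic is easy to check. The short Python sketch below uses only the ruble prices quoted in this paragraph; the function name `single_card_premium` is ours and purely illustrative.

```python
# Retail prices in rubles, as quoted in the text.
PRICES_RUB = {
    "Radeon HD 7790": 4100,
    "Radeon HD 7850": 4800,
    "Radeon HD 7970": 9800,
    "Radeon HD 7970 GHz Edition": 14000,
}


def single_card_premium(single, paired, prices=PRICES_RUB):
    """How many rubles the single card costs above a pair of the cheaper card."""
    return prices[single] - 2 * prices[paired]


print(single_card_premium("Radeon HD 7970", "Radeon HD 7850"))              # 200
print(single_card_premium("Radeon HD 7970 GHz Edition", "Radeon HD 7850"))  # 4400
```

A negative result would mean the pair is the more expensive option; with these prices the pair of HD 7850s is always slightly cheaper than the single card.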

Thus, CrossFireX on two inexpensive AMD cards looks like a dubious venture, if only because the Radeon HD 7850, although it is half of the HD 7970 in the number of active compute units in the GPU, is significantly behind it in core and memory clock speeds. The HD 7790 runs its processor at 1 GHz, which partly compensates for its more modest configuration compared to the HD 7850, but its weak points are the 128-bit memory bus and, most importantly, the 1 GB of memory, which will definitely affect performance in heavy graphics modes (and those are exactly what such a tandem is built for).

From the standpoint of a pure experiment unburdened by financial considerations, the combined power of a pair of junior AMD adapters capable of CrossFireX (we leave out the Radeon HD 7770, since a pair of those would be completely ridiculous) falls slightly short of the flagship in compute unit configuration and GPU frequency. Let's keep this in mind when discussing the benchmark results. In favor of a pair of HD 7850s, however, is the 256-bit memory bus, something NVIDIA cannot offer in this price segment.

| Model | Radeon HD 7790 | Radeon HD 7850 | HD 7970 | HD 7970 GHz Edition |
|---|---|---|---|---|
| Main Components | | | | |
| GPU | Bonaire | Pitcairn Pro | Tahiti XT | Tahiti XT |
| Number of transistors, million | 2080 | 2800 | 4313 | 4313 |
| Process technology, nm | 28 | 28 | 28 | 28 |
| Clock speed GPU, MHz: Base Clock / Boost Clock | 1000 / ND | 860 / ND | 925 / ND | 1000/1050 |
| Stream Processors | 896 | 1024 | 2048 | 2048 |
| Texture blocks | 56 | 64 | 128 | 128 |
| ROP | 16 | 32 | 32 | 32 |
| Video memory: type, volume, MB | GDDR5, 1024 | GDDR5, 2048 | GDDR5, 3072 | GDDR5, 3072 |
| Memory bus width, bit | 128 | 256 | 384 | 384 |
| Memory clock speed: real (effective), MHz | 1500 (6000) | 1200 (4800) | 1375 (5500) | 1500 (6000) |
| Interface | PCI-Express 3.0 x16 | | | |
| Image output | | | | |
| Interfaces | 1 x DL DVI-I, 1 x DL DVI-D, 1 x HDMI 1.4a, 1 x DisplayPort 1.2 | 1 x DL DVI-I, 1 x HDMI 1.4a, 2 x Mini DisplayPort 1.2 | | |
| Max. resolution | VGA: 2048x1536, DVI: 2560x1600, HDMI: 4096x2160, DP: 4096x2160 | | | |
| Typical power consumption, W | 85 | 130 | 250 | 250+ |
| Average retail price, rub. | 4 100 | 4 800 | 9 800 | 14 000 |

The price spread among NVIDIA video cards is more favorable to building a tandem of two lower-performance cards instead of buying a single powerful adapter. The GeForce GTX 650 Ti BOOST can be bought from 5,200 rubles and the GeForce GTX 660 from 5,800 rubles. Both have two gigabytes of onboard memory. At the same time, prices for the GeForce GTX 680 and the newcomer GTX 770, which is essentially an updated GTX 680, start at 13,000 rubles. By its specifications, the GTX 650 Ti BOOST, the least powerful graphics accelerator in the NVIDIA range that supports SLI, corresponds to half of the GTX 680 (GTX 770) and even has more than half as many ROPs, but it runs at lower frequencies. The GTX 660, at the same frequency as the GTX 650 Ti BOOST, packs more CUDA cores and texture units. Both models are held back by a relatively narrow 192-bit memory bus.

| Model | GeForce GTX 650 Ti BOOST | GeForce GTX 660 | GTX 680 | GTX 770 |
|---|---|---|---|---|
| Main Components | | | | |
| GPU | GK106 | GK106 | GK104 | GK104 |
| Number of transistors, million | 2 540 | 2 540 | 3 540 | 3 540 |
| Process technology, nm | 28 | 28 | 28 | 28 |
| Clock speed GPU, MHz: Base Clock / Boost Clock | 980/1033 | 980/1033 | 1006/1058 | 1046/1085 |
| Stream Processors | 768 | 960 | 1536 | 1536 |
| Texture blocks | 64 | 80 | 128 | 128 |
| ROP | 24 | 24 | 32 | 32 |
| Video memory: type, volume, MB | GDDR5, 1024/2048 | GDDR5, 2048 | GDDR5, 2048 | GDDR5, 2048 |
| Memory bus width, bit | 192 | 192 | 256 | 256 |
| Memory clock speed: real (effective), MHz | 1502 (6008) | 1502 (6008) | 1502 (6008) | 1753 (7010) |
| Interface | PCI-Express 3.0 x16 | | | |
| Image output | | | | |
| Interfaces | 1 x DL DVI-I, 1 x DL DVI-D, 1 x HDMI 1.4a, 1 x DisplayPort 1.2 | | | |
| Max. resolution | - | - | - | - |
| Typical power consumption, W | 140 | 140 | 195 | 230 |
| Average retail price, rub. | 5 200 | 5 800 | 13 000 | 13 000 |
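The "half of a flagship" claim can be checked against the unit counts in the table. The Python sketch below is a simple illustration; the `Gpu` class and the `pair_to_flagship` helper are our own hypothetical names, and the comparison deliberately ignores clock speeds and memory bus width.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    cores: int  # CUDA cores (the "Stream Processors" row)
    tmus: int   # texture blocks
    rops: int


# Unit counts copied from the table above.
GTX_650_TI_BOOST = Gpu("GeForce GTX 650 Ti BOOST", 768, 64, 24)
GTX_660 = Gpu("GeForce GTX 660", 960, 80, 24)
GTX_680 = Gpu("GeForce GTX 680", 1536, 128, 32)


def pair_to_flagship(small, big):
    """Unit counts of two small GPUs as a fraction of one big GPU."""
    return {
        "cores": 2 * small.cores / big.cores,
        "tmus": 2 * small.tmus / big.tmus,
        "rops": 2 * small.rops / big.rops,
    }


print(pair_to_flagship(GTX_650_TI_BOOST, GTX_680))
print(pair_to_flagship(GTX_660, GTX_680))
```

By this count a pair of GTX 650 Ti BOOST cards matches the GTX 680 in CUDA cores and texture blocks and exceeds it in ROPs, while a pair of GTX 660 cards has a quarter more cores and texture blocks than the flagship.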

Deceptive gigabytes

A significant problem in any multi-GPU configuration is the amount of video memory. The memory of the individual video cards does not add up into one large pool. Instead, its contents are duplicated. Thus, even if the combined computing power of two cut-down GPUs is comparable to that of a single flagship core, the tandem has a frame buffer the size of one card's memory. And heavy graphics modes are extremely demanding on memory. Take the games known for their thirst for extra megabytes that we use as benchmarks: Metro 2033 at 2560x1440 with maximum settings takes up to 1.5 GB of video memory, Far Cry 3 takes 1.7 GB, and Crysis 3 almost 2 GB.
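To make the point concrete, here is a minimal Python sketch of the mirrored-memory rule. The memory figures are the ones quoted above, with "almost 2 GB" for Crysis 3 rounded up to 2.0 as an assumption; the function names are hypothetical.

```python
# Peak video memory use at 2560x1440 with maximum settings, in GB (from the text;
# the Crysis 3 figure is "almost 2 GB", rounded up here as an assumption).
GAME_NEEDS_GB = {"Metro 2033": 1.5, "Far Cry 3": 1.7, "Crysis 3": 2.0}


def usable_memory_gb(per_card_gb, cards=2):
    """Memory contents are duplicated on every card, so extra cards add nothing."""
    return per_card_gb


def games_that_fit(per_card_gb, cards=2):
    limit = usable_memory_gb(per_card_gb, cards)
    return [game for game, need in GAME_NEEDS_GB.items() if need <= limit]


print(games_that_fit(1.0))  # two 1 GB cards: []
print(games_that_fit(2.0))  # two 2 GB cards: all three games
```

Two 1 GB cards still offer only 1 GB to the game, so none of the three benchmarks fits, while a pair of 2 GB cards accommodates all of them.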

And since it is precisely for such games and settings that we would resort to such an odd solution as two relatively budget cards in SLI / CrossFireX, video adapters with less than 2 GB of memory do not suit us. In this respect NVIDIA products are a little better off, since even the GeForce GTX 650 Ti BOOST comes in a 2 GB version according to official specifications, not to mention the GTX 660. Besides, even the GTX 680 has no more memory than that (only the GTX 770 allows versions with 4 GB of GDDR5).

The Radeon HD 7790 was conceived as an adapter with only 1 GB of memory, although AMD partners produce models with a doubled frame buffer on their own initiative. The 1 GB version of the HD 7850, on the contrary, officially ceased to exist with the appearance of the HD 7790. And most importantly, the flagship Radeon HD 7970 GHz Edition comes with 3 GB of memory - one and a half times more than AMD's mid-range cards.

In general, let's stop guessing. Frankly, the performance of a pair of mid-range gaming cards in SLI or CrossFireX is hard to predict from bare theory. We need to test, and we are ready for any surprises. But before moving on to practice, let's discuss one more aspect of our exotic project: power consumption. Based on the typical power of AMD adapters, an HD 7850 tandem should not consume much more than a single Radeon HD 7970: 2x130 W versus 250 W, respectively. A pair of HD 7790s is even more economical at twice 85 W.

NVIDIA cards of comparable performance are more power-hungry. The GeForce GTX 650 Ti BOOST and GTX 660 have the same TDP of 140 W. In a pair, these adapters draw far more than the GeForce GTX 680 (195 W) and even the GTX 770 (230 W).
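The power budget can be tallied the same way. The sketch below uses only the typical power figures quoted in the text and the tables; `extra_power_w` is a hypothetical helper name, and summing nameplate values is of course only an estimate of real consumption.

```python
# Typical power consumption in watts, as quoted in the article.
TDP_W = {
    "Radeon HD 7790": 85,
    "Radeon HD 7850": 130,
    "Radeon HD 7970": 250,
    "GeForce GTX 650 Ti BOOST": 140,
    "GeForce GTX 660": 140,
    "GeForce GTX 680": 195,
    "GeForce GTX 770": 230,
}


def extra_power_w(paired, single, tdp=TDP_W):
    """Watts a pair of `paired` cards draws above one `single` card (nameplate sum)."""
    return 2 * tdp[paired] - tdp[single]


print(extra_power_w("Radeon HD 7850", "Radeon HD 7970"))    # 10
print(extra_power_w("Radeon HD 7790", "Radeon HD 7970"))    # -80
print(extra_power_w("GeForce GTX 660", "GeForce GTX 680"))  # 85
print(extra_power_w("GeForce GTX 660", "GeForce GTX 770"))  # 50
```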

Participants in testing

Our task is to assemble four dual-GPU configurations: based on the GeForce GTX 650 Ti BOOST and GeForce GTX 660 on the one hand, and on the Radeon HD 7790 and HD 7850 on the other, and then compare their performance with that of powerful single-GPU adapters.

- 2 x GeForce GTX 660 (980/6008 MHz, 2 GB)

- 2 x GeForce GTX 650 Ti BOOST (980/6008 MHz, 2 GB)

- 2 x Radeon HD 7850 (860/4800 MHz, 2 GB)

- 2 x Radeon HD 7790 (1000/6000 MHz, 1 GB)

And these models were chosen as competitors:

- AMD Radeon HD 7970 GHz Edition (1050/6000 MHz, 3 GB)

- AMD Radeon HD 7970 (925/5500 MHz, 3 GB)

- AMD Radeon HD 7950 (800/5000 MHz, 3 GB)

- NVIDIA GeForce GTX 780 (863/6008 MHz, 3 GB)

- NVIDIA GeForce GTX 770 (1046/7012 MHz, 2 GB)

- NVIDIA GeForce GTX 680 (1006/6008 MHz, 2 GB)

- NVIDIA GeForce GTX 670 (915/6008 MHz, 2 GB)

Testing participants, in detail

Most of the cards in the multi-GPU configurations were provided for testing by NVIDIA partners and differ from reference-design adapters in clock speeds. For the purity of the experiment, they had to be brought to reference specifications. However, out of respect for the manufacturers' work, we briefly list the merits of each participant below.

- AMD Radeon HD 7850

- ASUS GeForce GTX 650 Ti BOOST DirectCU II OC (GTX650TIB-DC2OC-2GD5)

- ASUS Radeon HD 7790 DirectCU II OC (HD7790-DC2OC-1GD5)

- Gainward GeForce GTX 650 Ti BOOST 2GB Golden Sample (426018336-2876)

- GIGABYTE Radeon HD 7790 (GV-R779OC-1GD)

- MSI GeForce GTX 660 (N660 TF 2GD5 / OC)

- ZOTAC GeForce GTX 660 (ZT-60901-10M)

ASUS GeForce GTX 650 Ti BOOST DirectCU II OC (GTX650TIB-DC2OC-2GD5)

ASUS sent us a slightly overclocked version of the GeForce GTX 650 Ti BOOST 2 GB with the core frequency raised by 40 MHz over the reference specification. The board is fitted with the well-known DirectCU II cooling system, whose heat pipes are in direct contact with the GPU die. Hence the name DirectCU. However, unlike more expensive models, the GTX 650 Ti BOOST cooler has only two heat pipes rather than three.

Gainward GeForce GTX 650 Ti BOOST 2GB Golden Sample (426018336-2876)

Gainward also produces an overclocked version of the GeForce GTX 650 Ti BOOST, which differs from the ASUS implementation in that the base GPU frequency is raised by only 26 MHz, while the effective video memory frequency gains 100 MHz. The cooler is also of the open type and contains two copper heat pipes.

MSI GeForce GTX 660 (N660 TF 2GD5 / OC)

The MSI version of the GeForce GTX 660 is equipped with a solid Twin Frozr III cooling system, this time with three heat pipes. The manufacturer raised the base GPU clock by 53 MHz over the reference value without touching the video memory frequency.

ZOTAC GeForce GTX 660 (ZT-60901-10M)

This video card has already appeared on 3DNews, in the initial testing of the GeForce GTX 660. The graphics processor is slightly overclocked, from the base frequency of 980 MHz to 993 MHz. The cooling system is an open-type design with two heat pipes and a copper plate in the base.

AMD Radeon HD 7850

This is a one hundred percent reference video adapter provided by AMD itself. Accordingly, the core and memory frequencies match the standard ones to the megahertz. Unlike all the other test participants, the reference Radeon HD 7850 has a blower-type cooler. The heatsink has a copper base and is threaded with three heat pipes.

ASUS Radeon HD 7790 DirectCU II OC (HD7790-DC2OC-1GD5)

The overclocked version of the Radeon HD 7790 from ASUS is raised by 75 MHz on the GPU and by 400 MHz on the video memory. In general, AMD GPUs allow a greater factory overclock than their NVIDIA counterparts. The cooling system of the card is similar to that of the ASUS GeForce GTX 650 Ti BOOST DirectCU II OC described above: a simplified version of the DirectCU II cooler with two heat pipes pressed against the GPU die.

GIGABYTE Radeon HD 7790 (GV-R779OC-1GD)

This card is similar in configuration to the Radeon HD 7790 from ASUS: the GPU runs at the same 1075 MHz, but the memory frequency is left at the standard 6000 MHz. The cooler is a simple design consisting of a solid aluminum heatsink and a large fan. GIGABYTE cannot boast of heat pipes or an additional metal heatsink base.

Extensiveness and intensity are the two key concepts in the development of human progress. Extensive and intensive paths of development of society, technology and mankind as a whole have succeeded each other for many centuries. There are many examples of this in all spheres of economic activity. But agriculture and cattle breeding do not interest us today; we are interested in information technology and computers. As an example, let's briefly consider the development of CPUs.

We will not dig into the distant 80s and 90s and will start right with the Pentium 4. After prolonged intensive development of the NetBurst architecture and the growth of "steroid" megahertz, the industry hit a wall: further "overclocking" of single-core processors proved very difficult, and getting a more powerful processor required great expense. Then dual-core processors appeared - an extensive solution to the problem. Soon they too stopped providing the required performance, and the new Core architecture appeared, delivering more performance at lower clock speeds - an intensive solution to the problem. And so it goes on.

And what is happening with the second component important to fans of PC gaming, the video card? The same thing as with processors. At this stage of development, single-chip video cards can no longer provide the required performance. Therefore both leading video card companies (NVIDIA and AMD) are developing and actively introducing their own technologies for raising video subsystem performance by combining two, three and even four video cards in one PC. In this article we look at NVIDIA, since it was the first to present its development, and its SLI technology.

What is SLI technology?

NVIDIA SLI technology is a revolutionary approach to scalability of graphics performance by combining several NVIDIA graphics cards in one system.

History

In 1998, 3dfx introduced the Voodoo2 graphics processor, one of whose innovations was SLI technology (Scan Line Interleave), in which two Voodoo2 chips worked together to create an image. SLI could even combine cards from different manufacturers, as well as cards with different memory sizes. An SLI system allowed resolutions of up to 1024x768, which seemed incredible at the time. The drawbacks of 3dfx SLI were the high cost of the accelerators ($600) and high heat output. Soon, however, video cards moved from the PCI bus to the faster dedicated AGP graphics port. Since motherboards had only one such port, the production of video cards with SLI support stopped for a while.

In 2000, with the release of its new VSA-100 chip, 3dfx managed to implement SLI on AGP, this time within a single board carrying two or four such chips.

However, boards based on this SLI system had high power consumption and failed because of power problems. Worldwide, about 200 Voodoo5 6000 boards were sold, and only about 100 of them actually worked. The unsuccessful launch of a promising accelerator in which very large funds had been invested led to the company's bankruptcy. In 2001, NVIDIA bought 3dfx for 110 million dollars.

In 2004, with the release of the first solutions for the new PCI Express bus, NVIDIA announced support in its products for the multi-chip processing technology SLI, whose name now stands for something different: Scalable Link Interface.


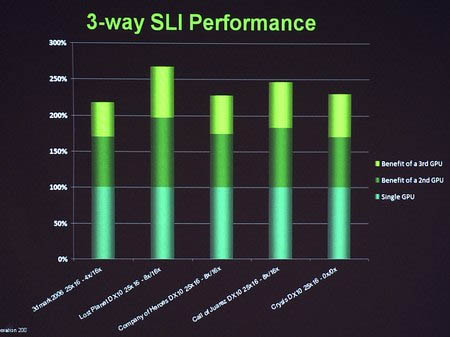

Initially, the rollout of SLI did not go very smoothly, primarily because of constantly surfacing driver flaws and because the drivers had to be tuned for each specific application, otherwise the player got no benefit from buying a pair of accelerators. But accelerator generations changed, the drivers were refined, and the list of supported games grew. And at the end of 2007, Triple SLI technology was introduced, which allows three NVIDIA video cards to be combined:


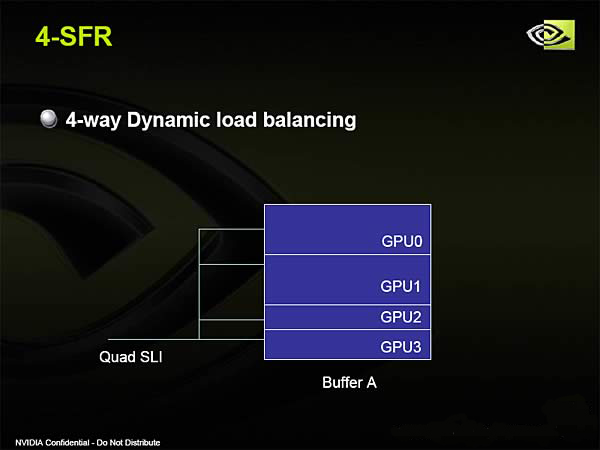

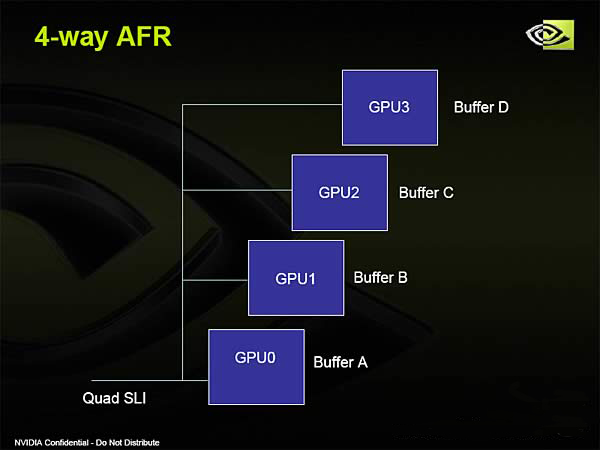

The manufacturer promises a performance gain of up to 250% compared to a single chip. But this was not the limit: next in line is Quad SLI, which puts 4 GPUs to work on rendering the game scene, albeit as a pair of dual-chip video cards.

Image Algorithms

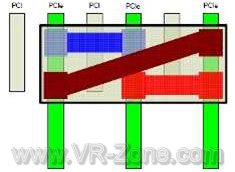

Split Frame Rendering

Split Frame Rendering algorithm

This is a frequently used mode in which the image is divided into several parts, their number matching the number of video cards in the bundle. Each part of the image is processed entirely by one video card, including both the geometry and the pixel work. (The CrossFire analog is the Scissor algorithm.)

The Split Frame Rendering algorithm scales easily to 3, 4 and, perhaps in the future, more GPUs.
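The idea of dividing a frame among the cards can be sketched in a few lines of Python. This is only an illustration of an even static split into horizontal strips; the function `split_frame` is hypothetical, and the real driver chooses the boundaries itself.

```python
def split_frame(height, gpus):
    """Divide `height` scanlines into `gpus` contiguous strips of near-equal size.

    Returns a list of (first_row, end_row) pairs, end_row exclusive.
    """
    base, extra = divmod(height, gpus)
    strips = []
    start = 0
    for gpu in range(gpus):
        rows = base + (1 if gpu < extra else 0)  # spread leftover rows over the first strips
        strips.append((start, start + rows))
        start += rows
    return strips


print(split_frame(1200, 2))  # [(0, 600), (600, 1200)]
print(split_frame(1200, 3))  # [(0, 400), (400, 800), (800, 1200)]
```

The same function covers two, three or four GPUs, which is why this scheme scales so naturally with the number of cards.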

Alternate Frame Rendering

Alternate Frame Rendering algorithm scheme

Frames are processed in turn: one video card handles only even frames and the other only odd ones. However, this algorithm has a drawback: one frame may be simple while the next is hard to process. In addition, this algorithm was patented by ATI when it released its dual-chip video card.
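The even/odd assignment amounts to a round-robin over frame numbers. A minimal Python sketch, with the hypothetical helper `afr_gpu` and GPUs numbered from 0:

```python
def afr_gpu(frame_index, gpus=2):
    """Alternate Frame Rendering: frames are handed to the GPUs in turn."""
    return frame_index % gpus


# With two cards, GPU 0 gets the even frames and GPU 1 the odd ones.
print([afr_gpu(i) for i in range(6)])          # [0, 1, 0, 1, 0, 1]
# The same rule extends to three cards.
print([afr_gpu(i, gpus=3) for i in range(6)])  # [0, 1, 2, 0, 1, 2]
```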

In the Quad SLI technology, a hybrid mode is used, which combines SFR and AFR.

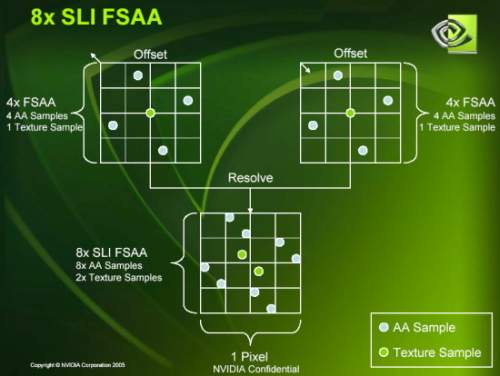

SLI AA (Anti Aliasing), SLI FSAA (Full Scene Anti Aliasing)

This algorithm is aimed at improving image quality. The same picture is generated on all video cards with different anti-aliasing patterns. Each video card anti-aliases the frame with its sample pattern offset by a certain step relative to the other card's. The resulting images are then blended and output. This achieves maximum image clarity and detail. The following anti-aliasing modes are available: 8x, 10x, 12x, 14x, 16x and 32x. (The CrossFire analog is SuperAA.)
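The final blending step can be pictured as a per-pixel average of the images produced by the cards. The Python sketch below is a simplification under our own assumptions: images are flat lists of grayscale values, and the blend is an equal-weight mean. How the hardware actually weights the samples is not described here.

```python
def blend(images):
    """Average several renderings of the same frame pixel by pixel.

    `images` is a list of equally long lists of pixel values, one per GPU,
    each rendered with a differently offset anti-aliasing sample pattern.
    """
    count = len(images)
    return [sum(pixels) / count for pixels in zip(*images)]


# Two cards disagree on the edge pixels; the blend smooths the transition.
gpu0 = [0, 100, 200]
gpu1 = [100, 100, 0]
print(blend([gpu0, gpu1]))  # [50.0, 100.0, 100.0]
```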

Principles of construction

To build an SLI-based computer you need:

- a motherboard with two or more PCI Express slots that supports SLI technology (the word SLI usually appears in the motherboard's name);

- a sufficiently powerful power supply unit (550 W or more is usually recommended);

- GeForce 6/7/8/9/GTX or Quadro FX video cards with a PCI Express interface;

- a bridge that connects the video cards.

Note that chipset support for SLI is implemented in software rather than in hardware. The video cards, however, must belong to the same class, while the cards' BIOS version and manufacturer do not matter.

At the moment, SLI is supported by the following operating systems:

- Windows XP 32-bit

- Windows XP 64-bit

- Windows Vista 32-bit

- Windows Vista 64-bit

- Linux 32-bit

- Linux 64-bit (AMD-64 / EM64T)

SLI-system can be organized in two ways:

- Using a special bridge;

- Programmatically.

In the latter case, the load on the PCIe bus increases, which hurts performance, so this method has not become widespread. It can only be used with relatively weak accelerators.

If the SLI bridge is not installed, the driver issues a warning that SLI mode will not work at full capacity.

This, for example, is what a dedicated bridge for connecting video cards looks like when it is made on a rigid PCB. Flexible bridges have also become widespread, since they are cheaper to produce. But 3-Way SLI so far requires a special rigid bridge, which in effect combines three ordinary ones in a "ring".

As mentioned above, Quad SLI is also being promoted. It combines two dual-chip boards in a single system, so that 4 chips take part in building the image. However, this is still the domain of avid enthusiasts and has no practical value for ordinary users.

For the ordinary user, something else matters: the purpose this technology was actually conceived for. By buying a motherboard with SLI support, you lay the groundwork for upgrading your PC in the future, since it lets you add a second video card. No other upgrade, short of replacing the whole system, comes even close to the gaming performance gain that a second video card provides.

It looks something like this. You buy a good PC with a good modern graphics card and happily play modern games, but after a year and a half your system can no longer deliver satisfactory image quality and speed in the latest games. Then, instead of replacing the old video card (or even the whole PC), you simply add one more and almost double the power of the video subsystem.

However, it only sounds that simple in theory. In practice, the user will face many difficulties.

The first is the games and applications themselves. No one has yet found a cure for the dreaded word "optimization": not only drivers but also games must be optimized for SLI in order to work with it correctly. NVIDIA claims that SLI supports "the longest list of games". If the game you are interested in is not on the list, the company suggests creating your own settings profile for it.

Unfortunately, there are still many games that are incompatible with SLI, and creating a profile does not fix this. The only way out is to wait for patches from the game developers and new drivers from NVIDIA. However, these are mostly either old games, which a single modern graphics card handles without problems, or unpopular ones, which are of no interest to the vast majority of players because of poor playability. New demanding games, on the other hand, anticipate the use of two video cards from the start. For example, Call of Duty 4 has a dedicated switch for this in its graphics settings.

You can check whether SLI is active using special indicators right in the game, once the corresponding "test" setting is enabled in the driver.

If you see horizontal or vertical green bars on the screen, the "Show visual SLI indicators" mode is enabled in the NVIDIA control panel. With this option on, you can see how the graphics load is distributed across the GPUs, and the SLI configuration is labeled on screen: SLI on two GPUs is shown as "SLI", 3-way NVIDIA SLI as "SLI x3", and Quad SLI as "Quad SLI". In games that use Alternate Frame Rendering (AFR), the vertical green bar grows or shrinks with the degree of scaling. In games that use Split-Frame Rendering (SFR), the horizontal green bar moves up and down, showing how the load is distributed among the GPUs. If the top and bottom halves of the screen have the same level of detail, the horizontal bar stays close to the middle. To turn this feature on or off, open the NVIDIA control panel and select "3D Settings".

The second common problem with multiple graphics chips is keeping them synchronized. As mentioned above, AFR rendering has a peculiarity: one frame may be simple while the next is hard to process. With a large difference in frame complexity, one video card can finish its frame much faster than the other. This leads to "microlags" - small image delays that feel like jerks even though the overall FPS looks comfortable. The effect appears rather rarely, but even the developers do not know how to deal with it. For the user there is a simple way out: in games that show microlags with AFR, switch to SFR by specifying it in the game profile.
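A toy timeline shows how a comfortable average frame rate can hide uneven frame delivery. The Python sketch below is a deliberately simplified model under our own assumptions: each GPU renders its frames back to back, frames are shown strictly in order, and the per-frame costs in milliseconds are made up for illustration. The function names are hypothetical.

```python
def present_times(costs_ms, gpus=2):
    """Time at which each frame can be shown when frames alternate between GPUs."""
    free_at = [0.0] * gpus  # when each GPU finishes its current frame
    shown = []
    last = 0.0
    for index, cost in enumerate(costs_ms):
        gpu = index % gpus
        free_at[gpu] += cost             # this GPU renders its frames back to back
        last = max(last, free_at[gpu])   # a frame cannot be shown before the previous one
        shown.append(last)
    return shown


def intervals(times):
    return [later - earlier for earlier, later in zip(times, times[1:])]


# Hypothetical workload: even frames are cheap (10 ms), odd frames expensive (30 ms).
times = present_times([10, 30, 10, 30, 10, 30])
print(times)             # [10.0, 30.0, 30.0, 60.0, 60.0, 90.0]
print(intervals(times))  # [20.0, 0.0, 30.0, 0.0, 30.0]
```

Six frames in 90 ms average out to a respectable rate, yet the frames arrive in bunches with 30 ms gaps between them, which is the jerkiness described above.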

In SFR mode, the image is divided into two parts. The first card renders the top of the picture and the second the bottom. Through dynamic load balancing, the driver distributes the load evenly between the two cards.
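One plausible way to balance the two halves is to move the dividing line according to how long each card took on the previous frame. The Python sketch below is our own guess at such a rule, not NVIDIA's actual algorithm: it assumes the cost per scanline in each region stays the same from frame to frame, and `rebalance` and its parameters are hypothetical.

```python
def rebalance(height, split, top_ms, bottom_ms):
    """Pick a new split row so both GPUs would finish at the same time.

    The top GPU rendered rows [0, split) in top_ms, the bottom GPU rendered
    rows [split, height) in bottom_ms. Assumes a constant cost per row within
    each region, which is only an approximation.
    """
    if not 0 < split < height:
        raise ValueError("split must lie strictly inside the frame")
    top_share = bottom_ms * split
    bottom_share = top_ms * (height - split)
    return round(height * top_share / (top_share + bottom_share))


# The bottom half took three times longer, so the top GPU is given more rows.
print(rebalance(1200, 600, top_ms=10, bottom_ms=30))  # 900
# Equal times leave the line in the middle.
print(rebalance(1200, 600, top_ms=20, bottom_ms=20))  # 600
```

In the first example the new split gives the top card 900 rows and the bottom card 300, so under the model both would need 15 ms for the next frame.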

The third problem is the processor. The fact is that when using multiple video adapters, the CPU load increases not only due to the use of heavy graphics modes, but also due to the synchronization of video chips. Therefore, in order to unleash the full potential of a bundle from several video cards, you will need a powerful processor. Although today it is not such an acute issue as a couple of years ago.

Additional features of the new technology

The new generation of motherboards and graphics cards with SLI support offers users much more than ordinary 3D graphics acceleration.

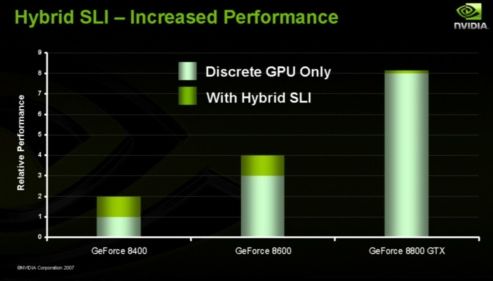

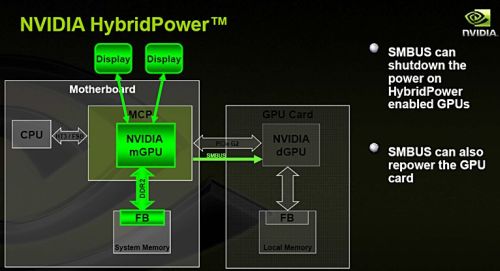

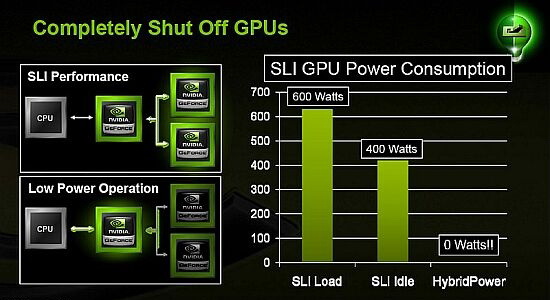

Hybrid SLI makes more rational use of the graphics core built into the chipset together with a discrete video card.

The technology consists of two parts: GeForce Boost and HybridPower.

The first is used in applications that work intensively with 3D graphics. With GeForce Boost, part of the 3D scene calculations is taken over by the accelerator integrated into the chipset, which gives a noticeable performance increase to systems whose discrete adapter is not the fastest, such as the GeForce 8500 GT or GeForce 8400 GS.

HybridPower, on the other hand, makes it possible to run on the integrated graphics and switch off the discrete accelerator while the user is browsing the Internet, working in office applications, or watching video. According to NVIDIA, the users who benefit most from this technology are owners of laptops with a dedicated video card, whose battery life increases significantly.

Currently, Hybrid SLI is supported by the following hardware: the GeForce 8500 GT and GeForce 8400 GS desktop GPUs for GeForce Boost; the performance-class GeForce GTX 280, GeForce 9800 GX2, GeForce GTX 260, GeForce 9800 GTX+, GeForce 9800 GTX, and GeForce 9800 GT for HybridPower; and motherboards for AMD processors based on the nForce 780a, nForce 750a, and nForce 730a (GeForce Boost only) with the integrated GeForce 8200 graphics accelerator.

As you can see, energy savings can reach impressive values.

This feature is also very useful for overclockers: besides saving power, it lets the main video card "rest" when idle, which prolongs its life, especially if extreme overclocking is used.

Another useful extra feature of an SLI setup is the ability to use up to 4 monitors at the same time.

Practical use

We have repeatedly tested various video cards in SLI mode. Let's try to summarize the information.

In preparing the article, information was used from the official site