Instructions

Divide your search engine into three parts. The first part is the interface of the future web search engine, written in PHP. The second part is the index (a MySQL database), which stores all the information about the pages. The third part is a search robot, written in Delphi, that indexes web pages and enters their data into the index.

Let's start with the interface. Create the index.php file and divide the page into two parts using tables: the first part holds the search form, the second the search results. In the upper part, create a form that sends its data to index.php using the GET method. The form contains three elements: a text field and two buttons. One button submits the query; the other clears the field (this button is optional).

Give the text field the name “search” and the submit button the name “button” (its caption can read “Search”), so that it matches the check below. Leave the name of the form itself as it is: “form1”.
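The form described above could be generated like this. This is a minimal sketch, not the original author's code: the function name `render_search_form` is hypothetical, and naming the submit button "button" is an assumption chosen so that it matches the `isset($_GET["button"])` check used in the next step. The optional clear button uses a small inline script, because a plain reset input would restore the field's initial value rather than empty it.

```php
<?php
// Hypothetical helper: returns the HTML for the search form described above.
// The form submits to index.php with the GET method.
function render_search_form(string $current = ""): string
{
    // Escape the current query so it can be safely echoed back into the field.
    $value = htmlspecialchars($current, ENT_QUOTES, "UTF-8");

    // "search" = text field, "button" = submit button (assumed name), "form1" = form.
    return <<<HTML
<form name="form1" method="get" action="index.php">
  <input type="text" name="search" value="{$value}">
  <input type="submit" name="button" value="Search">
  <input type="button" value="Clear" onclick="this.form.elements['search'].value='';">
</form>
HTML;
}

echo render_search_form();
```

Passing the current query back in keeps the user's text in the field after a search.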

Include the configuration file with the database connection settings:

include "config.php";

Check whether the Search button has been pressed:

if (isset($_GET["button"])) { /* code that runs if the Search button was pressed */ } else { /* code that runs if it was not pressed */ }

If it was pressed, check whether a search query was submitted:

$search = ""; if (isset($_GET["search"])) { $search = $_GET["search"]; }

If a search query is present, its text is assigned to the variable $search. Then check that the query is not empty and contains at least 3 characters:

if ($search != "" && strlen($search) > 2) { /* database search code */ } else { echo "An empty search query was specified or the search string contains fewer than 3 characters."; }
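The validation step can also be wrapped in a small function, which keeps index.php readable and is easy to test. A sketch under assumptions: the name `get_valid_query` is hypothetical, and trimming surrounding whitespace is an addition not in the original check, so a query made only of spaces is rejected.

```php
<?php
// Hypothetical helper mirroring the check above: returns the query if it is
// present, non-empty and at least 3 bytes long, otherwise null.
function get_valid_query(array $params): ?string
{
    // A missing (or non-string, e.g. ?search[]=x) parameter counts as empty.
    $search = (isset($params["search"]) && is_string($params["search"]))
        ? trim($params["search"])
        : "";

    if ($search === "" || strlen($search) < 3) {
        return null;
    }
    return $search;
}

// In index.php the helper would be called with the real request parameters.
$search = get_valid_query($_GET);
if ($search === null) {
    echo "An empty search query was specified or the search string contains fewer than 3 characters.";
}
```

Note that `strlen()` counts bytes, not characters: a two-letter Cyrillic query in UTF-8 is 4 bytes long and passes this check. `mb_strlen()` from the mbstring extension counts characters if that matters for your site.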

If the query satisfies this condition, run the search itself.

Then loop over the results and print each one with printf.
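The database search and the printf loop might look like the following sketch. It rests on assumptions throughout, since the article does not show its SQL: the table `pages` with columns `url`, `title` and `content` is hypothetical, as are the function names. A prepared statement is used so that the user's query cannot inject SQL, and `htmlspecialchars` keeps stored titles and URLs from injecting HTML.

```php
<?php
// ASSUMPTION: a hypothetical MySQL table `pages` (url, title, content);
// the PDO connection would normally be created in config.php.

// Runs a simple LIKE search with a prepared statement.
function search_pages(PDO $db, string $search): array
{
    // Escape LIKE wildcards so % and _ typed by the user match literally
    // (backslash is MySQL's default LIKE escape character).
    $pattern = "%" . addcslashes($search, "%_\\") . "%";
    $stmt = $db->prepare("SELECT url, title FROM pages WHERE content LIKE ? LIMIT 50");
    $stmt->execute([$pattern]);
    return $stmt->fetchAll(PDO::FETCH_ASSOC);
}

// Prints one numbered result per line with printf, escaping values for HTML.
function print_results(array $rows): void
{
    if (count($rows) === 0) {
        echo "Nothing was found.\n";
        return;
    }
    foreach (array_values($rows) as $i => $row) {
        printf(
            "%d. <a href=\"%s\">%s</a><br>\n",
            $i + 1,
            htmlspecialchars($row["url"], ENT_QUOTES, "UTF-8"),
            htmlspecialchars($row["title"], ENT_QUOTES, "UTF-8")
        );
    }
}
```

With a hypothetical `$db` connection from config.php, the search branch of index.php reduces to `print_results(search_pages($db, $search));`. A LIKE scan reads every row, which is acceptable for a small site but is exactly what the index structure discussed later in this article avoids.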

That's all. If you have the necessary knowledge, you can add any elements you need to the search engine and develop your own search algorithm.

Popular websites attract users not only with original design and interesting thematic content but also with useful services. People go online for information, searching every day for material that interests them. So it makes sense to add a search engine to your website, letting users quickly find what they need across a hand-picked set of resources.

You will need

- a browser;

- an Internet connection;

- rights to edit the content or page templates of the site.

Instructions

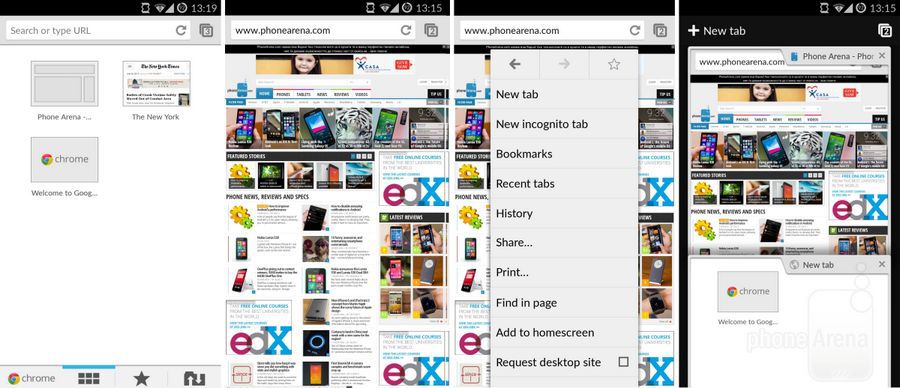

Start by creating a Google custom search engine. Log in to the management panel of the service: in your browser, open http://www.google.com/cse/. You work with the system through your Google account. Click the “Create a Custom Search Engine” button. If you are not logged in at the moment, click the “Sign in” link, enter your account details in the form, and click the “Sign in” button. If you do not have a Google account, create one by clicking the “Create an account now” link and following the suggested steps.

Enter the basic parameters of the custom search engine you are creating. Fill in the “Name” and “Description” fields and select the interface language in the “Language” drop-down list. In the “Sites to search” text box, enter the list of sites whose content will appear in the results of the new search engine. Click “Next”.

Get the JavaScript code for installing the search engine on your site. Select all the contents of the text box on the current page, copy them to the clipboard, and save them in a temporary file.

If you have already chosen a mail service, you can proceed to register an email address. The registration process is roughly the same on every portal: you fill out a questionnaire and set a security question in case you forget your email password. Fill out the questionnaire responsibly, because if your mailbox is hacked, you will have to provide the service with the data from the questionnaire. So if you decide to use a pseudonym or deliberately give fake data, keep a record of it in a safe place.

Note

As a rule, email is offered free of charge. But some sites charge a monthly subscription fee for a mailbox with an attractive, exclusive domain name and many additional features. Before you buy a mailbox, consider everything the free services offer, and only then look at commercial offers.

When choosing a mail service, pay particular attention to the popular portals that offer email. As a rule, such portals are time-tested and reliable.

Site mirrors are set up to improve a site's reliability, protect the information on it, increase traffic, reduce the load on the main server, and so on. The idea is that when the main resource is unavailable for some reason, the visitor reaches the backup resource, that is, the site mirror.

Many newcomers to “webmastering” (let's call it that) at some point get a “brilliant” idea: “Why don't I knock together my own search engine?! Sell advertising, rake in the cash!” I admit it happened to me... 3 times.

Runet Search Engine - Yandex killer

I collected links on the subject, started studying, and dug through everything I could find on Aport and Yandex. I downloaded several free engines with spiders, but I lacked the knowledge even to install them. Necessity is the mother of invention: I took a catalog script (no database, just txt files), found a database of sites, and began filling the catalog with sites, first by myself and then with a hired moderator. And what do you think? Of course, the idea failed, but it produced other ideas that led to a search engine for books, more on that later.

Book Search

Rummaging around the Runet (approximately 2004-2007), I picked two online bookstores, Kolibri and Bolero. The reason for the choice was simple: both let partners download their product databases from the partner interface. The databases held little information: the book title, the author, and the page address on the store's website. But that was enough to build a directory plus search engine. Book annotations were shown as well (they were parsed in real time from the store sites; back then I had no idea about caching, nor did I use automatic redirects...).

The book search engine was not a success, but the catalog brought tons of traffic from Yandex and, accordingly, book sales. Most purchases were mail-order with cash on delivery, so I had to wait months for the money to reach my account... Russian Post, after all.

Google killer

My main line of work was in the English-speaking (“bourgeois”) segment; in particular, I worked with PPC, mainly with Umax, so I chose their feed as the “engine” for my next search engine. Armed with PHP (or rather, by reworking the parsers from the book catalogs), I learned to add extra information, such as pictures, to the results for the user's query (just as search engines do now).

And then something wonderful happened: the search engines MSN (now Bing) and Google began indexing the result pages of “my search engine” and delighted me with traffic, which Umax in turn paid for generously.

And while my colleagues churned out doorways, I churned out search engines like this: different designs, different sources of additional information. Why build a doorway and redirect traffic to the feed, risking a ban for the redirect, when you can build, for example, thematic mini-sites without any redirect? “White doorways”, I believe they are called now. The idyll did not last long, less than a year. Algorithm changes, first at MSN and then at Google, buried such solutions (more precisely, made them much less effective).

Somewhere around the collapse of the “system” in MSN, “out of grief” I took one of the banned domains, this site, and moved to it a blog that had previously lived on some forum or as part of an advertising agency's website.

3 times! 3 times I stepped on the same rake: some people don't even learn from their own mistakes :)


Have you ever wondered how search engines such as Yandex or Google work? If you had to write a search engine from scratch, where would you start? Many of you have probably built simple content sites with an internal search, and implemented that search very simply, with the SQL LIKE operator. Do you think Yandex works like that too? 🙂

Describing all the mechanisms implemented in modern search engines is clearly too much for a single post (and there is a lot I couldn't tell you anyway 🙂), so here I will talk about the most important and least known part of a search engine: the index. But let's not rush.

In general, a search system can be divided into 3 parts: the user interface, the search agent, and the index.

Everyone is familiar with the user interface: google.com, ya.ru. It is usually just a search box. The search agent is a program that crawls websites, collecting page texts and URLs from them. The agent stores the collected information in the index.

Finally, the most important part is the index, or search database.

The index stores all the information about Internet pages collected by the search agents.

In general terms, the agent's job is to collect information: it visits a page of a site, takes its text, extracts the links from it, and sends them along with the text to the index. This step deserves a closer look, since the main work of a search engine lies here.
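The agent's job of taking a page's text and pulling out its links can be sketched with PHP's built-in DOM extension. This is an illustration, not how any real search engine's crawler works: `extract_page` is a hypothetical name, downloading the page is left out (the HTML is passed in as a string), and relative links are returned as-is rather than resolved against the page URL.

```php
<?php
// Hypothetical helper: returns the page text and the distinct links it contains.
function extract_page(string $html): array
{
    if (trim($html) === "") {
        return ["text" => "", "links" => []];
    }

    $dom = new DOMDocument();
    // Collect parser warnings about imperfect real-world markup instead of printing them.
    libxml_use_internal_errors(true);
    $dom->loadHTML($html);
    libxml_clear_errors();

    // Collect the href of every <a> element that has a non-empty one.
    $links = [];
    foreach ($dom->getElementsByTagName("a") as $a) {
        $href = trim($a->getAttribute("href"));
        if ($href !== "") {
            $links[] = $href;
        }
    }

    // Text of the <body>, with runs of whitespace collapsed to single spaces.
    $body = $dom->getElementsByTagName("body")->item(0);
    $text = $body ? trim(preg_replace('/\s+/u', " ", $body->textContent)) : "";

    return ["text" => $text, "links" => array_values(array_unique($links))];
}
```

`textContent` also includes the contents of `<script>` and `<style>` elements; a real agent would remove those nodes before taking the text.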

How exactly is data stored in the index? What structure do the index tables have? This is one of the key details of a search engine.

Viewed very abstractly, the index can be divided into three tables: a dictionary, documents, and relations (the links between words and documents).

To make it clearer, imagine three tables:

words (dictionary) with fields:

id, name

documents with fields:

id, document

and relations with the fields:

word_id, doc_id

Before adding a page's text to the index, the search engine breaks it into words. Having obtained the list of words in the document, it adds to its dictionary (the words table) those words that are not there yet, and saves the document itself in the documents table.

After that, word-document pairs are added to the relations table; they record which words appear in which document.
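The indexing steps just described can be modelled with PHP arrays standing in for the words, documents and relations tables. A sketch under assumptions: a real engine would keep these tables in a database such as MySQL, the class name `TinyIndex` is hypothetical, and the tokenizer (lowercase, then split into runs of letters and digits) is a deliberately simple choice.

```php
<?php
// In-memory model of the three index tables:
//   words:     id => name
//   documents: id => document text
//   relations: list of [word_id, doc_id] pairs
final class TinyIndex
{
    public array $words = [];      // id => word (the dictionary)
    public array $wordIds = [];    // word => id (reverse lookup into the dictionary)
    public array $documents = [];  // id => text
    public array $relations = [];  // list of [word_id, doc_id]

    // Lowercase the text and split it into runs of letters and digits.
    public static function tokenize(string $text): array
    {
        $lower = function_exists("mb_strtolower") ? mb_strtolower($text, "UTF-8") : strtolower($text);
        preg_match_all('/[\p{L}\p{N}]+/u', $lower, $m);
        return $m[0] ?? [];
    }

    public function addDocument(string $text): int
    {
        // Save the document itself in the documents table.
        $docId = count($this->documents) + 1;
        $this->documents[$docId] = $text;

        // Link each distinct word of the document to it exactly once.
        foreach (array_unique(self::tokenize($text)) as $word) {
            // Add the word to the dictionary only if it is not there yet.
            if (!isset($this->wordIds[$word])) {
                $id = count($this->words) + 1;
                $this->words[$id] = $word;
                $this->wordIds[$word] = $id;
            }
            // Record the word-document relation.
            $this->relations[] = [$this->wordIds[$word], $docId];
        }
        return $docId;
    }
}
```

For example, indexing "PHP and MySQL" and then "MySQL index, MySQL tables" yields five dictionary words and six relations: "mysql" appears twice in the second document but is linked to it only once.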

The next complex process in a search engine is selecting and ranking information in response to the user's query.

When you go to google.com and type “php”, a very complex mechanism starts: its purpose is to show you a list of documents related to the query, in descending order of relevance.

How is this implemented? It is all very complicated. First, the search engine must select the matching documents, those in which the query words appear. With the tables described above, you can already roughly imagine how this is done. Ranking (ordering), however, is where the problems begin, and each search engine solves them in its own way.
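Selecting the documents in which the query words appear can be sketched directly on top of the relations table. Assumptions: the dictionary is passed as a word => word_id map, the relations as [word_id, doc_id] pairs, every query word must be present (boolean AND), and ranking is left out entirely, since that is the part each engine solves in its own way. The function name `select_documents` is hypothetical.

```php
<?php
// Returns the sorted ids of documents that contain every word of the query.
// $words:     word => word_id (the dictionary)
// $relations: list of [word_id, doc_id] pairs
function select_documents(string $query, array $words, array $relations): array
{
    // Invert the relations into word_id => [doc_id => true] for fast lookups.
    $postings = [];
    foreach ($relations as [$wordId, $docId]) {
        $postings[$wordId][$docId] = true;
    }

    // Simple tokenizer: lowercase, then split into runs of letters and digits.
    $lower = function_exists("mb_strtolower") ? mb_strtolower($query, "UTF-8") : strtolower($query);
    preg_match_all('/[\p{L}\p{N}]+/u', $lower, $m);

    $result = null;
    foreach (array_unique($m[0] ?? []) as $word) {
        // A word missing from the dictionary means no document can contain all words.
        if (!isset($words[$word], $postings[$words[$word]])) {
            return [];
        }
        $docs = $postings[$words[$word]];
        // Keep only documents that also contained the previous query words.
        $result = $result === null ? $docs : array_intersect_key($result, $docs);
    }

    $ids = $result === null ? [] : array_keys($result);
    sort($ids);
    return $ids;
}
```

In SQL, assuming each (word_id, doc_id) pair is stored once, the same selection is `SELECT doc_id FROM relations WHERE word_id IN (...) GROUP BY doc_id HAVING COUNT(*) = n`, where n is the number of distinct query words.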

Here very complex clustering and classification algorithms come into play: they divide all documents into groups and assign a category to each document. These categories then provide some information about how relevant each document is. Besides this factor, modern search engines take a huge number of others into account.

Search engines for the web (Google, Yandex, etc.) should be distinguished from relatively small information retrieval systems. The former are far larger in scale than the latter, so their structure is much more complicated.

Small search engines include projects such as sphinx and lucene.

That's all: a short and, I hope, useful excursion into search engines. 🙂

Additional Information.