By Mike Weiner

Co-author: Burzin Patel

Editors: Lubor Kollar, Kevin Cox, Bill Emmert, Greg Husemeier, Paul Burpo, Joseph Sack, Danny Lee , Sanjay Mishra, Lindsey Allen, Mark Souza

Microsoft SQL Server 2008 includes a number of enhancements and new functionality that extend the functionality of previous versions. Administration and maintenance of databases, maintaining manageability, availability, security and performance are all the responsibility of the database administrator. This article describes ten of the most useful new features in SQL Server 2008 (in alphabetical order) that make your DBA's job easier. In addition to a brief description, for each of the functions there are possible situations of its use and important recommendations for use.

Activity Monitor

When troubleshooting performance issues or monitoring server activity in real time, an administrator typically runs a series of scripts or checks relevant information sources to gather general information about running processes and identify the root cause of the problem. The SQL Server 2008 Activity Monitor aggregates this information to provide visual information about running and recently running processes. The DBA can both view high-level information and analyze any of the processes in more detail and get acquainted with the waiting statistics, which makes it easier to identify and resolve problems.

To open Activity Monitor, right-click the registered server name in Object Explorer, then select Activity Monitor alternatively, use the standard toolbar icon in SQL Server Management Studio. Activity Monitor offers the administrator an overview section similar in appearance to the Dispatcher windows tasks, as well as the components for detailed viewing of individual processes, waiting for resources, I / O to data files and recent resource-intensive requests, as shown in Fig. 1.

Figure: 1:Activity Monitor viewSQL Server 2008 in WednesdayManagement Studio

Note. Activity Monitor uses a data refresh rate setting that can be changed by right-clicking. If you choose to refresh the data frequently (every less than 10 seconds), the performance of a highly loaded production system may decrease.

With the Activity Monitor, the administrator can also perform the following tasks:

· Pause and resume Activity Monitor with one right click. This allows the administrator to "save" the state information at a specific point in time, it will not be updated or overwritten. However, keep in mind that if you manually refresh the data, expand or collapse a partition, old data will be updated and lost.

· Right-click a line item to display the full query text or a graphical execution plan using the Recent High-Power Queries menu item.

· Trace by Profiler or terminate processes in Processes view. Profiler app events include events RPC: Completed, SQL: BatchStarting and SQL: BatchCompleted, as well as Audit Login and AuditLogout.

Activity Monitor also allows you to monitor the activity of any local or remote instance of SQL Server 2005 registered with SQL Server Management Studio.

Audit

The ability to track and log events that occur, including information about users accessing objects, and the timing and content of changes, helps an administrator ensure compliance with regulatory or organizational security standards. In addition, understanding the events in the environment can also help in developing a plan to mitigate risks and maintain security in the environment.

In SQL Server 2008 (Enterprise and Developer editions only), SQL Server Audit implements automation that allows an administrator and other users to prepare, save, and view audits of various server and database components. The feature provides audit capabilities with granularity of the server or database.

There are server-level audit activity groups, such as the following:

· FAILED_LOGIN_GROUP tracks failed logon attempts.

· BACKUP_RESTORE_GROUP reports when the database was backed up or restored.

· DATABASE_CHANGE_GROUP audits when the database was created, modified, or dropped.

Database audit action groups include the following:

· DATABASE_OBJECT_ACCESS_GROUP is called whenever a CREATE, ALTER, or DROP statement is executed on a database object.

DATABASE_OBJECT_PERMISSION_CHANGE_GROUP is called when you use GRANT, REVOKE, or DENY statements on database objects.

There are other audit actions such as SELECT, DELETE, and EXECUTE. additional information, including a complete list of all audit groups and activities, see SQL Server Audit Groups and Activities.

Audit results can be sent for later viewing to a file or event log ( system log or Windows Security Event Log). Audit data is generated using Extended events is another new feature in SQL Server 2008.

SQL Server 2008 audits allow an administrator to answer questions that used to be very difficult to answer after the fact, for example, "Who deleted this index?", "When was the stored procedure changed?", "What change might be preventing the user from accessing this table ? " and even "Who executed the SELECT or UPDATE statement on the table [ dbo.Payroll] ?».

For more information about using SQL Server auditing and examples of how to implement it, see the SQL Server 2008 Compliance Guide.

Compressing backups

DBAs have long suggested enabling this feature in SQL Server. Now it's done, and just in time! Recently, for a number of reasons, for example, due to the increased duration of data storage and the need to physically store more data, the size of databases began to grow exponentially. When backing up a large database, it is necessary to allocate a significant amount of disk space for the backup files, as well as to allocate a significant amount of time for the operation.

With SQL Server 2008 backup compression, the backup file is compressed as it is written, which not only requires less disk space, but also less I / O and takes less time to back up. In laboratory tests with real user data, 70-85% reduction in backup file size was observed in many cases. In addition, tests have shown that copy and restore times were reduced by about 45%. It should be noted that additional processing during compression increases the processor load. To decouple the resource-intensive copying process from other processes in time and minimize its impact on their work, you can use another function described in this document - Resource Governor.

Compression is enabled by adding the WITH COMPRESSION clause to the BACKUP command (see SQL Server Books Online for more information) or by setting this option on Options dialog box Database backup... To eliminate the need to make changes to all existing backup scripts, a global option has been implemented to compress all backups created on the server instance by default. (This option is available on the page Database settings dialog box Server properties; it can also be installed by running the stored procedure sp_ configure with parameter value backupcompressiondefaultequal to 1). The backup command requires an explicit compression parameter, and the restore command automatically recognizes the compressed backup and decompresses it when restoring.

Compressing backups is an extremely useful feature that conserves disk space and time. For more information on configuring backup compression, see the technical note Tuning backup compression performance inSQL Server 2008 ... Note. Compressed backups are only supported in the Enterprise and Developer editions of SQL Server 2008, however, all editions of SQL Server 2008 allow you to restore compressed backups.

Centralized Management Servers

Often, the DBA manages many SQL Server instances at once. The ability to centralize the management and administration of multiple SQL instances at a single point saves significant effort and time. The centralized management server implementation available in SQL Server Management Studio through the Registered Servers feature allows an administrator to perform various administrative operations on multiple SQL Servers from a single management console.

Centralized management servers allow an administrator to register a group of servers and perform the following operations on them as on a single group:

· Multi-Server Query Execution: Now you can run a script from a single source on many SQL Servers, data will be returned to that source, and you do not need to log on to each server separately. This can be especially useful when you need to view or compare data from multiple SQL Servers without running a distributed query. In addition, if the query syntax is supported by previous versions of SQL Server, a query run from the SQL Server 2008 Query Editor can run on both SQL Server 2005 and SQL Server 2000 instances. For more information, see the blog post working group For SQL Server manageability, see Executing Multiple Server Queries in SQL Server 2008.

Importing and defining policies on many servers: within functionality Policy Based Management (another new feature in SQL Server 2008, also described in this article), SQL Server 2008 provides the ability to import policy files into specific groups of centralized management servers and allows you to define policies on all servers registered in a specific group.

· Manage Services and Call SQL Server Configuration Manager: The Management Central Servers tool helps you create a Control Center where the DBA can view and even change (with the appropriate permissions) the state of services.

· Import and export of registered servers: Servers registered with Central Management Servers can be exported and imported as they are transferred between administrators or different installed instances of SQL Server Management Studio. This capability serves as an alternative to the administrator importing or exporting his own local groups in SQL Server Management Studio.

Keep in mind that permissions are enforced using Windows Authentication, so user rights and permissions may differ on different servers registered in the Management Central Server group. For more information, see Administering Multiple Servers Using Central Management Servers and Kimberly Tripp's blog: SQL Central Management Servers Server 2008 - are you familiar with them?

Data collector and data warehouse management

Performance tuning and diagnostics are time consuming and can require professional SQL Server skills and an understanding of the internal structure of databases. Windows Performance Monitor (Perfmon), SQL Server Profiler, and dynamic management views did some of these things, but they often had server impact, were cumbersome to use, or used scattered data gathering techniques that made it difficult to combine and interpret.

To provide meaningful insights into system performance so that you can take specific actions, SQL Server 2008 provides a fully extensible performance data collection and storage tool, the Data Collector. It contains several ready-to-use data collection agents, a centralized repository of performance data, the so-called management data repository, and several pre-prepared reports to present the collected data. The Data Collector is a scalable tool that collects and aggregates data from a variety of sources, such as Dynamic Management Views, Perfmon Performance Monitor, and Transact-SQL queries, at a fully configurable collection rate. The data collector can be extended to collect data on any measurable attribute of the application.

Another useful feature of the Management Data Warehouse is the ability to install it on any SQL Server and then collect data from one or more SQL Server instances. This minimizes the impact on the performance of production systems and improves scalability in the context of tracking and collecting data from multiple servers. In lab testing, the observed throughput loss while running agents and running the management data warehouse on a busy server (using an OLTP workload) was approximately 4%. Loss of performance can vary depending on the frequency of data collection (the mentioned test was conducted under an extended workload, with data being transferred to storage every 15 minutes), it can also increase dramatically during data collection periods. In any case, you should expect some reduction in available resources, since the DCExec.exe process uses a certain amount of memory and CPU resources, and writing to the management data store will increase the load on the I / O subsystem and require space to be allocated at the location of the data and log files. (Figure 2) shows a typical data collector report.

Figure: 2:Data collector report viewSQL Server 2008

The report shows the activity of SQL Server during the data collection period. It collects and reports events such as waits, CPU, I / O, and memory usage, and statistics on resource-intensive requests. An administrator can also drill down to a detailed look at the report items, focusing on a single request or operation to investigate, identify, and fix performance issues. These data collection, storage, and reporting capabilities enable proactive monitoring of the health of SQLServers in your environment. They allow you to go back to historical data as needed to understand and evaluate changes that have affected performance over the monitored period. The Data Collector and Control Data Warehouse are supported in all editions of SQLServer 2008 except SQLServerExpress.

Data compression

Ease of database management greatly facilitates the execution of routine administrative tasks. As tables, indexes, and files grow in size and the proliferation of very large databases (VLDBs), managing data and dealing with bulky files becomes more complex. In addition, the increasing demands for memory and physical I / O bandwidth with the volume of data requested also complicate the operations of administrators and are costly for organizations. As a result, in many cases, administrators and organizations have to either expand memory or I / O bandwidth of servers, or accept performance degradation.

Data compression, introduced in SQL Server 2008, helps resolve these issues. This feature allows the administrator to selectively compact any tables, table partitions, or indexes, thereby reducing disk and memory footprint and I / O size. Compressing and decompressing data is processor intensive; however, in many cases the additional processor load is more than offset by the I / O gain. In configurations where I / O is the bottleneck, data compression can also provide performance gains.

In some lab tests, enabling data compression yielded 50-80% disk space savings. The space savings varied significantly: if the data contained few duplicate values, or the values \u200b\u200bused all the bytes allocated for the specified data type, the savings were minimal. However, the performance of many workloads did not increase. However, when working with data containing a lot of numeric data and a lot of duplicate values, there were significant savings in disk space and performance gains ranging from a few percent to 40-60% for some sample query workloads.

SQLServer 2008 supports two types of compression: row compression, which compresses individual table columns, and page compression, which compresses data pages using row compression, prefixes, and dictionary compression. The compression ratio achieved is highly dependent on the data types and database content. In general, using row compression reduces the overhead on application operations, but also reduces the compression ratio, which means less space is gained. At the same time, compressing pages leads to more additional application load and CPU utilization, but also saves significantly more space. Page compression is a superset of row compression, that is, if an object or section of an object is compressed using page compression, it also applies line compression. In addition, SQLServer 2008 supports the storage format vardecimal from SQL Server 2005 Service Pack 2 (SP2). Please note that since this format is a subset of string compression, it is deprecated and will be removed from future versions of the product.

Both row compression and page compression can be enabled for a table or index in operational modewithout compromising the availability of data for applications. At the same time, it is impossible to shrink or unpack an individual partition of a partitioned table online without turning it off. Tests have shown that the combined approach is best, which compresses only a few of the largest tables, and achieves an excellent ratio of disk space savings (significant) to performance loss (minimum). Since the shrink operation, like the index create or rebuild operations, also has requirements for available disk space, shrink should be done with these requirements in mind. A minimum of free space is required during the compression process if you start compression with the smallest objects.

Data compression can be done using Transact-SQL statements or the Data Compression Wizard. You can use the system stored procedure to determine if an object might be resized when it is compressed. sp_estimate_data_compression_savings or the Data Compression Wizard. Compacting the database is only supported in the SQLServer 2008 Enterprise and Developer editions. It is implemented exclusively in the databases themselves and does not require any changes to the applications.

For more information on using compression, see Creating Compressed Tables and Indexes.

Policy Based Management

In many business scenarios, it is necessary to maintain specific configurations or enforce policies either on a specific SQLServer or multiple times across a SQLServer server group. An administrator or organization might require a specific naming scheme for all custom tables or stored procedures that are created, or require specific configuration changes to be applied on many servers.

Policy Based Management (PBM) provides the administrator with a wide range of options for managing the environment. Policies can be created and checked against them. If the scan target (for example, the database engine, database, table, or SQLServer index) does not meet the requirements, the administrator can automatically reconfigure it according to these requirements. There are also a number of policy modes (many of which are automated) that make it easy to check for policy compliance, log policy violations and send notifications, and even roll back changes to ensure policy compliance. For more information on definition modes and how they map to aspects (the concept of Policy Based Management (PBM), also discussed in this blog), see the SQL Server Policy Based Management Blog.

Policies can be exported and imported as XML files to define and apply across multiple server instances. In addition, in SQLServerManagement Studio and in the Registered Server View, you can define policies on many servers registered in a local server group or in a Management Central Server group.

IN previous versions Not all of the policy-based management functionality may be implemented in SQL Server. However, the function making report policies can be used on both SQL Server 2005 and SQL Server 2000. For more information on using policy-based management, see Administering Servers Using Policy-Based Management in SQLServer Books Online. For more information on the policy technology itself, with examples, see the SQL Server 2008 Compliance Guide.

Predicted performance and concurrency

Many administrators have significant difficulty maintaining SQLServers with ever-changing workloads and delivering predictable performance levels (or minimizing variance in query and performance plans). Unexpected performance changes during query execution, changes in query plans, and / or general performance-related problems can be caused by a number of reasons, including increased load from applications running on the SQLServer or a version update of the database itself. The predictability of queries and operations on the SQLServer makes it much easier to achieve and maintain your availability, performance, and / or business continuity goals (SLAs and operational support agreements).

In SQLServer 2008, several features have been changed to improve performance predictability. So, certain changes have been made to the plan structures of SQLServer 2005 ( consolidation of plans) and added the ability to control lock escalation at the table level. Both enhancements contribute to more predictable and orderly communication between the application and the database.

First, the Plan Guide:

SQL Server 2005 introduced improved query stability and predictability with a then new feature called "plan guides" that contained instructions for executing queries that could not be changed directly in the application. For more information, see the white paper Query Plan Enforcement. Although the USE PLAN query hint is very powerful, it only supported SELECT DML operations and was often awkward to use because of the formatting sensitivity of plan guides.

In SQL Server 2008, the plan guide mechanism has been extended in two ways: first, the hint support in the USE PLAN query has been extended, which is now compatible with all DML statements (INSERT, UPDATE, DELETE, MERGE); secondly, introduced new function consolidating plansthat allows you to directly create a plan structure (pinning) any query plan that exists in the SQL Server plan cache, as shown in the following example.

sp_create_plan_guide_from_handle

@name \u003d N'MyQueryPlan ',

@plan_handle \u003d @plan_handle,

@statement_start_offset \u003d @offset;

A plan guide created in any way has a database area; it is stored in a table sys.plan_guides... Plan guides only affect the optimizer's query plan selection process, but they do not eliminate the need to compile the query. Also added function sys.fn_validate_plan_guideto validate existing SQL Server 2005 plan guides and ensure they are compatible with SQL Server 2008. Plan pinning is available in SQL Server 2008 Standard, Enterprise, and Developer editions.

Second, escalation of locks:

Lock escalation often caused lock problems and sometimes even deadlocks. The administrator had to fix these problems. In previous versions of SQLServer, lock escalation could be controlled (trace flags 1211 and 1224), but this was only possible for instance-level granularity. For some applications this fixed the problem, while for others it caused even bigger problems. Another disadvantage of the lock escalation algorithm in SQL Server 2005 was that the locks on partitioned tables were escalated directly to the table level rather than to the partition level.

SQLServer 2008 offers a solution to both problems. It introduces a new parameter to control lock escalation at the table level. With the ALTERTABLE command, you can choose to disable escalation or escalate to partition level for partitioned tables. Both of these features improve scalability and performance without unwanted side effects affecting other objects in the instance. Lock escalation is set at the database object level and does not require any application changes. It is supported in all editions of SQLServer 2008.

Resource Governor

Maintaining a consistent level of service by preventing rogue requests and ensuring that critical workloads are provisioned has been difficult in the past. There was no way to guarantee the allocation of a certain amount of resources to a set of requests, there was no control of access priorities. All requests had equal rights to access all available resources.

New sQL function Server 2008 - "Resource Governor" - helps to deal with this problem by giving the ability to differentiate workloads and allocate resources according to user needs. Resource Governor limits are easily reconfigurable in real time with minimal impact on running workloads. The distribution of workloads across the resource pool is configurable at the connection level, and this process is completely transparent to applications.

The diagram below shows the resource allocation process. In this scenario, three workload pools (Admin, OLTP, and Report workloads) are configured and the OLTP workload pool is given the highest priority. At the same time, two resource pools (Pool and Application pool) are configured with specified limits on memory and processor (CPU) time. In the final step, the Admin workload is assigned to the Admin pool, and the OLTP and Report workloads are assigned to the Application pool.

Here are some things to consider when using Resource Governor.

- Resource Governor uses the login credentials, hostname, or application name as the "resource pool identifier", so using the same login for an application with a certain number of clients per server can complicate pooling.

- Grouping of objects at the database level, where access to resources is regulated based on the database objects being accessed, is not supported.

- Only CPU and memory usage can be configured. I / O resource management is not implemented.

- It is not possible to dynamically switch workloads between resource pools once connected.

- Resource Governor is only supported in SQL Server 2008 Enterprise and Developer editions and can only be used for SQL Server Database Engine; Management of SQL Server Analysis Services (SSAS), SQL Server Integration Services (SSIS), and SQL Server Reporting Services (SSRS) is not supported.

Transparent data encryption (TDE)

Many organizations place great emphasis on security issues. There are many different layers that protect one of the most valuable assets of an organization - its data. Most often, organizations successfully protect the data they use with physical security measures, firewalls, and strict access restriction policies. However, in case of loss physical medium with data, such as a disk or tape with a backup, all the above security measures are useless, since an attacker can simply restore the database and gain full access to the data. SQL

Server 2008 offers a solution to this problem with Transparent Data Encryption (TDE). With TDE encryption, I / O data is encrypted and decrypted in real time; data and log files are encrypted using a database encryption key (DEK). DEK is a symmetric key protected by a certificate stored in the server's database\u003e master, or an asymmetric key protected by the Advanced Key Management Module (EKM).

TDE protects "inactive" data, so data in MDF, NDF, and LDF files cannot be viewed with a Hexadecimal Data Editor or otherwise. However, active data, such as the results of a SELECT statement in SQL Server Management Studio, will remain visible to users who have view rights to the table. In addition, because TDE is implemented at the database level, the database can use indexes and keys to optimize queries. TDE should not be confused with column-level encryption - it is a standalone feature that can encrypt even active data.

Database encryption is a one-time process that can be started by a Transact - SQL command or from within SQL Server Management Studio and then executed in a background thread. Encryption or decryption status can be monitored using dynamic management view sys.dm_database_encryption_keys... During laboratory tests, a 100 GB database was encrypted using an algorithm aES encryption _128 took about an hour. While the TDE overhead is driven primarily by the application workload, in some of the tests that we performed, the overhead was less than 5%. One thing to keep in mind that can affect performance is that if TDE is used in any of the databases on the instance, then the system database is also encrypted. tempDB... Finally, when using different functions at the same time, the following should be considered:

- When using backup compression to compress an encrypted database, the compressed backup size will be larger than without encryption, because encrypted data is not compressed well.

- Database encryption does not affect data compression (line or page).

TDE enables an organization to comply with regulatory requirements and an overall level of data protection. TDE is supported only in the SQL Server 2008 Enterprise and Developer editions; its activation does not require changes to existing applications. For more information, see Data Encryption in SQL Server 2008 Enterprise Edition or Discussion in Transparent data encryption.

In summary, SQL Server 2008 offers features, enhancements, and capabilities to make the database administrator's job easier. The 10 most popular are described here, but SQL Server 2008 has many more features to make life easier for administrators and other users. For “Top 10 Features” lists for other areas of working with SQL Server, see the other Top 10 ... in SQL Server 2008 articles on this site. Full list functions and their detailed description see SQL Server Books Online and the SQL Server 2008 Overview Web site.

SQL Server provides a number of different control constructs that are essential to writing efficient algorithms.

Grouping two or more teams into single block carried out using the BEGIN and END keywords:

<блок_операторов>::=

Grouped commands are treated as a single command by the SQL interpreter. Such grouping is required for constructions of polyvariant branching, conditional and cyclic constructions. BEGIN ... END blocks can be nested.

Some SQL commands should not be executed in conjunction with other commands (such as backup commands, changing the structure of tables, stored procedures, and the like), so they cannot be included in the BEGIN ... END clause together.

Often, a certain part of the program must be executed only when some logical condition is realized. The syntax for the conditional statement is shown below:

<условный_оператор>::=

IF log_expression

(sql_operator | statement_block)

(sql_operator | block_operators)]

Loops are organized using the following construction:

<оператор_цикла>::=

WHILE log_expression

(sql_operator | statement_block)

(sql_operator | statement_block)

A loop can be forced to stop by executing a BREAK command in its body. If you need to start the loop over without waiting for all the commands in the body to complete, you must execute the CONTINUE command.

To replace multiple single or nested conditionals, use the following construction:

<оператор_поливариантных_ветвлений>::=

CASE input_value

WHEN (value_for_compare |

log_expression) THEN

out_expression [, ... n]

[ELSE otherwise_out_expression]

If the input value and the value to be compared are the same, then the construction returns the output value. If the value of the input parameter is not found in any of the WHEN ... THEN lines, then the value specified after the ELSE keyword will be returned.

Basic objects of the SQL server database structure

Let's consider the logical structure of the database.

The logical structure defines the structure of tables, relationships between them, a list of users, stored procedures, rules, defaults, and other database objects.

Data is logically organized in SQL Server as objects. The main objects in a SQL Server database include the following objects.

A quick overview of the main database objects.

Tables

All data in SQL is contained in objects called tables... Tables are a collection of any information about objects, phenomena, processes of the real world. No other objects store data, but they can access data in the table. Tables in SQL have the same structure as tables in all other DBMS and contain:

· Strings; each line (or record) is a collection of attributes (properties) of a particular object instance;

· Columns; each column (field) represents an attribute or collection of attributes. The row field is the smallest element in the table. Each column in a table has a specific name, data type, and size.

Representation

Views (views) are virtual tables, the content of which is determined by the query. Like real tables, views contain named columns and rows of data. To end users, a view looks like a table, but it does not actually contain data, but only represents data located in one or more tables. The information that the user sees through the view is not stored in the database as an independent object.

Stored procedures

Stored procedures are a group of SQL commands combined into a single module. This group of commands is compiled and executed as a unit.

Triggers

Triggers are a special class of stored procedures that are automatically launched when data is added, changed, or deleted from a table.

Functions

Functions in programming languages \u200b\u200bare constructs that contain frequently executable code. The function performs some action on the data and returns some value.

Indexes

Index - a structure associated with a table or view and designed to speed up the search for information in them. An index is defined on one or more columns, called indexed columns. It contains the sorted values \u200b\u200bof the indexed column or columns with references to the corresponding row in the source table or view. The performance is improved by sorting the data. Using indexes can significantly improve search performance, but additional space is required in the database to store indexes.

© 2015-2019 site

All rights belong to their authors. This site does not claim authorship, but provides free use.

Date the page was created: 2016-08-08

My company just went through their annual review process and I finally convinced them that it was time to find the best solution for managing our SQL schema / scenes. We currently only have a few manual update scripts.

I've worked with VS2008 Database Edition at another company and it's an amazing product. My boss asked me to take a look at SQL Compare by Redgate and look for any other products that might be better. SQL Comparison is a great product too. However, they do not seem to support Perforce.

Have you used many products for this?

What tools do you use to manage SQL?

What must be included in the requirements before my company makes a purchase?

10 replies

I don't think there is a tool that can handle all the parts. VS Database Edition does not provide a decent release engine. Running individual scripts from the Solution Browser doesn't scale well in large projects.

At least you need

- IDE / editor

- repository source codewhich can be launched from your IDE

- naming convention and organization of various scripts in folders

- process for handling changes, managing releases, and executing deployments.

The last bullet is where things usually break. That's why. For better manageability and version tracking, you want to store each db object in its own own file script. That is, every table, stored procedure, view, index, etc. has its own file.

When something changes, you update the file and you have a new version in your repository with necessary information... When it comes to bundling multiple changes into a release, handling individual files can be cumbersome.

2 options that I used:

Besides storing all the individual database objects in their files, you have the release scripts, which are the concatenation of the individual scripts. Disadvantage of this: You have code in 2 places with all the risks and disadvantages. Potential: Running a release is as easy as executing a single script.

write a small tool that can read the script metadata from the release manifest and execute the eadch script specified in the manifest on the target server. There is no downside for this, except that you have to write code. This approach does not work for tables that cannot be dropped and recreated (once you live and have data), so for tables, you will have change scripts. So it will actually be a combination of both approaches.

I'm in the "script it yourself" camp as third party products will only guide you to manage your database code. I don't have one script for each object, because the objects change over time, and nine times out of ten just updating my "create table" script to have three new columns would be inadequate.

Database creation is by and large trivial. Set up a bunch of CREATE scripts, arrange them correctly (create database in front of schemas, schemas in front of tables, tables in front of procedures, call procedures before calls, etc.) and do it. Database change management is not easy:

- If you add a column to a table, you can't just drop the table and create it with a new column, because that would destroy all of your valuable production data.

- If Fred adds a column to table XYZ and Mary adds another column to table XYZ, which column is added first? Yes, the order of the columns in the tables doesn't matter [because you never use SELECT *, right?] If you are not trying to manage your database and keep track of versioning, then you have two "valid" databases that don't look like as each other become a real headache. We use SQL comparisons not for management, but for reviewing and keeping track of things, especially during development, and the few "they are different (but not magger)" situations that we can prevent us from noticing differences that matter.

- Likewise, when several projects (developers) work simultaneously and separately in common base data, it can get very tricky. Maybe everyone is working on the Next Big Thing project when suddenly someone has to start working on fixing bugs in the Last Big Thing project. How do you manage the required code modifications when the release order is variable and flexible? (Really funny times.)

- Changing table structures means changing data, and it can get hellishly difficult when you have to deal with backward compatibility. You add the "DeltaFactor" column, okay, so what do you do to fill that esoteric value for all your existing (read: stale) data? You add new table search and matching column, but how do you populate it for existing rows? These situations may not happen often, but when they do, you have to do it yourself. Third party tools simply cannot anticipate the needs of your business logic.

Basically, I have a CREATE script for each database followed by a series of ALTER scripts as our codebase changes over time. Each script checks to see if it can be run: it is the correct "view" of the database, the necessary pre-scripts have been executed, this script is already running. Only when the checks are passed will the script execute its changes.

As a tool, we use SourceGear Fortress to manage the underlying source code, Redgate SQL Compare for general support and troubleshooting, and a range of SQLCMD-based home scripts to "bulk" deploy scripts with changes to multiple servers and databases and track who applied what scripts to databases at what time. End result: All our databases are stable and stable, and we can readily prove which version is or was at any given time.

We require all database changes or inserts to things like lookup tables to be done in script and saved in source control. They are then deployed in the same way as any other software version deployment code. Since our developers do not have deployment rights, they have no choice but to create scripts.

I usually use MS Server Management Studio to manage sql, work with data, develop databases and debug it, if I need to export some data to sql script or I need to create some complex object in the database, I use EMS SQL Management Studio for SQL Server, because there I can see more clearly that narrow sections of my code and visual design in this environment give me an easier

I have an open source project (licensed under the LGPL) that is trying to solve problems with the correct version of the DB schema for (and more) SQL Server (2005/2008 / Azure), bsn ModuleStore. The whole process is very close to the concept explained by Philip Kelly's post here.

Basically, a separate part of the toolbox scripts the SQL Server database objects of the DB schema into files with standard formatting, so the contents of the file only change if the object has actually changed (as opposed to scripts made by VS, which also creates scripts, etc. ., noting all changed objects, even if they are virtually identical).

But the toolbox goes beyond that if you're using .NET: it allows you to embed SQL scripts in a library or application (as embedded resources) and then compare the compared embedded scripts to the current state in the database. Non-table changes (those that are not "destructive changes" as defined by Martin Fowler) can be applied automatically or on demand (for example, creating and deleting objects such as views, functions, stored procedures, types, indexes) and change scripts (which must be recorded manually) can be applied in the same process; new tables are also created as well as their setting data. After the update, the database schema is again compared against the scripts to ensure that the database is successfully updated before the changes are committed.

Note that all scripting and comparison code works without SMO, so you don't have the painful SMO dependency when using the bsn ModuleStore in applications.

Depending on how you want to access the database, the toolkit offers even more - it implements some of the ORM's capabilities and offers a very good and useful front-end approach for calling stored procedures, including transparent XML support with native .NET XML classes, and also for TVP (Table-Valued Parameters) as IEnumerable

Here is my script for keeping track of stored proc and udf and triggers in a table.

Create a table to hold your existing proc source code

Inject a table with all existing trigger and script data

Create a DDL trigger to track changes to them

/ ****** Object: Table. Script Date: 9/17/2014 11:36:54 AM ****** / SET ANSI_NULLS ON GO SET QUOTED_IDENTIFIER ON GO CREATE TABLE. (IDENTITY (1, 1) NOT NULL, (1000) NULL, (1000) NULL, (1000) NULL, (1000) NULL, NULL, NTEXT NULL, CONSTRAINT PRIMARY KEY CLUSTERED (ASC) WITH (PAD_INDEX \u003d OFF, STATISTICS_NORECOMPUTE \u003d OFF, IGNORE_DUP_KEY \u003d OFF, ALLOW_ROW_LOCKS \u003d ON, ALLOW_PAGE_LOCKS \u003d ON) ON) ON GO ALTER TABLE. ADD CONSTRAINT DEFAULT ("") FOR GO INSERT INTO. (,,,,,) SELECT "sa", "loginitialdata", r.ROUTINE_NAME, r.ROUTINE_TYPE, GETDATE (), r.ROUTINE_DEFINITION FROM INFORMATION_SCHEMA.ROUTINES r UNION SELECT "sa", "loginitialdata", v.TABLE_NAME "view", GETDATE (), v.VIEW_DEFINITION FROM INFORMATION_SCHEMA.VIEWS v UNION SELECT "sa", "loginitialdata", o.NAME, "trigger", GETDATE (), m.DEFINITION FROM sys.objects o JOIN sys.sql_modules m ON o.object_id \u003d m.object_id WHERE o.type \u003d "TR" GO CREATE TRIGGER ON DATABASE FOR CREATE_PROCEDURE, ALTER_PROCEDURE, DROP_PROCEDURE, CREATE_INDEX, ALTER_INDEX, DROP_INDEX, CREATE_TRIGGER, ALTER_TRIGGER, DROP_TRIGGER, ALTER_TABLE, ALTER_VIEW, CREATE_VIEW, DROP_VIEW AS BEGIN SET NOCOUNT ON DECLARE @data XML SET @data \u003d Eventdata () INSERT INTO sysupdatelog VALUES (@ data.value ("(/ EVENT_INSTANCE / LoginName)", "nvarchar (255)"), @ data.value ("(/ EVENT_INSTANCE / EventType) "," nvarchar (255) "), @ data.value (" (/ EVENT_INSTANCE / ObjectName) "," nvarchar (255) "), @ data.value ("(/ EVENT_INSTANCE / ObjectType)", "nvarchar (255)"), getdate (), @ data.value ("(/ EVENT_INSTANCE / TSQLCommand / CommandText)", "nvarchar (max)")) SET NOCOUNT OFF END GO SET ANSI_NULLS OFF GO SET QUOTED_IDENTIFIER OFF GO ENABLE TRIGGER ON DATABASE GO

Sometimes you really want to put your thoughts in order, put them on the shelves. And even better in alphabetical and thematic sequence, so that finally there is clarity of thinking. Now imagine what kind of chaos would be happening in " electronic brains»Any computer without clear structuring of all data and Microsoft SQL Server:

MS SQL Server

This software product is a relational database management system (DBMS) developed by Microsoft Corporation. A specially developed Transact-SQL language is used for data manipulation. The language commands for selecting and modifying a database are based on structured queries:

Relational databases are built on the interconnection of all structural elements, including due to their nesting. Relational databases have built-in support for the most common data types. As a result, SQL Server integrates support for programmatically structuring data using triggers and stored procedures.

Overview of MS SQL Server Features

The DBMS is part of a long chain of specialized software that Microsoft has created for developers. This means that all the links in this chain (application) are deeply integrated with each other.

That is, their tools easily interact with each other, which greatly simplifies the process of developing and writing software code. An example of such a relationship is the MS Visual Studio programming environment. Its installation package already includes SQL Server Express Edition.

Of course, this is not the only popular DBMS on the world market. But it is she who is more acceptable for computers running under windows management, due to its focus on this particular operating system. And not only because of this.

Benefits of MS SQL Server:

- Has a high degree of performance and fault tolerance;

- It is a multi-user DBMS and works on the client-server principle;

The client part of the system supports creating custom requests and sending them to the server for processing.

- Close integration with the Windows operating system;

- Support for remote connections;

- Support for popular data types, as well as the ability to create triggers and stored procedures;

- Built-in support for user roles;

- Extended database backup function;

- High degree of security;

- Each issue includes several specialized editions.

Evolution of SQL Server

The peculiarities of this popular DBMS are most easily traced when considering the history of the evolution of all its versions. In more detail, we will dwell only on those releases to which the developers have made significant and fundamental changes:

- Microsoft SQL Server 1.0 - Released back in 1990. Even then, experts noted the high speed of data processing, demonstrated even at maximum load in a multi-user mode;

- SQL Server 6.0 - Released in 1995. This version introduces the world's first support for cursors and data replication;

- SQL Server 2000 - in this version the server received a completely new engine. Most of the changes affected only the user side of the application;

- SQL Server 2005 - DBMS scalability has increased, management and administration process has been greatly simplified. A new API was introduced to support the .NET programming platform;

- Subsequent releases were aimed at developing the interaction of the DBMS at the level cloud technologies and business intelligence tools.

The basic package of the system includes several utilities for configuring SQL Server. These include:

Configuration manager. Lets you manage everyone network settings and database server services. Used to configure SQL Server on the network.

- SQL Server Error and Usage Reporting:

The utility is used to configure the sending of error reports to Microsoft support.

Used to optimize database server performance. That is, you can customize the functioning of SQL Server to suit your needs by enabling or disabling certain features and components of the DBMS.

The set of utilities included in Microsoft SQL Server may differ depending on the version and edition of the software package. For example, in the 2008 version you will not find SQL Server Surface Area Configuration.

Running Microsoft SQL Server

For example, we will use the 2005 version of the database server. The server can be started in several ways:

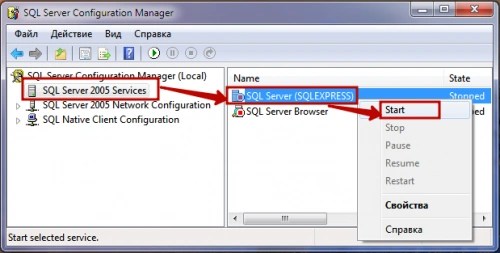

- Through the utility SQL Server Configuration Manager... In the application window on the left, select "SQL Server 2005 Services", and on the right - the required database server instance. Mark it and select "Start" in the submenu of the right mouse button.

- Using the environment SQL Server Management Studio Express... It is not included in the Express edition installation package. Therefore, it must be downloaded separately from the official Microsoft website.

To start the database server, run the application. In the dialog box " Server connection"In the" Server name "field, select the instance we need. In field " Authentication"Leave the value" Windows Authentication". And click on the "Connect" button:

SQL Server Administration Basics

Before starting MS SQL Server, you need to briefly familiarize yourself with the basic features of its configuration and administration. Let's start with a more detailed overview of several utilities from the DBMS:

- SQL Server Surface Area Configuration - you should contact here if you want to enable or disable any feature of the database server. At the bottom of the window there are two points: the first is responsible for network parameters, and in the second, you can activate a service or function that is turned off by default. For example, enable integration with the .NET framework via T-SQL queries:

If you've ever written locking schemes in other database languages \u200b\u200bto overcome the lack of locking (as I did), then you might still feel like you need to deal with locking yourself. Let me assure you that you can completely trust the lock manager. However, SQL Server offers several methods for managing locks, which we will discuss in detail in this section.

Do not apply lock settings or randomly change isolation levels — trust the SQL Server lock manager to balance contention and transaction integrity. Only if you are absolutely sure that the database schema is well configured and program code literally polished, you can slightly adjust the work of the lock manager to solve a specific problem. In some cases, setting select queries to not block will solve most problems.

Setting the isolation level of a connection

The isolation level determines the duration of a shared or exclusive lock on a connection. Setting the isolation level affects all queries and all tables used throughout the entire connection or until you explicitly replace one isolation level with another. The following example sets up tighter isolation than the default and prevents non-duplicate reads:

SET TRANSACTION ISOLATION LEVEL REPEATABLE READ Valid isolation levels are:

Read uncommited? serializable

Read commited? snapshot

Repeatable read

The current isolation level can be checked using the Database Integrity Check (DBCC) command:

DBCC USEROPTIONS

The results will be as follows (abbreviated):

Set Option Value

isolation level repeatable read

Isolation levels can also be set at the query or table level using locking parameters.

Using Database Snapshot Level Isolation

There are two options for the isolation level of database snapshots: snapshot and read commited snapshot. Snapshot isolation works like a repeatable read without dealing with blocking issues. The read commited snapshot isolation mimics the SQL Server default read commited level, while also removing blocking issues.

While transaction isolation is typically established at the connection level, snapshot isolation must be configured at the database level because it is

effectively tracks the versioning of rows in the database. Row versioning is a technology that creates copies of rows in the TempDB database to update. Besides the main loading of the TempDB database, the string versioning also adds a 14-byte string identifier.

Using Snapshot Isolation

The following snippet enables the snapshot isolation level. No other connections must be made to the database to update the database and enable the snapshot isolation level.

ALTER DATABASE Aesop

SET ALLOW_SNAPSHOT_ISOLATION ON

| To check if snapshot isolation is enabled in the database, issue the following SVS query: SELECT name, snapshot_isolation_state_desc FROM [* sysdatabases.

The first transaction now starts reading and remains open (i.e. not committed): USE Aesop

BEGIN TRAN SELECT Title FROM FABLE WHERE FablelD \u003d 2

You will get the following output:

At this time, the second transaction starts updating the same row that was opened by the first transaction:

SET TRANSACTION ISOLATION LEVEL Snapshot;

BEGIN TRAN UPDATE Fable

SET Title \u003d ‘Rocking with Snapshots’

WHERE FablelD \u003d 2;

SELECT * FROM FABLE WHERE FablelD \u003d 2

The result is as follows:

Rocking with Snapshots

Isn't it amazing? The second transaction was able to update the row even though the first transaction remained open. Let's go back to the first transaction and see the initial data:

SELECT Title FROM FABLE WHERE FablelD \u003d 2

The result is as follows:

If you open the third and fourth transactions, they will see the same initial value of The Bald Knight:

Even after the second transaction confirms the changes, the first one will still see the original value, and all subsequent transactions will see a new one, Rocking with Snapshots.

Using ISOLATION Read Commited Snapshot

Read Commited Snapshot isolation is enabled using a similar syntax:

ALTER DATABASE Aesop

SET READ_COMMITTED_SNAPSHOT ON

Like Snapshot isolation, this level also uses row versioning to resolve locking issues. Based on the example described in the previous section, in this case, the first transaction will see the changes made by the second as soon as they are committed.

Because Read Commited is the default isolation level in SQL Server, all you need to do is set database parameters.

Resolving Write Conflicts

Transactions writing data when the Snapshot isolation level is set may be blocked by previous uncommitted write transactions. Such a lock will not force a new transaction to wait - it will simply generate an error. Use a try statement to handle situations like this. ... ... catch, wait a couple of seconds and try to retry the transaction again.

Using lock options

The locking parameters allow you to make temporary adjustments to the locking strategy. While the isolation level affects the overall connection, the locking parameters are specific to each table in a particular query (Table 51.5). The WITH (lock_option) option is placed after the table name in the FROM clause of the query. Several parameters can be specified for each table, separated by commas.

|

Table 51.5. Blocking options |

Parameter blocking |

Description |

Isolation level. He does not establish or hold a lock. Equivalent to no blocking |

Default isolation level for transactions |

Isolation level. Holds shared and exclusive locks until the transaction is confirmed |

Isolation level. Holds a shared lock until the transaction completes |

Skipping locked lines instead of waiting |

Enabling row-level locking instead of page, extent, or table level |

Enabling page level locking instead of table level |

Automatically escalate row, page and extent locks to table level granularity |

Parameter blocking |

Description |

Non-application and non-retention of locks. Same as ReadUnCommited |

Enables exclusive table locking. Prevent other transactions from working with the table |

Hold shared lock until transaction is confirmed (similar to Serializable) |

Using an update lock instead of a shared lock and holding it. Locking other writes to data between initial reads and writes |

Holding an exclusive data lock until the transaction is confirmed |

The following example uses a locking option in the FROM clause of an UPDATE statement to prevent the dispatcher from escalating the locking granularity:

USE OBXKites UPDATE Product

FROM Product WITH (RowLock)

SET ProductName \u003d ProductName + ‘Updated 1

If the query contains subqueries, remember that accessing the table of each query generates a lock, which can be controlled using parameters.

Index-level locking limits

Isolation levels and blocking settings are applied at the connection and query level. The only way to manage table-level locks is to limit the lock granularity based on specific indexes. Using the sp_indexoption system stored procedure, row and / or page locks can be disabled for a specific index using the following syntax: sp_indexoption ‘index_name 1,

AllowRowlocks or AllowPagelocks,

This can come in handy in a number of special cases. If a table frequently waits because of page locks, setting allowpagelocks to off will set the row-level locking. Reduced lock granularity will have a positive effect on competition. In addition, if the table is rarely updated but frequently read, row and page level locks are undesirable; in this case, table-level locking is optimal. If updates are infrequent, then exclusive table locks are not a big problem.

The Sp_indexoption stored procedure is designed to fine tuning data schemas; that is why it uses index-level locking. To restrict locks on a table's primary key, use sp_help table_name to find the name of the primary key index.

The following command configures the ProductCategory table as a rarely updated classifier. The sp_help command first displays the name of the table's primary key index: sp_help ProductCategory

The result (truncated) is:

index index index

name description keys

PK_____________ ProductCategory 79A814 03 nonclustered, ProductCategorylD

unique, primary key located on PRIMARY

With the real name of the primary key available, the system stored procedure can set the index locking parameters:

EXEC sp_indexoption

'ProductCategory.РК__ ProductCategory_______ 7 9А814 03 ′,

'AllowRowlocks', FALSE EXEC sp_indexoption

'ProductCategory.PK__ ProductCategory_______ 79A81403 ′,

'AllowPagelocks', FALSE

Lock Timeout Management

If a transaction is waiting for a lock, then this wait will continue until the lock becomes possible. There is no default timeout limit - theoretically, it can last forever.

Fortunately, you can set the lock timeout using the set lock_timeout connection parameter. Set this parameter to the number of milliseconds or, if you want not to limit the time, set it to -1 (it is the default). If this parameter is set to 0, then the transaction will be immediately rejected if there is any lock. In this case, the application will be extremely fast, but ineffective.

The following query sets the lock timeout to two seconds (2000 milliseconds):

SET Lock_Timeout 2 00 0

If the transaction exceeds the specified timeout limit, then an error number 1222 is generated.

It is highly recommended to set a lock timeout limit at the connection level. This value is selected based on typical database performance. I prefer to set the wait time to five seconds.

Evaluating Competition Performance in a Database

It is very easy to create a database that does not address the issues of lock contention and contention when testing on a group of users. The real test is when several hundred users update orders at the same time.

Competition testing needs to be properly organized. At one level, it should contain the concurrent use of the same final form by many users. A .NET program that continually simulates

user viewing and updating of data. Good test should run 20 instances of a script that constantly loads the database and then let the testing team use the application. The lock count will help you see the performance monitor discussed in Chapter 49.

Multiplayer competition is best tested multiple times during development. As the MCSE exam manual says, “Don't let the real-world test come first.”

Application locks

SQL Server uses a very complex locking scheme. Sometimes a process or resource other than data needs a lock. For example, it might be necessary to run a procedure that is harmful if another user has started another instance of the same procedure.

Several years ago I wrote a cable routing program for nuclear power plant projects. When the plant geometry was designed and tested, engineers entered the composition of the cable equipment, its location and the types of cables used. After several cables were inserted, the program formed the route of their laying so that it was as short as possible. The procedure also considered cabling safety issues and segregated incompatible cables. At the same time, if multiple routing procedures were run at the same time, each instance tried to route the same cables, with the result that the result was incorrect. App blocking was a great solution to this problem.

Locking Applications opens up a whole world of SQL locks for use in applications. Instead of using data as a lockable resource, application locks lock the use of all custom resources declared in the sp__GetAppLock stored procedure.

Application locking can be applied in transactions; the blocking mode may be Shared, Update, Exclusive, IntentExclusice, or IntentShared. The return value of the procedure indicates whether the lock was successfully applied.

0. The lock was established successfully.

1. The lock was set when another procedure released its lock.

999. The lock was not set for another reason.

The sp_ReleaseApLock stored procedure releases the lock. The following example demonstrates how application locking can be used in a package or procedure: DECLARE @ShareOK INT EXEC @ShareOK \u003d sp_GetAppLock

@Resource \u003d ‘CableWorm’,

@LockMode \u003d 'Exclusive'

IF @ShareOK< 0

... Error handling code

... Program code ...

EXEC sp_ReleaseAppLock @Resource \u003d ‘CableWorm’

When application locks are viewed using Management Studio or sp_Lock, they are displayed as APP type. The following listing shows the abbreviated output of sp_Lock running concurrently with the above code: spid dbid Objld Indld Type Resource Mode Status

57 8 0 0 APP Cabllf 94cl36 X GRANT

There are two small differences to note in how application locks are handled in SQL Server:

Deadlocks are not automatically detected;

If a transaction acquires the lock several times, it must release it exactly the same number of times.

Deadlocks

A deadlock is a special situation that occurs only when transactions with multiple tasks compete for each other's resources. For example, the first transaction has acquired a lock on resource A, and it needs to lock resource B, and at the same time, the second transaction, which has locked resource B, needs to lock resource A.

Each of these transactions is waiting for the other to release its lock, and none of them can complete until it does. If there is no external influence or one of the transactions will be completed by certain reason (for example, by waiting time), then this situation can continue until the end of the world.

Deadlocks used to be a serious problem, but now SQL Server can successfully resolve it.

Creating a deadlock

The easiest way to create a deadlock situation in SQL Server is with two connections in the Management Studio Query Editor (Figure 51.12). The first and second transactions try to update the same rows, but in the opposite order. Using the third window to start the pGetLocks procedure, you can monitor the locks.

1. Create a second window in the query editor.

2. Place the block code Step 2 in the second window.

3. In the first window, place the block code Step 1 and press the key

4. In the second window, similarly execute the code Step 2.

5. Return to the first window and execute the block code Step 3.

6. After a short period of time, SQL Server detects the deadlock and resolves it automatically.

Below is the program code of the example.

- Transaction 1 - Step 1 USE OBXKites BEGIN TRANSACTION UPDATE Contact

SET LastName \u003d 'Jorgenson'

WHERE ContactCode \u003d 401 ′

Puc. 51.12. Create a deadlock situation in Management Studio using two connections (their windows are at the top)

The first transaction now has an exclusive write lock with a value of 101 in the ContactCode field. The second transaction will acquire an exclusive lock on the row with the value 1001 in the ProductCode field, and then try to exclusively lock the record already locked by the first transaction (ContactCode \u003d 101).

- Transaction 2 - Step 2 USE OBXKites BEGIN TRANSACTION UPDATE Product SET ProductName

\u003d 'DeadLock Repair Kit'

WHERE ProductCode \u003d '1001'

SET FirstName \u003d ‘Neals’

WHERE ContactCode \u003d '101'

COMMIT TRANSACTION

There is no deadlock yet, because transaction 2 is waiting for transaction 1 to complete, but transaction 1 is not yet waiting for transaction 2 to complete. In this situation, if transaction 1 exits and executes a COMMIT TRANSACTION statement, the data resource will be released and transaction 2 is safe. will get the opportunity to block it necessary and continue its actions.

The problem occurs when transaction 1 tries to update the row with ProductCode \u003d l. However, it will not receive the necessary exclusive lock for this, since this record is locked by transaction 2:

- Transaction 1 - Step 3 UPDATE Product SET ProductName

\u003d 'DeadLock Identification Tester'

WHERE ProductCode \u003d '1001'

COMMIT TRANSACTION

Transaction 1 will return the following text error message after a couple of seconds. The resulting deadlock can also be seen in SQL Server Profiler (Figure 51.13):

Server: Msg 1205, Level 13,

State 50, Line 1 Transaction (Process ID 51) was

deadlocked on lock resources with another process and has been chosen as the deadlock victim. Rerun the transaction.

Transaction 2 will complete its work as if the problem did not exist:

(1 row (s) affected)

(1 row (s) affected)

Figure: 51.13. SQL Server Profiler allows you to monitor for deadlocks using the Locks: Deadlock Graph event and identify the resource that caused the deadlock

Automatic deadlock detection

As demonstrated in the above code, SQL Server automatically detects a deadlock situation by checking for blocking processes and rolling back transactions.

those who completed the least amount of work. SQL Server continually checks for the existence of crosslocks. Deadlock detection latency can range from zero to two seconds (in practice, the longest time I've had to wait for this is five seconds).

Deadlock handling

When a deadlock occurs, the connection selected as the victim of the deadlock must retry its transaction. Since the work needs to be redone, it is good that the transaction that has managed to complete the least amount of work is rolled back - it will be the transaction that will be repeated from the beginning.

Error with code 12 05 must be caught by the client application, which must restart the transaction. If everything goes as it should, the user won't even suspect that a deadlock has occurred.

Instead of letting the server decide which of the transactions to choose as the “victim,” the transaction itself can be “played giveaway”. The following code, when placed in a transaction, informs SQL Server to rollback the transaction if a deadlock occurs:

SET DEADLOCKJPRIORITY LOW

Minimizing deadlocks

Even though deadlocks are easy to detect and deal with, it is best to avoid them. The guidelines below will help you avoid deadlocks.

Try to keep transactions short and free of unnecessary code. If some code does not need to be present in the transaction, it should be derived from it.

Never make a transaction code dependent on user input.

Try to create packages and locks in the same order. For example, table A is processed first, then tables B, C, etc. Thus, one procedure will wait for the second, and deadlocks cannot occur by definition.

Plan the physical layout to store concurrently sampled data as closely as possible on the data pages. To do this, use normalization and choose clustered indexes wisely. Reducing the spread of locks will help to avoid their escalation. Small locks will help you avoid competition.

Do not increase the isolation level unless necessary. A stricter isolation level increases the duration of the locks.