What is information? What is it based on? What goals does it serve, and what tasks does it perform? We will discuss all this in this article.

General information

When is the semantic way of measuring information applied? It is used when the essence of information matters, that is, when the content of the received message is of interest. But first, let us explain what it is. Note that the semantic way of measuring information is an approach that is difficult to formalize and has not yet been fully developed. It is used to measure the amount of meaning in the received data, in other words, how much of the received information is actually needed in a given situation. This approach is used to determine the content of the information received. When we speak of the semantic way of measuring information, we use the concept of a thesaurus, which is inextricably linked with the topic under consideration. What is it?

Thesaurus

Let us start with a short introduction and answer one question about the semantic way of measuring information: who introduced it? The founder of cybernetics, Norbert Wiener, suggested this method, but it was substantially developed by the Soviet scientist Yu. A. Schreider. A thesaurus is the totality of information that the recipient of the information has. By comparing the thesaurus with the content of the received message, we can find out how much the message reduced uncertainty. It is also worth correcting a common mistake: many people believe that the semantic way of measuring information was introduced by Claude Shannon. It is not known exactly how this misconception arose, but it is incorrect. Claude Shannon introduced a statistical way of measuring information, and the semantic approach is considered its "heir".

Graphical approach for determining the amount of semantic information in the received message

Why draw anything? Semantic measurement makes it possible to visualize the usefulness of data in easy-to-understand pictures. What does this mean in practice? To clarify the situation, the dependence is plotted as a graph. If the user has no knowledge about the essence of the received message (the thesaurus is zero), the amount of semantic information is also zero. Is there an optimal value? Yes: the optimal thesaurus is the one at which the amount of semantic information is maximal. A quick example: suppose the user receives a message written in an unfamiliar foreign language, or can read the message but learns nothing new from it because everything in it is already known. In such cases, the message is said to contain zero semantic information.

Historical development

Perhaps this should have been discussed earlier, but it is not too late. The first quantitative measure of information was introduced by Ralph Hartley in 1928. As mentioned above, Claude Shannon is often named as the founder of the semantic approach. Why is there such confusion? Hartley's measure was generalized by Claude Shannon in 1948, and together with Warren Weaver he made this statistical theory widely known. After that, the founder of cybernetics, Norbert Wiener, formed the idea of the thesaurus method, which received the greatest recognition in the form of the measure developed by Yu. A. Schreider. Note that understanding all this requires a fairly high level of knowledge.

Effectiveness

What does the thesaurus method give us in practice? It confirms the thesis that information has the property of relativity: its value is relative (or subjective). To evaluate scientific information objectively, the concept of a universal thesaurus was introduced; the degree of its change shows the significance of the knowledge that humanity acquires. At the same time, it is impossible to say in advance what final (or intermediate) result can be obtained from a given piece of information. Take computers, for example. Computing technology was created on the basis of vacuum tubes and the bit state of each structural element, and it was originally used for calculations. Now almost everyone owns something built on this technology: a radio, a telephone, a computer, a TV, a laptop. Even modern refrigerators, stoves, and washing machines contain some electronics designed to make these household appliances easier to use.

Scientific approach

Where is the semantic way of measuring information studied? Computer science is the science that deals with various aspects of this issue. What is its distinctive feature? The method is based on the "true / false" system, or the bit system "one / zero". When information arrives, it is divided into separate blocks named like units of speech: words, syllables, and so on. Each block is assigned a certain value. A quick example: two friends are standing next to each other. One says to the other: "Tomorrow we have a day off." Everyone already knows when the days off are, so the value of this information is zero. But if the second one says that he is working tomorrow, that will be a surprise for the first. Indeed, the plans the first person had made, for example, to go bowling or tinker in the workshop, may be disrupted. Each part of this example can be described using ones and zeros.
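To make the last sentence concrete, here is a minimal Python sketch that splits the example message into word blocks and writes each block as ones and zeros. It assumes plain 8-bit ASCII codes per character, and the function names are illustrative; this is only a syntactic encoding and says nothing about the semantic value of the blocks.

```python
def word_to_bits(word: str) -> str:
    """Encode each character of a word as an 8-bit ASCII code (assumes ASCII input)."""
    return " ".join(format(ord(ch), "08b") for ch in word)


def message_to_blocks(message: str) -> list[tuple[str, str]]:
    """Split a message into word blocks and pair each block with its bit string."""
    # Strip the period so that only the words remain as blocks.
    words = message.replace(".", "").split()
    return [(word, word_to_bits(word)) for word in words]


if __name__ == "__main__":
    for word, bits in message_to_blocks("Tomorrow we have a day off."):
        print(f"{word:>8}: {bits}")
```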

Operating with concepts

But what else is used besides the thesaurus? What else do you need to know to understand the semantic way of measuring information? The basic concepts that can be studied further are sign systems. They are understood as means of expressing meaning, such as rules for interpreting signs or their combinations. Let us look at another example from computer science. Computers operate with conditional zeros and ones; in fact, these are low and high voltages applied to the components of the hardware. Moreover, they transmit these ones and zeros in an endless stream. How can one tell where one block ends and the next begins? The answer is breaks (interruptions) in the stream. When information is transmitted in this way, it splits into separate blocks such as words, phrases, and individual meanings. In oral speech, pauses are likewise used to break data into separate blocks; they are so unobtrusive that we register most of them automatically. In writing, periods and commas serve this purpose.

Features:

Let us also touch on the properties of the semantic way of measuring information. We already know that it is a special approach that evaluates the importance of information. Can we say that data assessed in this way will be objective? No. Information is subjective. Take a school as an example. There is an excellent student who is ahead of the official curriculum, and an average student who studies what is presented in class. For the former, most of the information he receives at school will be of rather little interest, since he already knows it and is hearing or reading it not for the first time. Therefore, subjectively, it is not very valuable to him (except perhaps for some remarks the teacher makes while presenting the subject). The average student, on the other hand, has heard of the new material only vaguely, if at all, so for him the value of the data presented in the lessons is an order of magnitude greater.

Conclusion

Note that in informatics the semantic way of measuring information is not the only way to solve such problems. The choice should depend on the goals and the available resources. Therefore, if the topic interests you or you need it, we strongly recommend studying it in more detail and finding out what other ways of measuring information exist besides the semantic one.

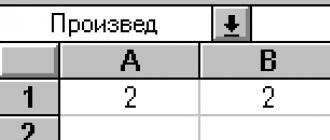

To measure information, two parameters are introduced: the amount of information $I$ and the amount of data $V_d$.

These parameters have different expressions and interpretation depending on the considered form of adequacy.

Syntactic adequacy. It reflects the formal and structural characteristics of information and does not affect its semantic content. At the syntactic level, the type of medium and the way of presenting information, the speed of transmission and processing, the size of the codes for the presentation of information, the reliability and accuracy of the conversion of these codes, etc. are taken into account.

Information considered only from a syntactic point of view is usually called data, since the semantic side does not matter.

Semantic (meaning-related) adequacy. This form determines the degree of correspondence between the image of an object and the object itself. The semantic aspect involves taking into account the semantic content of information. At this level, the facts that the information reflects are analyzed and semantic connections are considered. In computer science, semantic links are established between the codes used to present information. This form serves to form concepts and representations, to identify the meaning and content of information, and to generalize it.

Pragmatic (consumer) adequacy. It reflects the relationship between information and its consumer, the compliance of information with the management goal, which is implemented on its basis. The pragmatic properties of information are manifested only if there is a unity of information (object), user and management goal.

The pragmatic aspect is associated with the value and usefulness of information when the consumer develops a solution to achieve their goal. From this point of view, the consumer properties of information are analyzed. This form of adequacy is directly related to the practical use of information, that is, to how well its target function corresponds to the activity of the system.

Each form of adequacy has its own measure of the amount of information and the amount of data (Fig. 2.1).

Figure 2.1. Information measures

2.2.1. Syntactic measure of information

The syntactic measure of the amount of information deals with impersonal information that does not express a semantic relationship to the object.

The amount of data $V_d$ in a message is measured by the number of characters (digits) in the message. In different number systems, one digit has a different weight, and the data unit changes accordingly:

- in the binary number system, the unit is the bit (binary digit);

- in the decimal number system, the unit is the dit (decimal digit).

Example. A message in the binary system in the form of the eight-bit binary code 10111011 has a data volume $V_d = 8$ bits.

A message in the decimal system in the form of the six-digit number 275903 has a data volume $V_d = 6$ dit.

The amount of information is determined by the formula:

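$$I_\beta(\alpha) = H(\alpha) - H_\beta(\alpha),$$

where $H(\alpha)$ is the entropy of the system $\alpha$ before the message $\beta$ is received and $H_\beta(\alpha)$ is its entropy afterwards, i.e., the amount of information is measured by the change (decrease) in the uncertainty of the state of the system.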

The entropy of the system $H(\alpha)$, which has $N$ possible states, is, according to Shannon's formula:

$$H(\alpha) = -\sum_{i=1}^{N} p_i \log p_i,$$

where $p_i$ is the probability that the system is in the $i$-th state.

For the case when all states of the system are equally probable, its entropy is determined by the relation

$$H(\alpha) = \log N = n \log m, \qquad N = m^n,$$

where $N$ is the number of all possible displayed states;

$m$ is the base of the number system (the number of different symbols in the alphabet);

$n$ is the number of digits (characters) in the message.
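These formulas can be checked numerically. The Python sketch below (function names are illustrative, not from the source) takes logarithms to base 2 so that results are in bits. It computes Shannon entropy $H = -\sum p_i \log_2 p_i$ and, for equiprobable states, $\log_2 N = n \log_2 m$, which is the information obtained when a message removes all uncertainty. It applies them to the two example messages above, assuming every code combination is equally likely.

```python
import math


def shannon_entropy(probs: list[float]) -> float:
    """Entropy in bits: H = -sum(p_i * log2(p_i)); terms with p_i = 0 contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def hartley_entropy(m: int, n: int) -> float:
    """Entropy in bits of n equiprobable symbols over an m-symbol alphabet: log2(m**n) = n*log2(m)."""
    return n * math.log2(m)


if __name__ == "__main__":
    # The data volume V_d is simply the number of symbols in the message.
    binary_msg, decimal_msg = "10111011", "275903"
    print(len(binary_msg), "bits of data,", hartley_entropy(2, len(binary_msg)), "bits of information")
    print(len(decimal_msg), "dit of data,", round(hartley_entropy(10, len(decimal_msg)), 2), "bits of information")

    # For N equally likely states, Shannon's formula reduces to log2(N).
    N = 256
    print(shannon_entropy([1 / N] * N), math.log2(N))
```

Note that the decimal message carries about 19.9 bits even though its data volume is only 6 dit: the data unit and the information unit differ.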

2.2.2. Semantic measure of information

To measure the semantic content of information, i.e., its quantity at the semantic level, the thesaurus measure, which connects the semantic properties of information with the user's ability to receive an incoming message, has gained the greatest recognition. It relies on the concept of a user thesaurus.

A thesaurus is a collection of information held by a user or a system.

Depending on the relationship between the semantic content of information $S$ and the user's thesaurus $S_p$, the amount of semantic information $I_s$ perceived by the user and subsequently included in their thesaurus changes. The nature of this dependence is shown in Figure 2.2:

- when $S_p = 0$, the user does not perceive or understand the incoming information;

- as $S_p \to \infty$, the user knows everything and does not need the incoming information.

Figure 2.2. Dependence of the amount of semantic information perceived by the consumer on their thesaurus, $I_s = f(S_p)$

When assessing the semantic (meaningful) aspect of information, one should strive to match the values of $S$ and $S_p$.
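The text gives only the qualitative shape of the curve in Figure 2.2. The Python sketch below uses a purely hypothetical function, $I_s(S_p) = S_p \, e^{1 - S_p / S_{opt}}$, chosen only because it has the stated properties: it is zero at $S_p = 0$, reaches a single maximum at an optimal thesaurus $S_{opt}$ (where $I_s = S_{opt}$), and decays toward zero as $S_p \to \infty$. It is not a formula from thesaurus theory, just an illustration of the shape.

```python
import math


def semantic_information(s_p: float, s_opt: float = 1.0) -> float:
    """Hypothetical illustration of I_s = f(S_p): zero at S_p = 0,
    maximal at S_p = s_opt, and tending to zero as S_p grows."""
    if s_p < 0:
        raise ValueError("thesaurus size cannot be negative")
    return s_p * math.exp(1 - s_p / s_opt)


if __name__ == "__main__":
    for s_p in (0, 0.5, 1, 2, 5, 20):
        print(f"S_p = {s_p:>4}: I_s = {semantic_information(s_p):.3f}")
```

Any other hump-shaped function would serve equally well; the point is only that both a missing and an excessive thesaurus leave the user with little new semantic information.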

A relative measure of the amount of semantic information can be the content coefficient $C$, defined as the ratio of the amount of semantic information to its volume:

$$C = \frac{I_s}{V_d}.$$

2.2.3. Pragmatic measure of information

This measure determines the usefulness of the information (value) for the achievement of the user's goal. This measure is also a relative value, due to the peculiarities of using information in a particular system. It is advisable to measure the value of information in the same units (or close to them) in which the objective function is measured.

For comparison, the introduced measures of information are presented in Table 2.1.

Table 2.1. Information units and examples

| Measure of information | Units | Examples (for the computer field) |
|---|---|---|
| Syntactic: Shannon's approach | Reduction of uncertainty | Event probability |
| Syntactic: computer approach | Units of information presentation | Bit, byte, KB, etc. |
| Semantic | Thesaurus | Application software packages, personal computers, computer networks, etc. |
| Semantic | Economic indicators | Profitability, productivity, depreciation rate, etc. |
| Pragmatic | Use value | Monetary expression; memory capacity, computer performance, baud rate, etc.; information processing and decision-making time |

The average amount of information per state is called the entropy of a discrete source of information:

$$H = -\sum_{i=1}^{N} p_i \log p_i \qquad (4)$$

If we again measure uncertainty in binary units, the base of the logarithm should be taken equal to two:

$$H = -\sum_{i=1}^{N} p_i \log_2 p_i \qquad (5)$$

With equiprobable choice, all $p_i = 1/N$, and formula (5) turns into R. Hartley's formula (2):

$$H = -\sum_{i=1}^{N} \frac{1}{N} \log_2 \frac{1}{N} = \log_2 N$$

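The proposed measure was called entropy for a reason: the formal structure of expression (4) coincides with the entropy of a physical system defined earlier by Boltzmann. According to the second law of thermodynamics, the entropy of a closed space is determined by the expression

$$H = -\frac{1}{M} \sum_{i=1}^{N} m_i \ln \frac{m_i}{M},$$

where $M$ is the number of molecules in the space and $m_i$ is the number of molecules in the $i$-th velocity range. Since $m_i / M$ is the probability $p_i$ that a molecule falls into this range, the expression can be written as

$$H = -\sum_{i=1}^{N} p_i \ln p_i.$$

This formula completely coincides with (4). In both cases, the value characterizes the degree of diversity of the system.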

Using formulas (3) and (5), one can determine the redundancy $D$ of the source alphabet, which shows how rationally the symbols of this alphabet are used:

$$D = \frac{H_{\max} - H}{H_{\max}},$$

where $H_{\max}$ is the maximum possible entropy, determined by formula (3), and $H$ is the entropy of the source, determined by formula (5).

The point of this measure is that, with equiprobable choice, the same information load per symbol can be provided by a smaller alphabet than with non-equiprobable choice.
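To make the redundancy measure concrete, here is a minimal Python sketch. It computes $H$ by formula (5), takes $H_{\max} = \log_2 N$ as the equiprobable maximum for an $N$-symbol alphabet, and returns $D = (H_{\max} - H)/H_{\max}$. The four-symbol source and its probabilities are invented for illustration, and the function names are illustrative.

```python
import math


def entropy_bits(probs: list[float]) -> float:
    """Source entropy by formula (5): H = -sum(p_i * log2 p_i)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def redundancy(probs: list[float]) -> float:
    """Redundancy D = (H_max - H) / H_max, with H_max = log2(N) for an N-symbol alphabet."""
    n = len(probs)
    if n < 2:
        raise ValueError("redundancy is defined for alphabets of two or more symbols")
    h_max = math.log2(n)
    return (h_max - entropy_bits(probs)) / h_max


if __name__ == "__main__":
    # Hypothetical 4-symbol source: H = 1.75 bits, H_max = 2 bits, so D = 0.125.
    skewed = [0.5, 0.25, 0.125, 0.125]
    uniform = [0.25] * 4
    print(redundancy(skewed), redundancy(uniform))
```

The uniform source has zero redundancy, while the skewed one wastes an eighth of the capacity of its alphabet.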

Semantic level information measures

To measure the semantic content of information, i.e., its quantity at the semantic level, the most widespread measure is the thesaurus measure, which connects the semantic properties of information with the user's ability to receive the message. Indeed, to understand and use the information received, the recipient must have a certain amount of knowledge. Complete ignorance of the subject makes it impossible to extract useful information about it from the received message. As knowledge about the subject grows, so does the amount of useful information extracted from the message.

If we call the recipient's knowledge about a given subject a "thesaurus" (that is, a set of words, concepts, and names of objects connected by semantic links), then the amount of information contained in a message can be estimated by the degree of change in the individual thesaurus under the influence of this message.


In other words, the amount of semantic information extracted by the recipient from incoming messages depends on the degree of preparation of his thesaurus for the perception of such information.
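The idea of measuring information by the change in the recipient's thesaurus can be illustrated with a toy model. The Python sketch below is a hypothetical simplification, not a method from the source: a thesaurus is a set of known concepts, each concept in a message lists the prerequisite concepts needed to understand it, and the semantic information of the message is the number of new concepts the recipient can actually add.

```python
def absorb(thesaurus: set[str], message: dict[str, set[str]]) -> int:
    """Add the understandable new concepts of `message` to `thesaurus` and return how many were added.

    Hypothetical representation: `message` maps each concept it conveys to the set of
    concepts the recipient must already know in order to understand it.
    """
    known = set(thesaurus)  # snapshot of the thesaurus before the message arrives
    added = 0
    for concept, prerequisites in message.items():
        if concept not in known and prerequisites <= known:
            thesaurus.add(concept)
            added += 1
    return added


if __name__ == "__main__":
    message = {"entropy": {"probability", "logarithm"}}
    novice: set[str] = set()                          # empty thesaurus: cannot understand
    prepared = {"probability", "logarithm"}           # matched thesaurus: learns something new
    expert = {"probability", "logarithm", "entropy"}  # already knows it: nothing new
    print(absorb(novice, message), absorb(prepared, message), absorb(expert, message))
```

The three recipients mirror the cases discussed in the text: the same message yields information only for the recipient whose thesaurus is prepared for it.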

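Depending on the relationship between the semantic content of information $S$ and the user's thesaurus $S_p$, the amount of semantic information $I_s$ perceived by the user and subsequently included in their thesaurus changes. The nature of this dependence is shown in Figure 3. Consider the two limiting cases in which the amount of semantic information $I_s$ is zero: when $S_p \approx 0$, the user does not perceive or understand the incoming information; when $S_p \to \infty$, the user already knows everything and does not need the incoming information.

Figure 3. Dependence of the amount of semantic information perceived by the consumer on their thesaurus, $I_s = f(S_p)$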

The consumer acquires the maximum amount of semantic information when its semantic content $S$ is matched with their thesaurus ($S_p = S_p^{\text{opt}}$), that is, when the incoming information is understandable to the user and brings them previously unknown information (missing from their thesaurus).

Consequently, the amount of semantic information in a message, i.e., the amount of new knowledge the user receives, is a relative value. The same message can be meaningful to a competent user and meaningless to an incompetent one.

When assessing the semantic (content) aspect of information, one should strive to match the values of $S$ and $S_p$.

A relative measure of the amount of semantic information can be the content coefficient $C$, defined as the ratio of the amount of semantic information to its volume:

$$C = \frac{I_s}{V_d}.$$

Another approach to the semantic evaluation of information, developed within the science of science, takes the number of references (citations) to a document in other documents as the main indicator of the semantic value of the information it contains (a message, a publication). Specific indicators are obtained by statistical processing of citation counts in various samples.

Pragmatic level information measures

This measure determines the usefulness of the information (value) for the achievement of the user's goal. It is also a relative value due to the peculiarities of using this information in a particular system.

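One of the first Soviet scientists to address this problem was A. A. Kharkevich, who proposed taking as the measure of the value of information the amount of information needed to achieve the set goal, i.e., calculating the increase in the probability of achieving the goal. So if $p_0$ is the probability of achieving the goal before the information is received and $p_1$ is the probability after it is received, the value of information is

$$I = \log_2 p_1 - \log_2 p_0 = \log_2 \frac{p_1}{p_0}. \qquad (7)$$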

Thus, the value of information is measured in units of information, in this case in bits.

Expression (7) can be viewed as the result of normalizing the number of outcomes. Figure 4 shows three schemes in which the same numbers of outcomes, 2 and 6, are taken for points 0 and 1, respectively. The starting position is point 0. Based on the information received, a transition is made to point 1. The goal is marked with a cross, and favorable outcomes are shown as lines leading to the goal. Let us determine the value of the information received in all three cases:

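a) the number of favorable outcomes is three: $p_0 = 1/2$, $p_1 = 3/6 = 1/2$, and therefore $I = \log_2 1 = 0$ bits;

b) there is one favorable outcome: $p_0 = 1/2$, $p_1 = 1/6$, and therefore $I = \log_2 (1/3) \approx -1.58$ bits;

c) the number of favorable outcomes is four: $p_0 = 1/2$, $p_1 = 4/6 = 2/3$, and therefore $I = \log_2 (4/3) \approx 0.42$ bits.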

In example b) a negative value of information (negative information) is obtained. Such information, which increases the initial uncertainty and decreases the likelihood of achieving the goal, is called disinformation. Thus, in example b), we got a disinformation of 1.58 binary units.
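The three cases can be recomputed with a few lines of Python. The sketch assumes 2 outcomes at point 0 with one of them favorable ($p_0 = 1/2$), which is consistent with the value of about 1.58 obtained in case b), and 6 outcomes at point 1; the function name is illustrative.

```python
import math


def information_value(p0: float, p1: float) -> float:
    """Kharkevich's value of information in bits: log2(p1 / p0), where p0 and p1 are
    the probabilities of reaching the goal before and after the message."""
    if not (0 < p0 <= 1 and 0 < p1 <= 1):
        raise ValueError("probabilities must lie in (0, 1]")
    return math.log2(p1 / p0)


if __name__ == "__main__":
    p0 = 1 / 2  # assumed: 1 favorable outcome out of 2 at point 0
    for case, favorable in (("a", 3), ("b", 1), ("c", 4)):
        value = information_value(p0, favorable / 6)  # 6 outcomes at point 1
        print(f"{case}) {value:+.2f} bits")
```

Case b) gives a negative value, matching the disinformation described above, while case a) leaves the probability of success unchanged and so carries no value.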

LEVELS OF INFORMATION TRANSMISSION PROBLEMS

When information processes are carried out, information is always transferred in space and time from the source of information to the receiver (recipient). Various signs or symbols are used to convey it, for example, a natural or an artificial (formal) language, which allows it to be expressed in some form called a message.

Message: a form of information representation as a collection of characters (symbols) used for transmission.

A message, as a set of signs, can be studied from the point of view of semiotics (from the Greek semeion, "sign"), the science of the properties of signs and sign systems, at three levels:

1) syntactic, where the internal properties of messages are considered, that is, the relations between signs that reflect the structure of a given sign system (external properties are studied at the semantic and pragmatic levels);

2) semantic, where the relations between signs and the objects, actions, and qualities they denote are analyzed, that is, the semantic content of the message and its relation to the source of information;

3) pragmatic, where the relationship between the message and the recipient is considered, that is, the consumer content of the message and its relation to the recipient.

Thus, taking into account a certain relationship between the problems of transferring information with the levels of studying sign systems, they are divided into three levels: syntactic, semantic and pragmatic.

Problems of the syntactic level concern the theoretical foundations for building information systems whose main performance indicators would be close to the maximum possible, as well as the improvement of existing systems to make them more efficient. These are purely technical problems of improving the methods of transmitting messages and their material carriers, signals. At this level, the problems of delivering messages to the recipient as a set of characters are considered, taking into account the type of medium and the way information is presented, the transmission and processing speed, the size of the codes used to present information, the reliability and accuracy of converting these codes, etc., while completely abstracting from the semantic content of messages and their intended purpose. At this level, information considered only from a syntactic point of view is usually called data, since the semantic side does not matter.

Modern information theory studies mainly the problems of this particular level. It is based on the concept of "amount of information", which is a measure of the frequency of the use of signs, which in no way reflects either the meaning or the importance of the messages transmitted. In this regard, it is sometimes said that modern information theory is at the syntactic level.

Problems of the semantic level are associated with formalizing and taking into account the meaning of the transmitted information and with determining the degree of correspondence between the image of an object and the object itself. At this level, the facts that the information reflects are analyzed, semantic connections are considered, concepts and representations are formed, the meaning and content of information are revealed, and the information is generalized.

The problems of this level are extremely complex, since the semantic content of information depends more on the recipient than on the semantics of the message presented in any language.

At the pragmatic level, what matters are the consequences of the consumer receiving and using the information. Problems at this level are associated with determining the value and usefulness of information when the consumer develops a solution to achieve their goal. The main difficulty here is that the value and usefulness of information can be completely different for different recipients and, in addition, depend on a number of factors, such as the timeliness of its delivery and use. High requirements for the speed of information delivery are often dictated by the fact that control actions must be carried out in real time, i.e., at the rate at which the state of controlled objects or processes changes. Delays in the delivery or use of information can be disastrous.

As already noted, the concept of information can be considered under various restrictions imposed on its properties, i.e. at different levels of consideration. Basically, there are three levels - syntactic, semantic and pragmatic. Accordingly, on each of them, different estimates are used to determine the amount of information.

At the syntactic level, the amount of information is estimated with probabilistic methods that take into account only the probabilistic properties of information and ignore the others (semantic content, usefulness, relevance, etc.). The mathematical, and in particular probabilistic, methods developed in the mid-20th century made it possible to treat the amount of information as a measure of the reduction in the uncertainty of knowledge.

This approach, also called probabilistic, postulates the principle: if some message leads to a decrease in the uncertainty of our knowledge, then it can be argued that such a message contains information. In this case, the messages contain information about any events that can be realized with different probabilities.

The formula for determining the amount of information for events with different probabilities, obtained from a discrete source of information, was proposed by the American scientist C. Shannon in 1948. According to this formula, the amount of information can be determined as follows:

$$I = -\sum_{i=1}^{N} p_i \log_2 p_i, \qquad (2.1)$$

where $I$ is the amount of information, $N$ is the number of possible events (messages), and $p_i$ is the probability of the individual events (messages).

The amount of information determined by formula (2.1) is never negative. Since the probability of an individual event does not exceed one, $\log_2 p_i$ is negative or zero, so a minus sign is placed in front of the sum in formula (2.1) to obtain a non-negative amount of information.

If the individual events are equally probable and form a complete group of events, i.e.,

$$p_1 = p_2 = \dots = p_N = \frac{1}{N},$$

then formula (2.1) turns into R. Hartley's formula:

$$I = \log_2 N. \qquad (2.2)$$

In formulas (2.1) and (2.2), the relationship between the amount of information I and, accordingly, the probability (or number) of individual events is expressed using the logarithm.

The use of logarithms in formulas (2.1) and (2.2) can be explained as follows. For simplicity, we will use relation (2.2). Let the argument $N$ take, in turn, values from the series 1, 2, 4, 8, 16, 32, 64, etc. To determine which of the $N$ equiprobable events has occurred, for each number in the series we must successively perform operations of choosing between two possible events.

So, for $N = 1$ the number of operations is 0 (the probability of the event is 1), for $N = 2$ it is 1, for $N = 4$ it is 2, for $N = 8$ it is 3, etc. Thus, we get the series 0, 1, 2, 3, 4, 5, 6, etc., which corresponds to the values of the function $I$ in relation (2.2).

The sequence of values taken by the argument $N$ is known in mathematics as a geometric progression, and the sequence of values taken by the function $I$ forms an arithmetic progression. Thus, the logarithm in formulas (2.1) and (2.2) establishes the well-known relationship between a geometric and an arithmetic progression.
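The correspondence between the two progressions can be checked directly. The Python sketch below counts how many "choose one of two halves" operations are needed to single out one of $N$ equiprobable events ($N$ a power of two, as in the series above) and prints the count next to $\log_2 N$. The function name is illustrative.

```python
import math


def halving_steps(n: int) -> int:
    """Count binary choices needed to isolate one of n equiprobable events (n a power of two)."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a positive power of two")
    steps = 0
    while n > 1:
        n //= 2  # each binary choice discards half of the remaining candidates
        steps += 1
    return steps


if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16, 32, 64):
        print(n, halving_steps(n), math.log2(n))
```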

To quantify (estimate) any physical quantity, it is necessary to define a unit of measurement, which in measurement theory is called a measure.

As already noted, information must be encoded before being processed, transmitted and stored.

Coding is done using special alphabets (character systems). In informatics, which studies the processes of receiving, processing, transmitting and storing information using computing (computer) systems, binary coding is mainly used, in which a sign system consisting of two symbols 0 and 1 is used. For this reason, in formulas (2.1) and (2.2) the number 2 is used as the base of the logarithm.

Based on the probabilistic approach to determining the amount of information, the two symbols of a binary sign system can be considered as two different possible events. Therefore, the unit of the amount of information is taken to be the amount of information contained in a message that halves the uncertainty of knowledge (before the message is received, the probability of each event is 0.5; after it is received, the probability of the event that occurred is 1; the uncertainty thus decreases by a factor of 1 / 0.5 = 2). This unit of information is called a bit (from the English binary digit). Thus, under binary coding, one bit is adopted as the measure of the amount of information at the syntactic level.

The next larger unit for measuring the amount of information is the byte, a sequence of eight bits:

1 byte = $2^3$ bits = 8 bits.

In informatics, multiples of the byte are also widely used. However, unlike the metric system, where multiples are formed with the factor $10^n$ (n = 3, 6, 9, etc.), multiples of units of the amount of information use the factor $2^n$ (n = 10, 20, 30, etc.). This choice is explained by the fact that computers operate mainly with numbers in the binary rather than the decimal number system.

Byte multiples of the unit of measurement of the amount of information are defined as follows:

1 kilobyte (KB) = $2^{10}$ bytes = 1024 bytes;

1 megabyte (MB) = $2^{10}$ KB = 1024 KB;

1 gigabyte (GB) = $2^{10}$ MB = 1024 MB;

1 terabyte (TB) = $2^{10}$ GB = 1024 GB;

1 petabyte (PB) = $2^{10}$ TB = 1024 TB;

1 exabyte (EB) = $2^{10}$ PB = 1024 PB.

Units for measuring the amount of information whose names contain the prefixes "kilo", "mega", etc. are, from the point of view of measurement theory, not correct, since these prefixes belong to the metric system, in which multiples are formed with the factor $10^n$ (n = 3, 6, 9, etc.). To eliminate this inconsistency, the International Electrotechnical Commission (IEC), which sets standards for the electronics industry, has approved a set of new prefixes for units of information: kibi, mebi, gibi, tebi, pebi, exbi. However, the old designations are still in use, and it will take time for the new names to be widely adopted.
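The gap between the traditional binary multiples and the metric (decimal) prefixes grows with each step. The Python sketch below, with illustrative names, prints both values for each prefix together with the IEC binary symbol (KiB, MiB, and so on) that corresponds to the powers of two listed above.

```python
PREFIXES = [("kilo", "kibi", "KiB"), ("mega", "mebi", "MiB"), ("giga", "gibi", "GiB"),
            ("tera", "tebi", "TiB"), ("peta", "pebi", "PiB"), ("exa", "exbi", "EiB")]


def multiples(step: int) -> tuple[int, int]:
    """Return (binary, decimal) byte counts for the step-th prefix: 2**(10*step) and 10**(3*step)."""
    return 2 ** (10 * step), 10 ** (3 * step)


if __name__ == "__main__":
    for step, (metric, iec, symbol) in enumerate(PREFIXES, start=1):
        binary, decimal = multiples(step)
        excess = (binary / decimal - 1) * 100  # how much larger the binary multiple is, in percent
        print(f"1 {symbol} ({iec}byte) = {binary:,} B; 1 {metric}byte (SI) = {decimal:,} B; "
              f"difference {excess:.1f}%")
```

The discrepancy is about 2.4% for the first prefix and keeps growing, which is why the distinction matters for large storage sizes.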

The probabilistic approach is also used to determine the amount of information presented using sign systems. If we consider the symbols of the alphabet as a set of possible messages N, then the amount of information that one character of the alphabet carries can be determined by formula (2.1). If each letter of the alphabet is equally probable in the text of the message, you can use formula (2.2) to determine the amount of information.

The more characters an alphabet contains, the more information one character of it carries. The number of characters in an alphabet is called the power of the alphabet. The amount of information (information volume) of a message that is encoded with a sign system and contains a certain number of characters (symbols) is determined by the formula:

$$V = K \cdot I = K \log_2 N,$$

where $V$ is the information volume of the message; $I = \log_2 N$ is the information volume of one symbol (sign); $K$ is the number of characters (symbols) in the message; $N$ is the power of the alphabet (the number of characters in the alphabet).
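A minimal Python sketch of $V = K \log_2 N$, assuming equiprobable characters as formula (2.2) requires. The function name and the example alphabet sizes are arbitrary choices for illustration, and the characters of the message are not checked against any particular alphabet.

```python
import math


def information_volume(message: str, alphabet_size: int) -> float:
    """Information volume in bits of a message of K characters over an alphabet of power N,
    assuming equiprobable characters: V = K * log2(N).
    The message characters are not checked against the alphabet."""
    if alphabet_size < 1:
        raise ValueError("alphabet must contain at least one character")
    per_char = math.log2(alphabet_size)  # I = log2 N, bits per character
    return len(message) * per_char


if __name__ == "__main__":
    # A 10-character message over a 32-character alphabet: 10 * 5 = 50 bits.
    print(information_volume("HELLOWORLD", 32))
    # The same length over a 256-character alphabet (one byte per character): 80 bits.
    print(information_volume("HELLOWORLD", 256))
```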