08.10.2012

Whether faster memory is worth buying is a question many buyers face. With prices falling for DDR3 modules rated at 1600 MHz and above, it has become even more relevant. The answer would seem obvious: of course it is worth it! But how much of a gain does a higher memory frequency actually provide, and is it worth paying extra? That is what we will try to find out.

Until recently, choosing RAM was simple: if you had spare money, you bought DDR3-1600; if not, you settled for DDR3-1333. Now store shelves offer a huge selection of RAM rated above 1600 MHz at very reasonable prices. This encourages buyers to opt for faster models at 1866, 2000, and 2133 MHz. In theory this is quite justified: the higher the memory frequency, the greater the throughput and the higher the performance.

In real conditions, however, the picture may be somewhat different. No, a system with DDR3-2000 modules cannot be slower than one with DDR3-1333 modules; as the saying goes, you cannot spoil porridge with butter. But the difference in performance can be almost invisible in most everyday applications. In fact, among commonly used applications only archivers respond clearly and unambiguously to a higher memory frequency with better performance. In the rest, the difference is hard to notice.

At the same time, manufacturers and sellers keep actively promoting fast RAM as a solution for gamers. As a result, users get the impression that memory frequency is almost as critical as the number of processor cores, or the number of stream processors and the memory bus width of the graphics chip.

To debunk or, on the contrary, confirm this claim, we devised this test. Its principle is simple: we test the same memory kit in several games at different frequencies and try to find out what gain a higher memory frequency actually gives, and whether it gives any at all.

For the test we used our test bench with a Team Xtreem Dark memory kit from Team Group, rated at 1866 MHz. The two 4 GB modules, marked TDD34G1866HC9KBK, have standard timings of 9-11-9-27 at the rated frequency and operate at 1.65 V. These are quite affordable yet fast modules with a three-year warranty and distinctive heat spreaders, and they may well be the choice of a gamer who does not want to pay crazy money for modules rated above 2 GHz. They therefore fit the concept of the test perfectly.

We decided to test the memory at three frequencies: 1333, 1600, and 1866 MHz. We dropped the lower frequencies of 800 and 1066 MHz, since buying such modules (if you can still find them on sale) makes no sense: they cost the same as DDR3-1333 modules. A 2000 MHz mode was also planned in theory, but harsh reality changed these plans. The memory multiplier of our ASUS P8Z77-V motherboard does not support that frequency; the next step it offers above 1866 MHz is 2133 MHz. At this frequency the system booted at the stock voltage, was usable, and even passed the 3DMark Physics test, but launching any game led to a blue screen. Neither looser timings nor a higher voltage helped. We therefore had to give up on the higher frequencies.

In principle this is not a big deal, because the purpose of this test is not to check the fastest and most expensive modules but to determine how game performance depends on memory frequency. If it turns out that there is a gain, then the results at three different frequencies will let us estimate the approximate gain for modules rated above 2000 MHz by extrapolating the results.
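The idea of extending measured results to a higher frequency can be sketched as a straight-line estimate through two measured points. This is only an illustration under the assumption that the trend stays linear; the function name `extrapolate_linear` and the fps values are placeholders, not measurements from this article.

```python
def extrapolate_linear(x1, y1, x2, y2, x):
    """Extend the straight line through (x1, y1) and (x2, y2) to the point x."""
    slope = (y2 - y1) / (x2 - x1)
    return y1 + slope * (x - x1)


# Placeholder numbers only, NOT results from this article:
# 60.0 fps at 1333 MHz and 61.0 fps at 1866 MHz.
predicted = extrapolate_linear(1333, 60.0, 1866, 61.0, 2133)
print(f"Estimated fps at 2133 MHz: {predicted:.2f}")
```

Such an estimate is only as good as the linearity assumption; a single outlier among the measured points would distort it noticeably.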

During the test we decided not to change the timings, so as not to muddy the results. In the end, though, we gave the lowest frequency a small head start: in addition to the 9-11-9-27 mode, we ran DDR3-1333 with timings of 7-7-7-21, which are standard for good DDR3-1333 modules. All tests were run at a resolution of 1280 by 720 pixels, at maximum quality settings with 16x anisotropic filtering and without anti-aliasing. The resolution was lowered to reduce the influence of the video card, which traditionally becomes the bottleneck in gaming tests.

Now that the input data is set, it is time to move on to the results. To evaluate the theoretical gain in memory bandwidth with increasing frequency, all configurations were tested in the AIDA64 package. This synthetic test produced quite logical and expected results: throughput grows with frequency, and the mode with tighter timings scores higher than the mode with looser timings at the same frequency. We now turn to the results of the game tests.

In Performance mode, 3DMark 11 showed that memory frequency does influence the final result, and that the influence is fairly linear: the faster the memory, the more points. How many more? As the diagram shows, with a total result of more than 6000 points, the system with DDR3-1866 memory outperformed DDR3-1333 with equal timings by only 111 points. This difference amounts to a modest 1.8 percent. If the DDR3-1333 memory runs at its more customary 7-7-7-21 timings, the gap to the fastest memory shrinks to 1.5 percent. That is, in this case faster memory does not give a noticeable gain.
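The arithmetic behind these percentages is easy to check. The sketch below works backwards from the two figures given in the text (111 points and 1.8 percent) to the baseline score they imply; the helper names are made up for illustration, and because 1.8 is a rounded value the recovered baseline is only approximate.

```python
def implied_baseline(delta, percent):
    """Baseline score for which `delta` points equal `percent` percent."""
    return delta / (percent / 100.0)


def percent_gain(baseline, improved):
    """Relative gain of `improved` over `baseline`, in percent."""
    return (improved - baseline) / baseline * 100.0


base = implied_baseline(111, 1.8)  # approximate, since 1.8 is rounded
print(f"Implied DDR3-1333 score: about {base:.0f} points")
print(f"Gain check: {percent_gain(base, base + 111):.1f} percent")
```

The implied baseline is consistent with the "more than 6000 points" mentioned above.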

The Physics test was the only one in the 3DMark 11 package that reacted strongly to a higher memory frequency and lower timings. The load on the video card here is small, but the load on the processor, which calculates the physics, is very high. Accordingly, the load on memory, which stores all the results of the data processing, is also high. As a result, the gap between DDR3-1866 and DDR3-1333 with equal timings was just over 16 percent. Tightening the timings of the slowest memory reduces the gap to 12.8 percent. DDR3-1600 landed exactly midway between DDR3-1333 and DDR3-1866, as its frequency suggests it should. Since the way this test uses resources is very unusual for real applications, we will not take its results into account. There are no games with such a load distribution, and most likely there never will be.

Metro 2033

To be honest, we did not expect to see such interesting results. They are interesting not for a big gain but for their dependence on timings. In a direct comparison of the three frequencies with equal timings we see the same linearity: performance grows with frequency. But the growth is meager and almost imperceptible: DDR3-1866 is faster than DDR3-1333 by only 0.8 frames per second, a modest 1.3 percent. DDR3-1600 again sat between them. But DDR3-1333 with timings of 7-7-7-21 showed remarkable potential, matching the result of the fast DDR3-1866 with timings of 9-11-9-27. This suggests that lower timings are preferable for this game, and that DDR3-1600 with timings of 8-8-8-24 could well have won this test. Incidentally, moving the physics calculation from the video card to the processor changed neither the ranking nor the gaps, as one might have expected after the 3DMark 11 Physics test.
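Why tighter timings can offset a lower frequency becomes clearer when the first timing (CAS latency) is converted from clock cycles to nanoseconds. The sketch below does only that; it ignores the other timings and the memory controller, so it is a rough illustration rather than a model of the measured results. The function name is an assumption made for this example.

```python
def cas_latency_ns(data_rate_mts, cas_cycles):
    """CAS latency in nanoseconds.

    DDR memory makes two transfers per clock, so the clock period
    is 2000 / data_rate nanoseconds when data_rate is in MT/s.
    """
    return cas_cycles * 2000.0 / data_rate_mts


configs = [
    ("DDR3-1333 CL9", 1333, 9),
    ("DDR3-1333 CL7", 1333, 7),
    ("DDR3-1600 CL9", 1600, 9),
    ("DDR3-1866 CL9", 1866, 9),
]
for name, rate, cl in configs:
    print(f"{name}: {cas_latency_ns(rate, cl):.2f} ns")
```

By this measure, DDR3-1333 at CL7 is much closer to DDR3-1866 at CL9 than DDR3-1333 at CL9 is, which is in line with the behaviour seen in this game.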

Crysis 2

Encouraged by the previous tests, which showed a small, almost invisible to the naked eye, but still present performance gain, we moved on to Crysis 2, and here a revelation awaited us. All four configurations, as the diagram shows, produced exactly the same result, to within a tenth of a frame per second. Yes, that happens. Apparently the CryEngine engine is completely insensitive to the bandwidth of the memory subsystem. We note this fact and move on to the last test.

DiRT Showdown

This test produced the most contradictory and inexplicable result. First, we were surprised by DDR3-1333 with the tightest timings, which lost to memory running at the same frequency with looser timings, something that in principle should not happen. True, the deficit was minuscule: 00.8 percent. DDR3-1600 was faster than DDR3-1333 with the same timings by a reasonable and explainable 1.7 percent. But DDR3-1866 showed an incredible gain! Its lead over DDR3-1600 was a solid 5.8 percent, which is really a lot given all the previous results. It would have been logical to see the same 1.7 percent that separated DDR3-1600 from DDR3-1333; then the gain would have been linear. From experience we know that such results can be random and inexplicable, caused by some internal glitch in the program. In our practice there was a case when 3DMark 03 quite undeservedly gave a GeForce FX 5200 a result that exceeded those of the top cards of the time. Since it is customary in statistics to discard outliers, that is what we will do.

Announcing a new utility for measuring performance in memory-dependent applications

Typically, when testing platform performance, the emphasis is on processor-dependent applications. But the speed of the system depends on more than the central processor. And here we are not even talking about graphics-heavy applications or the use of GPUs for general-purpose computing, where the choice of video card plays a significant role. As you might guess, this article is about the impact of memory performance and our attempt to quantify it.

The dependence of overall system performance on memory is complex, which makes it hard to evaluate memory speed directly, that is, to compare different modules. For example, memory running at 1600 MHz has twice the bandwidth of memory running at 800 MHz, and synthetic memory tests dutifully draw a bar twice as tall. But if you test the whole system with these two types of memory using the popular applications usually used to test processors, you will not get anywhere near a twofold difference in performance. The overall performance index may differ by a few tens of percent at most.
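The twofold bandwidth difference follows directly from the way theoretical peak bandwidth is computed: transfers per second multiplied by the bus width. A minimal sketch, assuming the standard 64-bit (8-byte) bus per memory channel; the function name is made up for illustration, and the data rates are the nominal marketing figures.

```python
def peak_bandwidth_mb_s(data_rate_mts, bus_bytes=8, channels=1):
    """Theoretical peak bandwidth in MB/s for a given data rate in MT/s."""
    return data_rate_mts * bus_bytes * channels


for rate in (800, 1333, 1600, 1866):
    print(f"DDR3-{rate}: {peak_bandwidth_mb_s(rate)} MB/s per channel")

ratio = peak_bandwidth_mb_s(1600) / peak_bandwidth_mb_s(800)
print(f"1600 vs 800: {ratio:.1f}x")
```

This is the figure that synthetic tests approach; as the text explains, real applications rarely see anything close to it.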

This makes synthetic memory tests uninformative from a practical point of view. However, we cannot guarantee that testing with real applications gives a completely reliable picture either, since some modes where memory performance is truly critical have probably been overlooked.

Brief theory

To understand the specifics of the problem, consider a schematic picture of how an application, the CPU, and the memory subsystem interact. The factory conveyor has long been considered a good analogy for the operation of a central processor: instructions from the program code move along the conveyor, and the functional units of the processor process them like machine tools. Modern multi-core CPUs are then like factories with several workshops. Hyper-Threading, for example, can be compared to a conveyor carrying parts for several car models at once, with smart machine tools processing them simultaneously and using a mark on each part to tell which model it belongs to. Say a red car and a blue car are being assembled: the painting machine uses red paint for the parts of the red car and blue paint for the blue one. Feeding parts for two models at once keeps the machine tools better loaded. And if the painting machine has two spray guns and can paint two parts in different colors at the same time, the conveyor can run at full capacity regardless of the order in which the parts arrive. Finally, the latest fashion, to be implemented in future AMD processors, in which CPU cores share some functional blocks, can be compared to making some especially bulky and expensive machine tools common to two workshops in order to save floor space and reduce capital costs.

In this analogy, system memory is the outside world, which supplies raw materials to the plant and receives the finished product, and cache memory is a kind of warehouse on the factory grounds. The more system memory we have, the larger the virtual world we can supply with our products, and the higher the CPU frequency and the number of cores, the more powerful and productive our plant. And the larger the cache, that is, the factory warehouse, the fewer requests go out to system memory for raw materials and components.

Memory performance in this analogy corresponds to the speed of the transport system that delivers raw materials and ships products to the outside world. Suppose deliveries to the factory are made by truck. Then the parameters of the transport system are the truck's capacity and its speed, that is, the delivery time. The analogy is a good one, since the CPU works with memory through separate transactions on fixed-size blocks, and the cells in each block lie side by side in one region of memory rather than being scattered arbitrarily. For the overall productivity of the plant, not only the speed of the conveyor matters, but also the speed at which components are delivered and finished products are shipped out.

The product of the truck's cargo volume and its speed, that is, the amount of goods that can be transported per unit of time, corresponds to memory bandwidth. But systems with the same memory bandwidth are obviously not necessarily equivalent: each component matters on its own. A fast, maneuverable truck may turn out to be better than a large but slow one, because the required data may lie in different regions of memory far from each other, and the truck's capacity (the transaction size) is much smaller than the total volume (the memory). In that case even a large truck has to make two trips, and its capacity goes unused.
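The truck comparison can be put into numbers with a deliberately simple model: every separate trip pays a fixed latency, and the cargo itself moves at the bandwidth rate. The two memory configurations below are hypothetical, chosen only to show the effect, and the model ignores the overlapping of requests that real memory controllers perform.

```python
def transfer_time_ns(total_bytes, block_bytes, latency_ns, bandwidth_gb_s, scattered):
    """Estimated time to move total_bytes.

    scattered=True:  every block sits elsewhere and pays the latency again.
    scattered=False: one latency up front, then the data streams.
    Note that 1 GB/s equals 1 byte per nanosecond.
    """
    blocks = -(-total_bytes // block_bytes)  # ceiling division
    trips = blocks if scattered else 1
    return trips * latency_ns + total_bytes / bandwidth_gb_s


# Hypothetical memories: a "nimble truck" and a "big slow truck".
nimble = dict(latency_ns=50, bandwidth_gb_s=10)
big = dict(latency_ns=80, bandwidth_gb_s=20)

total, block = 64 * 1000, 64
for scattered in (True, False):
    t_nimble = transfer_time_ns(total, block, scattered=scattered, **nimble)
    t_big = transfer_time_ns(total, block, scattered=scattered, **big)
    kind = "scattered" if scattered else "sequential"
    print(f"{kind}: nimble {t_nimble:.0f} ns, big {t_big:.0f} ns")
```

With scattered data the low-latency memory wins despite half the bandwidth; with one long sequential stream the high-bandwidth memory wins.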

Other programs have so-called local memory access, that is, they read or write closely located memory cells, and they are relatively indifferent to the speed of random access. This property of programs explains the benefit of larger processor caches, which, being so close to the core, are ten times faster than memory. Even if a program needs, say, 512 MB of memory in total, in any given short interval (for example, a million clock cycles, which is a millisecond or less) it may work with only a few megabytes of data, which are cached successfully. The cache contents then need updating only from time to time, which generally happens quickly. But the opposite situation is also possible: a program occupies only 50 MB of memory but constantly works with all of it. 50 MB far exceeds the typical cache size of current desktop processors, and, roughly speaking, with a 5 MB cache 90% of memory accesses are not served from the cache: 9 out of 10 accesses go straight to memory because the needed data is not in the cache. Overall performance is then limited almost entirely by memory speed, since the processor is almost always waiting for data.
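The 90% figure and its consequences can be reproduced with a back-of-the-envelope model. It assumes that accesses are spread uniformly at random over the working set, which is the worst case described above, and it uses illustrative hit and miss costs of 10 and 200 clock cycles; these two numbers are assumptions for the example, not measurements.

```python
def miss_fraction(working_set_mb, cache_mb):
    """Share of accesses that miss the cache under uniform random access."""
    if working_set_mb <= cache_mb:
        return 0.0
    return 1.0 - cache_mb / working_set_mb


def average_access_cycles(miss, hit_cycles, miss_cycles):
    """Average cost of one memory access, in clock cycles."""
    return (1.0 - miss) * hit_cycles + miss * miss_cycles


miss = miss_fraction(50, 5)  # the 50 MB program on a 5 MB cache
avg = average_access_cycles(miss, hit_cycles=10, miss_cycles=200)
print(f"Misses: {miss:.0%}, average access cost: {avg:.0f} cycles")
```

With these assumed costs the average access is almost as expensive as a full trip to memory, so the cache barely helps such a program.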

When the data is not in the cache, a memory access takes hundreds of clock cycles. In terms of time, one such memory access instruction is equivalent to dozens of arithmetic instructions.

“Memory Independent” Applications

Let us use this clumsy term, just this once, for applications whose performance in practice does not depend on swapping the modules for faster, lower-latency ones. Where do such applications come from? As already noted, programs differ in their memory requirements, depending on the volume used and the nature of access. For some programs only overall memory bandwidth matters, while others are sensitive to the speed of access to random regions of memory, also known as memory latency. It is also very important that a program's dependence on memory parameters is largely determined by the characteristics of the central processor, above all by the size of its cache. With a larger cache, the program's working set (the most frequently used data) may fit entirely in the processor's cache, which speeds the program up dramatically and makes it insensitive to memory characteristics.

In addition, it matters how often memory access instructions occur in the program code. If a significant part of the computation works on registers and the share of arithmetic operations is large, the influence of memory speed is reduced. Moreover, modern CPUs can reorder instructions and start loading data from memory long before it is actually needed for computation. This technique is called prefetching. The quality of its implementation also affects how memory-dependent an application is. In theory, a CPU with perfect prefetching would not need fast memory at all, since it would never sit idle waiting for data.

Speculative prefetching techniques are being actively developed, in which the processor sends a read request even before it knows the exact memory address. For example, for each memory access instruction the processor remembers the last address that instruction read. When the CPU sees that the instruction will soon be executed again, it sends a read request for the last stored address. With luck, the read address will not have changed, or will have changed only within the block that is read in a single memory transaction. The memory access latency is then partially hidden, because the processor executes the instructions that precede the read while the data is being delivered. But of course this approach is not universal, and the effectiveness of prefetching depends strongly on the program's algorithm.
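The last-address scheme described above can be mimicked with a toy predictor. It keeps, per instruction, the block that instruction touched last and counts how often the next access falls into the same block. This is a simplified illustration, not the mechanism of any particular processor; the 64-byte block size and the instruction labels are assumptions.

```python
BLOCK = 64  # assumed size of one memory transaction, in bytes


def prefetch_hit_rate(accesses, block=BLOCK):
    """Fraction of accesses that land in the block predicted by the
    'same block as last time' rule.

    accesses: list of (instruction_id, address) pairs in program order.
    """
    last_block = {}
    hits = 0
    for instr, addr in accesses:
        blk = addr // block
        if last_block.get(instr) == blk:
            hits += 1
        last_block[instr] = blk
    return hits / len(accesses) if accesses else 0.0


# One instruction walking an array in 4-byte steps: mostly predictable.
sequential = [("load_a", 4 * i) for i in range(1024)]
# One instruction jumping between far-apart blocks: never predictable.
scattered = [("load_b", (i * 7919 * 64) % (1 << 20)) for i in range(1024)]

print(f"sequential walk: {prefetch_hit_rate(sequential):.1%}")
print(f"scattered jumps: {prefetch_hit_rate(scattered):.1%}")
```

The contrast between the two access streams is the point made in the text: whether such prefetching pays off depends on the algorithm, not on the memory.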

Software developers, however, are also aware of the characteristics of current processors, and they can often (if they wish) arrange the data so that it fits in the cache of even budget processors. With a well-optimized application, such as some video encoders or 2D and 3D editors, memory from a practical point of view has no such parameter as performance; it has only capacity.

Another reason a user may not notice any difference after changing the memory is that it is already too fast for the processor in use. If all processors suddenly became 10 times slower, then in most programs it would make absolutely no difference to system performance what type of memory was installed, be it DDR-400 or DDR3-1600. And if CPUs became radically faster, the performance of a significant share of programs would, on the contrary, depend much more on the characteristics of the memory.

Thus, actual memory performance is a relative quantity, determined among other things by the processor used and by the characteristics of the software.

Memory-dependent applications

So in which user tasks does memory performance matter more? For a reason that seems strange but is in fact well founded: in those that are hard to test.

Strategy games with complex and "slow" artificial intelligence (AI) come to mind first. Nobody likes to use them as CPU tests, since measurement tools are either missing or suffer from large errors. Many factors influence how quickly an AI algorithm reaches a decision, for example the variability sometimes built into the AI so that its decisions look more "human". Accordingly, carrying out different behaviors takes different amounts of time.

But this does not mean that the system has no performance in this task or that it is undefined. It is simply hard to measure accurately: that requires collecting a large amount of statistical data, that is, running many tests. In addition, such applications depend heavily on memory speed because they use complex data structures spread across RAM in often unpredictable ways, so the optimizations mentioned above may simply not work or may be ineffective.

Games of other genres may also depend quite strongly on memory performance, if not because of smart artificial intelligence then because of their own algorithms for simulating the virtual world, including the physics model. In practice, however, they are most often limited by the video card, so testing memory with them is not very convenient either. In addition, the minimum fps is an important parameter for comfortable gameplay in first-person 3D games: a dip in the heat of a fierce battle can have dire consequences for the virtual hero. And the minimum fps is, one might say, impossible to measure, again because of the variable behavior of the AI, the peculiarities of the physics calculations, and random system events that can also cause dips. How is one supposed to analyze the data in such a case?

Testing game speed with recorded demos is also of limited use, because not all parts of the game engine are exercised when a demo is played back, and in a real game other factors may affect speed. Moreover, even in such semi-artificial conditions the minimum fps is unstable, and it is rarely reported in test results. Yet, we repeat, it is the most important parameter, and whenever the game has to go to memory for data, fps dips are very likely. Indeed, modern games are highly dependent on both memory capacity and memory performance because of the complexity and variety of their code, which, besides the physics engine and artificial intelligence, also prepares the graphics model, processes sound, transfers data over the network, and so on. Incidentally, it would be a mistake to assume that the graphics processor handles all the graphics itself: it only draws triangles, textures, and shadows, while the CPU still generates the commands, and for a complex scene that is a computationally intensive task. For example, when the Athlon 64 with its integrated memory controller came out, its greatest speed gain over the old Athlon was in games, although they did not use 64-bit mode, SSE2, or the other new Athlon 64 features. It was precisely the significant increase in the efficiency of working with memory, thanks to the integrated controller, that made the then-new AMD processor the performance leader, above all in games.

Many other complex applications, primarily server applications that process a random stream of events, also depend significantly on the performance of the memory subsystem. In general, the software used in organizations often has no counterpart, in terms of the nature of its code, among popular applications for home users, and so a very significant class of tasks goes without adequate assessment.

Another fundamental case of increased memory dependency is multitasking, that is, running several demanding applications at the same time. Recall again the same AMD Athlon 64 with its integrated memory controller, which by the time Intel Core was announced was already available in a dual-core version. When Intel Core came out on a new core, AMD processors began to lose everywhere except in SPEC rate, the multi-threaded variant of SPEC CPU in which as many copies of the test task are run as there are cores in the system. The new Intel core, with its greater computing power, simply ran into the limit of memory performance in this test, and even a large cache and a wide memory bus did not help.

But why did this not show up in individual user tasks, including multithreaded ones? The main reason is that most user applications that support multiple cores well are also thoroughly optimized in other respects. Recall once more the video and graphics packages, which gain the most from multithreading: they are all optimized applications. In addition, less memory is used when the code is parallelized inside one program than when several copies of the same task are launched, let alone several different applications.

But if you run several different applications on your PC at once, the memory load grows many times over. This happens for two reasons. First, the cache is divided among several tasks, so each task gets only a part of it. In modern CPUs the L2 or L3 cache is shared by all cores. If one program uses many threads, each can run on its own core and work with a common data set in the L3 cache, and if the program is single-threaded, it gets the whole L3 to itself. But if the threads belong to different tasks, the cache has to be split between them.

The second reason is that more threads generate more memory read and write requests. Returning to the factory analogy, it is clear that if all the workshops run at full capacity, more raw materials are needed. And if they build different models, the factory warehouse fills up with assorted parts, and the conveyor of each workshop cannot use parts intended for another workshop, since they belong to a different model.

In general, problems with limited memory performance are the main reason for the low scalability of multicore systems (after, in fact, the fundamental limitations of the possibility of parallelizing algorithms).
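The "fundamental limitation" on parallelizing algorithms mentioned in parentheses is commonly expressed through Amdahl's law, which the article itself does not name. The sketch below shows that limit alone; it does not model the memory bottleneck, which in practice pushes the real speedup lower still.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Upper bound on speedup when only part of the work can run in parallel."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


for cores in (2, 4, 8):
    print(f"{cores} cores, 90% parallel code: {amdahl_speedup(0.9, cores):.2f}x")
```

Even with 90 percent of the work parallelized, the speedup can never reach 10x however many cores are added.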

A typical example of such a situation on a PC is running a game, Skype, an antivirus, and a video encoder at the same time. It is not the most typical scenario, but it is hardly a fantastic one, and it is very hard to measure speed correctly in it, since the result is influenced by the OS scheduler, which on each run may distribute tasks and threads across cores differently, give them different priorities and time slices, and do so in a different order. Again, the most important parameter is the notorious smoothness of operation, a characteristic analogous to minimum fps in games, which in this case is even harder to measure. What is the use of running a game or some other program while encoding a video file if the game is unplayable because the picture stutters? And that even though the video file may convert quickly, since the multi-core processor may be underloaded in this case. Here the load on the memory system is much greater than when each of the listed tasks runs separately.

In the case of using a PC as a workstation, the situation of simultaneous execution of several applications is even more typical than for a home PC, and the speed of work is even more important.

Testing issues

A whole group of factors reduces the sensitivity of CPU-oriented tests to memory speed. Programs that are very sensitive to memory make poor CPU tests, in the sense that they respond weakly to the CPU model. Such programs can tell processors with an integrated memory controller, which reduces memory access latency, from those without one, but within one family they hardly respond to processor frequency, showing similar results at 2500 and 3000 MHz. Such applications are often rejected as CPU tests because the tester simply does not understand what limits their performance and attributes it to the quirks of the program itself. It would be surprising if all processors (both AMD and Intel) showed the same result in a test, but this is quite possible for an application that is very memory dependent.

To avoid accusations of bias and questions about why this or that program was chosen, only the most popular applications, the ones everyone uses, are included in tests. But such a selection is not entirely representative: the most popular applications, precisely because they are so widely used, are often very well optimized, and optimizing a program begins with optimizing its work with memory, which matters more than, for example, optimization for SSE1-2-3-4. But not all programs in the world are so well optimized; there are simply not enough programmers capable of writing fast code to go around. Returning once more to the popular video encoders, many of them were written with the direct and active participation of engineers from CPU manufacturers. The same goes for some other popular resource-intensive programs, in particular the slow filters of 2D graphics editors and the rendering engines of 3D modeling packages.

At one time it was popular to compare computer programs with roads. This analogy was needed to explain why the Pentium 4 ran faster in some programs and the Athlon in others. The Intel processor did not like branching and "drove" faster along straight roads. It is a very simplified analogy, but it conveys the essence surprisingly well. It is especially interesting when two points on the map are connected by two roads: an "optimized" straight, good-quality road and a "non-optimized" bumpy, winding one. Depending on which road is chosen, one processor or the other wins, although in each case they do the same job. That is, the Athlon wins on non-optimized code, and the Pentium 4 wins with simple optimization of the application, and here we are not even talking about special optimization for the Netburst architecture, with which the Pentium 4 could even compete with Core. The trouble is that good "optimized" roads are expensive and slow to build, and this fact largely predetermined the sad fate of Netburst.

But if we leave the popular, well-trodden tracks, we find ourselves in a forest where there are no roads at all. A great many applications were written without any optimization, which almost inevitably means a strong dependence on memory speed whenever the working data exceeds the size of the CPU cache. In addition, many programs are written in programming languages that, in principle, do not support optimization.

Special memory test

To correctly assess the effect of memory speed on system performance in cases where memory matters (the "memory-dependent" applications mentioned above, multitasking, and so on), and taking all the above circumstances into account, we decided to create a special memory test. In its code structure it is a kind of generalized, complex, memory-dependent application, and it has a mode for launching several programs at once.

What are the advantages of this approach? There are many. Unlike "natural" programs, the test lets us control the amount of memory used, the way it is allocated, and the number of threads. Specially controlled memory allocation evens out the influence of the program's memory manager and of the operating system on performance, so that the results are not noisy and testing can be done correctly and quickly. The measurement accuracy makes it possible to test in a relatively short time and to evaluate a larger number of configurations.

The test is based on measuring the speed of operation of algorithms from software constructs typical for complex applications that work with non-local data structures. That is, the data is distributed randomly in the memory, rather than making up one small block, and access to the memory is not sequential.

As a model problem we took a modification of the Astar test from SPEC CPU 2006 Int (incidentally, proposed for inclusion in that suite by the author of this article; the memory test uses a variant of the algorithm adapted for graphs), together with the task of sorting data using various algorithms. Astar is a complex algorithm with intricate memory access, while sorting a numerical array is a basic programming task found in many applications. Sorting is included partly to back up the results of the complex test with performance data from a simple but common, classical task.

Interestingly, there are several sorting algorithms, and they differ in their memory access patterns. In some, memory access is mostly local, while others use complex data structures (for example, binary trees), and memory access is chaotic. It is interesting to compare how strongly memory parameters affect each type of access, even though the same amount of data is processed and the number of operations does not differ much.

According to studies of the SPEC CPU 2006 test suite, the Astar test is one of several that are most correlated with the overall result of the package on x86-compatible processors. But in our memory test, the amount of data used by the program has been increased, since the typical memory size has increased since the release of the SPEC CPU 2006 test. The program also acquired internal multithreading.

The Astar program finds a path on a map using the algorithm of the same name (A*). The task itself is typical of computer games, primarily strategy games. But the software constructs used, in particular the heavy use of pointers, are also typical of complex applications - for example, server code, databases, or ordinary game code, not necessarily artificial intelligence.

The program operates on a graph connecting the points of the map. That is, each element contains links to its neighbors, as if they were connected by roads. There are two subtests: in one the graph is built from a two-dimensional matrix, that is, a flat map, and in the other from a three-dimensional matrix, which represents a more complex data array. The data structure resembles linked lists, a popular way of organizing data in programs that create objects dynamically. This type of addressing is characteristic of object-oriented software in general, which includes almost all financial, accounting, and expert applications. The nature of their memory accesses contrasts sharply with that of low-level optimized computing programs such as video encoders.
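For readers who have not met A*, the sketch below shows the idea on a small flat map. It is only an illustration under simplifying assumptions: the grid of strings, the `#` wall marker, the unit step cost, and the function name `astar` are all invented here, and the real test works on a pointer-linked graph rather than on an implicit grid.

```python
import heapq

def astar(grid, start, goal):
    """Return the length of the shortest 4-connected path, or None if unreachable.

    grid is a list of equal-length strings; '#' marks a blocked cell.
    start and goal are (row, column) tuples.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance never overestimates with unit-cost 4-way moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    best = {start: 0}                      # cheapest known cost to each cell
    frontier = [(h(start), 0, start)]      # (cost + heuristic, cost, cell)
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            return cost
        if cost > best[cell]:
            continue                       # stale queue entry, skip it
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    heapq.heappush(
                        frontier, (new_cost + h((nr, nc)), new_cost, (nr, nc))
                    )
    return None

if __name__ == "__main__":
    demo = ["....",
            ".##.",
            "...."]
    print(astar(demo, (0, 0), (2, 3)))
```

The priority queue and the `best` dictionary are exactly the kind of structures whose entries end up scattered across memory as the map grows.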

Each subtest has two multithreading variants. In both, N threads are started, but in one variant each thread searches for a path on its own map, and in the other all threads search for paths simultaneously on one shared map. This produces several different access patterns, which makes the test more revealing. The default memory usage is the same in both cases.

Thus, the first version of the test contains 6 subtests:

- Path finding on a 2D matrix, shared map

- Path finding on a 2D matrix, separate map for each thread

- Path finding on a 3D matrix, shared map

- Path finding on a 3D matrix, separate map for each thread

- Array sorting using the quicksort algorithm (local memory access)

- Array sorting using the heapsort algorithm (complex memory access)

Test results

The test results show the time spent finding a given number of paths and the time spent sorting the array, so a lower value means a better result. The first assessment is qualitative: does this processor, at a given frequency, respond at all to a change in memory frequency or settings, bus frequency, timings, and so on? That is, do the test results on this system differ with different types of memory, or is the minimum memory speed enough for the processor?

Quantitative results, expressed in percent relative to the default configuration, estimate how much memory-dependent applications or multitasking workloads speed up or slow down with various types of memory.
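Since the raw results are times, the percentage has to be derived from them. One common way to do it is sketched below; the article does not state the exact formula used, so both the formula and the function name `speedup_percent` are assumptions, and the numbers in the example are invented.

```python
def speedup_percent(baseline_seconds, measured_seconds):
    """Speed change relative to the baseline configuration, in percent.

    Positive means the measured configuration is faster (takes less time).
    """
    return (baseline_seconds / measured_seconds - 1.0) * 100.0

if __name__ == "__main__":
    # Hypothetical timings: 10.0 s on the default memory, 8.0 s on faster memory.
    print(f"{speedup_percent(10.0, 8.0):+.1f}%")
```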

The test is not intended for precise comparison of different CPU models: cache organization and data prefetching algorithms can differ significantly, so the test may partly favor certain models. But a qualitative comparison of CPU families is quite possible. Memory of the same specification from different manufacturers behaves the same, so no subjective component arises there.

The test can also be used to assess how processors scale with frequency, whether during overclocking or within a model range. It shows at what frequency the processor starts to be held back by memory. Often a processor overclocks well on paper, and synthetic tests based on simple arithmetic show a gain matching the change in frequency, but a memory-dependent application may show no gain at all because memory speed has not increased accordingly. Another reason is that the CPU core may draw more power under a complex application and either become unstable or lower its own frequency, which simple arithmetic tests do not always reveal.

Conclusion

If platforms and sockets did not change so often, one could always recommend buying the fastest memory, since memory requirements grow after an upgrade to a more powerful, faster processor. However, the optimal strategy is still to buy a balanced configuration: memory itself also progresses, albeit more slowly, and by the time the processor is replaced the memory may well need replacing too. Therefore, testing the memory subsystem in combination with different processors, including overclocked ones, remains a pressing task that lets you choose the optimal combination without overpaying for extra megahertz.

In fact, speeding up data access is the cornerstone of modern processor engineering. There will always be a bottleneck here, unless the processor comes to consist entirely of cache memory, which, incidentally, is not far from the truth: caches of various levels occupy the lion's share of the die area of modern CPUs. (Intel, in particular, earned its record billions partly because it once developed a way of packing caches more densely on a chip, so that more cache cells and more bytes of cache fit per unit of die area.) However, there will always be applications that either cannot be optimized so that their data fits in the cache, or that simply have no one to optimize them.

Therefore, fast memory is often as practical a choice as an SUV is for someone who wants to travel comfortably both on asphalt and on roads with "non-optimized" pavement.

In this study we will try to answer the following question: what matters more for maximum computer performance, a high RAM frequency or low timings? Two RAM kits from Super Talent will help us with this. Let's see what the memory modules look like and what their specifications are.

Super Talent X58

The manufacturer has "dedicated" this kit to the Intel X58 platform, as the label on the sticker shows. However, this immediately raises some questions. As is well known, triple-channel memory mode is strongly recommended for maximum performance on the Intel X58 platform. Despite this, the Super Talent kit consists of only two modules. Purist system builders may find this approach bewildering, but there is some sense in it. The top-end platform segment is relatively small, and most personal computers use RAM in dual-channel mode. An ordinary user may therefore see a kit of three modules as an unjustified purchase, and anyone who really needs a lot of RAM can buy three kits of two modules each. The manufacturer states that the Super Talent WA1600UB2G6 memory can operate at 1600 MHz DDR with timings of 6-7-6-18. Now let's see what information is stored in the SPD profile of these modules.

And again, there is some discrepancy between the real and declared characteristics. The highest JEDEC profile has the modules running at 1333 MHz DDR with timings of 9-9-9-24. There is also an extended XMP profile whose frequency matches the declared one, 800 MHz (1600 MHz DDR), but its timings are somewhat worse: 6-8-6-20 instead of the 6-7-6-18 on the sticker. Nevertheless, this kit worked without problems in the declared mode: 1600 MHz DDR with timings of 6-7-6-18 at 1.65 V. As for overclocking, the modules would not run at higher frequencies despite looser timings and a higher supply voltage. Moreover, raising Vmem to 1.9 V caused instability even in the original mode. Unfortunately, the heat spreaders are glued very firmly to the memory chips, so we did not dare remove them for fear of damaging the modules. That is a pity, since the type of chips used could have shed light on this behavior.

Super Talent P55

The second RAM kit we are looking at today is positioned by the manufacturer as a solution for the Intel P55 platform. The modules are fitted with low-profile black heat spreaders. The maximum declared mode is 2000 MHz DDR with timings of 9-9-9-24 at 1.65 V. Now let's look at the profiles stored in the SPD. The fastest JEDEC profile runs the modules at 800 MHz (1600 MHz DDR) with timings of 9-9-9-24 at 1.5 V, and there are no XMP profiles in this case. As for overclocking, with slightly looser timings these modules were able to operate at 2400 MHz DDR, as the screenshot below shows.

Moreover, the system also booted with the modules at 2600 MHz DDR, but launching test applications led to a freeze or reboot. As with the previous Super Talent kit, these modules did not respond at all to a higher supply voltage. As it turned out, memory overclocking and system stability benefited more from raising the voltage of the memory controller integrated into the processor. However, we will leave the search for the highest stable frequencies and settings in such extreme modes to enthusiasts. Next, we focus on the following question: to what extent do RAM frequency and timings affect overall computer performance? In particular, we will try to find out which is better: high-frequency RAM running with loose timings, or the tightest possible timings at less than the maximum frequency.

Test conditions

Testing was carried out on a test bench with the following configuration. In all tests the processor ran at 3.2 GHz, for reasons explained below, and a powerful graphics card was needed for the tests in Crysis.

As mentioned above, we will try to find out how RAM frequency and timings affect overall computer performance. Of course, these parameters can simply be set in the BIOS before running the tests. But, as it turned out, with a bclk frequency of 133 MHz the motherboard we used offers a RAM frequency range of 800 - 1600 MHz DDR. This is not enough, because one of the Super Talent kits considered today supports DDR3-2000 mode. In any case, more and more high-speed memory modules are being released and manufacturers assure us of their unprecedented performance, so it will not hurt to find out how they really perform. To set a memory frequency of, say, 2000 MHz DDR, you need to raise the bclk frequency. However, this changes the frequency of both the processor core and its third-level cache, which runs at the same frequency as the QPI bus. Comparing results obtained under such different conditions would be incorrect. In addition, the CPU frequency can influence the test results far more than RAM timings and frequency do.

Is it possible to get around this problem? The processor frequency can be adjusted with the multiplier within certain limits. However, it is advisable to choose a bclk value such that the resulting RAM frequency equals one of the standard values of 1333, 1600, or 2000 MHz. The base bclk frequency of Intel Nehalem processors is 133.3 MHz. Let's see what the RAM frequency will be at different bclk values, given the multipliers that our motherboard can set. The results are shown in the table below.

RAM frequency (MHz DDR) by memory multiplier and bclk frequency:

| Memory multiplier | bclk 133.(3) MHz | bclk 150 MHz | bclk 166.(6) MHz | bclk 183.(3) MHz | bclk 200 MHz |
| --- | --- | --- | --- | --- | --- |
| 6 | 800 | 900 | 1000 | 1100 | 1200 |
| 8 | 1066 | 1200 | 1333 | 1466 | 1600 |
| 10 | 1333 | 1500 | 1667 | 1833 | 2000 |
| 12 | 1600 | 1800 | 2000 | 2200 | 2400 |
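Every cell in this table is simply the bclk frequency times the memory multiplier, so it can be recomputed in a few lines. The sketch below is an illustration only; the names are made up, the periodic values are written as exact fractions, and rounding to the nearest integer can differ by 1 MHz from a table cell that was truncated.

```python
from fractions import Fraction

# bclk values from the table as exact fractions: 133.(3) = 400/3, and so on.
BCLK_MHZ = [Fraction(400, 3), Fraction(150), Fraction(500, 3),
            Fraction(550, 3), Fraction(200)]
MEMORY_MULTIPLIERS = [6, 8, 10, 12]
STANDARD_RATES = {1333, 1600, 2000}   # the rates the article treats as standard

def memory_rate(bclk, multiplier):
    """Effective RAM frequency in MHz DDR, rounded to the nearest integer."""
    return round(bclk * multiplier)

def standard_rates_at(bclk):
    """Standard rates reachable at this bclk with the available multipliers."""
    return {memory_rate(bclk, m) for m in MEMORY_MULTIPLIERS} & STANDARD_RATES

if __name__ == "__main__":
    for bclk in BCLK_MHZ:
        print(f"bclk {float(bclk):6.1f} MHz -> {sorted(standard_rates_at(bclk))}")
```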

As the table shows, with a bclk of 166 MHz the RAM can run at 1333 and 2000 MHz. With a bclk of 200 MHz we get 1600 MHz as well as the required 2000 MHz. The other raised bclk values give no matches with standard memory frequencies. So which bclk is preferable, 166 or 200 MHz? The following table answers this question. It lists CPU frequencies as a function of the multiplier and bclk. To assess the impact of timings we need not only identical memory frequencies but also identical CPU frequencies, so that the CPU does not affect the results.

CPU frequency (MHz) by CPU multiplier and bclk frequency:

| CPU multiplier | bclk 133.(3) MHz | bclk 150.0 MHz | bclk 166.(6) MHz | bclk 183.(3) MHz | bclk 200.0 MHz |
| --- | --- | --- | --- | --- | --- |
| 9 | 1200 | 1350 | 1500 | 1647 | 1800 |
| 10 | 1333 | 1500 | 1667 | 1830 | 2000 |
| 11 | 1467 | 1650 | 1833 | 2013 | 2200 |
| 12 | 1600 | 1800 | 2000 | 2196 | 2400 |
| 13 | 1733 | 1950 | 2167 | 2379 | 2600 |
| 14 | 1867 | 2100 | 2333 | 2562 | 2800 |
| 15 | 2000 | 2250 | 2500 | 2745 | 3000 |
| 16 | 2133 | 2400 | 2667 | 2928 | 3200 |
| 17 | 2267 | 2550 | 2833 | 3111 | 3400 |
| 18 | 2400 | 2700 | 3000 | 3294 | 3600 |
| 19 | 2533 | 2850 | 3167 | 3477 | 3800 |
| 20 | 2667 | 3000 | 3333 | 3660 | 4000 |
| 21 | 2800 | 3150 | 3500 | 3843 | 4200 |
| 22 | 2933 | 3300 | 3667 | 4026 | 4400 |
| 23 | 3067 | 3450 | 3833 | 4209 | 4600 |
| 24 | 3200 | 3600 | 4000 | 4392 | 4800 |
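The table above can also be searched mechanically for combinations that land on a chosen CPU frequency exactly. The sketch below does this with exact fractions; the function name `multipliers_hitting` is made up for illustration, and the 183.(3) column is taken as 550/3 MHz, whereas the table appears to have been computed with 183 MHz.

```python
from fractions import Fraction

# bclk columns of the table as exact fractions: 133.(3) = 400/3, and so on.
BCLK_MHZ = [Fraction(400, 3), Fraction(150), Fraction(500, 3),
            Fraction(550, 3), Fraction(200)]
CPU_MULTIPLIERS = range(9, 25)   # 9 through 24, as in the table

def multipliers_hitting(target_mhz):
    """Map each bclk to the multiplier giving exactly target_mhz, if one exists."""
    hits = {}
    for bclk in BCLK_MHZ:
        for multiplier in CPU_MULTIPLIERS:
            if bclk * multiplier == target_mhz:
                hits[bclk] = multiplier
    return hits

if __name__ == "__main__":
    for target in (3200, 2000):
        found = {f"{float(b):.1f}": m for b, m in multipliers_hitting(target).items()}
        print(target, "MHz:", found)
```

Only two bclk values reach 3200 MHz exactly, while 2000 MHz is reachable at three of them.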

As a starting point we took the maximum processor frequency (3200 MHz) that it can reach at the base bclk of 133 MHz. The table shows that under these conditions only bclk = 200 MHz gives exactly the same CPU frequency. The other frequencies come close to 3200 MHz but do not equal it. Of course, we could have taken a lower CPU frequency as the reference, say 2000 MHz, and obtained valid results for all three bclk values: 133, 166 and 200 MHz. However, we rejected this option, for three reasons. First, there are no Intel desktop processors with the Nehalem architecture at such a frequency, and they are unlikely to appear. Second, lowering the CPU frequency by more than 1.5 times could make the CPU the limiting factor, so that the results would hardly depend on the RAM operating mode. In fact, our first estimates showed exactly that. Third, a user who deliberately buys a weak, cheap processor is unlikely to worry much about choosing expensive high-speed RAM. So we will test at base bclk values of 133 and 200 MHz. The CPU frequency is the same in both cases, 3200 MHz. Below are screenshots of the CPU-Z utility in these modes.

As you may have noticed, the QPI link frequency depends on bclk, so the two modes differ by a factor of 1.5. This, incidentally, makes it possible to find out how the third-level cache frequency in Nehalem processors affects overall performance. So, let's start testing.

Good day, dear visitors.

When buying RAM, you need to pay attention to its frequency. Do you know why? If not, I suggest reading this article, which explains what the RAM frequency affects. The information may also be useful to those who already know their way around the topic a little: what if there is still something you don't know?

Answers to questions

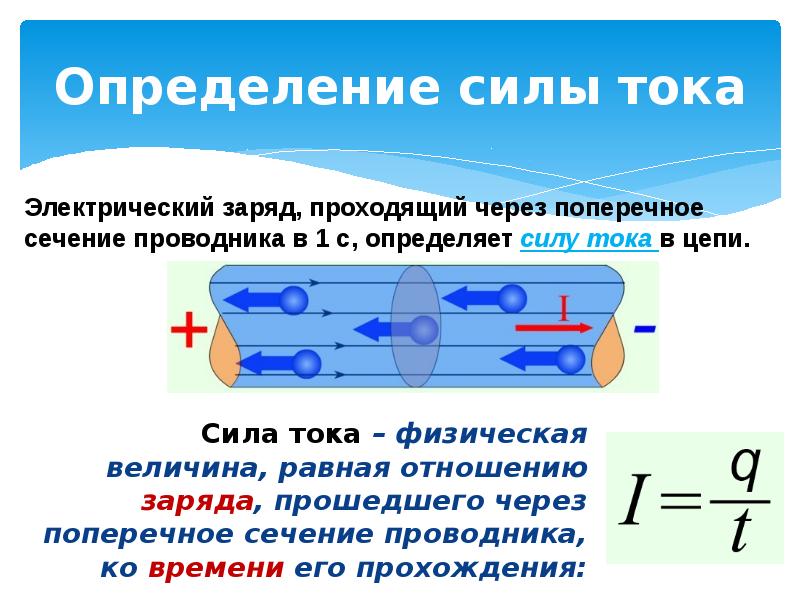

The frequency of the RAM is more correctly called the data transfer rate. It shows how many transfers the device can make in one second over the selected channel. Simply put, RAM performance depends on this parameter: the higher it is, the faster the memory works.

What is it measured in?

The rate is expressed in gigatransfers per second (GT/s), megatransfers per second (MT/s), or megahertz (MHz). The number usually follows a hyphen in the device name, for example DDR3-1333.

However, do not confuse this number with the real clock frequency, which is half the figure in the name. The abbreviation DDR itself points to this: it stands for Double Data Rate. So, for example, DDR2-800 actually operates at a clock frequency of 400 MHz.
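The relation between the number in the name, the real clock, and the peak bandwidth is simple arithmetic, sketched below. The function names are made up for illustration, and the 64-bit (8-byte) bus width is the usual width of a single DIMM channel, assumed here rather than taken from the article.

```python
def io_clock_mhz(transfer_rate_mts):
    """Real bus clock: DDR makes two transfers per clock cycle."""
    return transfer_rate_mts / 2

def peak_bandwidth_gbs(transfer_rate_mts, bus_width_bits=64, channels=1):
    """Theoretical peak bandwidth in GB/s (10**9 bytes per second)."""
    bytes_per_transfer = bus_width_bits // 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer * channels / 1e9

if __name__ == "__main__":
    for rate in (800, 1333, 1600, 2000):
        print(f"{rate} MT/s: clock {io_clock_mhz(rate):.0f} MHz, "
              f"peak {peak_bandwidth_gbs(rate):.1f} GB/s per channel")
```

These are theoretical ceilings; real applications see less.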

Maximum capabilities

The frequency written on the device is the maximum. But this does not mean the full capability will always be used. For that, the memory needs a bus and a slot on the motherboard with matching bandwidth.

Suppose you decide to install two RAM modules to speed up your computer: DDR3-2400 and DDR3-1333. This is a waste of money, because the system can only run at the speed of the weakest module, that is, the second one. Likewise, if you install a DDR3-1800 module in a motherboard slot limited to 1600 MHz, you will actually get the latter figure.
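The rule in both examples is "the slowest link wins", which amounts to taking a minimum. The sketch below is a simplification with a made-up function name; a real motherboard may also fall back to the nearest lower standard speed step rather than to the exact limit.

```python
def effective_rate(module_rates, board_limit):
    """Speed the memory actually runs at: the slowest module or the board limit."""
    return min(min(module_rates), board_limit)

if __name__ == "__main__":
    print(effective_rate([2400, 1333], board_limit=2400))
    print(effective_rate([1800], board_limit=1600))
```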

If the module is not designed to run constantly at its maximum and the motherboard does not meet its requirements, throughput will not increase and may even decrease. Errors may also occur while the operating system boots and runs.

But the motherboard and bus parameters are not the only things that affect RAM speed besides its frequency. What else? Read on.

Device Modes

To get the most out of your RAM, take into account the modes the motherboard sets for it. There are several:

- Single channel mode (single-channel or asymmetric). Active when one module is installed, or several with different characteristics. In the second case the system runs at the capabilities of the weakest module, as in the example above.

- Dual mode (dual-channel or symmetric). Active when two modules of the same capacity are installed, which theoretically doubles the memory bandwidth. It is advisable to put the modules in slots 1 and 3 or 2 and 4.

- Triple mode (triple-channel). The same principle as the previous mode, but with 3 modules instead of 2. In practice, this mode can prove less effective than the previous one.

- Flex mode. Makes it possible to raise memory performance by installing 2 modules of different capacity but the same frequency. As in the symmetric mode, they must be placed in matching slots of different channels.

Timings

When information is transferred from RAM to the processor, timings matter a great deal. They determine how many RAM clock cycles pass before the data requested by the CPU is returned. Simply put, this parameter indicates the memory's delay.

Timings are measured in clock cycles, and the main one is listed in the device specifications under the abbreviation CL (CAS Latency). In older memory generations CL values range from about 2 to 9; newer generations have higher values. Consider an example: a module with CL 9 waits 9 clock cycles before delivering the data the processor requested, and a module with CL 7 waits 7 cycles. If both modules have the same capacity and clock frequency, the second will work faster.

From this we draw a simple conclusion: the lower the timings, the faster the RAM.
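Because timings are counted in clock cycles, they can only be compared directly at the same frequency. To compare modules with different frequencies, convert the cycles to nanoseconds, as in the sketch below. The function name is made up for illustration, and the module combinations in the example are arbitrary.

```python
def cas_latency_ns(transfer_rate_mts, cl_cycles):
    """CAS latency in nanoseconds for a DDR module.

    The real clock is half the transfer rate, and one cycle lasts
    1000 / clock_mhz nanoseconds.
    """
    clock_mhz = transfer_rate_mts / 2
    return cl_cycles / clock_mhz * 1000

if __name__ == "__main__":
    for rate, cl in ((1333, 9), (1600, 9), (1600, 7), (2000, 9)):
        print(f"DDR3-{rate} CL{cl}: {cas_latency_ns(rate, cl):.2f} ns")
```

A module with a higher CL at a higher frequency can therefore have the same or even a lower delay in absolute time.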

That's all.

Armed with the information from this article, you can correctly select and install RAM according to your needs.

The history of random access memory, or RAM, began back in 1834, when Charles Babbage developed the "Analytical Engine", in effect the prototype of the computer. He called the part of the machine responsible for storing intermediate data the "store". Information was stored there purely mechanically, by means of shafts and gears.

In the first generations of computers, cathode ray tubes and magnetic drums were used as RAM, later magnetic cores appeared, and after them, in the third generation of computers, memory appeared on microcircuits.

Today RAM is built on DRAM technology in the DIMM and SO-DIMM form factors. It is dynamic memory implemented as semiconductor integrated circuits. It is volatile, that is, the data disappears when power is lost.

Choosing RAM is not a difficult task today; the main thing is to understand the types of memory, their purpose, and their basic characteristics.

Types of memory

SO-DIMM

Memory in the SO-DIMM form factor is intended for laptops, compact ITX systems, and all-in-ones, in short, wherever the physical size of the modules must be minimal. It differs from the DIMM form factor in having roughly half the module length and a different number of contacts (204 and 260 contacts for SO-DIMM DDR3 and DDR4 versus 240 and 288 for DIMM modules of the same types).

In other characteristics, such as frequency, timings, and capacity, SO-DIMM modules can be anything and do not differ in principle from DIMMs.

DIMM

DIMM is RAM for full-size computers. The type of memory you choose must first of all be compatible with the slot on the motherboard. Computer RAM is divided into 4 types: DDR, DDR2, DDR3 and DDR4.

DDR memory appeared in 2001 and had 184 contacts. The supply voltage was nominally 2.5 V (2.6 V for DDR-400). The operating frequency is up to 400 MHz. It is still on sale, but the choice is small. The format is outdated today and makes sense only if you do not want to upgrade the whole system and the old motherboard has only DDR slots.

The DDR2 standard was released in 2003 and received 240 contacts, which increased the number of data streams and noticeably sped up the data bus to the processor. The frequency of DDR2 could reach 800 MHz (in some cases up to 1066 MHz), and the supply voltage, from 1.8 to 2.1 V, was lower than that of DDR. Consequently, power consumption and heat output decreased.

Differences between DDR2 and DDR:

· 240 contacts versus 184

· New slot, incompatible with DDR

· Lower power consumption

· Improved design, better cooling

· Higher maximum operating frequency

Like DDR, this is an outdated type of memory. It now suits only old motherboards; in other cases it makes no sense to buy, since the newer DDR3 and DDR4 are faster.

In 2007 RAM was updated with the DDR3 type, which is still widespread. The same 240 contacts remained, but the DDR3 slot is keyed differently, so there is no compatibility with DDR2. Module frequencies typically range from 1333 to 1866 MHz. There are also modules with frequencies up to 2800 MHz.

How DDR3 differs from DDR2:

· DDR2 and DDR3 slots are not compatible.

· The effective frequency of DDR3 is 2 times higher: 1600 MHz versus 800 MHz for DDR2.

· It has a reduced supply voltage, about 1.5 V, and lower power consumption (in the DDR3L version the voltage is lower still, around 1.35 V).

· The delays (timings) of DDR3 are higher than those of DDR2, but so is the operating frequency. Overall, DDR3 is 20-30% faster.

DDR3 is a good choice for today. Many motherboards with DDR3 slots are on sale, and given the mass popularity of this type, it is unlikely to disappear soon. It is also slightly cheaper than DDR4.

DDR4 is a new type of RAM, developed only in 2012. It is an evolutionary development of the previous types. Memory bandwidth has increased again, now reaching 25.6 GB/s. The operating frequency has also risen, typically from 2133 MHz to 3600 MHz. Compared with DDR3, which lasted 8 years on the market and became widespread, the performance increase is insignificant, and not all motherboards and processors support the new type.

Differences of DDR4:

· Incompatibility with previous types

· Reduced supply voltage, from 1.2 to 1.05 V; power consumption has also decreased

· Operating memory frequency up to 3200 MHz (it can reach 4166 MHz in some versions), while the timings, of course, have increased proportionally

· May slightly outperform DDR3

If you already have DDR3 modules, there is no point in rushing to replace them with DDR4. When this format is widespread and all motherboards support DDR4, the transition to the new type will happen by itself with an upgrade of the whole system. Thus, we can conclude that DDR4 is more of a marketing move than a truly new type of RAM.

What memory frequency to choose?

Choosing a frequency should start with checking the maximum frequencies supported by your processor and motherboard. It makes sense to take a frequency higher than the processor supports only if you overclock the processor. Today you should not choose memory with a frequency below 1600 MHz. The 1333 MHz option is acceptable for DDR3, provided these are not ancient modules that have been lying around at the seller's, which will obviously be slower than new ones.

The best option today is memory in the 1600 to 2400 MHz range. Higher frequencies bring almost no advantage but cost much more, and as a rule these are overclocked modules with raised timings. For example, the difference between 1600 and 2133 MHz modules in a number of work programs will be no more than 5-8%, and in games it can be even smaller. Frequencies of 2133-2400 MHz are worth taking if you do video or audio encoding or rendering.

The step from 2400 to 3600 MHz will cost you quite a lot while adding little speed.

How much RAM should I take?

The amount that you need depends on the type of work performed on the computer, the installed operating system, and the programs used. Also, do not lose sight of the maximum supported memory capacity of your motherboard.

2 GB - today perhaps only enough for browsing the Internet. The operating system will eat more than half, and the rest will be enough for unhurried work in undemanding programs.

4 GB - suitable for a mid-range computer or a home media center PC. Enough to watch movies and even play undemanding games. Modern games, alas, will struggle. (It is the best choice if you have a 32-bit Windows operating system, which sees no more than about 3 GB of RAM.)

8 GB (or a 2x4 GB kit) - the recommended amount today for a full-fledged PC. This is enough for almost any game and for any resource-demanding software. The best choice for a universal computer.

16 GB (or kits of 2x8 GB, 4x4 GB) - justified if you work with graphics, heavy programming environments, or constantly render video. It is also well suited to online streaming: with 8 GB there may be freezes, especially when streaming high-quality video. Some games at high resolutions and with HD textures can behave better with 16 GB of RAM on board.

32 GB (a kit of 2x16 GB or 4x8 GB) - still a very controversial choice, useful only for some very extreme work tasks. It would be better to spend the money on other computer components, which will have a stronger effect on speed.

Operating modes: is 1 memory module better, or 2?

RAM can work in single-, dual-, triple- and quad-channel modes. If your motherboard has enough slots, it is definitely better to take several identical smaller modules instead of one large one. The theoretical memory bandwidth will grow 2 to 4 times.

For the memory to work in dual-channel mode, install the modules in slots of the same color on the motherboard. As a rule, the color repeats every other slot. It is important that both modules have the same memory frequency.

- Single channel mode - single-channel operation. It is used when one memory module is installed, or when different modules run at different frequencies. As a result, the memory runs at the frequency of the slowest module.

- Dual mode - dual-channel operation. It works only with memory modules of the same frequency and theoretically doubles the bandwidth. Manufacturers produce kits of 2 or 4 identical modules specifically for this.

- Triple mode - works on the same principle as dual-channel. In practice it is not always faster.

- Quad mode - quad-channel operation, which works on the same principle as dual-channel and theoretically quadruples the bandwidth. It is used where extremely high speed is needed, for example in servers.

- Flex mode - a more flexible version of dual-channel operation, for modules of different capacity but the same frequency. Matching amounts from each module work in dual-channel mode, and the remaining capacity works in single-channel mode.
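The split in Flex mode follows directly from the description above: each module contributes as much as the smaller one to the dual-channel region, and whatever is left on the larger module runs single-channel. A minimal sketch, with a made-up function name and example capacities:

```python
def flex_mode_split(size_a_gb, size_b_gb):
    """Return (dual_channel_gb, single_channel_gb) for two unequal modules."""
    paired = 2 * min(size_a_gb, size_b_gb)     # matching amount from each module
    leftover = abs(size_a_gb - size_b_gb)      # remainder of the larger module
    return paired, leftover

if __name__ == "__main__":
    dual, single = flex_mode_split(4, 8)
    print(f"4 GB + 8 GB: {dual} GB dual-channel, {single} GB single-channel")
```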

Do I need a memory heatsink?

Gone are the days when reaching 1600 MHz took a voltage of 2 V and produced a lot of heat that had to be removed somehow. Back then a heatsink could decide whether an overclocked module survived.

Today memory power consumption has dropped significantly, and a heatsink on a module is technically justified only if you are into overclocking and the module will run at frequencies beyond its rated limits. In all other cases heatsinks are justified, perhaps, only by their attractive looks.

If the heatsink is massive and significantly increases the height of the module, this is a real drawback, since it can prevent you from fitting a large CPU cooler. Incidentally, there are special low-profile memory modules designed for compact cases. They are slightly more expensive than regular-size modules.

What are timings?

Timings, or latency, are among the most important characteristics of RAM that determine its speed. Here is the general meaning of this parameter. In simplified form, RAM can be represented as a two-dimensional table in which each cell stores information. A cell is accessed by specifying its row and column numbers, which is done with a row strobe pulse, RAS (Row Access Strobe), and a column strobe pulse, CAS (Column Access Strobe), each signaled by a change in voltage. RAS and CAS are therefore issued on every access, and there are certain delays between these signals and the read/write commands. These delays are called timings.

In the description of a RAM module you can see five timings, which for convenience are written as a sequence of numbers separated by hyphens, for example 8-9-9-20-27.

· tRCD (RAS to CAS Delay) - the delay between the RAS pulse and the CAS pulse, that is, between activating a row and accessing a column in it

· CL (CAS Latency) - the delay between the read command (the CAS pulse) and the moment the data becomes available

· tRP (Row Precharge) - the delay needed to close the current row before the next one can be opened

· tRAS (Active to Precharge Delay) - the minimum delay between activating a row and finishing work with it; considered the main value

· Command rate - the delay between selecting an individual chip on the module and the row activation command; this timing is not always listed.
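How these delays add up on a single read can be shown with a toy model. It is a simplified sketch of one memory bank that ignores tRAS, the command rate and everything else a real memory controller does. The values CL=8, tRCD=9, tRP=9 assume that the first three numbers of the example string above follow the usual CL-tRCD-tRP order, and the names `Timings` and `access_cycles` are invented for the illustration.

```python
from dataclasses import dataclass


@dataclass
class Timings:
    cl: int    # CAS latency, in clock cycles
    trcd: int  # RAS to CAS delay, in clock cycles
    trp: int   # row precharge, in clock cycles


def access_cycles(t, open_row, requested_row):
    """Approximate cycles until data arrives for one read in one bank.

    open_row is the row currently open in the bank, or None if the bank
    is idle (already precharged).
    """
    if open_row is None:
        # Bank idle: activate the row, then read the column.
        return t.trcd + t.cl
    if open_row == requested_row:
        # Row already open: only the column access is needed.
        return t.cl
    # A different row is open: close it, open the new one, then read.
    return t.trp + t.trcd + t.cl


if __name__ == "__main__":
    t = Timings(cl=8, trcd=9, trp=9)
    print("same row:     ", access_cycles(t, 5, 5), "cycles")
    print("idle bank:    ", access_cycles(t, None, 5), "cycles")
    print("different row:", access_cycles(t, 3, 5), "cycles")
```

In this model a read from an already open row costs 8 cycles, while switching to another row costs 26, which is why every one of the timings matters and not only CL.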

Put even more simply, there is only one thing you need to know about timings: the lower their values, the better. Modules can run at the same frequency but with different timings, and at the same frequency the module with the lower values will be faster. So choose the lowest timings you can; typical values are 15-15-15-36 for DDR4 and 10-10-10-30 for DDR3. Remember too that timings are tied to the memory frequency: when overclocking you will most likely have to raise the timings, and conversely, you can manually lower the frequency and tighten the timings at the same time. It pays to look at these parameters together and aim for a balance rather than chase extreme values of either one.
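The link between timings and frequency is easier to see when the delay is converted from clock cycles to nanoseconds. The sketch below does this for CL only. It relies on the fact that DDR memory makes two transfers per clock, so the clock frequency is half the number in the module name. The function name `cas_latency_ns` and the module configurations in the list are examples chosen for illustration, not measurements.

```python
def cas_latency_ns(cl_cycles, data_rate_mts):
    """Convert CAS latency from clock cycles to nanoseconds.

    data_rate_mts is the number from the module name (1600 for DDR3-1600).
    DDR transfers data twice per clock, so the clock in MHz is half of it.
    """
    clock_mhz = data_rate_mts / 2
    return cl_cycles / clock_mhz * 1000


if __name__ == "__main__":
    # Illustrative configurations (assumed, not taken from any datasheet).
    examples = [
        ("DDR3-1333 CL9", 9, 1333),
        ("DDR3-1600 CL9", 9, 1600),
        ("DDR3-1600 CL11", 11, 1600),
        ("DDR4-2400 CL15", 15, 2400),
    ]
    for name, cl, rate in examples:
        print(f"{name}: {cas_latency_ns(cl, rate):.2f} ns")
```

DDR3-1600 at CL9 works out to 11.25 ns, but the same module at CL11 comes to 13.75 ns, slightly worse than DDR3-1333 at CL9 (about 13.5 ns). A higher frequency bought with looser timings does not necessarily shorten the actual delay, which is why the balance of the two parameters matters.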

How to decide on a budget?

The larger the budget, the more RAM you can afford. Cheap and expensive modules differ mainly in timings, operating frequency, and brand: a well-known, heavily advertised brand can cost a little more than no-name modules from an obscure manufacturer. In addition, a heatsink mounted on the modules costs extra. Not every module needs one, but manufacturers fit them generously these days.

The price also depends on the timings: the lower they are, the higher the speed and, accordingly, the price.

With up to 2000 rubles, you can buy one 4 GB memory module or two 2 GB modules, which is preferable. Choose according to what your PC configuration allows. DDR3 modules cost almost half as much as DDR4, so with this budget it is wiser to take DDR3.

The up-to-4000-ruble bracket covers 8 GB modules as well as 2x4 GB kits. This is the best choice for any task except professional video work and other heavy workloads.

Up to 8000 rubles buys 16 GB of memory. It is recommended for professional use or for avid gamers: enough, with some to spare, for new demanding games to come.

If spending up to 13000 rubles is not a problem, the best choice is to invest it in a kit of four 4 GB modules. For this money you can even pick nicer-looking heatsinks, perhaps with later overclocking in mind.

I do not advise taking more than 16 GB unless you work in heavy professional environments (and even then not in all of them). But if you really want to, from 13000 rubles up you can climb Mount Olympus and buy a 32 GB or even 64 GB kit. For the average user or gamer, though, there is little point - the money is better spent on, say, a flagship video card.