As complex and reliable as the cryptosystem itself is, it is based on the use of keys. If the key exchange process is trivial to ensure the confidential exchange of information between two users, then in a system where the number of users is tens or hundreds, key management is a serious problem.

Key information is understood as the totality of all keys operating in the system. If a sufficiently reliable management of key information is not ensured, then, having taken possession of it, an attacker gains unlimited access to all information.

Key management is an information process that includes three elements:

generation of keys;

accumulation of keys;

distribution of keys.

Key generation. In real systems, special hardware and software methods for generating random keys are used. As a rule, random number sensors are used. However, the degree of randomness of their generation should be quite high. The ideal generators are devices based on “natural” random processes. For example, key generation based on white radio noise. Another random mathematical object is the decimal places of irrational numbers, such as or e, which are calculated using standard mathematical methods.

In systems with medium security requirements, software key generators are quite acceptable, which calculate random numbers as a complex function of the current time and (or) the number entered by the user.

Accumulation of keys. The accumulation of keys means the organization of their storage, accounting and disposal.

Since the key is the most attractive object for the attacker, opening the way to confidential information for him, special attention should be paid to the issues of key accumulation.

Private keys should never be written explicitly on media that can be read or copied.

In a rather complex system, one user can work with a large amount of key information, and sometimes it even becomes necessary to organize mini-databases for key information. Such databases are responsible for accepting, storing, recording and deleting the keys used.

Each information about the keys used must be stored encrypted. Keys that encrypt key information are called master keys. It is desirable that each user knows the master keys by heart and does not store them at all on any material media.

A very important condition for the security of information is the periodic updating of key information in the system. In this case, both regular keys and master keys must be reassigned. In critical systems, key information must be updated daily.

The issue of updating key information is also related to the third element of key management - key distribution.

Distribution of keys. Key distribution is the most critical process in key management. There are two requirements for it:

efficiency and accuracy of distribution;

secrecy of distributed keys.

Recently, there has been a shift towards the use of public key cryptosystems, in which the problem of key distribution is eliminated. Nevertheless, the distribution of key information in the system requires new effective solutions.

The distribution of keys between users is implemented in two different ways:

1 By creating one or more key distribution centers. The disadvantage of this approach is that the distribution center knows to whom and which keys are assigned, and this allows all messages circulating in the system to be read. Potential abuse significantly affects protection.

2 Direct exchange of keys between users of the system. In this case, the challenge is to reliably authenticate the subjects.

In both cases, the authenticity of the communication session must be guaranteed. This can be achieved in two ways:

1 Request-response mechanism, which is as follows. If user A wants to be sure that the messages he receives from user B are not false, he includes an unpredictable element (request) in the message sent to B. When answering, user B must perform some operation on this element (for example, add 1). This cannot be done in advance, since it is not known what random number will come in the request. After receiving a response with the results of the actions, user A can be sure that the session is authentic. The disadvantage of this method is the ability to establish, albeit complex, patterns between a request and a response.

2 Time stamping mechanism. It implies time stamping for each message. In this case, each user of the system can know how “old” the received message is.

In both cases, encryption should be used to ensure that the response is not sent by an attacker and that the time stamp is not altered.

The use of time stamps raises the problem of the acceptable time lag interval for authenticating the session. After all, a message with a time stamp, in principle, cannot be transmitted instantly. In addition, the recipient's and sender's computer clocks cannot be perfectly synchronized.

Public key cryptosystems can be used for key exchange using the same RSA algorithm.

But the Diffie-Hellman algorithm turned out to be very effective, which allows two users to exchange a key without intermediaries, which can then be used for symmetric encryption.

Diffie-Hellman algorithm. Diffie and Helman proposed the function of discrete exponentiation to create public-key cryptographic systems.

The irreversibility of the transformation in this case is ensured by the fact that it is quite easy to calculate the exponential function in a finite Galois field consisting of p elements ( p- either a prime number, or a prime to any degree). Calculation of the logarithms in such fields is a much more laborious operation.

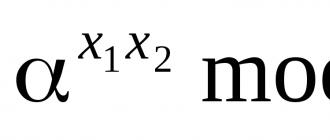

To exchange information, the first user chooses a random number x 1 equiprobable of integers from 1 to p- 1. He keeps this number secret, and sends the number to another user y 1 =, where α is a fixed element of the Galois field Gf(p), which, together with p, is distributed in advance between users.

The second user does the same, generating x 2 and calculating y 2, sending it to the first user. As a result, they both can compute the shared secret. k 12

=

.

.

In order to calculate k 12, the first user erects y 2 to the power x 1 and finds the remainder of division by p... The second user does the same, only using y 1 and x 2. Thus, both users have a common key k 12, which can be used to encrypt information with conventional algorithms. Unlike the RSA algorithm, this algorithm does not allow encrypting the information itself.

Without knowing x 1 and x 2, an attacker can try to compute k 12 knowing only intercepted y 1 and y 2. The equivalence of this problem to the problem of computing the discrete logarithm is a major and open question in public key systems. So far, no simple solution has been found. So, if the direct transformation of 1000-bit primes requires 2000 operations, then for the inverse transformation (calculating the logarithm in the Galois field), it will take about 1030 operations.

As you can see, for all the simplicity of the Diffie-Hellman algorithm, its disadvantage compared to the RSA system is the absence of a guaranteed lower bound for the complexity of key disclosure.

In addition, although the described algorithm avoids the problem of hidden key transmission, the need for authentication remains. Without additional funds, one of the users cannot be sure that he exchanged keys with the exact user he needs.

Key distribution protocol(key establishment protocol) is a cryptographic protocol, during the execution of which a shared secret is made available to two or more parties for subsequent use for cryptographic purposes.

Key distribution protocols are divided into two classes:

Key transport protocols;

Key exchange protocols.

Key transport protocols(key transport) are key distribution protocols in which one participant creates or otherwise acquires a secret and securely transfers it to other participants.

Key exchange protocols(key agreement, key exchange) are key distribution protocols in which a shared secret is generated by two or more participants as a function of information contributed by each of them (or associated with them) in such a way that (ideally) no other party can predetermine their shared secret.

There are two additional forms of key distribution protocols. A protocol is said to perform a key update if a completely new key is generated in the protocol, independent of the keys generated in previous sessions of the protocol execution. The protocol performs key derivation if a new key is "derived" from the existing ones of the participants of the cryptosystem.

Key properties of key distribution protocols include those for key authentication, key validation, and explicit key authentication.

(Implicit) key authentication(implicit key authentication) is a property by which one protocol participant makes sure that no other party, except for a specially identified second protocol participant (and possibly a trust center), can access the secret keys obtained in the protocol. There is no guarantee that the second participant actually got access to the key, but no one else but him could get it. Implicit key authentication is independent of the actual possession of the key by another party and does not require any action from the other party.

Key confirmation(key confirmation) is a property by which one participant in the protocol is convinced that another participant (possibly unidentified) really possesses the secret keys obtained in the protocol.

Explicit Key Authentication(explicit key authentication) is a property that is executed when (implicit) key authentication and key validation take place at the same time.

Needham-Schroeder protocol on symmetric keys

This protocol underlies a large number of key distribution protocols using trusted authorities. There are two types of this protocol:

Needham-Schroeder protocol on symmetric keys;

Needham-Schroeder protocol on asymmetric keys.

The symmetric key protocol works as follows:

Preliminary stage:

Key management

Besides choosing a cryptographic system suitable for a particular IC, an important issue is key management. No matter how complex and reliable the cryptosystem itself is, it is based on the use of keys. If to ensure confidential exchange of information between two users the key exchange process is trivial, then in IS, where the number of users is tens and hundreds, key management is a serious problem.

Under key information the set of all keys operating in the IS is understood. If a sufficiently reliable control of key information is not provided, then, having taken possession of it, the attacker gets unrestricted access to all information.

Key management- information process, which includes three elements:

* generation of keys;

* accumulation of keys;

* distribution of keys.

Let's consider how they should be implemented in order to ensure the security of key information in the IS.

Generating keys

At the very beginning of the conversation about cryptographic methods, it was said that you should not use non-random keys in order to make them easy to remember. Serial ICs use special hardware and software methods for generating random keys. As a rule, PSC sensors are used. However, the degree of randomness of their generation should be high enough. The ideal generators are devices based on "natural" random processes. For example, there were serial samples of key generation based on white radio noise... Another random mathematical object is decimal places and rational numbers, for example or e which are calculated using standard mathematical methods.In ISs with average security requirements, software key generators are quite acceptable, which calculate the PRNG as a complex function of the current time and (or) the number entered by the user.

Accumulation of keys

Under accumulation of keys means the organization of their storage, accounting and disposal.Since the key is the most attractive object for the attacker, opening the way to confidential information for him, special attention should be paid to the issues of key accumulation.

Secret keys should never be written explicitly on media that can be read or copied.

In a rather complex IS, one user can work with a large amount of key information, and sometimes it even becomes necessary to organize mini-databases for key information. Such databases are responsible for accepting, storing, recording and deleting the keys used.

So, each information about the keys used must be stored in encrypted form. Keys that encrypt key information are called master keys... It is desirable that each user knows the master keys by heart, and does not store them at all on any material media.

A very important condition for the security of information is the periodic update of key information in the IS. In this case, both regular keys and master keys must be reassigned. In especially responsible information systems, it is advisable to update key information on a daily basis.

The issue of updating key information is also associated with the third element of key management - distribution of keys.

Key distribution

Key distribution is the most critical process in key management. It has two requirements:- Efficiency and accuracy of distribution

- The secrecy of the shared keys.

Distribution of keys between users is implemented in two different approaches:

- By creating one or several key distribution centers. The disadvantage of this approach is that the distribution center knows to whom and which keys are assigned, and this allows reading all messages circulating in the IS. Potential abuse significantly affects protection.

- Direct key exchange between users of the information system.

In both cases, the authenticity of the communication session must be guaranteed. This can be achieved in two ways:

- Request-response mechanism, which is as follows. If user A wants to be sure that the messages he receives from B are not false, he includes an unpredictable element (request) in the message sent to B. When answering, user B must perform some operation on this element (for example, add 1). This cannot be done in advance, since it is not known what random number is received in the request. After receiving a response with the results of the actions, user A can be confident that the session is authentic. The disadvantage of this method is the possibility of establishing an albeit complex pattern between a request and a response.

- Time stamping mechanism ("time stamp"). It means fixing a time for each message. In this case, each IS user can know how "old" the received message is.

When using time stamps, there is a problem with the acceptable time delay interval for verifying the authenticity of the session. After all, a message with a "time stamp" in principle cannot be transmitted instantly. In addition, the recipient's and sender's computer clocks cannot be absolutely synchronized. What is the delay of the "stamp" to be considered suspicious.

Therefore, in real ICs, for example, in credit card payment systems, it is the second mechanism of authentication and protection against counterfeiting that is used. The interval used is from one to several minutes. A large number of known methods of stealing electronic money are based on "wedging" into this gap with forged requests to withdraw money.

For key exchange, one can use public-key cryptosystems using the same RSA algorithm.

But the Diffie-Hellman algorithm turned out to be very effective, which allows two users to exchange a key without intermediaries, which can then be used for symmetric encryption.

Diffie-Hellman algorithm

Diffie and Hellman proposed to create open-key cryptographic systems discrete exponentiation function.The irreversibility of the transformation in this case is ensured by the fact that it is quite easy to calculate the exponential function in a finite Galois field consisting of p elements. ( p- either a prime number, or simple to any degree). The calculation of logarithms in such fields is a much more laborious operation.

If y= x, 1<x<p-1, where is a fixed element of the field GF (p), then x= lo g y above GF (p)... Having x, it is easy to calculate y... This will require 2 ln ( x+y) multiplication operations.

Inverse calculation problem x from y will be difficult enough. If p is chosen correctly enough, then extracting the logarithm will require calculations that are proportional to

L (p) = exp((ln p ln ln p) 0.5 }

To exchange information, the first user chooses a random number x 1, equiprobable of integers 1 ... p-1. He keeps this number in secret, and sends the number to another user

y 1 = x mod p

The second user acts in the same way, generating x 2 and calculating y 2 by sending it to the first user. As a result, they can calculate k 12 = x 1 x 2 mod p.

In order to calculate k 12, the first user builds y 2 to the power x 1 . The second user does the same. Thus, both users have a common key. k 12, which can be used to encrypt information with conventional algorithms. Unlike the RSA algorithm, this algorithm does not allow encrypting the information itself.

Without knowing x 1 and x 2, an attacker can try to compute k 12 knowing only the intercepted y 1 and y 2. The equivalence of this problem to the problem of computing a discrete log is a major and open question in public-key systems. No simple solution has been found so far. So, if for the direct transformation of 1000-bit prime numbers 2000 operations are required, then for the inverse transformation (calculating the logarithm in the Galois field) - about 10 30 operations are required.

As you can see, for all the simplicity of the Diffie-Hellman algorithm, its second drawback compared to the RSA system is the absence of a guaranteed lower bound for the complexity of the key expansion.

In addition, although the described algorithm allows you to bypass the problem of hidden key transfer, the need for authentication remains. Without additional funds, one of the users cannot be sure that he exchanged keys with the very user he needs. The danger of imitation remains in this case.

As a generalization of what has been said about the distribution of keys, the following should be said. The task of managing keys is reduced to finding such a key distribution protocol that would provide:

* Possibility of refusal from the center of distribution of keys;

* mutual confirmation of the authenticity of the participants in the session;

* Confirmation of the authenticity of the session by the request-response mechanism, the use of software or hardware for this;

* using the minimum number of messages for key exchange.

After the public keys have been distributed and made available, it becomes a real organization of secure communication, which does not allow the possibility of intercepting or corrupting messages, or both. However, some users will choose to use public-key encryption only in exceptional cases due to the fact that the data transfer rate is relatively slow in the context of this encryption. Therefore, public key encryption has to be viewed more as a means of distributing the secret keys used for traditional encryption.

EASY DISTRIBUTION OF SECRET KEYS

An extremely simple diagram is shown in Fig. 11.4.

If initiator A intends to exchange data with the user V, for this, the following procedure is assumed.

Rice. 11.4.

- 1. Party A generates a public / private key pair (KU a, KR a) and sends to Party B a message containing KU a and IDa of the sender A.

- 2. Recipient B generates a secret key K y and transfers this key to the initiator of the message A encrypted with the public key KU a of the initiator A.

- 3. User A computes D | a ^] to recover the secret key. Since only user A can decrypt this message, only communication participants A and B will know the meaning K s.

- 4. Participant A throws away the key KR a, and participant B throws out the key KU a.

Both sides A and B can now use traditional session key encryption. K s. Despite its simplicity, this protocol is very attractive. No keys exist before communication starts, and no keys remain after communication ends. Therefore, the risk of key compromise is minimal. At the same time, communication is protected from eavesdropping.

This protocol is vulnerable to active attacks. If the adversary E has the ability to penetrate the communication channel, then he can compromise the communication, without being detected, as follows.

- 5. Participant A generates a pair of public / private keys (KU a, KR „) and sends to party B a message containing KU a and the identifier SHA of the sender A.

- 6. Adversary E intercepts the message, creates his own public / private key pair (KU e, KR,) and transmits to the addressee B a message containing KU e || SH A.

- 7. B generates a secret key K v and sends EkiLK,].

- 8. Opponent E intercepts this message and learns K v, calculating D KRe].

- 9. Opponent E transmits to participant A the message Ekia [Ku | -

As a result, both participants, A and B, will know K 4, but they will not suspect that K l is also known to enemy E. Therefore, parties A and B can start exchanging messages using K s. Enemy E will no longer actively interfere with the communication channel, but will simply intercept messages. Knowing K s, he will be able to decrypt any message, and participants A and B will not even suspect that there is a problem. Thus, this simple protocol is only useful when passive eavesdropping is the only possible threat.

With symmetric encryption, two parties that want to exchange confidential information must have the same key. The key change frequency should be large enough so that the adversary does not have enough time to completely brute-force the key. Hence, the strength of any cryptosystem depends largely on the technology key distribution... This term means the transfer of a key to two parties who want to exchange data in such a way that no one else can either spy on or change this key. For two participants A and B, the key distribution can be done in one of the following ways.

- The key can be created by A and physically transferred to B.

- A third party can create a key and physically transfer it to A and B.

- A and B have a pre-created and short-lived key, one participant can transfer a new key to another, using the old key for encryption.

- If A and B each have a secure connection with a third party C, C can transmit the key over that secure channel to A and B.

The first and second methods are called manual key distribution... These are the most reliable ways key distribution, however, in many cases it is inconvenient and even impossible to use them. In a distributed system, any host or server must be able to exchange sensitive information with many authenticated hosts and servers. Thus, each host must have a dynamically maintained keyset. The problem is especially relevant in large distributed systems.

The number of keys required depends on the number of participants that need to communicate. If encryption is performed at the network or IP layer, then a key is required for every pair of hosts on the network. Thus, if there are N hosts, then the required number of keys is / 2. If encryption is performed at the application level, then the key is needed for each pair of application processes, which are much more than hosts.

The third key distribution method can be applied at any level. protocol stack, but if an attacker gains access to one key, then the entire sequence of keys will be disclosed. Moreover, the initial distribution of a large number of keys still needs to be done.

Therefore, in large automated systems various variants of the fourth method are widely used. This scheme assumes the existence of the so-called key distribution center(Key Destribution Center - KDC), which is responsible for distributing keys for hosts, processes and applications. Each participant must share a unique key with the KDC.

Usage key distribution center based on the use of a hierarchy of keys. At least two types of keys are used: master keys and session keys.

To ensure confidential communication between end systems, a temporary key called session key... Usually the session key is used to encrypt the transport connection and then destroyed. Each session key must be received over the network from key distribution center... Session keys are transmitted encrypted using a master key that is shared between the key distribution center and the end system.

These master keys must also be distributed in some secure way. However, this significantly reduces the number of keys that require manual distribution. If there are N participants who want to establish connections, then at each time / 2 session keys are needed. But only N is required