CURL is a software package consisting of a command line utility and a library for transferring data using URL syntax.

CURL supports many protocols, including DICT, FILE, FTP, FTPS, Gopher, HTTP, HTTPS, IMAP, IMAPS, LDAP, LDAPS, POP3, POP3S, RTMP, RTSP, SCP, SFTP, SMTP, SMTPS, Telnet and TFTP.

Downloading a single file

The following command gets the content of the URL. By default curl writes it to standard output (i.e., your terminal); here the output is redirected to a file, so curl prints a progress meter instead.

$ curl https://mi-al.ru/ > mi-al.htm
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 14378    0 14378    0     0   5387      0 --:--:--  0:00:02 --:--:--  5387

Saving cURL output to file

We can save the output of the curl command to a file using the -o / -O options.

- -o (lowercase o) the result will be saved in the file specified on the command line

- -O (Uppercase O) the filename will be taken from the URL and used to save the received data.

$ curl -o mygettext.html http://www.gnu.org/software/gettext/manual/gettext.html

The gettext.html page will now be saved in a file called 'mygettext.html'. When curl is run with the -o option, it displays the download progress bar as follows.

  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
 66 1215k   66  805k    0     0  33060      0  0:00:37  0:00:24  0:00:13 45900
100 1215k  100 1215k    0     0  39474      0  0:00:31  0:00:31 --:--:-- 68987

When you use curl -O (uppercase O), it will by itself save the contents to a file called ‘gettext.html’ on the local machine.

$ curl -O http://www.gnu.org/software/gettext/manual/gettext.html

Note: When curl writes data to the terminal, it turns off the progress meter so that it does not get mixed up with the printed data. To see the progress meter, send the result to a file with shell redirection ('>') or with the -o or -O option.
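To make the difference between the two options concrete, here is a minimal shell sketch that approximates how -O picks a local file name: it keeps only the part of the URL after the last slash. The helper name remote_name is hypothetical, and the sketch ignores query strings and URLs that end in a slash.

```shell
# Hypothetical helper: approximate the local file name that `curl -O` would use
# by keeping only the part of the URL after the last slash.
remote_name() {
  printf '%s\n' "${1##*/}"
}

url="http://www.gnu.org/software/gettext/manual/gettext.html"
name=$(remote_name "$url")
echo "curl -O would save to: $name"
echo "curl -o mygettext.html would save to: mygettext.html"
```

With -o the name is whatever you pass on the command line, so no derivation is needed.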

Fetching multiple files at the same time

We can download multiple files at one time by specifying all URLs on the command line.

$ curl -O URL1 -O URL2

The command below will download both index.html and gettext.html and save them with the same names in the current directory.

$ curl -O http://www.gnu.org/software/gettext/manual/html_node/index.html -O http://www.gnu.org/software/gettext/manual/gettext.html

Please note that when we download multiple files from the same server as shown above, curl will try to reuse the connection.
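When the list of URLs is long, the command can be assembled in a loop. The following is a rough sketch with a hypothetical helper, build_multi_download, that only prints the resulting command; it assumes the URLs contain no spaces or shell metacharacters.

```shell
# Hypothetical helper: print a curl command that downloads every URL given
# as an argument, pairing each URL with its own -O option.
build_multi_download() {
  cmd="curl"
  for url in "$@"; do
    cmd="$cmd -O $url"
  done
  printf '%s\n' "$cmd"
}

build_multi_download \
  http://www.gnu.org/software/gettext/manual/html_node/index.html \
  http://www.gnu.org/software/gettext/manual/gettext.html
```

Each URL needs its own -O; a URL without one is written to standard output instead of a file.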

Follow the HTTP Location headers with the -L option

By default, CURL does not follow HTTP Location headers (redirects). When the requested web page is moved to a different location, the corresponding response will be sent in the HTTP Location headers.

For example, when someone types google.com into the browser bar from their country, they will automatically be redirected to ‘google.co.xx’. This is done based on the HTTP Location header as shown below.

$ curl "https://www.google.com/?gws_rd=ssl"

302 Moved

The document has moved here.

The above output says that the requested document has been moved to another location.

You can tell curl to follow redirects using the -L option as shown below. Now the HTML source of the page at the new location will be downloaded.

$ curl -L "https://www.google.com/?gws_rd=ssl"

Resuming a previous download

Using the -C option, you can continue a download that was stopped for some reason. This is useful when a large download is interrupted.

If we say '-C -', curl works out by itself where to resume the download. We can also specify '-C <offset>', in which case the given number of bytes is skipped from the beginning of the source file.

Start a big download with curl and press Ctrl-C to stop it in the middle.

$ curl -O http://www.gnu.org/software/gettext/manual/gettext.html
##############             20.1%

The download was stopped at 20.1%. Using "curl -C -" we can continue downloading from where we left off. The download will now continue from 20.1%.

$ curl -C - -O http://www.gnu.org/software/gettext/manual/gettext.html
###############            21.1%
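What '-C -' works out can be approximated by hand: the resume offset is the number of bytes already present in the partial local file. The sketch below uses a small stand-in file with made-up contents and only prints the equivalent explicit-offset command; URL is a placeholder.

```shell
# Create a stand-in for a partially downloaded file (5 bytes).
partial=$(mktemp)
printf 'abcde' > "$partial"

# The resume offset is the size of what is already on disk.
offset=$(wc -c < "$partial" | tr -d ' ')

# Print (not run) the equivalent explicit-offset command; URL is a placeholder.
echo "curl -C $offset -o $partial URL"
rm -f "$partial"
```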

Data rate limiting

You can limit the data transfer rate with the --limit-rate option, passing the maximum speed as its argument.

$ curl --limit-rate 1000B -O http://www.gnu.org/software/gettext/manual/gettext.html

The command above limits the transfer rate to 1000 bytes per second. curl may briefly exceed this rate in bursts, but the average speed will be about 1000 bytes per second.

Below is the progress meter for the above command. You can see that the current speed is close to 1000 bytes per second.

  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  1 1215k    1 13601    0     0    957      0  0:21:40  0:00:14  0:21:26   999
  1 1215k    1 14601    0     0    960      0  0:21:36  0:00:15  0:21:21   999
  1 1215k    1 15601    0     0    962      0  0:21:34  0:00:16  0:21:18   999
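The "Time Total" column of the meter can be checked with simple arithmetic: total size divided by average speed. The sketch below assumes that "1215k" means 1215 × 1024 bytes; the helper name eta is hypothetical.

```shell
# Estimate the total transfer time (H:MM:SS) for a given size and average speed.
eta() {
  size_bytes=$1
  rate=$2            # bytes per second
  secs=$((size_bytes / rate))
  printf '%d:%02d:%02d\n' $((secs / 3600)) $((secs % 3600 / 60)) $((secs % 60))
}

# Assumption: "1215k" in the meter means 1215 * 1024 bytes.
total=$(eta $((1215 * 1024)) 957)
echo "$total"   # prints 0:21:40, the estimate in the first row of the meter
```

At an average of 957 bytes per second the whole file needs about 1300 seconds, which is why a limit of roughly 1000 bytes per second turns a 30-second download into one of more than 20 minutes.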

Load the file only if it was changed before / after the specified time

Using the -z option, you can get only files that were modified after a certain time. This works for both FTP and HTTP.

When -z is followed by a date expression, curl loads the file (yy.html, say) only if it changed later than the specified date and time.

When the date expression is prefixed with a dash (-), curl loads the file (file.html, say) only if it changed before the specified date and time. Type 'man curl_getdate' to learn more about the different syntaxes supported for date expressions.
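The two forms of -z can be wrapped in small shell functions. This is only a sketch: the function names are hypothetical, and the date and the www.example.com URLs in the usage comments are placeholders, not the values from the original examples.

```shell
# Hypothetical wrappers around curl's -z (time condition) option.

# Download the URL only if it was modified after the given date.
fetch_if_newer() {
  curl -z "$1" -O "$2"
}

# Download the URL only if it was modified before the given date
# (a leading dash on the date expression inverts the condition).
fetch_if_older() {
  curl -z "-$1" -O "$2"
}

# Usage (placeholder date and URLs):
#   fetch_if_newer 21-Dec-11 http://www.example.com/yy.html
#   fetch_if_older 21-Dec-11 http://www.example.com/file.html
```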

Passing HTTP Authentication in cURL

Sometimes websites require a username and password to view their content. With the -u option, you can pass these credentials from cURL to the web server as shown below.

$ curl -u username:password URL

Note: By default curl uses basic HTTP authentication. Other authentication methods can be selected with --ntlm or --digest.

cURL can also be used to download files from FTP servers. If the specified FTP path is a directory, the list of files in it will be displayed by default.

$ curl -u ftpuser:ftppass -O ftp://ftp_server/public_html/xss.php

The command above will download the xss.php file from the FTP server and save it in your local directory.

$ curl -u ftpuser:ftppass -O ftp://ftp_server/public_html/

Here the URL refers to a directory. Therefore, cURL will list the files and directories at the given URL.

CURL supports URL ranges. When a range is given, the corresponding files within that range will be loaded. This will be useful when downloading packages from FTP mirror sites.

$ curl "ftp://ftp.uk.debian.org/debian/pool/main/[a-z]/"

The command above will list all packages in the a-z range in the terminal.

Uploading files to FTP server

curl can also be used to upload to an FTP server with the -T option.

$ curl -u ftpuser:ftppass -T myfile.txt ftp://ftp.testserver.com

The command above will upload a file named myfile.txt to the FTP server. You can also upload multiple files at one time using a list in braces.

$ curl -u ftpuser:ftppass -T "{file1,file2}" ftp://ftp.testserver.com

Optionally, we can use "-" to read from standard input and send the data to the remote machine.

$ curl -u ftpuser:ftppass -T - ftp://ftp.testserver.com/myfile_1.txt

The command above reads the user's input from standard input and saves it on the FTP server under the name 'myfile_1.txt'.

You can specify '-T' for each URL, and each file-URL pair will determine what to upload where.

More information with increased verbosity and tracing option

You can find out what is going on using the -v option. The -v option turns on verbose mode and prints details.

$ curl -v "https://www.google.co.th/?gws_rd=ssl"

The command above will output the following:

* Rebuilt URL to: https://www.google.co.th/?gws_rd=ssl
* Hostname was NOT found in DNS cache
*   Trying 27.123.17.49...
* Connected to www.google.co.th (27.123.17.49) port 80 (#0)
> GET / HTTP/1.1
> User-Agent: curl/7.38.0
> Host: www.google.co.th
> Accept: */*
>
< HTTP/1.1 200 OK
< Date: Fri, 14 Aug 2015 23:07:20 GMT
< Expires: -1
< Cache-Control: private, max-age=0
< Content-Type: text/html; charset=windows-874
< P3P: CP="This is not a P3P policy! See https://support.google.com/accounts/answer/151657?hl=en for more info."
* Server gws is not blacklisted
< Server: gws
< X-XSS-Protection: 1; mode=block
< X-Frame-Options: SAMEORIGIN
< Set-Cookie: PREF=ID=1111111111111111:FF=0:TM=1439593640:LM=1439593640:V=1:S=FfuoPPpKbyzTdJ6T; expires=Sun, 13-Aug-2017 23:07:20 GMT; path=/; domain=.google.co.th
...

If you need more detailed information, you can use the --trace option. It writes a complete trace dump of all incoming and outgoing data to the specified file.

Let's talk a little about the PHP programming language, and specifically about the CURL extension, i.e. the ability to interact with different servers over different protocols from a PHP script.

Before moving on to CURL, a reminder that we have already touched on PHP, for example in the articles about exporting to Excel in PHP and about authentication in PHP; now let's look at sending requests from PHP.

What is CURL?

CURL is a PHP function library that can be used to send requests, such as HTTP requests, from a PHP script. CURL supports protocols such as HTTP, HTTPS, FTP and others. HTTP requests can be sent with the GET, POST and PUT methods.

CURL is useful when you need to call a remote script and get its result, or simply save the HTML code of a requested page. Everyone can find their own use for it; the point is that you can send requests while the script is running.

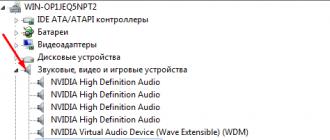

Connecting the CURL library in PHP

In order to use the CURL library, you need to connect it accordingly.

Note! As an example, we'll use PHP 5.4.39 on Windows 7, and we'll use Apache 2.2.22 as the web server.

The first thing to do is copy the libraries ssleay32.dll, libeay32.dll and libssh2.dll, which are located in the PHP directory, to the Windows system directory, namely C:\Windows\System32.

Then enable the php_curl.dll library in php.ini, i.e. uncomment the following line.

Library is not connected:

;extension=php_curl.dll

Library connected:

extension=php_curl.dll

That's it. Restart Apache and call the phpinfo() function; if the library was connected successfully, you should see a curl section.

If it is not there, the library did not load. The most common reason is that the above DLLs were not copied to the Windows system directory.

CURL example - requesting a remote page to display

In this example, we will simply request a remote page over HTTP using the GET method and display its contents on the screen.

We have a directory named test containing two PHP files, test_curl.php and test.php: test_curl.php is the script in which we use curl, and test.php is the remote script that we call. The code is commented in detail.

test_curl.php code

test.php code

<?php
// Show a different heading depending on the id parameter of the GET request
if (isset($_GET['id'])) {
    switch ($_GET['id']) {
        case 1: echo "<h1>Heading 1</h1>"; break;
        case 2: echo "<h2>Heading 2</h2>"; break;
        case 3: echo "<h3>Heading 3</h3>"; break;
    }
}
?>

As a result, if you run test_curl.php you will see "Heading 1" on the screen. You can experiment by passing other id values (in this case 2 or 3).

CURL example - calling a remote script and getting the result

Now let's call the script and get its result so that we can process it later; this time let's use the POST method. The file names stay the same.

test_curl.php code

test.php code

If we run test_curl.php, 111 will be displayed on the screen, i.e. 1.11 (the result of the call to the remote script) multiplied by 100.

Now let's talk about the functions and their constants.

Frequently used CURL functions and constants

- curl_init - Initializes a session;

- curl_close - Terminate the session;

- curl_exec - Executes the request;

- curl_errno - Returns the error code;

- curl_setopt - Sets a parameter for a session, for example:

- CURLOPT_HEADER - value 1 means that headers must be returned;

- CURLOPT_INFILESIZE - parameter for specifying the expected file size;

- CURLOPT_VERBOSE - value 1 means that CURL will display detailed messages about all operations performed;

- CURLOPT_NOPROGRESS - disable the operation progress indicator, value 1;

- CURLOPT_NOBODY - if you do not need a document, but only need headers, then set the value to 1;

- CURLOPT_UPLOAD - to upload a file to the server;

- CURLOPT_POST - execute a request using the POST method;

- CURLOPT_FTPLISTONLY - getting a list of files in the FTP server directory, value 1;

- CURLOPT_PUT - execute the request using the PUT method, value 1;

- CURLOPT_RETURNTRANSFER - return the result without outputting to the browser, value 1;

- CURLOPT_TIMEOUT - the maximum execution time in seconds;

- CURLOPT_URL - specifying the address for contact;

- CURLOPT_USERPWD - string with username and password in the form [username]:[password];

- CURLOPT_POSTFIELDS - data for POST request;

- CURLOPT_REFERER - sets the value of the HTTP header "Referer:";

- CURLOPT_USERAGENT - sets the value of the HTTP header "User-Agent:";

- CURLOPT_COOKIE - the content of the "Cookie:" header that will be sent with an HTTP request;

- CURLOPT_SSLCERT - name of the certificate file in PEM format;

- CURLOPT_SSL_VERIFYPEER - value 0 to disable verification of the remote server certificate (default 1);

- CURLOPT_SSLCERTPASSWD - password for the certificate file.

- curl_getinfo - Returns information about the operation, the second parameter can be a constant to indicate what exactly needs to be shown, for example:

- CURLINFO_EFFECTIVE_URL - last used URL;

- CURLINFO_HTTP_CODE - the last received HTTP code;

- CURLINFO_FILETIME - modification date of the retrieved document;

- CURLINFO_TOTAL_TIME - operation time in seconds;

- CURLINFO_NAMELOOKUP_TIME - server name resolution time in seconds;

- CURLINFO_CONNECT_TIME - time taken to establish a connection, in seconds;

- CURLINFO_PRETRANSFER_TIME - time elapsed from the start of the operation to the readiness for the actual data transfer, in seconds;

- CURLINFO_STARTTRANSFER_TIME - the time elapsed from the start of the operation to the moment the first data byte was transferred, in seconds;

- CURLINFO_REDIRECT_TIME - time spent on redirection, in seconds;

- CURLINFO_SIZE_UPLOAD - number of bytes uploaded;

- CURLINFO_SIZE_DOWNLOAD - number of bytes downloaded;

- CURLINFO_SPEED_DOWNLOAD - average download speed;

- CURLINFO_SPEED_UPLOAD - average upload speed;

- CURLINFO_HEADER_SIZE - total size of all received headers;

- CURLINFO_REQUEST_SIZE - total size of all sent requests;

- CURLINFO_SSL_VERIFYRESULT - the result of SSL certificate verification requested by setting the CURLOPT_SSL_VERIFYPEER parameter;

- CURLINFO_CONTENT_LENGTH_DOWNLOAD - the size of the loaded document, read from the Content-Length header;

- CURLINFO_CONTENT_LENGTH_UPLOAD - uploaded data size;

- CURLINFO_CONTENT_TYPE - the content of the received Content-type header, or NULL in the case when this header was not received.

You can find more details about the CURL functions and their constants on the official PHP website.
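The command-line tool exposes similar transfer details through its -w (--write-out) option, which can be handy for checking from a shell what curl_getinfo should report. The sketch below is a hypothetical wrapper: url_effective, http_code and time_total are curl write-out variables that roughly correspond to CURLINFO_EFFECTIVE_URL, CURLINFO_HTTP_CODE and CURLINFO_TOTAL_TIME, and the URL in the usage comment is a placeholder.

```shell
# Hypothetical helper: print the effective URL, HTTP status code and total
# time for a request, discarding the response body.
curl_info() {
  curl -s -o /dev/null \
    -w '%{url_effective} %{http_code} %{time_total}\n' "$1"
}

# Usage (placeholder URL):
#   curl_info http://www.example.com/
```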

The life of a web developer is clouded by complexity. It is especially frustrating when the source of these difficulties is unknown. Is it a problem with sending a request, or with a response, or with a third-party library, or is the external API buggy? There are a bunch of different tools that can make our life easier. Here are some command line tools that I personally find invaluable.

cURL

cURL is a program for transferring data over various protocols, similar to wget. The main difference is that by default wget saves to a file and cURL outputs to the command line. This makes it very easy to view the content of the website. For example, here's how to quickly get your current external IP:

$ curl ifconfig.me
93.96.141.93

The options -i (show headers) and -I (show only headers) make cURL a great tool for debugging HTTP responses and analyzing what the server is sending you:

$ curl -I habrahabr.ru
HTTP/1.1 200 OK
Server: nginx
Date: Thu, 18 Aug 2011 14:15:36 GMT
Content-Type: text/html; charset=utf-8
Connection: keep-alive
Keep-alive: timeout=25

The -L option is useful too: it makes cURL automatically follow redirects. cURL supports HTTP authentication, cookies, tunneling through an HTTP proxy, manual header settings, and much, much more.

Siege

Siege is a load testing tool. It also has a handy -g option, which is very similar to curl -iL but also shows you the HTTP request headers. Here's an example with google.com (some headers have been removed for brevity):

$ siege -g www.google.com
GET / HTTP/1.1
Host: www.google.com
User-Agent: JoeDog/1.00 (X11; I; Siege 2.70)
Connection: close

HTTP/1.1 302 Found
Location: http://www.google.co.uk/
Content-Type: text/html; charset=UTF-8
Server: gws
Content-Length: 221
Connection: close

GET / HTTP/1.1
Host: www.google.co.uk
User-Agent: JoeDog/1.00 (X11; I; Siege 2.70)
Connection: close

HTTP/1.1 200 OK
Content-Type: text/html; charset=ISO-8859-1
X-XSS-Protection: 1; mode=block
Connection: close

But what Siege is really great for is load testing. Like the Apache benchmark tool ab, it can send many concurrent requests to a site and see how the site handles the traffic. The following example tests Google with 20 concurrent connections for 30 seconds, after which the result is displayed:

$ siege -c20 www.google.co.uk -b -t30s
...
Lifting the server siege... done.
Transactions: 1400 hits
Availability: 100.00 %
Elapsed time: 29.22 secs
Data transferred: 13.32 MB
Response time: 0.41 secs
Transaction rate: 47.91 trans/sec
Throughput: 0.46 MB/sec
Concurrency: 19.53
Successful transactions: 1400
Failed transactions: 0
Longest transaction: 4.08
Shortest transaction: 0.08

One of the most useful features of Siege is that it can work not only with one address, but also with a list of URLs from a file. This is great for load testing because you can simulate real-world traffic to your site, rather than just hitting the same URL over and over again. For example, here's how to use Siege to load a server using addresses from your Apache log:

$ cut -d ' ' -f7 /var/log/apache2/access.log > urls.txt
$ siege -c
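To see why field 7 is the one to extract, here is a small sketch that runs the same cut invocation on a single made-up log line in Apache's common log format (the line itself is an assumption, not taken from a real log).

```shell
# A made-up access log line in Apache's common log format.
line='127.0.0.1 - - [18/Aug/2011:14:15:36 +0000] "GET /index.html HTTP/1.1" 200 2326'

# With a single space as the delimiter, field 7 is the requested path.
path=$(printf '%s\n' "$line" | cut -d ' ' -f7)
echo "$path"   # prints /index.html
```

The timestamp occupies two space-separated fields and the quoted request starts at field 6 with the method, so the path lands in field 7.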

Ngrep

For serious traffic analysis, there is Wireshark with thousands of settings, filters and configurations. There is also a command line version, tshark. But for simple tasks, I consider the functionality of Wireshark to be excessive. So as long as I don't need a powerful weapon, I use ngrep. It lets you do the same thing with network packets as grep does with files.

For web traffic, you'll almost always want to use the -W byline option, which preserves line breaks, as well as the -q option, which hides redundant information about non-matching packets. Here is an example of a command that captures all packets containing a GET or POST request:

$ ngrep -q -W byline "^(GET|POST) .*"
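The pattern ^(GET|POST) .* can be tried out without capturing any traffic. In the sketch below grep -E stands in for ngrep, on the assumption that the pattern behaves the same way as an extended regular expression; the sample request lines are made up.

```shell
# Made-up first lines of HTTP messages.
sample='GET /search?q=curl HTTP/1.1
POST /login HTTP/1.1
HTTP/1.1 200 OK
HEAD / HTTP/1.1'

# grep -E stands in for ngrep here: same extended regular expression,
# applied to lines of text instead of packet payloads.
matches=$(printf '%s\n' "$sample" | grep -E '^(GET|POST) .*')
echo "$matches"
```

Only the GET and POST lines are printed; the response status line and the HEAD request do not match.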

You can add an additional filter for packets, for example, by a given host, IP address or port. Here is a filter for all inbound and outbound traffic to google.com, port 80, which contains the word “search”.

$ ngrep -q -W byline "search" host www.google.com and port 80

A real practical example: you need to reboot the router (modem) to change the IP address. To do this, you need to: log in to the router, go to the maintenance page and click the "Restart" button. If this action needs to be performed several times, then the procedure must be repeated. Agree, you don't want to do this routine manually every time. cURL lets you automate all of this. With just a few cURL commands, you can achieve authorization and task execution on the router.

- cURL is handy for retrieving data from websites on the command line.

That is, the use cases for cURL are quite real, although in most cases cURL is needed by programmers who use it in their programs.

CURL supports many protocols and authorization methods, is able to transfer files, works correctly with cookies, supports SSL certificates, proxies and much more.

cURL in PHP and command line

We can use cURL in two main ways: in PHP scripts and on the command line.

To enable cURL in PHP on the server, you need to uncomment the line in the php.ini file

extension=php_curl.dll

And then restart the server.

On Linux, you need to install the curl package.

On Debian, Ubuntu or Linux Mint:

$ sudo apt-get install curl

On Fedora, CentOS, or RHEL:

$ sudo yum install curl

To clearly see the difference in use in PHP and on the command line, we will perform the same tasks twice: first in a PHP script, and then on the command line. We will try not to get confused.

Retrieving data with cURL

Getting data with cURL in PHP

PHP example:

Everything is very simple:

$target_url - the address of the site that interests us. After the site address, you can put a colon and add the port number (if the port is different from the standard one).

curl_init - initializes a new session and returns a handle, which in our example is assigned to the variable $ch.

Then we execute the cURL request with the function curl_exec, to which the handle is passed as a parameter.

Everything is very logical, but when this script is executed, the content of the site will be displayed on our page. What if we don't want to display the content, but want to write it to a variable (for subsequent processing or parsing)?

Let's extend our script a bit:

if (curl_errno($ch) > 0) {
    echo "Curl error: " . curl_error($ch);
}
curl_close($ch);
?>

We have added the line curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);.

curl_setopt - sets options. A complete list of options can be found in the PHP documentation for curl_setopt.

$response_data = curl_exec($ch);

Now the result of the request is assigned to the $response_data variable, with which further operations can be performed. For example, you can display its contents.

The lines

if (curl_errno($ch) > 0) { echo "Curl error: " . curl_error($ch); }

serve for debugging, in case of errors.

Getting data with cURL on the command line

At the command line, just type

$ curl mi-al.ru

where instead of mi-al.ru you put your site address.

If you need to store the data in a variable rather than display the result on the screen, do this:

$ temp=`curl mi-al.ru`

In this case, some data (the progress meter) is still displayed.

To prevent it from being displayed, add the -s option:

$ temp=`curl -s mi-al.ru`

You can see what has been stored:

$ echo "$temp" | less

Basic Authentication and HTTP Authentication

Authentication, in simple terms, is entering a username and password.

Basic authentication is server-side authentication. For this, two files are created: .htaccess and .htpasswd.

The content of the .htaccess file is something like this:

AuthName "For registered users only!"
AuthType Basic
require valid-user
AuthUserFile /home/freeforum.biz/htdocs/.htpassw

The content of the .htpasswd file is something like this:

mial:CRdiI.ZrZQRRc

That is, the login and the password hash.

When you try to access a password-protected folder, the browser displays a prompt asking for the username and password.

HTTP authentication is the case when we enter a username and password into a form on the site. It is this kind of authentication that is used when entering mail, forums, etc.

CURL Basic Authentication (PHP)

There is a website that requires basic authentication.

Let's try our initial script:

if (curl_errno($ch) > 0) {
    echo "Curl error: " . curl_error($ch);
} else {
    echo $response_data;
}
curl_close($ch);
?>

Although the script thinks that there is no error, we don't like the output at all.

Add two lines:

curl_setopt($ch, CURLOPT_HTTPAUTH, CURLAUTH_BASIC);
curl_setopt($ch, CURLOPT_USERPWD, "ru-board:ru-board");

In the first line we set the authentication type: basic. The second line contains the name and password separated by a colon (in our case, the name and password are the same: ru-board). It turned out like this:

if (curl_errno($ch) > 0) {
    echo "Curl error: " . curl_error($ch);
} else {
    echo $response_data;
}
curl_close($ch);
?>

Let's try it. Great!

Basic cURL authentication (on the command line)

The same can be achieved on the command line with one line:

$ curl -u ru-board:ru-board http://62.113.208.29/Update_FED_DAYS/

I didn't forget to specify the authentication type, it's just that in cURL the basic authentication type is the default.

On the command line, everything turned out so quickly that, out of frustration, I wrote this program. It connects to the site and downloads the most recent update:

temp=`curl -s -u ru-board:ru-board http://62.113.208.29/Update_FED_DAYS/ | grep -E -o "Update_FED_201[0-9]{1}.[0-9]{2}.[0-9]{2}.7z" | uniq | tail -n 1`; curl -o $temp -u ru-board:ru-board http://62.113.208.29/Update_FED_DAYS/$temp
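The extraction step of this program (grep -E -o, then uniq, then tail -n 1) can be tried offline. The sketch below runs it on a made-up directory listing; the file names, the listing format and the exact pattern are assumptions for illustration, and the real page may look different.

```shell
# Made-up directory listing; the real page and file names may differ.
listing='<a href="Update_FED_2011.08.17.7z">Update_FED_2011.08.17.7z</a>
<a href="Update_FED_2011.08.18.7z">Update_FED_2011.08.18.7z</a>'

# -o prints only the matching parts, uniq drops adjacent duplicates
# (each name occurs twice per line), tail keeps the last one.
latest=$(printf '%s\n' "$listing" \
  | grep -E -o 'Update_FED_201[0-9]\.[0-9]{2}\.[0-9]{2}\.7z' \
  | uniq | tail -n 1)
echo "$latest"   # prints Update_FED_2011.08.18.7z
```

Taking the last match only yields the newest file if the server lists the files in chronological order.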

With just a few more commands, you can add:

- unpacking the archive into the specified directory;

- launching the ConsultantPlus update (these are updates for it);

- you can check whether the last available update has already been downloaded or a new one has appeared;

- add it all to Cron for daily updates.

HTTP cURL authentication in PHP

We need to know:

- address where to send data for authentication

- send method GET or POST

- login

- password

The address where the data should be sent can be taken from the authentication form. For example: