Preamble. P2P video chat based on WebRTC is an alternative to Skype and other means of communication. The main elements of a WebRTC-based p2p video chat are a browser and a contact server. P2P video chats are peer-to-peer video chats in which the server takes no part in transferring the information streams. Information is transferred directly between users' browsers (peering) without any additional programs. In addition to browsers, p2p video chats use contact servers, which register users, store data about them and set up connections between users. Browsers that support WebRTC and HTML5 provide instant text messaging and file transfer, as well as voice and video communication over IP networks.

So, chats, web chats, voice and video chats in the web interface, IMS, VoIP are services that provide online communications through composite packet-switched networks. As a rule, communication services require either the installation of client applications on user devices (PCs, smartphones, etc.), or the installation of plugins and extensions into browsers. Services have their own communication networks, most of which are built on a client-server architecture.

Communication services, with the exception of IMS, are applications in which voice, video, data and text channels are not integrated. The network of each service is an application-level network. It should be noted that these applications cannot work in several communication networks at once, i.e. they generally cannot interact with each other, so a separate application must be installed for each communication network.

The problem of integrating real-time communication services (chat, telephony, video conferencing), i.e. integrating voice, video and data channels and accessing them from one application (the browser), can be solved in peer-to-peer (p2p, point-to-point) video chats based on WebRTC. In effect, a browser that supports WebRTC becomes a unified interface for all user devices (PCs, smartphones, iPads, IP phones, mobile phones, etc.) that work with communication services.

It is WebRTC that implements in the browser all the technologies needed for real-time communication. The essence of p2p video chats is that multimedia and text data are transmitted directly between users' browsers (remote peering) without the participation of a server or additional programs. Thus, browsers not only provide access to almost all Internet information resources stored on servers, but also become a means of access to all real-time communication services and mail services (voice mail, e-mail, SMS, etc.).

The servers (contact servers) of p2p video chats are intended only for registering users, storing data about users and establishing a connection (switching) between users' browsers. The first p2p video chats were implemented using Flash. Flash p2p video chats are used, for example, in social networks. They do not provide high-quality multimedia data transfer. In addition, to output the voice and video stream from a microphone and a video camera, a Flash p2p video chat requires the Flash plug-in to be installed in the web browser.

Next-generation telecommunication services, however, include web communications that use only browsers and contact servers supporting the WebRTC protocols and the HTML5 specification. Any user device (PC, iPad, smartphone, etc.) equipped with such a browser can provide high-quality voice and video calls, as well as the transfer of instant text messages and files.

So, the new technology for web communications (p2p chats, video chats) is WebRTC. Together with HTML5, CSS3 and JavaScript, WebRTC allows you to create various web applications. WebRTC is intended for organizing real-time web communications using a peer-to-peer architecture. P2P chats based on WebRTC provide file transfer, as well as text, voice and video communication between users over the Internet using only web browsers, without external add-ons or plugins.

In p2p chats, the server is used only to establish a p2p connection between two browsers. HTML5, CSS3 and JavaScript are used to create the client side of a p2p chat based on the WebRTC protocol. The client application interacts with browsers via the WebRTC API.

WebRTC is implemented by three JavaScript APIs:

- RTCPeerConnection;

- MediaStream (getUserMedia);

- RTCDataChannel.

Browsers transmit media over SRTP, which runs over UDP. Since NAT creates problems for browsers (clients) behind NAT routers that use p2p connections over the Internet, STUN is used to traverse NAT translators. STUN is a client-server protocol that runs on top of the UDP transport protocol. In p2p chats, as a rule, a public STUN server is used, and the information received from it is used for a UDP connection between two browsers if they are behind NAT.

Examples of implementing WebRTC applications (p2p chats, voice and video web chats):

1. P2P video chat Bistri (one-click video chat, p2p chat), made on the basis of WebRTC, can be opened on Bistri. Bistri works in a browser without installing additional programs and plugins. The essence of the work is as follows: open a p2p video chat using the specified link, after registration in the interface that opens, invite partners, then from the list of peer-clients select the partner who is in the network and click on the "video call" button.

As a result, MediaStream (getUserMedia) will capture the microphone and webcam, and the server will exchange signaling messages with the selected partner. After the signaling messages have been exchanged, the PeerConnection API creates channels for transmitting the voice and video streams. In addition, Bistri supports the transfer of instant text messages and files. Fig. 1 shows a screenshot of the Bistri p2p video chat interface.

Figure 1. P2P video chat Bistri

2. Twelephone (p2p video chat, p2p chat, SIP Twelephone) is a client application built on HTML5 and WebRTC that allows you to make voice and video calls and to send instant text messages, i.e. Twelephone includes a text p2p chat, a video chat and SIP Twelephone. It should be noted that Twelephone supports the SIP protocol, so you can now make and receive voice and video calls from SIP phones using your Twitter account as a phone number. In addition, text messages can be dictated through a microphone: voice recognition software enters the text into the "Send a message" line.

Twelephone is web telephony that runs in the Google Chrome browser, starting from version 25, without additional software. Twelephone was developed by Chris Matthieu. The Twelephone backend is built on top of Node.js. The server (contact server) is used only to establish a p2p connection between two browsers or WebRTC clients. The Twelephone application does not have its own means of authorization; instead it relies on connecting to a Twitter account.

Fig. 2 shows a screenshot of the Twelephone p2p video chat interface.

Figure 2. P2P Twelephone

3. Group p2p video chat Conversat.io is built on WebRTC and HTML5. Conversat video chat is developed on the basis of the SimpleWebRTC library and is intended for communication between up to 6 peer-clients in one room (to communicate, enter the name of the room shared by the peer-clients in the "Name the conversation" line). P2P video chat Conversat provides communication services to users without registration on a contact server. Fig. 3 shows a screenshot of the Conversat p2p video chat interface.

Figure 3. Group P2P video chat Conversat.io

To participate in P2P video chats based on WebRTC, users must have a browser that supports the WebRTC protocol and the HTML5 specification. Currently, Google Chrome browsers starting with version 25 and Mozilla Firefox Nightly support the WebRTC protocol and the HTML5 specification. WebRTC applications are superior to Flash applications in terms of image and sound transmission quality.

WebRTC (Web Real-Time Communications) is a technology that allows Web applications and sites to capture and selectively transmit audio and / or video media streams, as well as exchange arbitrary data between browsers, without the need for intermediaries. The set of standards that WebRTC technology includes allows data exchange and peer-to-peer teleconferencing without the need for the user to install plugins or any other third-party software.

WebRTC is made up of several interconnected programming interfaces (APIs) and protocols that work together. The documentation you find here will help you understand the basics of WebRTC, how to set up and use a data and media streaming connection, and more.

Compatibility

Since the implementation of WebRTC is still evolving and every browser supports a different set of WebRTC features, we strongly recommend using the Adapter.js polyfill library from Google before starting to work on your code.

Adapter.js uses shims and polyfills to smooth over differences in WebRTC implementations among the environments that support it. Adapter.js also handles vendor prefixes and other property naming differences, making it easier to develop with WebRTC and giving the most consistent results. The library is also available as an NPM package.


WebRTC concepts and usage

WebRTC is multipurpose and, together with the Media Capture and Streams API, provides powerful multimedia capabilities for the Web, including support for audio and video conferencing, file sharing, screen capture, identity management, and interoperability with legacy phone systems, including support for sending DTMF (touch-tone dialing) signals. Connections between nodes can be created without special drivers or plugins, and often without intermediate servers.

The connection between two nodes is represented by an object of the RTCPeerConnection interface. Once a connection has been established and opened using the RTCPeerConnection object, media streams (MediaStreams) and / or data channels (RTCDataChannels) can be added to it.

Media streams can consist of any number of media tracks. These tracks, represented by objects of the MediaStreamTrack interface, can contain one of several types of media, including audio, video and text (such as subtitles or chapter titles). Most streams consist of at least one audio track, often together with a video track, and can be sent and received as live streams (real-time media) or saved to a file.

Also, you can use the connection between two nodes to exchange arbitrary data using the RTCDataChannel interface object, which can be used to transfer service information, exchange data, game status packets, file transfer or closed data transfer channels.
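As a rough illustration of the data channel described above, here is a minimal sketch. The helper name openChat, the channel label "chat" and the greeting text are assumptions made for the example; only createDataChannel(), send() and the onopen / onmessage handlers come from the standard API.

```javascript
// Hypothetical helper: open a data channel on an existing RTCPeerConnection
// and report every text message received from the other side.
function openChat(pc, onText) {
  const channel = pc.createDataChannel("chat"); // the label is an arbitrary name
  channel.onopen = () => {
    // The channel can only be written to once it has opened
    channel.send("hello");
  };
  channel.onmessage = (event) => {
    onText(event.data); // event.data holds the payload sent by the remote peer
  };
  return channel;
}
```

In a browser this would be called as openChat(pc, console.log) on the side that initiates the channel; the other side receives the channel through the datachannel event of its RTCPeerConnection.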


WebRTC interfaces

Because WebRTC provides interfaces that work together to perform various tasks, we have divided them into categories.

Connection setup and management

These interfaces are used to configure, open and manage WebRTC connections. They represent peer-to-peer media connections, data channels, and interfaces used to exchange information about the capabilities of each node to select the best configuration for establishing a two-way multimedia connection.

- RTCPeerConnection: Represents a WebRTC connection between the local computer and a remote node. Used to handle the transfer of data between the two nodes.

- RTCSessionDescription: Represents session parameters. Each RTCSessionDescription contains a type showing which part (offer / answer) of the negotiation process it describes, and an SDP descriptor for the session.

- RTCIceCandidate: Represents an Interactive Connectivity Establishment (ICE) candidate for establishing an RTCPeerConnection.

- RTCIceTransport: Represents information about an Interactive Connectivity Establishment (ICE) transport.

- RTCPeerConnectionIceEvent: Represents events that occur for ICE candidates, usually on an RTCPeerConnection. One event is of this type: icecandidate.

- RTCRtpSender: Controls the encoding and transmission of data for a MediaStreamTrack object on an RTCPeerConnection object.

- RTCRtpReceiver: Controls the receipt and decoding of data for a MediaStreamTrack object on an RTCPeerConnection object.

- RTCTrackEvent: Indicates that a new incoming MediaStreamTrack object has been created and an RTCRtpReceiver object has been added to the RTCPeerConnection object.

- RTCCertificate: Represents a certificate used by an RTCPeerConnection object.

- RTCDataChannel: Represents a bi-directional data channel between two nodes of a connection.

- RTCDataChannelEvent: Represents the events raised when an RTCDataChannel object is attached to an RTCPeerConnection object. One event is of this type: datachannel.

- RTCDTMFSender: Controls the encoding and transmission of dual-tone multi-frequency (DTMF) signaling for an RTCPeerConnection object.

- RTCDTMFToneChangeEvent: Indicates an incoming dual-tone multi-frequency (DTMF) tone change event. This event does not bubble and cannot be canceled (unless otherwise noted).

- RTCStatsReport: Asynchronously reports statistics for the passed MediaStreamTrack object.

- RTCIdentityProviderRegistrar: Registers an identity provider (IdP).

- RTCIdentityProvider: Enables the browser to request the creation or validation of an identity assertion.

- RTCIdentityAssertion: Represents the identity of the remote node of the current connection. If the node has not yet been set and verified, the interface returns null. Once set, it does not change.

- RTCIdentityEvent: Represents an identity assertion generated by an identity provider (IdP). It is an event of an RTCPeerConnection object. One event is of this type: identityresult.

- RTCIdentityErrorEvent: Represents an error associated with an identity provider (IdP). It is an event of an RTCPeerConnection object. Two events are of this type: idpassertionerror and idpvalidationerror.

Guides

- Overview of the WebRTC Architecture: Underneath the API that developers use to create and use WebRTC is a set of network protocols and connection standards. This overview is a showcase of these standards.

- WebRTC allows you to establish a node-to-node connection for transferring arbitrary data, audio, video streams, or any combination of these in a browser. In this article, we will take a look at the life of a WebRTC session, starting with establishing a connection and going all the way until it is closed when it is no longer needed.

- Overview of WebRTC API: WebRTC consists of several interconnected programming interfaces (APIs) and protocols that work together to support exchanging data and media streams between two or more nodes. This article provides a quick overview of each of these APIs and its purpose.

- WebRTC Basics: This article walks you through creating a cross-browser RTC application. By the end of this article, you should have a working point-to-point data and media channel.

- WebRTC Protocols: This article introduces the protocols on top of which the WebRTC API is built.

- This guide describes how you can use a node-to-node connection and a linked ...

Technologies for making calls from the browser have been around for many years: Java, ActiveX, Adobe Flash... In the past few years it has become clear that plugins and third-party virtual machines are neither convenient (why should I install anything at all?) nor, most importantly, secure. What to do? There is a way out!

Until recently, several protocols were used for IP telephony and video in IP networks: SIP, the most widespread; H.323 and MGCP, which are leaving the scene; Jabber / Jingle (used in Gtalk); the half-open Adobe RTMP*; and, of course, the proprietary Skype. The WebRTC project, initiated by Google, is trying to revolutionize the world of IP and web telephony by making all softphones, including Skype, unnecessary. WebRTC not only implements all communication capabilities directly inside the browser, which is now installed on almost every device, but also tries to solve the more general problem of communication between browser users (exchange of various data, screen broadcasting, collaboration on documents, and much more).

WebRTC from the web developer side

From a web developer's point of view, WebRTC consists of two main parts:

- control of media streams from local resources (camera, microphone or local computer screen) is implemented by the navigator.getUserMedia method, which returns a MediaStream object;

- peer-to-peer communication between devices that generate media streams, including the definition of communication methods and their direct transmission - RTCPeerConnection objects (for sending and receiving audio and video streams) and RTCDataChannel (for sending and receiving data from the browser).

What do we do?

We will figure out how to organize the simplest multi-user video chat between browsers based on WebRTC using web sockets. Let's start experimenting in Chrome / Chromium, the most advanced browsers in terms of WebRTC, although Firefox 22, released on June 24, has almost caught up with them. Note that the standard has not yet been adopted, and the API may change from version to version. All examples were tested in Chromium 28. For simplicity, we will not worry about code cleanliness or cross-browser compatibility.

MediaStream

The first and simplest WebRTC component is MediaStream. It gives the browser access to media streams from the camera and microphone of the local computer. In Chrome, this requires calling the navigator.webkitGetUserMedia() function (since the standard is not yet complete, all functions come with a prefix, and in Firefox the same function is called navigator.mozGetUserMedia()). When it is called, the user is prompted for permission to access the camera and microphone. The call can continue only after the user gives consent. The function takes the parameters of the required media stream and two callback functions: the first is called if access to the camera / microphone succeeds, the second if an error occurs. First, let's create an HTML file rtctest1.html with a button and a video element.

Microsoft CU-RTC-Web

Microsoft would not be Microsoft if, in response to Google's initiative, it had not immediately released its own incompatible non-standard version called CU-RTC-Web (html5labs.interoperabilitybridges.com/cu-rtc-web/cu-rtc-web.htm). Although IE's share, which is already small, continues to decline, the number of Skype users gives Microsoft hope to squeeze Google, and it can be assumed that this standard will be used in the browser version of Skype. The Google standard focuses primarily on communication between browsers; at the same time, the bulk of voice traffic still remains in the regular telephone network, and gateways between it and IP networks are needed not only for ease of use or faster distribution, but also as a monetization tool that will allow more players to develop them ... The emergence of another standard can not only lead to an unpleasant need for developers to support two incompatible technologies at once, but also in the long term give the user a wider choice of possible functionality and available technical solutions. Wait and see.

Enabling local stream

Inside the script tags of our HTML file, let's declare a global variable for the media stream:

var localStream = null;

The first parameter of the getUserMedia method specifies the parameters of the requested media stream - for example, simply enable audio and video:

var streamConstraints = {"audio": true, "video": true}; // Request access to both audio and video

Or specify additional parameters:

var streamConstraints = {"audio": true, "video": {"mandatory": {"maxWidth": "320", "maxHeight": "240", "maxFrameRate": "5"}, "optional": []}};

The second parameter of the getUserMedia method is a callback function that is called on success:

function getUserMedia_success(stream) {
  console.log("getUserMedia_success():", stream);
  localStream = stream; // Keep the stream so that it can later be added to the RTCPeerConnection
  localVideo1.src = URL.createObjectURL(stream); // Connect the media stream to the HTML element
}

The third parameter is an error-handler callback function that is called if an error occurs:

function getUserMedia_error(error) {
  console.log("getUserMedia_error():", error);
}

The actual call of the getUserMedia method, which requests access to the microphone and camera when the first button is pressed:

function getUserMedia_click() {
  console.log("getUserMedia_click()");
  navigator.webkitGetUserMedia(streamConstraints, getUserMedia_success, getUserMedia_error);
}
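The calls above use the prefixed, callback-based API of Chrome at the time of writing. In current browsers the same request is normally made through the promise-based navigator.mediaDevices.getUserMedia(), and the stream is attached through the srcObject property. A minimal sketch of that variant follows; the helper name startLocalStream and its two parameters are assumptions made for the example, not part of the original listing.

```javascript
// Hypothetical helper: request camera and microphone, then show the stream.
// `mediaDevices` is expected to be navigator.mediaDevices and `videoElement`
// a <video> element; both are passed in so the function has no hidden globals.
async function startLocalStream(mediaDevices, videoElement) {
  // Standard constraint syntax: upper bounds instead of the legacy "mandatory" block
  const constraints = {
    audio: true,
    video: { width: { max: 320 }, height: { max: 240 }, frameRate: { max: 5 } }
  };
  try {
    const stream = await mediaDevices.getUserMedia(constraints);
    videoElement.srcObject = stream; // replaces src = URL.createObjectURL(stream)
    return stream;
  } catch (error) {
    // The promise is rejected when the user denies access or no device is available
    console.log("getUserMedia failed:", error.name);
    return null;
  }
}
```

In a page it could be called as startLocalStream(navigator.mediaDevices, document.getElementById("localVideo1")), assuming the video element has the id localVideo1.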

It is not possible to access the media stream from a file opened locally. If we try to do this, we get an error:

NavigatorUserMediaError {code: 1, PERMISSION_DENIED: 1}

We will upload the resulting file to the server, open it in a browser and, in response to the request that appears, allow access to the camera and microphone.

You can select the devices that Chrome will access in Settings, Show advanced settings link, Privacy section, Content button. In Firefox and Opera browsers, devices are selected from the drop-down list directly when access is allowed.

With the HTTP protocol, permission will be requested every time the media stream is accessed after the page has loaded. Switching to HTTPS will allow you to display the request once, only at the very first access to the media stream.

Pay attention to the pulsing circle in the icon on the tab and the camera icon on the right side of the address bar:

RTCPeerConnection

RTCPeerConnection is an object designed to establish connections and transmit media streams over the network between participants. In addition, this object is responsible for generating the description of the media session (SDP), obtaining information about ICE candidates for traversing NAT or firewalls (locally and using STUN) and interacting with the TURN server. Each participant must have one RTCPeerConnection per connection. Media streams are transmitted using the encrypted SRTP protocol.

TURN servers

ICE candidates are of three types: host, srflx, and relay. Host contains information received locally, srflx - how the node looks to an external server (STUN), and relay - information for proxying traffic through the TURN server. If our node is behind NAT, then host candidates will contain local addresses and will be useless, srflx candidates will only help with certain types of NAT and relay will be the last hope to pass traffic through an intermediate server.

An example of an ICE candidate of the host type, with the address 192.168.1.37 and port udp / 34022:

a=candidate:337499441 2 udp 2113937151 192.168.1.37 34022 typ host generation 0
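To make the structure of such a line easier to see, here is a small sketch that splits a candidate attribute into named fields. The function name parseIceCandidate and the names of the returned properties are assumptions made for the example; the field order (foundation, component, transport, priority, address, port, "typ", type) follows the candidate attribute format used in SDP.

```javascript
// Split an SDP "candidate" attribute into named fields.
// Expected order: foundation component transport priority address port "typ" type [extensions]
function parseIceCandidate(line) {
  const text = line.replace(/^a=/, "").replace(/^candidate:/, "").trim();
  const parts = text.split(/\s+/);
  if (parts.length < 8 || parts[6] !== "typ") {
    throw new Error("Not a valid ICE candidate line: " + line);
  }
  return {
    foundation: parts[0],
    component: Number(parts[1]),        // 1 = RTP, 2 = RTCP
    transport: parts[2].toLowerCase(),  // udp or tcp
    priority: Number(parts[3]),
    address: parts[4],
    port: Number(parts[5]),
    type: parts[7]                      // host, srflx or relay (prflx also exists)
  };
}

const example = "a=candidate:337499441 2 udp 2113937151 192.168.1.37 34022 typ host generation 0";
console.log(parseIceCandidate(example));
```

For the line above, the result has the address 192.168.1.37, the port 34022, the transport udp and the type host.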

General format for specifying STUN / TURN servers:

var servers = {"iceServers": [{"url": "stun:stun.stunprotocol.org:3478"}, {"url": "turn:[email protected]:port", "credential": "password"}]};
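The format above is the one accepted by Chrome at the time. In the current RTCPeerConnection configuration each server entry uses the urls key, and TURN credentials are given as separate username and credential fields. A sketch under those assumptions follows; the helper name buildIceConfig and the TURN host turn.example.org are hypothetical.

```javascript
// Build the configuration object passed to `new RTCPeerConnection(config)`.
// `turn` is optional; the host name and credentials used below are placeholders.
function buildIceConfig(stunUrl, turn) {
  const iceServers = [{ urls: stunUrl }];
  if (turn) {
    iceServers.push({
      urls: turn.url,
      username: turn.username,
      credential: turn.credential
    });
  }
  return { iceServers };
}

const config = buildIceConfig("stun:stun.stunprotocol.org:3478", {
  url: "turn:turn.example.org:3478", // hypothetical TURN server
  username: "user",
  credential: "password"
});
console.log(JSON.stringify(config, null, 2));
```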

There are many public STUN servers on the Internet. For example, there is a large list. Unfortunately, they solve too few problems. Unlike STUN, there are practically no public TURN servers. This is because a TURN server relays the media streams through itself, which can significantly load both the network link and the server. Therefore, the easiest way to get access to a TURN server is to install one yourself (obviously, a public IP is required). Of all the servers, rfc5766-turn-server is the best in my opinion. There is even a ready-made image of it for Amazon EC2.

TURN does not yet work as well as we would like, but it is under active development, and I hope that in time WebRTC will, if not match Skype in traversing address translation (NAT) and firewalls, at least come noticeably closer.

RTCPeerConnection requires an additional mechanism for exchanging control information to establish a connection - although it generates this data, it does not transmit it, and the transfer to other participants must be implemented separately.

The choice of the transfer method is left to the developer - it can even be done manually. As soon as the necessary data has been exchanged, the RTCPeerConnection will set up the media streams automatically (if possible, of course).

Offer-answer model

To establish and change media streams, the offer / answer model (described in RFC3264) and the Session Description Protocol (SDP) are used. They are also used by the SIP protocol. In this model, two agents are distinguished: Offerer - the one who generates the SDP description of the session to create a new or modify the existing one (Offer SDP), and Answerer - the one who receives the SDP description of the session from another agent and answers it with his own session description (Answer SDP). At the same time, the specification requires the presence of a higher-level protocol (for example, SIP or its own over web sockets, as in our case), which is responsible for transferring SDP between agents.

What data needs to be transferred between two RTCPeerConnections for them to establish media streams successfully:

- The first participant initiating the connection generates an Offer, in which it transmits an SDP data structure (the same protocol is used for the same purpose in SIP) describing the possible characteristics of the media stream that it is going to start transmitting. This data block must be transferred to the second participant. The second participant forms an Answer with his SDP and forwards it to the first one.

- Both the first and second participants perform the procedure for determining possible ICE candidates, with the help of which the second participant can transmit the media stream to them. As candidates are identified, information about them should be passed on to another participant.
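With the promise-based RTCPeerConnection methods of current browsers, the offer / answer part of this exchange can be sketched in a few lines. The helper name negotiate is an assumption made for the example, and both connections are assumed to live in the same page, so the "transfer" is a plain function call; in a real application each description would travel through the signaling channel.

```javascript
// Hypothetical helper: run one offer/answer round between two connections.
// `caller` and `callee` are expected to be RTCPeerConnection objects.
async function negotiate(caller, callee) {
  const offer = await caller.createOffer();
  await caller.setLocalDescription(offer);
  await callee.setRemoteDescription(offer);   // Offer SDP delivered to the second participant

  const answer = await callee.createAnswer();
  await callee.setLocalDescription(answer);
  await caller.setRemoteDescription(answer);  // Answer SDP delivered back to the first participant
  return { offer, answer };
}
```

The order matters: each side sets its own description as local before the other side receives it as remote, and the answer can only be created after the offer has been set on the answering side.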

Formation of Offer

We need two functions to form an Offer. The first parameter of the createOffer() method is a callback function that is called if the Offer is formed successfully. The second parameter is a callback function that is called if an error occurs during its execution (provided that the local stream is already available).

Additionally, you need two event handlers: onicecandidate when defining a new ICE candidate and onaddstream when connecting a media stream from the far side. Let's go back to our file. Add to HTML after lines with elements

And after the line with the element

Also, at the beginning of the JavaScript code, we declare a global variable for RTCPeerConnection:

var pc1;

When calling the RTCPeerConnection constructor, you must specify STUN / TURN servers. See the sidebar for more details; as long as all participants are on the same network, they are not required.

var servers = null;

Parameters for preparing Offer SDP:

var offerConstraints = {};

The first parameter of the createOffer() method is a callback function called when an Offer is successfully generated.

function pc1_createOffer_success(desc) {
  console.log("pc1_createOffer_success():\ndesc.sdp:\n" + desc.sdp + "desc:", desc);
  // Give the RTCPeerConnection the generated Offer SDP using the setLocalDescription method.
  pc1.setLocalDescription(desc);
  // When the far side sends its Answer SDP, it will need to be set using the setRemoteDescription method.
  // Do nothing until the second side is implemented
  // pc2_receivedOffer(desc);
}

The second parameter is a callback function that will be called in case of an error:

function pc1_createOffer_error(error) {
  console.log("pc1_createOffer_error(): error:", error);
}

And we will declare a callback function to which ICE candidates will be passed as they are found:

function pc1_onicecandidate(event) {
  if (event.candidate) {
    console.log("pc1_onicecandidate():\n" + event.candidate.candidate.replace("\r\n", ""), event.candidate);
    // Until the second side is implemented, do nothing
    // pc2.addIceCandidate(new RTCIceCandidate(event.candidate));
  }
}

And also a callback function for adding a media stream from the far side (for the future, since we have only one RTCPeerConnection so far):

function pc1_onaddstream(event) {
  console.log("pc1_onaddstream()");
  remoteVideo1.src = URL.createObjectURL(event.stream);
}

When the "createOffer" button is clicked, create an RTCPeerConnection, set the onicecandidate and onaddstream handlers and request the formation of the Offer SDP by calling the createOffer() method:

function createOffer_click() {
  console.log("createOffer_click()");
  pc1 = new webkitRTCPeerConnection(servers); // Create RTCPeerConnection
  pc1.onicecandidate = pc1_onicecandidate; // Callback function to process ICE candidates
  pc1.onaddstream = pc1_onaddstream; // Callback function called when a media stream appears from the far side. There is none yet
  pc1.addStream(localStream); // Pass in the local media stream (we assume that it has already been received)
  pc1.createOffer( // And actually request the formation of the Offer
    pc1_createOffer_success,
    pc1_createOffer_error,
    offerConstraints
  );
}

Save the file as rtctest2.html, upload it to the server, open it in a browser and see in the console what data is generated during its operation. The second video will not appear yet, as there is only one participant. Recall that SDP is a description of media session parameters, available codecs, media streams, and ICE candidates are possible options for connecting to this participant.

Formation of Answer SDP and exchange of ICE candidates

Both the Offer SDP and each of the ICE candidates must be passed to the other side. There, the RTCPeerConnection is given the Offer SDP with the setRemoteDescription method and each ICE candidate received from the far side with the addIceCandidate method; the same happens in the reverse direction for the Answer SDP and the remote ICE candidates. The Answer SDP itself is formed like the Offer; the difference is that the createAnswer method is called instead of createOffer, and before that the Offer SDP received from the caller is passed to the RTCPeerConnection with the setRemoteDescription method.
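The candidate half of this exchange can be sketched as a pair of small helpers. The names forwardIceCandidates and linkPeers are assumptions made for the example, and both connections are again assumed to be in the same page; over a network, the candidate would be sent through the signaling channel instead of being handed over directly.

```javascript
// Hypothetical helper: pass every ICE candidate found by `from` to `to`.
function forwardIceCandidates(from, to) {
  from.onicecandidate = (event) => {
    // event.candidate is null once candidate gathering has finished
    if (event.candidate) {
      Promise.resolve(to.addIceCandidate(event.candidate))
        .catch((error) => console.log("addIceCandidate failed:", error));
    }
  };
}

// Candidates must flow in both directions
function linkPeers(pc1, pc2) {
  forwardIceCandidates(pc1, pc2);
  forwardIceCandidates(pc2, pc1);
}
```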

Let's add another video element to HTML:

And a global variable for the second RTCPeerConnection under the first one:

var pc2;

Offer and Answer SDP Processing

Answer SDP generation is very similar to Offer. In the callback function called when the Answer is successfully formed, just as for the Offer, we set the local description and pass the resulting Answer SDP to the first participant:

function pc2_createAnswer_success(desc) {
  pc2.setLocalDescription(desc);
  console.log("pc2_createAnswer_success()", desc.sdp);
  pc1.setRemoteDescription(desc);
}

The callback function called if an error occurs while forming the Answer is exactly like the one for the Offer:

function pc2_createAnswer_error(error) {
  console.log("pc2_createAnswer_error():", error);
}

Parameters for generating Answer SDP:

var answerConstraints = {"mandatory": {"OfferToReceiveAudio": true, "OfferToReceiveVideo": true}};

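When an Offer is received by the second participant, create an RTCPeerConnection and form an Answer similarly to Offer:

function pc2_receivedOffer(desc) {
  console.log("pc2_receivedOffer()", desc);
  // Create an RTCPeerConnection object for the second participant, as for the first
  pc2 = new webkitRTCPeerConnection(servers);
  pc2.onicecandidate = pc2_onicecandidate; // Set the handler for ICE candidate events
  pc2.onaddstream = pc2_onaddstream; // When a stream appears, connect it to HTML
  // The source listing breaks off here; the lines below are reconstructed from the steps described in the text
  pc2.addStream(localStream); // Send the local media stream back (assumed, for two-way video)
  pc2.setRemoteDescription(desc); // Pass in the Offer SDP received from the caller
  pc2.createAnswer(pc2_createAnswer_success, pc2_createAnswer_error, answerConstraints);
}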

To pass the Offer SDP from the first participant to the second within our example, uncomment the following call in the pc1_createOffer_success() function:

pc2_receivedOffer(desc);

To implement the processing of ICE candidates, uncomment the following line in pc1_onicecandidate(), the first participant's ICE candidate handler, so that each candidate is passed to the second participant:

pc2.addIceCandidate(new RTCIceCandidate(event.candidate));

The second participant's ICE candidate handler mirrors the first:

function pc2_onicecandidate(event) {
  if (event.candidate) {
    console.log("pc2_onicecandidate():", event.candidate.candidate);
    pc1.addIceCandidate(new RTCIceCandidate(event.candidate));
  }
}

Callback function for adding a media stream from the first participant:

function pc2_onaddstream(event) {
  console.log("pc2_onaddstream()");
  remoteVideo2.src = URL.createObjectURL(event.stream);
}

Terminating the connection

Let's add another button to HTML

And a function to terminate the connection

function btnHangupClick() {
  // Disconnect local video from HTML elements
  ...
}

Let's save it as rtctest3.html, put it on the server and open it in a browser. This example implements bi-directional media streaming between two RTCPeerConnections within the same browser tab. To exchange the Offer and Answer SDP, the ICE candidates and other information between participants over the network, the direct function calls must be replaced with an exchange over some kind of transport; in our case, web sockets.

Screen broadcast

The getUserMedia function can also capture a screen and broadcast as a MediaStream by specifying the following parameters:

var mediaStreamConstraints = { audio: false, video: { mandatory: { chromeMediaSource: "screen" }, optional: [] } };

To successfully access the screen, several conditions must be met:

- enable the flag for screen capture in getUserMedia() at chrome://flags/;

- the source file must be uploaded over HTTPS (SSL origin);

- the audio stream should not be requested;

- multiple requests should not be made in one browser tab.
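In current browsers, screen capture is exposed through navigator.mediaDevices.getDisplayMedia rather than through a getUserMedia constraint. The sketch below shows one way such a request might be wrapped. The function name requestScreenStream is hypothetical, and the mediaDevices object is passed in as a parameter so that the function can be exercised with a stub.

```javascript
// Sketch: request a screen-capture MediaStream via getDisplayMedia.
// `mediaDevices` is expected to be navigator.mediaDevices in a browser.
function requestScreenStream(mediaDevices) {
  if (!mediaDevices || typeof mediaDevices.getDisplayMedia !== "function") {
    throw new Error("Screen capture is not supported in this environment");
  }
  // Video only, matching the "no audio stream" condition listed above.
  return mediaDevices.getDisplayMedia({ video: true, audio: false });
}
```

In a page served over HTTPS this would be called as requestScreenStream(navigator.mediaDevices), and the returned promise resolves to a MediaStream once the user picks a screen or window.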

Libraries for WebRTC

Although WebRTC is not finished yet, several libraries are already built on it. JsSIP is designed for creating browser-based softphones that work with SIP switches such as Asterisk and Kamailio. PeerJS simplifies the creation of P2P networks for exchanging data, and Holla reduces the amount of development required for P2P communication from browsers.

Node.js and socket.io

In order to organize the exchange of SDP and ICE candidates between two RTCPeerConnections over the network, we use Node.js with the socket.io module.

To install the latest stable version of Node.js on Debian / Ubuntu:

$ sudo apt-get install python-software-properties python g++ make
$ sudo add-apt-repository ppa:chris-lea/node.js
$ sudo apt-get update
$ sudo apt-get install nodejs

Installation instructions for other operating systems are available on the Node.js website.

Let's check:

$ echo 'console.log("Test message");' > nodetest1.js
$ nodejs nodetest1.js

Install socket.io and the express add-on using npm (Node Package Manager):

$ npm install socket.io express

Let's check by creating a nodetest2.js file for the server side:

$ nano nodetest2.js

var app = require("express")(),
    server = require("http").createServer(app),
    io = require("socket.io").listen(server);

server.listen(80); // If port 80 is free

app.get("/", function (req, res) { // When accessing the root page
  res.sendfile(__dirname + "/nodetest2.html"); // send the HTML file
});

io.sockets.on("connection", function (socket) { // When a client connects
  socket.emit("server event", { hello: "world" }); // send a message
  socket.on("client event", function (data) { // and declare a handler for messages from the client
    console.log(data);
  });
});

And nodetest2.html for the client side:

$ nano nodetest2.html

Let's start the server:

$ sudo nodejs nodetest2.js

and open the page http://localhost:80 (if running locally on port 80) in a browser. If everything is successful, the browser's JavaScript console will show the exchange of events between the browser and the server upon connection.

Exchange of information between RTCPeerConnection over websockets

Client part

Let's save our main example (rtctest3.html) under the new name rtctest4.html and include the socket.io library in the page:

And at the beginning of the JavaScript code, connect to the websocket server:

var socket = io.connect("http://localhost");

Let's replace the direct call of functions of another participant by sending him a message via websockets:

function createOffer_success(desc) {
  ...
  // pc2_receivedOffer(desc);
  socket.emit("offer", desc);
  ...
}

function pc2_createAnswer_success(desc) {
  ...
  // pc1.setRemoteDescription(desc);
  socket.emit("answer", desc);
}

function pc1_onicecandidate(event) {
  ...
  // pc2.addIceCandidate(new RTCIceCandidate(event.candidate));
  socket.emit("ice1", event.candidate);
  ...
}

function pc2_onicecandidate(event) {
  ...
  // pc1.addIceCandidate(new RTCIceCandidate(event.candidate));
  socket.emit("ice2", event.candidate);
  ...
}

In the hangup function, btnHangupClick(), instead of directly calling the functions of the second participant, we pass the message through web sockets:

function btnHangupClick() {
  ...
  // remoteVideo2.src = ""; pc2.close(); pc2 = null;
  socket.emit("hangup", {});
}

And add handlers for the incoming messages:

socket.on("offer", function (data) {
  console.log("socket.on('offer'):", data);
  pc2_receivedOffer(data);
});

socket.on("answer", function (data) {
  console.log("socket.on('answer'):", data);
  pc1.setRemoteDescription(new RTCSessionDescription(data));
});

socket.on("ice1", function (data) {
  console.log("socket.on('ice1'):", data);
  pc2.addIceCandidate(new RTCIceCandidate(data));
});

socket.on("ice2", function (data) {
  console.log("socket.on('ice2'):", data);
  pc1.addIceCandidate(new RTCIceCandidate(data));
});

socket.on("hangup", function (data) {
  console.log("socket.on('hangup'):", data);
  remoteVideo2.src = "";
  pc2.close();
  pc2 = null;
});

Server part

On the server side, save the nodetest2.js file under the new name rtctest4.js and add the receiving and sending of client messages inside the io.sockets.on("connection", function (socket) { ... }) callback:

socket.on("offer", function (data) {
  // Upon receipt of the "offer" message,
  // since there is only one client connection in this example,
  // send the message back through the same socket
  socket.emit("offer", data);
  // If it were necessary to send the message to all connections
  // except the sender:
  // socket.broadcast.emit("offer", data);
});

socket.on("answer", function (data) { socket.emit("answer", data); });
socket.on("ice1", function (data) { socket.emit("ice1", data); });
socket.on("ice2", function (data) { socket.emit("ice2", data); });
socket.on("hangup", function (data) { socket.emit("hangup", data); });

In addition, we will change the name of the HTML file:

// res.sendfile(__dirname + "/nodetest2.html"); // Send the HTML file
res.sendfile(__dirname + "/rtctest4.html");

Server start:

$ sudo nodejs rtctest4.js

Although the code of both clients runs within the same browser tab, all interaction between the participants in our example now goes over the network, so splitting the participants into separate pages no longer poses any particular difficulty. What we did was also very simple: these technologies are attractive for their ease of use, even if that ease is sometimes deceptive. In particular, let's not forget that without STUN / TURN servers our example cannot work across address translation and firewalls.

Conclusion

The resulting example is very basic. It could be generalized by making the event handlers identical for the caller and the callee, replacing the two objects pc1 and pc2 with an array of RTCPeerConnection objects, and creating and removing elements dynamically.

We can assume that very soon, thanks to WebRTC, there will be a revolution not only in our understanding of voice and video communications, but also in the way we perceive the Internet in general. WebRTC is positioned not only as a technology for calls from a browser to a browser, but also as a real-time communication technology. The video communication that we have analyzed is only a small part of the possible options for its use. There are already examples of screencasting and collaboration, and even a browser-based P2P content delivery network using RTCDataChannel.

The purpose of this article is to present the structure and operating principle of peer-to-peer video chat (p2p video chat) using a demo sample. For this purpose, we will use the webrtc.io-demo multiuser video chat demo. It can be downloaded from the link: https://github.com/webRTC/webrtc.io-demo/tree/master/site.

It should be noted that GitHub is a web service for hosting and collaboratively developing software projects. Developers can publish the code of their projects there, discuss it and communicate with each other, and some large IT companies host their official repositories on the site. The service is free for open source projects.

So, we will place the demo sample of peer-to-peer video chat downloaded from GitHub on the C drive of the personal computer in the created directory for our application "webrtc_demo".

Figure: 1

As the structure shows (Fig. 1), the peer-to-peer video chat consists of a client-side script, script.js, and a server-side script, server.js, both written in JavaScript. The webrtc.io.js (CLIENT) library organizes real-time communication between browsers on the peer-to-peer "client-client" scheme, while webrtc.io.js (CLIENT) and webrtc.io.js (SERVER) together use the WebSocket protocol to provide full-duplex communication between the browser and the web server on the client-server scheme.

The webrtc.io.js (SERVER) script is part of the webrtc.io library and is located in the node_modules\webrtc.io\lib directory. The video chat interface, index.html, is implemented in HTML5 and CSS3. The files of the webrtc_demo application can be viewed in any HTML editor, for example Notepad++.

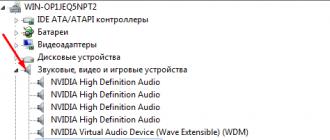

Let's check how the video chat works on a local PC. To run the server (server.js) on the PC, you need to install the node.js runtime, which allows JavaScript code to run outside the browser. You can download node.js from http://nodejs.org/ (version v0.10.13 as of 07/15/13). On the main page of the nodejs.org site, click the download button to go to http://nodejs.org/download/. Windows users should download the Windows installer (.msi), run it on the PC, and install nodejs and the npm package manager into the Program Files directory.

Figure: 2

Thus, node.js consists of a development and execution environment for JavaScript code, together with a set of built-in modules; additional modules can be installed with the npm package manager.

To install a module, run the following command on the command line from the application directory (for example, "webrtc_demo"): npm install module_name. During installation, npm creates a node_modules folder in the directory from which the command was run. At run time, nodejs automatically loads modules from the node_modules directory.

So, after installing node.js, open the command line and update the express module in the node_modules folder of the webrtc_demo directory using the npm package manager:

C:\webrtc_demo> npm install express

The express module is a web application framework for node.js. To make express available globally, you can install it like this: npm install -g express.

Then we update the webrtc.io module:

C:\webrtc_demo> npm install webrtc.io

Then, on the command line, start the server script server.js:

C:\webrtc_demo> node server.js

Figure: 3

That's it: the server is running (Figure 3). Now a web browser can contact the server by its IP address and load the index.html web page, from which the browser extracts and executes the client script script.js and the webrtc.io.js script. For the peer-to-peer video chat to work (that is, to establish a connection between two browsers), two browsers that support WebRTC must contact the signaling server running on node.js at its IP address.

As a result, the interface of the client part of the communication application (video chat) will open with a request for permission to access the camera and microphone (Fig. 4).

Figure: 4

After clicking the "Allow" button, the camera and microphone are connected for multimedia communication. In addition, textual data can be communicated through the video chat interface (Fig. 5).

Figure: 5

It should be noted that the server is a signaling server, intended mainly to establish a connection between users' browsers. Node.js is used to run the server.js script that provides the WebRTC signaling.

WebRTC is an API provided by a browser that allows you to organize a P2P connection and transfer data directly between browsers. There are quite a few tutorials on the Internet for writing your own video chat using WebRTC. For example, here is an article on Habré. However, they are all limited to connecting two clients. In this article I will try to talk about how to organize connection and exchange of messages between three or more users using WebRTC.

The RTCPeerConnection interface is a peer-to-peer connection between two browsers. To connect three or more users, we will have to organize a mesh network (a network in which each node is connected to all other nodes).
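To get a feel for how a mesh grows, the number of connections can be worked out directly: every pair of participants shares one RTCPeerConnection, so n participants need n(n - 1)/2 connections in total, and each browser maintains n - 1 of them. The helper names below are illustrative only.

```javascript
// Sketch: size of a full mesh. These helpers are not part of any WebRTC API.
function meshConnectionCount(participants) {
  // Every pair of participants shares exactly one connection.
  return (participants * (participants - 1)) / 2;
}

// List every pair of peer ids that must be connected.
function meshPairs(ids) {
  const pairs = [];
  for (let i = 0; i < ids.length; i++) {
    for (let j = i + 1; j < ids.length; j++) {
      pairs.push([ids[i], ids[j]]);
    }
  }
  return pairs;
}
```

The quadratic growth is the reason a mesh suits small rooms: five participants already need ten connections.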

We will use the following scheme:

- When opening the page, check for the presence of a room ID in location.hash

- If the room ID is not specified, we generate a new one

- We send the signaling server a message that we want to join the specified room

- The signaling server sends out a new user notification to the rest of the clients in this room

- Clients already in the room send an SDP offer to the newcomer

- The newcomer responds to each offer with an SDP answer
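The first two steps of this scheme can be sketched as one small function. The name resolveRoomId and the generateId parameter are hypothetical; in a browser the hash argument would be location.hash.

```javascript
// Sketch: take the room ID from the URL fragment, or generate a new one.
// `generateId` is any function returning a fresh identifier (an assumption of this sketch).
function resolveRoomId(hash, generateId) {
  const fromHash = (hash || "").replace(/^#/, ""); // drop the leading "#"
  return fromHash || generateId();
}
```

Sharing the page URL with the fragment attached then brings other users into the same room.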

0. Signaling server

As you know, although WebRTC provides a P2P connection between browsers, it still requires an additional transport to exchange service messages. In this example, such a transport is a WebSocket server written in Node.JS using socket.io:

var socket_io = require("socket.io");

module.exports = function (server) {
  var users = {};
  var io = socket_io(server);

  io.on("connection", function (socket) {
    // A new user wants to join a room
    socket.on("room", function (message) {
      var json = JSON.parse(message);
      // Add the socket to the list of users
      users[json.id] = socket;
      if (socket.room !== undefined) {
        // If the socket is already in some room, leave it
        socket.leave(socket.room);
      }
      // Enter the requested room
      socket.room = json.room;
      socket.join(socket.room);
      socket.user_id = json.id;
      // Tell the other clients in this room that a new participant has joined
      socket.broadcast.to(socket.room).emit("new", json.id);
    });

    // Message related to WebRTC (SDP offer, SDP answer or ICE candidate)
    socket.on("webrtc", function (message) {
      var json = JSON.parse(message);
      if (json.to !== undefined && users[json.to] !== undefined) {
        // If the message names a recipient known to the server, send it only to that recipient...
        users[json.to].emit("webrtc", message);
      } else {
        // ...otherwise treat the message as a broadcast
        socket.broadcast.to(socket.room).emit("webrtc", message);
      }
    });

    // Someone disconnected
    socket.on("disconnect", function () {
      // When a client disconnects, notify the others
      socket.broadcast.to(socket.room).emit("leave", socket.user_id);
      delete users[socket.user_id];
    });
  });
};
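The routing rule in the "webrtc" handler can be looked at in isolation. The sketch below models only the decision of who receives a message, with no sockets involved. The function name routeSignal and the shape of the users map (user id to room name) are assumptions made for this illustration.

```javascript
// Sketch of the routing rule only.
// `users` maps user id -> room name (a simplified, hypothetical shape).
function routeSignal(users, senderId, message) {
  if (message.to !== undefined && users[message.to] !== undefined) {
    return [message.to]; // addressed message: exactly one recipient
  }
  // Otherwise broadcast to everyone else in the sender's room.
  const room = users[senderId];
  return Object.keys(users).filter(
    (id) => id !== senderId && users[id] === room
  );
}
```

A message addressed to an unknown recipient falls through to the broadcast branch, which mirrors the else branch of the server code.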

1. index.html

The source code of the page itself is quite simple. I deliberately paid no attention to layout and other niceties, since this article is not about that. Anyone who wants to make it look good will have no trouble doing so.

2. main.js

2.0. Getting links to page elements and WebRTC interfaces

var chatlog = document.getElementById("chatlog");
var message = document.getElementById("message");
var connection_num = document.getElementById("connection_num");
var room_link = document.getElementById("room_link");

We still have to use browser prefixes to refer to WebRTC interfaces.

var PeerConnection = window.mozRTCPeerConnection || window.webkitRTCPeerConnection;
var SessionDescription = window.mozRTCSessionDescription || window.RTCSessionDescription;
var IceCandidate = window.mozRTCIceCandidate || window.RTCIceCandidate;

2.1. Finding the room ID

Here we need a function to generate a unique room and user ID. We will use UUID for these purposes.

function uuid() {
  var s4 = function () {
    return Math.floor(Math.random() * 0x10000).toString(16);
  };
  return s4() + s4() + "-" + s4() + "-" + s4() + "-" + s4() + "-" + s4() + s4() + s4();
}

Now let's try to read the room ID from the address. If none is specified, we generate a new one. We display a link to the current room on the page and, at the same time, generate the ID of the current user.

var ROOM = location.hash.substr(1);
if (!ROOM) {
  ROOM = uuid();
}
room_link.innerHTML = "Link to the room";
var ME = uuid();

2.2. WebSocket

Immediately upon opening the page, we will connect to our signaling server, send a request to enter the room and specify the message handlers.

// Tell socket.io to notify the server when the page is closed
var socket = io.connect("", { "sync disconnect on unload": true });
socket.on("webrtc", socketReceived);
socket.on("new", socketNewPeer);
// Immediately send a request to enter the room
socket.emit("room", JSON.stringify({ id: ME, room: ROOM }));

// Helper function for sending addressed WebRTC-related messages
function sendViaSocket(type, message, to) {
  socket.emit("webrtc", JSON.stringify({ id: ME, to: to, type: type, data: message }));
}

2.3. PeerConnection Settings

Most ISPs provide Internet connectivity through NAT, which makes a direct connection less trivial. When creating a connection, we need to specify a list of STUN and TURN servers that the browser will try to use to traverse NAT. We will also set a couple of additional connection options.

var server = {
  iceServers: [
    { url: "stun:23.21.150.121" },
    { url: "stun:stun.l.google.com:19302" },
    { url: "turn:numb.viagenie.ca", credential: "your password goes here", username: "[email protected]" }
  ]
};
var options = {
  optional: [
    { DtlsSrtpKeyAgreement: true }, // required for connecting between Chrome and Firefox
    { RtpDataChannels: true } // required for Firefox to use the DataChannels API
  ]
};
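The url key used above comes from early WebRTC implementations; the standardized RTCIceServer dictionary uses urls instead. As a hedged sketch of how the same configuration might be assembled today, the helper below builds an RTCConfiguration object. The name buildRtcConfiguration is hypothetical, and turn.example.org with its credentials is a placeholder rather than a real service.

```javascript
// Sketch: build an RTCConfiguration with the standardized `urls` key.
function buildRtcConfiguration(stunUrls, turnServer) {
  const iceServers = stunUrls.map((urls) => ({ urls }));
  if (turnServer) {
    // TURN servers additionally need credentials.
    iceServers.push({
      urls: turnServer.urls,
      username: turnServer.username,
      credential: turnServer.credential,
    });
  }
  return { iceServers };
}

// Placeholder values only; substitute the servers you actually operate.
const configuration = buildRtcConfiguration(
  ["stun:stun.l.google.com:19302"],
  { urls: "turn:turn.example.org", username: "user", credential: "secret" }
);
// In a browser: new RTCPeerConnection(configuration)
```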

2.4. Connecting a new user

When a new peer is added to the room, the server sends us a "new" message. According to the message handlers registered above, the socketNewPeer function is then called.

var peers = {};

function socketNewPeer(data) {
  peers[data] = { candidateCache: [] };
  // Create a new connection
  var pc = new PeerConnection(server, options);
  // Initialize it
  initConnection(pc, data, "offer");
  // Save the peer in the list of peers
  peers[data].connection = pc;
  // Create a DataChannel over which messages will be exchanged
  var channel = pc.createDataChannel("mychannel", {});
  channel.owner = data;
  peers[data].channel = channel;
  // Install the channel event handlers
  bindEvents(channel);
  // Create the SDP offer
  pc.createOffer(function (offer) {
    pc.setLocalDescription(offer);
  });
}

function initConnection(pc, id, sdpType) {
  pc.onicecandidate = function (event) {
    if (event.candidate) {
      // When a new ICE candidate is found, add it to the list for sending later
      peers[id].candidateCache.push(event.candidate);
    } else {
      // When candidate gathering is complete, the handler is called once more, without a candidate.
      // In that case we first send the peer the SDP offer or SDP answer (depending on the function parameter)...
      sendViaSocket(sdpType, pc.localDescription, id);
      // ...and then all previously found ICE candidates
      for (var i = 0; i < peers[id].candidateCache.length; i++) {
        sendViaSocket("candidate", peers[id].candidateCache[i], id);
      }
    }
  };
  pc.oniceconnectionstatechange = function (event) {
    if (pc.iceConnectionState == "disconnected") {
      connection_num.innerText = parseInt(connection_num.innerText) - 1;
      delete peers[id];
    }
  };
}

function bindEvents(channel) {
  channel.onopen = function () {
    connection_num.innerText = parseInt(connection_num.innerText) + 1;
  };
  channel.onmessage = function (e) {
    chatlog.innerHTML += "
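The candidate caching done in initConnection can be isolated into a small, testable sketch. The name createCandidateBatcher is hypothetical, and the send parameter stands in for sendViaSocket; nothing here touches a real RTCPeerConnection.

```javascript
// Sketch: buffer ICE candidates, then flush them after the local description.
// `send(type, payload)` is a hypothetical stand-in for sendViaSocket.
function createCandidateBatcher(sdpType, getLocalDescription, send) {
  const cache = [];
  return function onIceCandidate(event) {
    if (event.candidate) {
      cache.push(event.candidate); // still gathering: just remember it
      return;
    }
    // A null candidate marks the end of gathering.
    send(sdpType, getLocalDescription()); // the description goes first...
    cache.forEach((candidate) => send("candidate", candidate)); // ...then the candidates
    cache.length = 0;
  };
}
```

Sending the description before the candidates means the remote side can call setRemoteDescription before addIceCandidate, at the cost of waiting until gathering has finished.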

2.5. SDP offer, SDP answer, ICE candidate

When one of these messages is received, we call the corresponding message handler.

function socketReceived(data) {
  var json = JSON.parse(data);
  switch (json.type) {
    case "candidate":
      remoteCandidateReceived(json.id, json.data);
      break;
    case "offer":
      remoteOfferReceived(json.id, json.data);
      break;
    case "answer":
      remoteAnswerReceived(json.id, json.data);
      break;
  }
}

2.5.0 SDP offer

function remoteOfferReceived(id, data) {
  createConnection(id);
  var pc = peers[id].connection;
  pc.setRemoteDescription(new SessionDescription(data));
  pc.createAnswer(function (answer) {
    pc.setLocalDescription(answer);
  });
}

function createConnection(id) {
  if (peers[id] === undefined) {
    peers[id] = { candidateCache: [] };
    var pc = new PeerConnection(server, options);
    initConnection(pc, id, "answer");
    peers[id].connection = pc;
    pc.ondatachannel = function (e) {
      peers[id].channel = e.channel;
      peers[id].channel.owner = id;
      bindEvents(peers[id].channel);
    };
  }
}

2.5.1 SDP answer

function remoteAnswerReceived(id, data) {
  var pc = peers[id].connection;
  pc.setRemoteDescription(new SessionDescription(data));
}

2.5.2 ICE candidate

function remoteCandidateReceived(id, data) {
  createConnection(id);
  var pc = peers[id].connection;
  pc.addIceCandidate(new IceCandidate(data));
}

2.6. Sending a message

Pressing the Send button calls the sendMessage function. All it does is go through the list of peers and try to send the given message to each of them.
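A minimal sketch of such a loop is shown below. It is an illustration rather than the article's own sendMessage: the name broadcastMessage is hypothetical, and peers is assumed to map each peer id to an object holding a channel, as in the peers object used above.

```javascript
// Sketch: send a chat message over every open data channel.
// Returns how many peers the message was handed to.
function broadcastMessage(peers, text) {
  let delivered = 0;
  for (const id of Object.keys(peers)) {
    const channel = peers[id].channel;
    // Skip peers whose channel is missing or not yet open.
    if (channel && channel.readyState === "open") {
      channel.send(text);
      delivered++;
    }
  }
  return delivered;
}
```

Checking readyState before calling send avoids the exception an RTCDataChannel throws when it is not open.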