4.2.3. Organization of disk arrays (RAID)

Another way to improve the performance of disk storage is to build disk arrays, although their purpose is not only (and not so much) higher performance as greater reliability of disk storage.

RAID (Redundant Array of Independent Disks) technology was conceived as a way of combining multiple low-cost hard drives into a single array that offers higher performance, capacity, and reliability than a single drive. The computer must see such an array as one logical disk.

If we simply combine multiple disks into a (non-redundant) array, its mean time between failures (MTBF) equals the MTBF of one disk divided by the number of disks. This figure is too low for applications that are sensitive to hardware failures. It can be improved by storing information redundantly, which can be done in various ways.
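The relationship above can be sketched in a few lines of Python. The function name `array_mtbf` and the 500,000-hour disk figure are illustrative assumptions, and the model assumes identical disks that fail independently.

```python
def array_mtbf(disk_mtbf_hours: float, num_disks: int) -> float:
    """MTBF of a non-redundant array: the failure of any one disk breaks the array."""
    if num_disks < 1:
        raise ValueError("an array needs at least one disk")
    return disk_mtbf_hours / num_disks


# Hypothetical disk MTBF of 500,000 hours, chosen only for illustration.
for n in (1, 2, 4, 8):
    print(n, "disks ->", array_mtbf(500_000, n), "hours")
```

Under these assumptions, doubling the number of disks halves the expected time to the first failure, which is why redundancy becomes necessary as arrays grow.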

To improve reliability and performance, RAID systems combine three main mechanisms, each of which is well known on its own:
- "mirrored" disks, i.e. complete duplication of the stored information;
- check codes (parity, Hamming codes) that allow information to be restored after a failure;
- distribution of information across the disks of the array, in the same way that accesses are interleaved across memory blocks (see interleaving), which increases the opportunity for the disks to work in parallel.

In RAID descriptions this last technique is called striping, and the disks are said to be "striped", that is, divided into stripes.

Fig. 43. Partitioning disks into alternating blocks ("stripes").

Initially, five types of disk arrays were defined, designated RAID 1 through RAID 5, which differ in features and performance. Each of them uses some redundancy in the recorded information to provide better fault tolerance than a single drive. In addition, a disk array that has no redundancy but offers higher performance (through interleaved accesses) is often called RAID 0.

The main types of RAID arrays can be briefly described as follows.

RAID 0. This type of array is usually defined as a group of striped disks with no parity and no data redundancy. Stripes (or blocks) can be large in a multi-user environment, or small in a single-user system that accesses long records sequentially.

The organization of RAID 0 is exactly the same as shown in Fig. 43. Write and read operations can be performed simultaneously on each drive. The minimum number of drives for RAID 0 is two.

This type is characterized by high performance and the most efficient use of disk space; however, the failure of any one disk makes the entire array unusable.

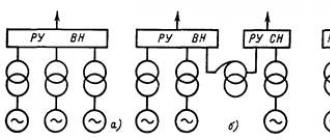

RAID 1. This type of disk array (Fig. 44, a) is also known as mirrored disks and is simply a pair of drives that duplicate the stored data but appear to the computer as one disk. Although there is no striping within a single mirrored pair, striping can be done across multiple RAID 1 arrays that together form one large array of mirrored pairs. This organization is called RAID 1 + 0. The opposite arrangement, in which striped groups are mirrored, also exists.

All write operations are performed simultaneously to both disks of the mirrored pair, so that the information in them is identical. But when reading, each of the disks in a pair can operate independently, which allows two reads to be performed simultaneously, thereby doubling the read performance. In this sense, RAID 1 provides the best performance of any disk array option.

RAID 2. In these disk arrays, blocks (data sectors) are interleaved across a group of disks, some of which store only control information: ECC (error-correcting codes). But since all modern drives have built-in ECC, RAID 2 offers little over other types of RAID and is now rarely used.

RAID 3. As in RAID 2, in this type of disk array (Fig. 44, b) blocks (sectors) are interleaved across the disk group, but one of the disks in the group is reserved for parity information. If a drive fails, its data is recovered by XORing the data on the remaining disks. A write usually involves all disks (since the strips are short), which increases the overall data transfer rate. Since each I/O operation requires access to every disk, a RAID 3 array can service only one request at a time. This type therefore gives the best performance for a single user in a single-tasking environment with long records. With short records, the drive spindles must be synchronized to avoid performance degradation. In its characteristics RAID 3 is close to RAID 5 (see below).
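The XOR-based recovery described above can be illustrated with a minimal Python sketch. The helper name `xor_blocks` and the four-byte sample strips are assumptions made for the example; a real controller works on sectors or bytes streamed from the drives.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    if not blocks:
        raise ValueError("need at least one block")
    size = len(blocks[0])
    if any(len(b) != size for b in blocks):
        raise ValueError("all blocks must have the same length")
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


# Three data disks hold one strip each; a fourth disk holds their parity.
data = [b"RAID", b"disk", b"demo"]
parity = xor_blocks(data)

# Simulate the loss of disk 1 and rebuild its strip from the survivors plus parity.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt == data[1])
```

Because XOR is its own inverse, the same function serves both to compute the parity and to reconstruct any single missing strip.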

RAID 4. This organization, shown in Fig. 44, c, is similar to RAID 3, the only difference being that it uses large blocks (stripes), so that records can be read from any disk in the array (except the disk that stores parity codes). This allows read operations on different disks to be combined (performed concurrently). Write operations always update the parity disk, so they cannot be combined. In general, this architecture has no particular advantage over other RAID options.

RAID 5. This type of disk array is similar to RAID 4, but the parity codes are stored not on a dedicated disk but in blocks that alternate across all disks. This organization is sometimes called an array with "rotating parity" (one can note a certain analogy with the assignment of interrupt lines to PCI bus slots, or with the rotating priority of the interrupt controller in x86 processors). This distribution removes the limit on concurrent writes that RAID 4 suffers from because its parity codes are kept on a single disk. Fig. 44, d shows an array of four drives with one parity block for every three data blocks (the parity blocks are shaded); the position of the parity block changes from one group of three data blocks to the next, moving cyclically through all four drives.

Read operations can be performed in parallel on all disks. Writes that require two drives (for data and for parity) can usually also be combined, since parity codes are distributed across all drives.
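The rotating parity placement described above can be sketched as follows. The direction of rotation (parity starting on the last drive and moving toward the first) and the function names are assumptions; controllers differ in the exact order, and the figure referenced in the text is not reproduced here.

```python
def parity_disk(stripe: int, num_disks: int) -> int:
    """Disk that holds the parity block of a stripe (rotation direction is assumed)."""
    return (num_disks - 1 - stripe) % num_disks


def stripe_layout(stripe: int, num_disks: int) -> list[str]:
    """Label each disk's block in a stripe: 'P' for parity, 'D<n>' for data."""
    p = parity_disk(stripe, num_disks)
    next_data = stripe * (num_disks - 1)  # number of the first data block in this stripe
    layout = []
    for disk in range(num_disks):
        if disk == p:
            layout.append("P")
        else:
            layout.append(f"D{next_data}")
            next_data += 1
    return layout


# Four drives: one parity block for every three data blocks.
for s in range(4):
    print(s, stripe_layout(s, 4))
```

Over any four consecutive stripes each drive holds the parity block exactly once, so parity updates are spread evenly instead of all landing on one disk.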

Comparison of various options for organizing disk arrays shows the following.

RAID 0 is the fastest and most space-efficient option, but provides no fault tolerance. It requires a minimum of two drives. Write and read operations can be performed simultaneously on each drive.

The RAID 1 architecture is best suited to high-performance, high-availability applications, but it is also the most expensive. It is also the only fault-tolerant option when only two drives are used. Reads can be performed simultaneously on each drive, and writes are always duplicated across the mirrored pair.

RAID 2 architecture is rarely used.

A RAID 3 disk array can be used to speed up data transfers and improve fault tolerance in a single-user environment with sequential access to long records. But it does not allow operations to be combined and requires the drive spindles to be synchronized. It requires at least three drives: two for data and one for parity codes.

The RAID 4 architecture does not support concurrent write operations and offers no advantages over RAID 5.

RAID 5 is characterized by efficiency, fault tolerance, and good performance. But its write performance, and its performance after a drive failure, are worse than those of RAID 1. In particular, since the parity block covers the entire stripe, when only part of the stripe is written the previously written data must first be read, then the new parity values calculated, and only then the new data (and parity) written. Rebuild operations also take longer because parity codes have to be generated. This type of RAID requires a minimum of three drives.
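The partial-write sequence described above (read the old contents, compute the new parity, then write) can be sketched in Python. The helper names and the two-byte sample blocks are assumptions made for illustration; the XOR identity itself is standard: new parity = old parity XOR old data XOR new data.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equally sized blocks."""
    if len(a) != len(b):
        raise ValueError("blocks must have the same length")
    return bytes(x ^ y for x, y in zip(a, b))


def parity_after_small_write(old_data: bytes, old_parity: bytes, new_data: bytes) -> bytes:
    """New parity after overwriting one data block of a stripe.

    The caller has to read the old data and old parity first (two reads),
    then write the new data and the new parity (two writes).
    """
    return xor_bytes(xor_bytes(old_parity, old_data), new_data)


# A stripe with three data blocks and one parity block.
d0, d1, d2 = b"\x0f\x0f", b"\xf0\x01", b"\x33\x10"
parity = xor_bytes(xor_bytes(d0, d1), d2)

# Overwrite only d1 without touching d0 or d2.
new_d1 = b"\xaa\x55"
new_parity = parity_after_small_write(d1, parity, new_d1)
print(new_parity == xor_bytes(xor_bytes(d0, new_d1), d2))
```

The result matches a parity recomputed from scratch over the whole stripe, but at the cost of the extra reads, which is the source of the write penalty mentioned in the text.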

In addition, the most common RAID options (0, 1 and 5) can serve as the basis for so-called two-tier architectures, which combine the principles of several array types. For example, several RAID arrays of the same type can be combined into one group that acts as a data array or a parity array.

This two-tier organization can achieve the desired balance between the higher storage reliability of RAID 1 and RAID 5 and the fast reads that come from striping blocks across disks in RAID 0. These two-tier designs are sometimes referred to as RAID 0 + 1 or 10, and 0 + 5 or 50.

RAID arrays can be managed not only in hardware but also in software, an option provided in some server versions of operating systems. However, such an implementation can be expected to perform worse, since the array processing then falls on the host CPU.

RAID (redundant array of independent disks) is an array of several disks managed by a controller, interconnected by high-speed channels, and seen by the external system as a single unit. Depending on the type of array, it provides different degrees of fault tolerance and performance, and serves to improve the reliability of data storage and/or the speed of reading and writing. Initially, such arrays were built as a backup for storage based on random access memory (RAM), which was expensive at the time. Over time the acronym acquired a second meaning (the original expansion used "inexpensive" rather than "independent"): the array was now made up of independent disks, which implied using several separate disks rather than partitions of one disk, and also reflected the high cost of the equipment needed to build such an array (today, relatively speaking, just a few disks).

Let's look at the kinds of RAID arrays. First we consider the levels introduced by researchers at Berkeley, then their combinations and non-standard modes. Note that if disks of different sizes are used (which is not recommended), each is used only up to the capacity of the smallest one; the extra capacity of the larger disks is simply unavailable.

RAID 0. Striped disk array without fault tolerance / parity (Stripe)

This is an array in which data is divided into blocks (the block size can be specified when the array is created) that are then written to separate disks. In the simplest case there are two disks: one block is written to the first disk, the next to the second, then again to the first, and so on. This mode is also called "striping" because the disks being written to alternate as blocks of data are written. Blocks are read in the same alternating fashion. I/O operations are thus performed in parallel, which improves performance: where previously one block could be read per unit of time, blocks can now be read from several disks at once. The main advantage of this mode is its high data transfer rate.
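The alternating placement described above amounts to a simple mapping from a logical block number to a disk and a position on that disk. The following Python sketch shows it; the function name `locate_block` is an assumption, and the block size is left out because it does not affect the mapping.

```python
def locate_block(logical_block: int, num_disks: int) -> tuple[int, int]:
    """Map a logical block number to (disk index, block offset on that disk)."""
    if num_disks < 2:
        raise ValueError("RAID 0 needs at least two disks")
    if logical_block < 0:
        raise ValueError("block numbers start at zero")
    return logical_block % num_disks, logical_block // num_disks


# Two disks: blocks go to disk 0, disk 1, disk 0, disk 1, ...
for block in range(6):
    disk, offset = locate_block(block, 2)
    print(f"logical block {block} -> disk {disk}, offset {offset}")
```

Consecutive logical blocks always land on different disks, which is what allows a long sequential transfer to keep all disks busy at once.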

However, miracles do not happen, and if they do, they are rare. Performance grows not by a factor of N (N being the number of disks) but by less. First of all, striping does not shorten disk access time, which is already high relative to other computer subsystems, and a request that spans N disks has to wait for the slowest of them. The quality of the controller is equally important: with a poor one, the speed may hardly differ from that of a single disk. The interface that connects the RAID controller to the rest of the system also has a significant impact. All this can lead not only to a less-than-N-fold increase in linear read speed, but also to a limit on the number of disks beyond which adding more gives no gain at all, or even slightly reduces the speed. In real workloads with a large number of requests, the chance of running into this limit is minimal, because the speed is bounded mainly by the hard disks themselves.

As you can see, this mode has no redundancy at all: all disk space is used. However, if one of the disks fails, all information is lost.

RAID 1. Mirroring

The essence of this RAID mode is to keep a copy (mirror) of a disk in order to increase fault tolerance. If one disk fails, work does not stop but continues on the remaining disk. This mode requires an even number of disks. The idea is close to that of a backup, but everything happens "on the fly", including recovery from a failure (which is sometimes very important), so no time has to be spent on it.

The downside is high redundancy, since twice as many disks are needed to build such an array. Another disadvantage is that there is no performance gain on writes: a copy of the first disk is simply written to the second.

RAID 2. Array using the error-resistant Hamming code.

This code can correct single errors and detect double errors. It is used extensively in error-correcting (ECC) memory. In this mode the disks are divided into two groups: one stores data and works like RAID 0, splitting data blocks across different disks; the other stores the ECC codes.
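As an illustration of how a Hamming code locates a damaged bit, here is a minimal Python sketch of the classic Hamming(7,4) code, which protects four data bits with three check bits and corrects any single flipped bit. The choice of this particular code size and the function names are assumptions for the example; the text does not say which code size a RAID 2 array uses, and detecting double errors would need an extra overall parity bit that is not shown.

```python
def hamming74_encode(data_bits: list[int]) -> list[int]:
    """Encode 4 data bits into a 7-bit codeword (positions 1..7, check bits at 1, 2, 4)."""
    if len(data_bits) != 4 or any(b not in (0, 1) for b in data_bits):
        raise ValueError("expected four bits")
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_correct(codeword: list[int]) -> tuple[list[int], int]:
    """Return (data bits after correction, 1-based position of the flipped bit or 0)."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s4  # equals the position of a single flipped bit
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], syndrome


word = [1, 0, 1, 1]
codeword = hamming74_encode(word)
damaged = list(codeword)
damaged[4] ^= 1  # flip the bit at position 5, as if one disk returned a wrong bit
recovered, position = hamming74_correct(damaged)
print(recovered == word, position)
```

If each of the seven bit positions is imagined as living on its own disk, four disks carry data and three carry check bits, which shows why the redundancy of this scheme is high when the number of disks is small.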

Its advantages are on-the-fly error correction and a high streaming data rate.

The main disadvantage is high redundancy (with a small number of disks it is almost twofold, n-1). As the number of disks grows, the share of disks used for ECC becomes smaller (the relative redundancy decreases). The second disadvantage is low speed when working with small files. Because it is cumbersome and highly redundant with a small number of disks, this RAID level is no longer used, having given way to higher levels.

RAID 3. Fault-tolerant array with bit interleaving and parity.

This mode writes data in blocks to different disks, like RAID 0, but uses one more disk to store parity. The redundancy is thus much lower than in RAID 2, amounting to a single disk. If a single disk fails, the speed remains practically unchanged.

The main disadvantage is low speed when working with small files and many requests. All check codes are stored on one disk and must be rewritten on every write, so the speed of this disk limits the speed of the entire array. Parity bits are written only when data is written and are checked when data is read, which makes read and write speeds unequal. Isolated reads of small files are also slow, because the disks cannot serve independent requests in parallel.

RAID 4

Data is written in blocks to different disks, and one disk is used to store parity bits. The difference from RAID 3 is that data is divided not into bits or bytes but into sectors. The advantages are high transfer rates when working with large files and high speed when serving a large number of read requests. The shortcomings are those inherited from RAID 3: an imbalance between read and write speeds and conditions that impede parallel access to data.

RAID 5. Disk array with striping and distributed parity.

The method is similar to the previous one, but instead of allocating a separate disk for parity bits it distributes this information among all disks. That is, if N disks are used, the capacity of N-1 disks is available. The capacity of one disk is given over to parity bits, as in RAID 3 and 4, but the parity is spread across the disks rather than stored on a separate one: each disk holds (N-1)/N data and 1/N parity. If one disk in the array fails, the array remains functional (the data that was stored on the failed disk is computed "on the fly" from the parity and the data on the other disks). The failure is thus transparent to the user, sometimes with only a minimal drop in performance (depending on the computing power of the RAID controller). The advantages are high read and write speeds, both for large volumes of data and for large numbers of requests. The disadvantages are complex data recovery and lower read speed than RAID 4.

RAID 6. Disk array with striping and double distributed parity.

The only difference is that two parity schemes are used, so the array survives the failure of two disks. The main difficulty is that every write requires more operations, which makes the write speed very low.

Combined (nested) RAID levels.

Since RAID arrays are transparent to the OS, it was not long before arrays appeared whose elements are not disks but arrays of other levels. They are usually written with a plus sign: the first number is the level of the arrays used as elements, and the second is the organization of the top level that unites the elements.

RAID 0 + 1

A combination in which a RAID 1 array is built on top of RAID 0 arrays. As with RAID 1, only half of the disk capacity is available, but, as with RAID 0, the speed is higher than that of a single drive. This solution requires at least 4 disks.

RAID 1 + 0

Also known as RAID 10. It is a stripe of mirrors, that is, a RAID 0 array built from RAID 1 arrays. It is almost the same as the previous solution.
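One place where the two nested arrangements differ is in which combinations of failed disks they survive. The following Python sketch enumerates them for four disks; the grouping of disks into pairs and all function names are assumptions made for the example.

```python
from itertools import combinations

DISKS = (0, 1, 2, 3)
GROUPS = ((0, 1), (2, 3))  # two inner arrays of two disks each (assumed grouping)


def raid10_survives(failed: set[int]) -> bool:
    """Stripe over mirrors: every mirror must keep at least one working disk."""
    return all(any(d not in failed for d in mirror) for mirror in GROUPS)


def raid01_survives(failed: set[int]) -> bool:
    """Mirror over stripes: at least one stripe must have all of its disks working."""
    return any(all(d not in failed for d in stripe) for stripe in GROUPS)


def count_survivable(survives, num_failures: int) -> int:
    """Count the failure combinations of a given size that the array survives."""
    return sum(survives(set(combo)) for combo in combinations(DISKS, num_failures))


pairs = len(list(combinations(DISKS, 2)))
print("RAID 1 + 0 survives", count_survivable(raid10_survives, 2), "of", pairs, "two-disk failures")
print("RAID 0 + 1 survives", count_survivable(raid01_survives, 2), "of", pairs, "two-disk failures")
```

Both arrangements survive any single failure; under this model they differ only in how many two-disk failures they tolerate, which is worth checking when choosing between them.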

RAID 0 + 3

An array with dedicated parity on top of striping. It is a level 3 array in which data is divided into blocks and written to RAID 0 arrays. Combinations other than the simplest 0 + 1 and 1 + 0 require specialized controllers, which are often quite expensive. The reliability of this type is lower than that of the next option.

RAID 3 + 0

Also known as RAID 30. It is a stripe (RAID 0 array) of RAID 3 arrays. It has a very high data transfer rate coupled with good fault tolerance. The data is first divided into blocks (as in RAID 0) and distributed among the element arrays. There the blocks are divided again, their parity is computed, and the pieces are written to all disks except one, which receives the parity bits. One disk in each of the RAID 3 arrays may fail without loss of data.

RAID 5 + 0 (50)

Created by combining RAID 5 arrays into a RAID 0 array. It provides high data transfer and request processing speeds, an average data recovery rate, and good fault tolerance. The RAID 0 + 5 combination also exists, but mostly in theory, since it offers too few benefits.

RAID 5 + 1 (51)

Combination of mirroring and striping with distributed parity. RAID 15 (1 + 5) is another option. Both offer very high resiliency: a 1 + 5 array can survive three drive failures, and 5 + 1 can survive the loss of five drives out of eight.

RAID 6 + 0 (60)

Striping with double distributed parity; in other words, a stripe of RAID 6 arrays. As already mentioned for RAID 0 + 5, the reverse arrangement, RAID 6 built from stripes (0 + 6), is not widespread. Striping across parity arrays in this way can increase the speed of the array. Another advantage is that capacity can be increased easily without increasing the latency needed to compute and write more parity bits.

RAID 100 (10 + 0)

RAID 100, also written RAID 10 + 0, is a stripe of RAID 10 arrays. In essence it resembles a wider RAID 10 array that uses twice as many disks, but there is a reason for the "three-story" structure. Most often the RAID 10 arrays are implemented in hardware, by the controller, and the stripe across them is made in software. This trick avoids the problem mentioned at the beginning of the article: controllers have their own scalability limits, and if you attach twice as many disks to one controller you may, under some conditions, see no gain at all. Software RAID 0 can be built on top of two controllers, each running its own RAID 10, which avoids the controller bottleneck. It also works around the limit on the number of connectors on one controller: doubling the number of controllers doubles the number of available connectors.

Non-standard RAID modes

Double parity

A common addition to these RAID levels is double parity, which is sometimes implemented as, and therefore referred to as, "diagonal parity". Double parity is already implemented in RAID 6, but here the second parity is computed over different blocks of data. The RAID 6 specification has since been broadened so that diagonal parity also counts as RAID 6. Whereas in RAID 6 parity is the result of adding, modulo 2, the bits in a row (that is, the first bit on the first disk plus the first bit on the second, and so on), diagonal parity applies an offset. Operating in degraded mode (with a failed disk) is not recommended because of the difficulty of calculating the lost bits from the checksums.

RAID-DP

A NetApp design of a double-parity RAID array that falls under the updated definition of RAID 6. It uses a different data recording scheme from the classic RAID 6 implementation. Data is first written to an NVRAM cache, which is backed by an uninterruptible power supply to prevent data loss in a power outage. Whenever possible, the controller software writes only whole blocks to disk. This design provides more protection than RAID 1 and is faster than regular RAID 6.

RAID 1.5

It was proposed by Highpoint, but is now very often used in RAID 1 controllers without any emphasis on the feature. It boils down to a simple optimization: data is written as on a regular RAID 1 array (which is essentially what RAID 1.5 is), but read striped from the two disks (as in RAID 0). In the specific Highpoint implementation used on DFI LanParty boards with the nForce 2 chipset, the gain was barely noticeable and sometimes zero, probably because this manufacturer's controllers were slow in general at the time.

RAID 1E

Combines RAID 0 and RAID 1. It requires at least three disks. Data is written striped across three disks, and a copy is written shifted by one disk. If a block is written across three disks, a copy of the first part is written to the second disk and a copy of the second part to the third disk. With an even number of disks it is of course better to use RAID 10.

RAID 5E

Usually, when RAID 5 is built, one disk is left free (as a spare) so that after a failure the system can immediately begin rebuilding the array. During normal operation this disk is idle. RAID 5E uses this disk as an element of the array, and its capacity is distributed across the array as free space at the end of each disk. The minimum number of disks is 4. The available capacity is n-2 disks: the space of one disk (distributed among all) is used for parity, and the space of another is left free. If a disk fails, the array is compressed to 3 disks (taking the minimum configuration as an example) by filling the free space, which yields a normal RAID 5 array that can survive another disk failure. When a new disk is connected, the array expands and occupies all disks again. Note that during compression and expansion the array cannot survive the failure of another disk, and it is unavailable for reading and writing. The main advantage is faster operation, since striping runs across more disks. The downside is that the spare cannot be shared among several arrays, which is possible with a plain RAID 5 array.

RAID 5EE

It differs from the previous one only in that the free space is not reserved as a single region at the end of each disk but is interleaved in blocks with the parity bits. This greatly speeds up recovery after a failure: blocks can be written directly into the free space without moving data around the disk.

RAID 6E

Like RAID 5E, it uses an additional disk to improve performance and balance the load. The free space is shared among the other drives and located at the end of the drives.

RAID 7

This technology is a registered trademark of Storage Computer Corporation. It is an array based on RAID 3 and 4, optimized for performance. The main advantage is read/write caching. Data transfer requests are handled asynchronously. The arrays are built from SCSI disks. It is about 1.5 to 6 times faster than RAID 3 and 4 solutions.

Intel Matrix RAID

A technology introduced by Intel in its southbridges starting with the ICH6R. It makes it possible to build RAID arrays of different levels on disk partitions rather than on whole disks. For example, each of two disks can be split into two partitions: one pair of partitions forms a RAID 0 array that holds the operating system, and the other pair, working in RAID 1 mode, holds copies of documents.

Linux MD RAID 10

This is a Linux kernel RAID driver that can create a more flexible version of RAID 10. For example, whereas RAID 10 is limited to an even number of disks, this driver can work with an odd number. With three disks the principle is the same as in RAID 1E, where copies are placed on the disks in rotation while blocks are striped as in RAID 0. With four disks the result is equivalent to regular RAID 10. In addition, you can specify in which area of a disk the copy is kept. For example, the original may be in the first half of the first disk and its copy in the second half of the second disk, with the opposite arrangement for the second half of the data. Data can be duplicated more than once. Storing copies on different parts of the disks gives higher access speed because a hard disk is not uniform (access speed depends on where the data lies on the platter, usually differing by a factor of two).
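How two copies of every block can be spread over an odd number of disks is easiest to see in a small table. The Python sketch below places the copies of each chunk in consecutive slots and wraps them across the disks, which is modeled on what the md driver's documentation calls the "near" layout. It is an illustration under that assumption, not the driver's exact on-disk format, and the function name is hypothetical.

```python
def near_layout(num_chunks: int, num_disks: int, copies: int = 2) -> list[list[str]]:
    """Rows of chunk labels (one column per disk) with copies in consecutive slots."""
    if copies > num_disks:
        raise ValueError("cannot keep more copies than there are disks")
    if num_chunks > 26:
        raise ValueError("this sketch labels chunks with single letters A..Z")
    slots = [chr(ord("A") + chunk) for chunk in range(num_chunks) for _ in range(copies)]
    # Pad the last row so that every row has one entry per disk.
    while len(slots) % num_disks:
        slots.append("-")
    return [slots[i:i + num_disks] for i in range(0, len(slots), num_disks)]


print("three disks:")
for row in near_layout(6, 3):
    print(" ".join(row))

print("four disks:")
for row in near_layout(4, 4):
    print(" ".join(row))
```

With three disks the copies of a chunk always fall on two different disks, and with four disks the table degenerates into two mirrored pairs striped together, matching the statement that the result is then equivalent to regular RAID 10.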

RAID-K

Developed by Kaleidescape for use in its media devices. It is similar to RAID 4 with double parity, but uses a different method of fault tolerance. The user can easily expand the array simply by adding disks, and if an added disk already contains data, that data is added to the array instead of being erased, as is usually required.

RAID-Z

Developed by Sun. The biggest problem with RAID 5 is loss of information in a power outage, when data in the disk cache (which is volatile memory, that is, it does not retain data without power) has not yet been saved to the magnetic platters. This mismatch between the information in the cache and on disk is called incoherence. The organization of the array is tied to the Sun Solaris file system, ZFS. The contents of the disk cache are forcibly flushed, and not only a whole disk but also an individual block can be recovered "on the fly" when its checksum does not match. Another important aspect is the ZFS approach to modification: it does not overwrite data in place. Instead, it writes the updated data and, once the write is known to have succeeded, switches the pointer to it. Data loss during modification is thus avoided. Small files are duplicated instead of being protected by checksums. This, too, is done by the file system, since it knows the structure of the RAID array and can allocate space for the purpose. There is also RAID-Z2, which, like RAID 6, can survive two drive failures by using two checksums.

JBOD

This is not RAID at all, but is often used together with it. The name stands for "just a bunch of disks". The technology combines all disks installed in the system into one large logical disk: instead of three disks, one large disk is visible. The total disk space is used in full. There is no gain in reliability or performance.

Drive Extender

A feature built into Windows Home Server. It combines JBOD and RAID 1. When a copy is needed, it does not duplicate the file immediately but places a marker on the NTFS partition that points to the data. When idle, the system copies the file in a way that maximizes disk space (disks of different sizes can be used). It delivers many of the advantages of RAID: fault tolerance, easy replacement of a failed disk with background restoration, and transparency of file location (regardless of which disk a file is on). Using the markers mentioned above, it can also access different disks concurrently, achieving performance similar to RAID 0.

UNRAID

Developed by Lime Technology LLC. This scheme differs from conventional RAID arrays in that it allows SATA and PATA drives, and drives of different sizes and speeds, to be mixed in one array. A dedicated disk is used for the checksum (parity). Data is not striped between drives. If one disk fails, only the files stored on it are lost, and they can be restored using parity. UNRAID is implemented as an add-on to Linux MD (multidisk).

Most types of RAID arrays are not widespread, and some are used only in narrow fields. The most common, from ordinary users to entry-level servers, are RAID 0, 1, 0 + 1/10, 5 and 6. Whether you need a RAID array for your tasks is up to you. Now you know how they differ from each other.

Greetings to blog readers!

Today's article is again on a computer topic and is devoted to the RAID disk array. I am sure the term means nothing to many people, and that those who have heard it somewhere have little idea what it is. Let's figure it out together!

Without going into terminology, a RAID array is a kind of complex built from several hard drives that distributes work among them more effectively. How do we usually install hard drives in a computer? We connect one hard drive to SATA, then another, then a third, and drives D, E, F and so on appear in the operating system. We can put files on them or install Windows, but they remain separate disks: if we remove one of them, we will notice nothing (provided the OS was not installed on it) except that the files recorded on it become inaccessible. But there is another way: to combine these disks into a system and give them an algorithm for working together, which significantly increases either the reliability of information storage or the speed of operation.

But before creating such a system, we need to know whether the motherboard supports RAID disk arrays. Many modern motherboards have a built-in RAID controller that can combine hard drives. The supported array types are listed in the motherboard's description. As an example, take the ASRock P45R2000-WiFi, the first board I came across on Yandex Market.

Here the supported RAID arrays are listed in the SATA disk controllers section.

In this example, the SATA controller supports RAID arrays 0, 1, 5 and 10. What do these numbers mean? They designate different types of arrays, in which the disks interact according to different schemes designed, as I said, either to speed up their work or to protect against data loss.

If the computer's motherboard does not support RAID, you can buy a separate RAID controller in the form of a PCI card, which is inserted into a PCI slot on the motherboard and lets it build arrays from disks. After installing the controller you will also need to install its RAID driver, which either comes on a disc with the card or can be downloaded from the Internet. It is best not to economize on this device and to buy one from a well-known manufacturer, for example Asus, with an Intel chipset.

I suspect you still do not have a clear idea of what this is all about, so let's take a close look at each of the most popular types of RAID arrays.

RAID 1 array

A RAID 1 array is one of the most common and affordable options and uses 2 hard drives. It is designed to give maximum protection to user data, because all files are written to both hard drives at once. To create it, take two hard drives of the same size, for example 500 GB each, and make the appropriate settings in the BIOS. After that, the system will see one hard drive of 500 GB rather than 1 TB, although two hard drives are physically at work; the formula is given below. All files are written to both disks simultaneously, so the second disk is a full backup of the first. If one of the disks fails, you lose none of your information, since the second disk holds a copy.

Also, the operating system will not notice the breakdown, which will continue to work with the second disk - only a special program that monitors the operation of the array will notify you of the problem. You just need to remove the faulty disk and connect the same one, only a working one - the system will automatically copy all data from the remaining healthy disk to it and continue working.

The disk space that the system will see is calculated here using the formula:

V \u003d 1 x Vmin, where V is the total and Vmin is the memory size of the smallest hard drive.

RAID 0 array

Another popular scheme, designed to increase not the reliability of storage but the speed of work. It also consists of two HDDs; however, in this case the OS sees the combined volume of the two disks, i.e. if you combine two 500 GB disks in RAID 0, the system will see one 1 TB disk. Read and write speed increases because blocks of files are written alternately to the two disks. At the same time, the fault tolerance of this system is minimal: if one of the disks fails, almost all files will be damaged, because the blocks that were written to the broken disk are lost. After that, you will have to restore the information at a service center.

The formula for calculating the total disk space visible to Windows looks like this:

V = N x Vmin, where N is the number of hard drives.

If, before reading this article, you were not particularly worried about the fault tolerance of your system but would like to increase its speed, then you can buy an additional hard drive and safely use this type. At home, the overwhelming majority of users do not store any super-important information, and you can copy important files to a separate external hard drive.

RAID 10 array (0 + 1)

As the name implies, this type of array combines the properties of the two previous ones: it is like two RAID 0 arrays combined into RAID 1. Four hard drives are used: on two of them information is written in alternating blocks, as in RAID 0, and on the other two, full copies of the first two are created. The system is very reliable and at the same time quite fast, but very expensive to organize. To create it, you need 4 HDDs, and the system will see the total volume according to the formula:

V = (N x Vmin) / 2

That is, if we take 4 disks of 500 GB each, then the system will see 1 disk of 1 TB.

This type, as well as the next one, is most often used in organizations, on server computers, where it is necessary to ensure both high speed of work and maximum security against loss of information in case of unforeseen circumstances.

RAID 5 array

The RAID 5 array is the optimal combination of price, speed and reliability. This array requires at least 3 HDDs, and the volume is calculated from a more complex formula:

V = N x Vmin - 1 x Vmin, where N is the number of hard drives.

So, let's say we have three 500 GB disks. The amount visible to the OS will be 1 TB.
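The capacity formulas quoted in this section can be checked with a few lines of Python. This is only a sketch: the function name `usable_capacity` is made up for illustration, and the RAID 0 and RAID 10 rules (N x Vmin and N x Vmin / 2) are the commonly used ones, assumed here because they match the 500 GB examples in the text.

```python
def usable_capacity(level, disk_sizes_gb):
    """Return the capacity (in GB) the OS would see for a RAID level.

    Hypothetical helper: every rule is expressed through Vmin, the size
    of the smallest disk, as in the formulas of this section.
    """
    n = len(disk_sizes_gb)
    v_min = min(disk_sizes_gb)
    if level == 0 and n >= 2:
        return n * v_min              # assumed rule: V = N x Vmin
    if level == 1 and n == 2:
        return v_min                  # V = 1 x Vmin
    if level == 5 and n >= 3:
        return n * v_min - v_min      # V = N x Vmin - 1 x Vmin
    if level == 10 and n >= 4 and n % 2 == 0:
        return n * v_min // 2         # assumed rule: half of the disks hold copies
    raise ValueError("unsupported level or disk count")


if __name__ == "__main__":
    examples = [(1, [500, 500]), (0, [500, 500]),
                (10, [500] * 4), (5, [500] * 3)]
    for level, disks in examples:
        print(f"RAID {level} on {len(disks)} disks: {usable_capacity(level, disks)} GB")
```

All four examples from the text come out as stated: 500 GB for RAID 1 and 1000 GB (1 TB) for the RAID 0, RAID 10 and RAID 5 configurations.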

The array works as follows: blocks of split files are written to the first two drives (or three, depending on their number), and a checksum (parity) computed from those two (or three) blocks is written to the third (or fourth). Thus, if one of the disks fails, its contents can be easily restored from the remaining blocks and the checksum. The performance of such an array is lower than that of RAID 0, but it survives the failure of one disk, as RAID 1 does, and is cheaper than RAID 10, since you can save on the fourth hard drive.

The diagram below shows a RAID 5 array of four HDDs.

There are also other modes - RAID 2, 3, 4, 6, 30, etc., but they are largely derived from those listed above.

How to Set Up a RAID Disk Array on Windows?

I hope we figured out the theory. Now let's look at the practice - I think it won't be difficult for experienced PC users to insert a RAID controller into a PCI slot and install the drivers.

How do we now create an array from the connected hard drives for use in Windows?

It is best, of course, to do this when you have just purchased and connected clean hard drives without an installed OS. First, we restart the computer and go into the BIOS settings - here you need to find the SATA controllers to which our hard drives are connected and set them to RAID mode.

After that, we save the settings and restart the PC. On a black screen, a message will appear saying that RAID mode is turned on and naming the key that opens its settings. The example below prompts you to press the TAB key.

It may differ depending on the RAID controller model. For example, "CTRL + F".

We go into the setup utility and select something like "Create array" or "Create RAID" in the menu - the labels may differ. Also, if the controller supports several types of RAID, you will be prompted to select which one you want to create. In my example, only RAID 0 is available.

After that, we go back to the BIOS and in the boot order setting we see not several separate disks, but one in the form of an array.

That's all - RAID is configured and now the computer will treat your disks as one. This is how, for example, the RAID array will appear when installing Windows.

I think you have already understood the benefits of using RAID. Finally, I will give a comparative table of write and read speeds of a disk on its own and as part of RAID modes - the result, as they say, is obvious.

The shift in center of gravity from processor-centric to data-centric applications is driving the growing importance of storage systems. At the same time, the problem of low bandwidth and fault tolerance characteristic of such systems has always been quite important and always required a solution.

In the modern computer industry, magnetic disks are widely used as a secondary storage system, because, despite all their disadvantages, they have the best characteristics for the corresponding type of device at an affordable price.

The way magnetic disks are built has led to a significant mismatch between the performance growth of processors and that of the disks themselves. In 1990 the best mass-produced disks were 5.25″ drives with an average access time of 12 ms and a latency of 5 ms (at a spindle speed of about 5000 rpm [1]); today the lead belongs to 3.5″ drives with an average access time of 5 ms and a latency of 1 ms (at a spindle speed of 10,000 rpm). That is a performance improvement of about 100%. Over the same period, processor speed has increased by more than 2,000%. This is largely because processors benefit directly from VLSI (very-large-scale integration). It makes it possible to raise not only the clock frequency but also the number of components integrated on the chip, which in turn allows architectural features that enable parallel computing.

1 - Average data.

The current situation can be described as an I/O crisis of the secondary storage system.

Increasing performance

The impossibility of a significant increase in the technological parameters of magnetic disks entails the need to find other ways, one of which is parallel processing.

If we spread a data block over the N disks of an array and organize this placement so that the parts can be read simultaneously, then the block can be read N times faster (not counting the time needed to assemble the block). Since all data is transferred in parallel, this architecture is called a parallel-access array.

Parallel-access arrays are typically used for applications that require large data transfers.

Some tasks, on the other hand, are characterized by a large number of small requests. These include, for example, database processing. By distributing the database records across the disks of the array, you can spread the load, positioning each disk independently. This architecture is usually called an independent-access array.

Increasing fault tolerance

Unfortunately, as the number of disks in an array increases, the reliability of the entire array decreases. With independent failures and an exponential distribution of time to failure, the MTTF (mean time to failure) of the entire array is calculated by the formula MTTF_array = MTTF_hdd / N_hdd (MTTF_hdd is the mean time to failure of one disk; N_hdd is the number of disks).
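To get a feel for how quickly reliability drops, the formula can be evaluated for a few array sizes. The sketch below is illustrative only: the function name `array_mttf` and the figure of 500,000 hours per disk are assumptions, not values from the text.

```python
def array_mttf(disk_mttf_hours, n_disks):
    """MTTF of a non-redundant array: MTTF_array = MTTF_hdd / N_hdd."""
    if n_disks < 1:
        raise ValueError("an array needs at least one disk")
    return disk_mttf_hours / n_disks


if __name__ == "__main__":
    disk_mttf = 500_000  # hypothetical per-disk MTTF in hours
    for n in (1, 2, 8, 32):
        print(f"{n:2d} disks: {array_mttf(disk_mttf, n):,.0f} hours")
```

With these assumed numbers, an array of 32 disks has an MTTF 32 times lower than that of a single disk, which is why redundancy becomes necessary.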

Thus, there is a need to improve the fault tolerance of disk arrays. To increase the fault tolerance of the arrays, redundant coding is used. There are two main types of encoding that are used in redundant disk arrays - duplication and parity.

Duplication, or mirroring, is most commonly used in disk arrays. Simple mirrored systems use two copies of the data, each copy on separate disks. This scheme is quite simple and does not require additional hardware costs, but it has one significant drawback - it uses 50% of the disk space to store a copy of information.

The second way to implement redundant disk arrays is to use redundant coding based on parity computation. Parity is calculated as an XOR operation of all characters in the data word. The use of parity in redundant disk arrays reduces the overhead to a value calculated by the formula: overhead_hdd = 1 / N_hdd (overhead_hdd is the overhead; N_hdd is the number of disks in the array).
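The XOR property that makes parity useful can be shown in a few lines. This is a minimal sketch, assuming equally sized blocks held in memory as `bytes`; the names `xor_blocks` and `parity_overhead` are invented for the illustration.

```python
from functools import reduce
from operator import xor


def xor_blocks(blocks):
    """Byte-wise XOR of several equally sized blocks."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("all blocks must have the same length")
    return bytes(reduce(xor, column) for column in zip(*blocks))


def parity_overhead(n_disks):
    """Share of the array used for parity: 1 / N_hdd."""
    return 1 / n_disks


if __name__ == "__main__":
    data = [b"RAID", b"disk", b"xor!"]   # three data blocks
    parity = xor_blocks(data)            # what the parity disk would hold

    # Simulate losing the second block and rebuilding it from the rest.
    rebuilt = xor_blocks([data[0], data[2], parity])
    print(rebuilt == data[1])
    print(parity_overhead(4))
```

XOR-ing the surviving blocks with the parity block yields exactly the missing block, so one extra disk protects any number of data disks against a single failure.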

History and development of RAID

Although magnetic disk storage systems have been in production for 40 years, mass production of fault-tolerant systems began only recently. Redundant disk arrays, commonly referred to as RAID (redundant arrays of inexpensive disks), were introduced by researchers (Patterson, Gibson, and Katz) at the University of California at Berkeley in 1987. But RAID systems became widespread only when disks suitable for use in redundant arrays became available and efficient enough. Since the official RAID report in 1988, research into redundant disk arrays has grown rapidly in an effort to provide a wide range of cost-performance-reliability trade-offs.

There was a curious episode with the abbreviation RAID. When the original paper was written, all disks used in PCs were called inexpensive disks, as opposed to expensive mainframe disks. But RAID arrays required rather expensive hardware compared to other PC configurations, so RAID came to be expanded as redundant array of independent disks [2].

2 - Definition by the RAID Advisory Board

RAID 0 has been introduced to the industry as the definition of a non-fault-tolerant disk array. At Berkeley, RAID 1 was defined as a mirrored disk array. RAID 2 is reserved for arrays that use Hamming code. RAID levels 3, 4, 5 use parity to protect data from single faults. It is these levels, up to and including the 5th, that were presented at Berkeley, and this RAID systematics was adopted as the de facto standard.

RAID levels 3, 4, 5 are quite popular and have good disk space utilization, but they have one significant drawback - they are only resistant to single faults. This matters especially when a large number of disks is used, since the likelihood that more than one device fails at the same time increases. In addition, they are characterized by a long recovery time, which also imposes some restrictions on their use.

To date, quite a few architectures have been developed that keep the array operational, without data loss, when any two disks fail simultaneously. Among them, two-dimensional parity and EVENODD, which use parity for encoding, and RAID 6, which uses Reed-Solomon encoding, are worth noting.

In a two-dimensional parity scheme, each data block participates in the construction of two independent codewords. Thus, if a second disk fails in the same codeword, the other codeword is used to reconstruct the data.

The minimum redundancy in such an array is achieved with an equal number of columns and rows (a "square" layout), and is equal to 2 x sqrt(N_disk).

If the two-dimensional array is not organized into a "square", then when the above scheme is implemented, the redundancy will be higher.
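The effect of the grid shape on redundancy is easy to tabulate. The sketch below assumes the usual two-dimensional layout with one parity disk per row and one per column; the helper names `parity_disks_2d` and `layouts` are illustrative.

```python
import math


def parity_disks_2d(rows, cols):
    """Parity disks for a rows x cols grid: one per row plus one per column."""
    return rows + cols


def layouts(n_data):
    """All (rows, cols) grids with rows <= cols holding exactly n_data disks."""
    return [(r, n_data // r)
            for r in range(1, math.isqrt(n_data) + 1)
            if n_data % r == 0]


if __name__ == "__main__":
    for rows, cols in layouts(16):
        print(f"{rows} x {cols}: {parity_disks_2d(rows, cols)} parity disks")
```

For 16 data disks the square 4 x 4 layout needs 8 parity disks, which is 2 x sqrt(16), while the 2 x 8 layout already needs 10.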

The EVENODD architecture has a fault tolerance scheme similar to two-dimensional parity, but a different layout of information blocks, which ensures minimal excess capacity utilization. Just like in two-dimensional parity, each data block participates in the construction of two independent codewords, but the words are arranged in such a way that the redundancy factor is constant (unlike the previous scheme) and is equal to: 2 x sqrt(N_disk).

Using two check symbols, one based on parity and one on a non-binary code, the data word can be constructed to provide fault tolerance against a double fault. This is known as RAID 6. The non-binary code, based on Reed-Solomon encoding, is usually computed using tables or an iterative process using linear feedback shift registers, which is relatively complex and requires specialized hardware.

Because classic RAID options, while providing sufficient fault tolerance for many applications, often have unacceptably low performance, researchers from time to time propose various techniques that help increase the performance of RAID systems.

In 1996, Savage and Wilkes proposed AFRAID (A Frequently Redundant Array of Independent Disks). This architecture sacrifices fault tolerance to some extent for performance. To compensate for the small-write problem of RAID 5 arrays, it allows stripes to remain without up-to-date parity for a period of time. If the disk that should receive the parity is busy, the parity write is postponed. It has been shown theoretically that a 25% reduction in fault tolerance can increase performance by 97%. AFRAID effectively changes the failure model of single-fault-tolerant arrays, because a codeword that does not have updated parity is susceptible to disk failures.

Instead of sacrificing fault tolerance, you can use traditional methods of improving performance, such as caching. Given that disk traffic is bursty, a write-back cache can hold data while the disks are busy. And if the cache is implemented as non-volatile memory, the data will be preserved in case of a power failure. In addition, deferred disk operations make it possible to combine small blocks in an arbitrary order to perform more efficient disk operations.

There are also many architectures that offer performance gains by sacrificing capacity. Among them are deferred modification via a log disk and various schemes for remapping the logical placement of data to physical locations, which allow operations in the array to be distributed more efficiently.

One of the options is parity logging, which addresses the small-write problem and makes better use of the disks. Parity logging postpones the parity update in RAID 5 by writing it to a FIFO log, which is located partly in the controller's memory and partly on disk. Given that access to a full track is, on average, 10 times more efficient than access to a sector, parity logging collects large amounts of modified parity data, which are then written together, a full track at a time, to the disk that stores parity.

The floating data and parity architecture allows the physical placement of disk blocks to be reallocated. Free sectors are left on each cylinder to reduce rotational latency, and data and parity are placed in these free spaces. To remain operable across a power outage, the map of parity and data must be stored in non-volatile memory. If the placement map is lost, all data in the array will be lost.

Virtual striping is a floating data and parity architecture that uses a write-back cache, naturally combining the positive aspects of both.

In addition, there are other ways to improve performance, such as RAID balancing. At one time, Seagate built support for RAID operations into its Fibre Channel and SCSI drives. This made it possible to reduce traffic between the central controller and the disks in the array for RAID 5 systems. This was a radical innovation in RAID implementations, but the technology never took off, since some features of the Fibre Channel and SCSI standards weaken the failure model for disk arrays.

For the same RAID 5, the TickerTAIP architecture was presented. It works as follows: a central control mechanism, the originator node, receives user requests, chooses a processing algorithm, and then hands the disk and parity work over to worker nodes. Each worker node handles a subset of the disks in the array. As in the Seagate model, worker nodes transfer data among themselves without the participation of the originator node. If a worker node fails, the disks it was servicing become unavailable. But if the codeword is constructed so that each of its symbols is handled by a separate worker node, then the fault tolerance scheme matches RAID 5. To guard against failures of the originator node, it is duplicated, so we get an architecture that is resistant to the failure of any of its nodes. For all its positive features, this architecture suffers from the "write hole" problem: an error occurs when several users change a codeword at the same time and a node fails.

A rather popular way to recover a RAID quickly should also be mentioned: using a spare disk. If one of the disks in the array fails, the RAID can be rebuilt using the spare disk in place of the failed one. The main feature of such an implementation is that the system returns to its previous (fault-tolerant) state without external intervention. With a distributed sparing architecture, the logical blocks of the spare disk are physically distributed across all disks in the array, eliminating the need to rebuild the array if a disk fails.

To avoid the recovery problem of classic RAID levels, an architecture called parity declustering is also used. It involves mapping a smaller number of larger logical disks onto a greater number of smaller physical disks. With this technology, the response time of the system to a request during reconstruction improves by more than a factor of two, and the reconstruction time is significantly reduced.

Basic RAID Architecture

Now let's look at the architecture of the basic levels of RAID in more detail. Before considering, let's make some assumptions. To demonstrate the principles of building RAID systems, consider a set of N disks (for simplicity, N will be considered an even number), each of which consists of M blocks.

The data will be denoted by D_{m,n}, where m is the number of the data block and n is the number of the sub-block into which data block D is divided.

Disks can be connected to one or several data transmission channels. Using more channels increases the throughput of the system.

RAID 0. Striped Disk Array without Fault Tolerance

It is a disk array in which data is divided into blocks, and each block is written to (or read from) a separate disk. Thus, multiple I/O operations can be performed simultaneously.
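How consecutive blocks end up on different disks can be sketched as a simple round-robin mapping. The function `locate_block` is hypothetical and assumes a stripe unit of exactly one block; real controllers use a configurable stripe size.

```python
def locate_block(logical_block, n_disks):
    """Map a logical block number to (disk index, block offset on that disk).

    Assumes round-robin striping with a stripe unit of one block.
    """
    return logical_block % n_disks, logical_block // n_disks


if __name__ == "__main__":
    n_disks = 4
    for block in range(8):
        disk, offset = locate_block(block, n_disks)
        print(f"logical block {block} -> disk {disk}, offset {offset}")
```

Blocks 0 to 3 land on four different disks at offset 0, so a request covering all four can be served by all disks at once.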

Benefits:

- highest performance for applications requiring intensive I / O processing and large data volumes;

- ease of implementation;

- low cost per volume unit.

Disadvantages:

- not a fault-tolerant solution;

- if one disk fails, all data in the array will be lost.

RAID 1. Disk array with duplication, or mirroring

Mirroring is a traditional method for improving the reliability of a small disk array. In the simplest version, two disks are used, on which the same information is recorded, and in case of failure of one of them, its duplicate remains, which continues to work in the same mode.

Benefits:

- ease of implementation;

- ease of array recovery in case of failure (copying);

- reasonably high performance for high-demand applications.

Disadvantages:

- high cost per volume unit - 100% redundancy;

- low data transfer rate.

RAID 2. Fault-tolerant disk array using the Hamming Code ECC.

The redundant coding used in RAID 2 is the Hamming code. The Hamming code makes it possible to correct single faults and detect double faults. Today it is actively used for data encoding in ECC RAM, as well as for encoding data on magnetic disks.

Because a general description is cumbersome, the example here uses a fixed number of disks: a data word consists of 4 bits and, accordingly, the ECC code of 3 bits.
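The 4 data bits plus 3 check bits mentioned above correspond to the classic Hamming(7,4) code. The sketch below is a textbook version of that code, not the layout of any particular RAID 2 product; in a RAID 2 array each of the 7 bits would be stored on its own disk. Function names are illustrative.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits as a 7-bit codeword.

    Codeword positions 1..7 hold: p1 p2 d1 p3 d2 d3 d4.
    """
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4   # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4   # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_correct(codeword):
    """Fix at most one flipped bit; return (data bits, error position or 0)."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s3   # 1-based index of the bad bit
    if position:
        c[position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], position


if __name__ == "__main__":
    word = hamming74_encode([1, 0, 1, 1])
    word[4] ^= 1                      # simulate a fault in position 5
    print(hamming74_correct(word))
```

The syndrome points directly at the faulty position, which is what makes correction "on the fly" possible.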

Benefits:

- fast error correction ("on the fly");

- very high speed of data transfer of large volumes;

- with an increase in the number of disks, overhead costs are reduced;

- quite simple implementation.

Disadvantages:

- high cost with a small number of disks;

- low speed of request processing (not suitable for systems oriented on transaction processing).

RAID 3. Fault-Tolerant Array with Parallel Data Transfer and Parity

Data is divided into subblocks at the byte level and written simultaneously to all disks in the array except one, which is used for parity. RAID 3 solves the problem of high redundancy in RAID 2. Most of the check disks used in RAID level 2 are needed only to locate the failed bit. But this is unnecessary, since most controllers can determine which disk has failed using special signals or additional encoding of the information written to the disk and used to correct random failures.

Benefits:

- very high data transfer rate;

- disk failure has little effect on the speed of the array.

Disadvantages:

- difficult implementation;

- low performance with a high intensity of requests for small data.

RAID 4. Independent Data disks with shared Parity disk

The data is broken down at the block level. Each block of data is written to a separate disk and can be read separately. Parity for a group of blocks is generated on write and checked on read. RAID level 4 improves the performance of small data transfers through parallelism, allowing more than one I / O to be performed concurrently. The main difference between RAID 3 and 4 is that in the latter, data striping is performed at the sector level, not at the bit or byte level.

Benefits:

- very high speed of reading large data volumes;

- high performance with a high intensity of data read requests;

- low overhead for implementing redundancy.

Disadvantages:

- very low performance when writing data;

- low speed of reading small data with single requests;

- asymmetry of performance with respect to reading and writing.

RAID 5. Independent Data disks with distributed parity blocks

This level is similar to RAID 4, but unlike the previous one, parity is distributed cyclically across all disks in the array. This change improves the performance of writing small amounts of data on multitasking systems. If the write operations are properly planned, it is possible to process up to N / 2 blocks in parallel, where N is the number of disks in the group.
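The cyclic distribution of parity can be pictured with a small layout generator. The rotation used below (parity starts on the last disk and moves one disk to the left with each stripe) is one common convention chosen for illustration; the text does not say which rotation a given controller uses, and the function names are invented.

```python
def raid5_parity_disk(stripe, n_disks):
    """Disk that holds parity for a stripe (assumed left-rotating placement)."""
    return (n_disks - 1 - stripe) % n_disks


def raid5_layout(n_stripes, n_disks):
    """Return one row per stripe: 'Dk' for data block k, 'Ps' for parity."""
    rows, block = [], 0
    for stripe in range(n_stripes):
        parity_disk = raid5_parity_disk(stripe, n_disks)
        row = []
        for disk in range(n_disks):
            if disk == parity_disk:
                row.append(f"P{stripe}")
            else:
                row.append(f"D{block}")
                block += 1
        rows.append(row)
    return rows


if __name__ == "__main__":
    for row in raid5_layout(4, 4):
        print("  ".join(f"{cell:>3}" for cell in row))
```

Because the parity block sits on a different disk in each stripe, parity updates for writes to different stripes do not all queue up on one disk, as they do in RAID 4.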

Benefits:

- high speed of data recording;

- sufficiently high speed of data reading;

- high performance with a high intensity of data read / write requests;

- low overhead for implementing redundancy.

Disadvantages:

- data reading speed is lower than in RAID 4;

- low read / write speed of small data with single requests;

- quite complex implementation;

- complex data recovery.

RAID 6. Independent Data disks with two independent distributed parity schemes

Data is partitioned at the block level, similar to RAID 5, but in addition to the previous architecture, a second scheme is used to improve fault tolerance. This architecture is double fault tolerant. However, when performing a logical write, there are actually six disk accesses, which greatly increases the processing time of one request.
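The six accesses follow from the read-modify-write pattern of a small write: the old data block and both old check blocks are read, then the new versions of all three are written. The sketch below counts accesses and shows the XOR update of the ordinary parity block; it does not model the second RAID 6 check block, which the text says is based on Reed-Solomon coding rather than plain XOR. Function names are hypothetical.

```python
def update_parity(old_parity, old_data, new_data):
    """Read-modify-write parity update: P_new = P_old XOR D_old XOR D_new."""
    return bytes(p ^ o ^ n for p, o, n in zip(old_parity, old_data, new_data))


def small_write_accesses(n_check_blocks):
    """Disk accesses for one small write: read old data and check blocks,
    then write the new data and check blocks."""
    return 2 * (1 + n_check_blocks)


if __name__ == "__main__":
    d0, d1 = b"\x0f", b"\xf0"
    parity = bytes(a ^ b for a, b in zip(d0, d1))
    new_d0 = b"\x3c"
    print(update_parity(parity, d0, new_d0).hex())
    print(small_write_accesses(1), small_write_accesses(2))
```

With one check block (RAID 5) a small write costs four accesses; with two (RAID 6) it costs six, matching the figure above.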

Benefits:

- high resiliency;

- sufficiently high speed of request processing;

- relatively low overhead for implementing redundancy.

Disadvantages:

- very complex implementation;

- complex data recovery;

- very low data writing speed.

Modern RAID controllers allow you to combine different RAID levels. In this way, it is possible to implement systems that combine the merits of different levels, as well as systems with a large number of disks. This is usually a combination of striping and some fault-tolerant level.

RAID 10. Fault Tolerant Duplicate and Parallel Array

This architecture is a RAID 0 array whose segments are RAID 1 arrays. It combines very high fault tolerance and performance.

Benefits:

- high resiliency;

- high performance.

Disadvantages:

- very high cost;

- limited scaling.

RAID 30. Fault-tolerant array with parallel data transfer and increased performance.

It is an array of RAID 0 type, the segments of which are RAID 3 arrays. It combines fault tolerance and high performance. Typically used for applications requiring large serial data transfers.

Benefits:

- high resiliency;

- high performance.

Disadvantages:

- high price;

- limited scaling.

RAID 50. Fault Tolerant Distributed Parity Array with Increased Performance

It is a RAID 0 array with segments of RAID 5. It combines fault tolerance and high performance for high demand applications and high data transfer rates.

Benefits:

- high resiliency;

- high data transfer rate;

- high speed of request processing.

Disadvantages:

- high price;

- limited scaling.

RAID 7. Fault-tolerant array optimized for performance (Optimized Asynchrony for High I/O Rates as well as High Data Transfer Rates). RAID 7® is a registered trademark of Storage Computer Corporation (SCC).

To understand the architecture of RAID 7, let's look at its features:

- All data transfer requests are processed asynchronously and independently.

- All read/write operations are cached over the high-speed X-bus.

- The parity disk can be placed on any channel.

- The array controller microprocessor uses a real-time process-oriented operating system.

- The system has good scalability: up to 12 host interfaces and up to 48 disks.

- The operating system controls the communication channels.

- Standard SCSI disks, buses, motherboards and memory modules are used.

- A high-speed X-bus is used to work with the internal cache memory.

- The parity generation routine is integrated into the cache.

- Disks attached to the system can be declared free-standing.

- An SNMP agent can be used to manage and monitor the system.

Benefits:

- high data transfer rate and high query processing speed (1.5 - 6 times higher than other standard RAID levels);

- high scalability of host interfaces;

- data writing speed increases with the number of disks in the array;

- there is no need for additional data transmission to compute parity.

Disadvantages:

- property of one manufacturer;

- very high cost per unit volume;

- short warranty period;

- cannot be serviced by the user;

- you need to use an uninterruptible power supply to prevent data loss from the cache memory.

Let's now look at the standard levels together to compare their characteristics. The comparison is made within the framework of the architectures mentioned in the table.

| RAID | Minimum disks | Disks required | Fault tolerance | Data transfer rate | Request processing rate | Practical use |
|---|---|---|---|---|---|---|
| 0 | 2 | N | | very high, up to N x 1 disk | | graphics, video |
| 1 | 2 | 2N * | | R > 1 disk, W = 1 disk | up to 2 x 1 disk, W = 1 disk | small file servers |
| 2 | 7 | 2N | | ~ RAID 3 | low | mainframes |
| 3 | 3 | N + 1 | | | low | graphics, video |
| 4 | 3 | N + 1 | | R W | R = RAID 0 W | file servers |
| 5 | 3 | N + 1 | | R W | R = RAID 0 W | database servers |
| 6 | 4 | N + 2 | highest | low | R > 1 disk W | used extremely rarely |
| 7 | 12 | N + 1 | highest | highest | | various types of applications |

Clarifications:

- * - the commonly used option is considered;

- k is the number of sub-segments;

- R - read;

- W - write.

Some aspects of the implementation of RAID systems

Let's consider three main options for implementing RAID systems:

- software (software-based);

- hardware, bus-based;

- hardware, autonomous subsystem (subsystem-based).

It cannot be said unequivocally that one implementation is better than another. Each option for organizing the array satisfies one or another user needs, depending on financial capabilities, the number of users and the applications used.

Each of the above implementations is based on code execution. They actually differ in where this code is executed: in the central processor of the computer (software implementation) or in a specialized processor on the RAID controller (hardware implementation).

The main advantage of a software implementation is low cost. But it also has many disadvantages: low performance, additional load on the central processor, and increased bus traffic. Simple RAID levels 0 and 1 are usually implemented in software, since they do not require significant computation. With these features in mind, software-based RAID systems are used in entry-level servers.

Hardware RAID implementations cost more than software ones, as they use additional hardware to perform I/O operations. At the same time, they offload the central processor and the system bus and, accordingly, increase performance.

Bus-oriented implementations are RAID controllers that use the high-speed bus of the computer in which they are installed (nowadays usually the PCI bus). In turn, bus-oriented implementations can be divided into low-level and high-level ones.

Low-level controllers usually have no SCSI chips of their own and use the so-called RAID port on a motherboard with a built-in SCSI controller. In this case, RAID code processing and I/O operations are split between the processor on the RAID controller and the SCSI chips on the motherboard. Thus, the central processor is freed from processing additional code, and bus traffic is reduced compared to the software version. Such cards are usually inexpensive, especially if they target RAID 0 or 1 systems (there are also implementations of RAID 3, 5, 10, 30, 50, but they are more expensive), which is why they are gradually displacing software implementations from the entry-level server market.

High-level bus controllers have a somewhat different structure than their smaller counterparts. They take over all functions related to I/O and RAID code execution. In addition, they depend less on the motherboard implementation and, as a rule, have more capabilities (for example, the ability to connect a module that preserves the information in the cache in the event of a motherboard or power failure). These controllers are usually more expensive than low-level ones and are used in mid-range and high-end servers. As a rule, they implement RAID levels 0, 1, 3, 5, 10, 30, 50.

Since bus-oriented implementations are connected directly to the internal PCI bus of the computer, they are the most productive among the systems under consideration (for single-host systems). The maximum performance of such systems can reach 132 MB/s (32-bit PCI) or 264 MB/s (64-bit PCI) at a bus frequency of 33 MHz.
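The two PCI figures are simply the bus width in bytes multiplied by the clock frequency. A minimal check, assuming one transfer per clock cycle and the nominal 33 MHz used in the text (the function name is illustrative):

```python
def pci_peak_mb_per_s(bus_width_bits, clock_mhz=33):
    """Theoretical peak PCI throughput in MB/s: bytes per transfer x MHz."""
    return bus_width_bits // 8 * clock_mhz


if __name__ == "__main__":
    for width in (32, 64):
        print(f"{width}-bit PCI at 33 MHz: {pci_peak_mb_per_s(width)} MB/s")
```

These are theoretical peaks of the bus itself; sustained throughput of an actual controller will be lower.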

Together with the listed advantages, the bus-oriented architecture has the following disadvantages:

- dependence on the operating system and platform;

- limited scalability;

- limited opportunities for organizing fault-tolerant systems.

All of these disadvantages can be avoided by using stand-alone subsystems. These systems have a completely autonomous external organization and, in principle, are a separate computer that is used to organize information storage systems. In addition, in the case of successful development of fiber-optic technology, the speed of autonomous systems will in no way be inferior to bus-oriented systems.

Usually, the external controller is placed in a separate rack and, unlike systems with a bus organization, it can have a large number of I / O channels, including host channels, which makes it possible to connect several host computers to the system and organize cluster systems. In systems with a stand-alone controller, hot spare controllers can be implemented.

One of the disadvantages of autonomous systems is their high cost.

Considering the above, stand-alone controllers are usually used to build high-capacity data storage and cluster systems.

Greetings to all, dear readers of the blog. Many of you have probably come across the expression "RAID array" on the Internet at least once. Today we will talk about what it means and why an ordinary user might need one. It is well known that the hard drive is the slowest component in a PC and lags behind the processor.

To compensate for this "innate" slowness where it is least acceptable (primarily in servers and high-performance PCs), the RAID disk array was invented: a kind of "bundle" of several identical hard drives working in parallel. This solution can significantly increase speed as well as reliability.

First of all, a RAID array provides high fault tolerance for your computer's hard drives (HDDs) by combining several of them into one logical unit. Accordingly, you need at least two hard drives to implement this technology. RAID is also simply convenient: information that previously had to be copied to backup media (external hard drives) can now be left "as is", because the risk of losing it completely is minimal. This is not true of every array type, though, as discussed just below.

RAID stands for Redundant Array of Inexpensive Disks (today usually read as Independent Disks). The name goes back to the times when large hard drives were very expensive and it was cheaper to assemble one common array out of smaller disks. The essence has not changed since then, and neither has the name; only now you can build a huge storage volume out of several large HDDs, or make one disk duplicate another. You can also combine both functions and gain the benefits of each.

These arrays are known by number, and you have most likely heard of them: RAID 0, 1 ... 10, that is, arrays of different levels.

Varieties of RAID

Speed: RAID 0

RAID 0 has nothing to do with reliability; it only increases speed. You need at least two hard drives, and the data is "cut" into pieces that are written to both disks simultaneously. You get the full combined capacity of the disks and, in theory, twice the read/write speed.

But imagine that one of these drives fails: in that case, the loss of ALL your data is inevitable. In other words, you still have to make regular backups to be able to restore the information later. RAID 0 usually uses 2 to 4 disks.
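To make the "cutting" concrete, here is a minimal sketch of how a striped array might map logical blocks onto disks. It assumes the simplest round-robin layout with one block per stripe unit; the function name is hypothetical, and real controllers use configurable stripe sizes.

```python
def locate_block(logical_block: int, num_disks: int) -> tuple[int, int]:
    """Map a logical block number to (disk index, block offset on that disk)."""
    return logical_block % num_disks, logical_block // num_disks


# Six consecutive logical blocks on a two-disk array alternate between the disks.
layout = [locate_block(block, 2) for block in range(6)]
print(layout)
# [(0, 0), (1, 0), (0, 1), (1, 1), (0, 2), (1, 2)]
```

Because neighboring blocks land on different disks, both drives can work on one large request at the same time. It also shows why losing either disk destroys the data: every file larger than one block has pieces on both.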

RAID 1 or "mirror"

Unlike RAID 0, reliability does not suffer here. You get the disk space and performance of only one hard drive, but all data is stored twice: if one disk fails, the information is preserved on the other.

RAID 1 does not improve speed, and it costs capacity: only half of the total disk space is available. The number of disks in such an array can be 2, 4, and so on, that is, an even number. In general, the main feature of a first-level RAID is reliability.

RAID 10

It combines the best of the two previous types. Let's look at how it works with four HDDs: information is written in parallel to two disks, and that data is duplicated on the other two disks.

As a result, the access speed is doubled, but only the capacity of two of the four disks is available. The array survives the failure of any one disk, and of two disks as long as they belong to different mirror pairs. If both disks of the same pair fail, the data is lost.
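A quick way to check which failures a four-disk RAID 10 tolerates is to enumerate them. The sketch below assumes a layout in which disks 0 and 1 form one mirror pair and disks 2 and 3 the other; the names are hypothetical and chosen only for illustration.

```python
from itertools import combinations

# Assumed layout: disks 0 and 1 mirror each other, as do disks 2 and 3.
MIRROR_PAIRS = [(0, 1), (2, 3)]


def survives(failed: set[int]) -> bool:
    """The array survives if every mirror pair keeps at least one working disk."""
    return all(not set(pair) <= failed for pair in MIRROR_PAIRS)


def survivable_failures(count: int) -> list[tuple[int, ...]]:
    """All combinations of `count` failed disks that the array survives."""
    return [c for c in combinations(range(4), count) if survives(set(c))]


print(len(survivable_failures(1)))  # 4
print(survivable_failures(2))
# [(0, 2), (0, 3), (1, 2), (1, 3)]
```

Every single-disk failure is survivable, but only four of the six possible two-disk failures are: the two that take out a whole mirror pair lose data.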

RAID 5

This type of array is similar to RAID 1 in purpose, but it needs at least 3 disks, and the capacity of one of them is taken up by the parity information needed for recovery (in RAID 5 this information is spread across all the disks rather than kept on a dedicated one). For example, if such an array contains 6 HDDs, only the capacity of 5 of them is available for data.

Because data is written to several hard drives at once, the read speed is high, which suits storing large amounts of data. Without an expensive RAID controller, however, the speed (especially for writes) will not be very high. And if one of the disks fails, restoring the information takes a long time.
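The recovery information in RAID 5 is parity, computed as the XOR of the data blocks in a stripe. The following sketch, with made-up block contents and a hypothetical helper name, shows how a lost block can be rebuilt from the surviving blocks and the parity. It illustrates the principle only and ignores how parity is rotated across disks.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three data blocks of one stripe (illustrative contents) and their parity.
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(data)

# Suppose the disk holding data[1] fails: XOR the survivors with the parity.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt == data[1])  # True
```

Rebuilding requires reading every surviving disk in full, which is one reason recovery after a failure takes so long.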

RAID 6

This array can survive the failure of two hard drives at once. Creating it requires at least four disks, and the write speed is even lower than that of RAID 5.

Note that without a powerful RAID controller, such an array is unlikely to be practical. If you have only 4 hard drives at your disposal, it is better to build RAID 1.

How to create and configure a RAID array

RAID controller

A RAID array can be created by connecting several HDDs to a motherboard that supports this technology, which means the motherboard has an integrated controller. The controller can also be a separate card connected via a PCI or PCI-E slot. Each controller usually has its own configuration software.

RAID can be organized at the hardware level or at the software level; the latter is the most common option on home PCs. Users distrust the controller built into the motherboard because of its poor reliability. In addition, if the motherboard is damaged, recovering the data becomes very difficult. At the software level, the operating system plays the role of the controller, so the array can be safely moved to another PC.

Hardware

How to make a RAID array? To do this, you need:

- Get a motherboard with RAID support (in the case of hardware RAID).

- Buy at least two identical hard drives. Ideally they should match not only in characteristics but also in manufacturer and model.

- Transfer all data from your HDDs to other media; otherwise it will be destroyed when the array is created.

- Enable RAID support in the BIOS. Exactly how to do this depends on your computer, since BIOSes differ. The parameter is usually named something like "SATA Configuration" or "Configure SATA as RAID".

- Restart the PC; a table with more detailed RAID settings should appear. You may have to press Ctrl+I during POST for this table to appear. With a separate controller card, you will most likely need to press F2. In the table itself, choose "Create Array" and select the required array level.

After creating the array in the BIOS, open "Disk Management" in the operating system and format the unallocated area: this is our array.

Software

You don't have to enable or disable anything in the BIOS to create a software RAID. In fact, the motherboard doesn't even need to support RAID. As mentioned above, the technology is implemented by the PC's central processor and Windows itself, so you don't need to install any third-party software either. True, this way you can create only a RAID of the first type, a "mirror".

Right-click "My Computer", then choose "Manage" and "Disk Management". Click one of the hard drives intended for the array (Disk 1 or Disk 2) and select "Create mirrored volume". In the next window, select the disk that will mirror the other hard drive, then assign a letter and format the resulting partition.

In this utility, mirrored volumes are highlighted in one color (red) and share a single drive letter. Every file is written to both volumes: once to the first and again, identically, to the second. Note that in the "My Computer" window the array appears as a single partition; the second one is hidden so that the duplicate files do not get in the way.

If one of the hard drives fails, the "Failed redundancy" error appears, while everything on the second drive remains intact.

Let's summarize

RAID 5 is needed for a limited range of tasks, when many HDDs (far more than 4) are assembled into huge arrays. For most users, RAID 1 is the best option. For example, four disks of 3 terabytes each in RAID 1 give 6 terabytes of usable capacity. RAID 5 would give more space in this case (9 terabytes), but the access speed would drop dramatically. RAID 6 would give the same 6 terabytes at even lower access speed, and it would also require an expensive controller.

Add more disks and the picture changes. Take eight disks of the same capacity (3 terabytes each). In RAID 1, only 12 terabytes are available for writing; half of the total is taken up by the copies. RAID 5 in this example gives 21 terabytes of disk space and can recover the data after the failure of any one hard drive. RAID 6 gives 18 terabytes and can recover the data after the failure of any two drives.
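The capacity figures in these two examples can be checked with a few lines of arithmetic. This is a minimal sketch under the assumptions used in the text: equal-size disks, a mirrored array that keeps two copies of everything, one disk's worth of parity for RAID 5, and two for RAID 6. The function and level names are hypothetical.

```python
def usable_tb(level: str, disks: int, disk_tb: int) -> int:
    """Usable capacity in terabytes for an array of equal-size disks."""
    if level == "mirror":   # RAID 1 / RAID 10: half the disks hold copies
        return disks // 2 * disk_tb
    if level == "raid5":    # one disk's worth of capacity goes to parity
        return (disks - 1) * disk_tb
    if level == "raid6":    # two disks' worth of capacity go to parity
        return (disks - 2) * disk_tb
    raise ValueError(f"unknown level: {level}")


for disks in (4, 8):
    sizes = [usable_tb(level, disks, 3) for level in ("mirror", "raid5", "raid6")]
    print(disks, sizes)
# 4 [6, 9, 6]
# 8 [12, 21, 18]
```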

In general, RAID is not cheap, but personally I would like to have a first-level RAID of 3-terabyte disks at my disposal. There are even more sophisticated schemes, such as RAID 60, a "RAID of RAID arrays", but they make sense only with a large number of HDDs: at least 8, 16, or 30. You must admit that this is far beyond ordinary "household" use; such arrays are found mostly in servers.
