1. Introduction

Every year the volume of the Internet is increasing significantly, so the likelihood of finding the necessary information increases dramatically. The Internet unites millions of computers, many different networks, the number of users is increasing by 15-80% annually. And, nevertheless, more and more often when accessing the Internet, the main problem is not the lack of the required information, but the ability to find it. As a rule, an ordinary person, due to various circumstances, cannot or does not want to spend more than 15-20 minutes looking for the answer he needs. Therefore, it is especially important to correctly and competently learn a seemingly simple thing - where and how to look in order to get the DESIRED answers.

To find the information you need, you need to find its address. For this, there are specialized search servers (index robots (search engines), thematic Internet directories, meta-search systems, people search services, etc.). In this master class, the main technologies for finding information on the Internet are revealed, general features of search tools are provided, and the structures of search queries for the most popular Russian-speaking and English-language search engines are considered.

2. Search technologies

Web-technology World Wide Web (WWW) is considered a special technology for preparing and posting documents on the Internet. The WWW includes web pages, electronic libraries, catalogs, and even virtual museums! With such an abundance of information, the question arises: "How to navigate in such a huge and large-scale information space?"

Search tools come to the rescue in solving this problem.

2.1 Search tools

Search tools are special software, the main purpose of which is to provide the most optimal and high-quality search for information for Internet users. Search tools are hosted on special web servers, each of which performs a specific function:

- Analysis of web pages and entering the analysis results into one or another level of the search engine database.

- Search for information on the user's request.

- Providing a convenient interface for searching information and viewing the search result by the user.

The methods of work used when working with various search tools are practically the same. Before moving on to discussing them, consider the following concepts:

- The search tool interface is presented as a page with hyperlinks, a query submission line (search bar) and query activation tools.

- The search engine index is an information base containing the result of the analysis of web pages, compiled according to certain rules.

- A query is a keyword or phrase that a user enters into the search bar. To form various queries, special characters ("", ~), mathematical symbols (*, +,?) Are used.

The information search scheme on the Internet is simple. The user types a key phrase and activates the search, thereby receiving a selection of documents for a formulated (specified) query. This list of documents is ranked according to certain criteria so that at the top of the list are those documents that most closely match the user's request. Each of the search tools uses different criteria for ranking documents, both when analyzing search results and when forming an index (filling an index database of web pages).

Thus, if you specify the same query in the search string for each search tool, you can get different search results. For the user, it is of great importance which documents will appear in the first two or three dozen documents according to the search results and to what extent these documents correspond to the user's expectations.

Most search tools offer two ways to search - simple search(simple search) and advanced search(advanced search) with and without a special request form. Let's consider both types of search using the example of an English-language search engine.

For example, AltaVista is useful for ad hoc queries, "Something about online degrees in information technology," while Yahoo's search tool allows you to get world news, currency exchange rates, or weather forecasts.

Mastering the criteria for refining a request and advanced search techniques allows you to increase the search efficiency and quickly find the necessary information. First of all, you can increase the search efficiency by using logical operators (operations) Or, And, Near, Not, mathematical and special symbols in queries. With the help of operators and / or symbols, the user associates keywords in the desired sequence to get the most appropriate search result. The request forms are shown in Table 1.

Table 1

A simple query gives a number of links to documents, since the list includes documents containing one of the words entered during the query, or a simple phrase (see table 1). The and operator allows you to indicate that all keywords should be included in the content of the document. However, the number of documents may still be large and it will take a long time to review them. Therefore, in some cases it is much more convenient to use the near context operator, which indicates that words should be located in the document in sufficient proximity. Using near significantly reduces the number of documents found. The presence of the "*" symbol in the query string means that a word will be searched by its mask. For example, we get a list of documents containing words starting with "gov" if we write "gov *" in the query string. These can be the words government, governor, etc.

The equally popular search engine Rambler keeps statistics on link traffic from its own database, the same logical operators AND, OR, NOT, metacharacter * (similar to the * character expanding the query range in AltaVista), coefficient symbols + and - are supported, to increase or decrease the significance words entered into the query.

Let's take a look at the most popular technologies for finding information on the Internet.

2.2 search engines

Web search engines are servers with a huge database of URLs that automatically access WWW pages at all these addresses, examine the contents of these pages, form and write keywords from the pages into their database (index pages).

Moreover, search engine robots follow the links found on the pages and re-index them. Since almost any WWW page has many links to other pages, then with this kind of work, the search engine in the end result can theoretically bypass all sites on the Internet.

It is this type of search tools that is the most famous and popular among all Internet users. Everyone has heard the names of well-known web search engines (search engines) - Yandex, Rambler, Aport.

To use this type of search tool, you need to go to it and type in the search bar the keyword you are interested in. Next, you will receive results from links stored in the search engine database that are closest to your query. To make your search most effective, pay attention to the following points in advance:

- decide on the subject of your request. What exactly do you ultimately want to find?

- pay attention to language, grammar, use of various non-letter symbols, morphology. It is also important to correctly formulate and enter keywords. Each search engine has its own form of composing a query - the principle is the same, but the symbols or operators used may differ. Required forms of inquiry also differ depending on the complexity of the software of the search engines and the services they provide. One way or another, each search engine has a "Help" section, where all syntax rules, as well as recommendations and tips for searching, are easily explained (screenshot of search engine pages).

- use the capabilities of different search engines. If you can't find it on Yandex, try it on Google. Use the advanced search services.

- to exclude documents containing certain terms, use the "-" sign in front of each such word. For example, if you need information about Shakespeare's works, except for "Hamlet", then enter a query in the form: "Shakespeare-Hamlet". And in order to, on the contrary, in the search results necessarily include certain links, use the "+" symbol. So, to find links about the sale of cars, you need the query "sale + car". To increase the efficiency and accuracy of your search, use combinations of these symbols.

- each link in the list of search results contains - several lines from the found document, among which there are your keywords. Before clicking on the link, evaluate the correspondence of the snippet to the subject of the request. After clicking on a link to a specific site, carefully take a look at the main page. As a rule, the first page is enough to understand whether you have come to the address or not. If yes, then conduct further searches for the necessary information on the selected site (in the sections of the site), if not, return to the search results and try the next link.

- remember that search engines do not produce information on their own (except for clarifications about themselves). The search engine is only an intermediary between the owner of the information (site) and you. Databases are constantly updated, new addresses are added to them, but the lag behind the information actually existing in the world still remains. Simply because search engines don't work at the speed of light.

The most famous web search engines include Google, Yahoo, Alta Vista, Excite, Hot Bot, Lycos. Among the Russian speakers, you can distinguish Yandex, Rambler, Aport.

Search engines are the largest and most valuable, but far from the only sources of information on the Web, because besides them, there are other ways to search the Internet.

2.3 directories

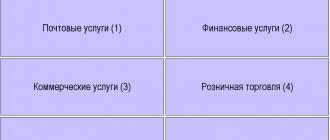

The catalog of Internet resources is a constantly updated and replenished hierarchical catalog containing many categories and individual web servers with a brief description of their contents. The way of searching the catalog implies "going down the stairs", that is, moving from more general categories to more specific ones. One of the advantages of subject catalogs is that explanations for the links are given by the creators of the catalog and fully reflect its content, that is, it gives you the opportunity to more accurately determine how the content of the server corresponds to your search purpose.

An example of a thematic Russian-language catalog is the resource http://www.ulitka.ru/.

On the main page of this site there is a thematic rubricator,

with the help of which the user enters the heading with links to the products of interest to him.

In addition, some subject directories allow keyword searches. The user enters the required keyword into the search bar

and gets a list of links with site descriptions that best match his request. It should be noted that this search does not take place in the contents of WWW servers, but in their brief descriptions stored in the catalog.

In our example, the catalog also has the ability to sort sites by the number of visits, alphabetically, by date of entry.

Other examples of Russian-language directories:

[email protected]

Weblist

Vsego.ru

Among the English-language catalogs are:

http://www.DMOS.org

http://www.yahoo.com/

http://www.looksmart.com

2.4 Link collections

Link collections are links sorted by topic. They are quite different from each other in terms of content, so in order to find a selection that most fully meets your interests, you need to go through them yourself in order to form your own opinion.

As an example, we will give the Selection of links "Treasures of the Internet" of JSC "Relcom"

User by clicking on any of the headings that interest him

For motorists

- Astronomy and astrology

- Your home

- Your pets

- Children are flowers of life

- Leisure

- Cities on the Internet

- Health and medicine

- News agencies and services

- Local history museum, etc.,

- Automotive electronics.

- Automotive Museum of Antiquity.

- Collegium of Legal Protection of Car Owners.

- Sportdrive.

The advantage of this type of search tools is their purposefulness, usually the selection includes rare Internet resources selected by a specific webmaster or owner of an Internet page.

2.5 addresses database

Address databases are special search servers that usually use classifications by type of activity, by products and services provided, by geography. Sometimes they are supplemented with an alphabetical search. The database records store information about sites that provide information about the email address, organization, and postal address for a fee.

The largest English-language address database can be called: http://www.lookup.com/ -

Getting into these subdirectories, the user discovers links to sites that offer information of interest to him.

We do not know of widely available and official databases of addresses in the Russian Federation.

2.6 Searching the Gopher archives (Gopher archives)

Gopher is an interconnected server system (Gopher space) distributed over the Internet.

The Gopher space contains the richest literary library, but the materials are not available for remote viewing: the user can only view a hierarchically organized table of contents and select a file by name. With the help of a special program (Veronica), such a search can be done automatically, using queries based on keywords.

Until 1995, Gopher was the most dynamic technology on the Internet, with the growth in the number of related servers outstripping the growth of all other types of Internet servers. In the EUnet / Relcom network, Gopher servers have not received active development, and today almost no one remembers them.

2.7 FTP Search System

The FTP file search engine is a special type of Internet search engine that allows you to find files available on "anonymous" FTP servers. FTP is designed to transfer files over a network, and in this sense, it is functionally a kind of analogue of Gopher.

The main search criterion is the file name, specified in different ways (exact match, substring, regular expression, etc.). This type of search, of course, cannot compete with search engines in terms of capabilities, since the contents of files are not taken into account in the search in any way, and files, as you know, can be given arbitrary names. Nevertheless, if you need to find some well-known program or description of the standard, then with a high degree of probability the file containing it will have an appropriate name, and you can find it using one of the FTP Search servers:

FileSearch searches for files on FTP servers by the names of the files and directories themselves. If you are looking for any program or something else, then on WWW-servers you will most likely find their descriptions, and from FTP-servers you can download them to yourself.

2.8 Usenet News conference search engine

USENET NEWS is a teleconferencing system for the Internet community. In the West, this service is usually called news. A close analogue of teleconferences are the so-called "echoes" in the FIDO network.

From a teleconference subscriber's point of view, USENET is a bulletin board that has sections where you can find articles on anything from politics to gardening. This bulletin board is accessible via a computer like email. Without leaving your computer, you can read or post articles in one or another conference, find useful advice or enter into discussions. Naturally, articles take up space on computers, so they are not stored forever, but are periodically destroyed, making room for new ones. Worldwide, the best service for finding information in Usenet newsgroups is the Google Groups server (Google Inc.).

Google Groups is a free online community and discussion group service that offers the largest archive of Usenet posts on the Internet (over a billion posts). For more information, please visit http://groups.google.com/intl/ru /googlegroups/tour/index.html

Among the Russian speakers, the USENET server and the Relcom teleconferences stand out. Just like in other search services, the user types in a query string, and the server generates a list of conferences containing keywords. Next, you need to subscribe to the selected conferences in the news program. There is also a similar Russian server FidoNet Online: Fido conferences on WWW.

2.9 Meta-search engines

For a quick search in the databases of several search engines at once, it is better to turn to meta-search engines.

Meta-search engines are search engines that send your query to a huge number of different search engines, then process the results obtained, remove duplicate resource addresses and represent a wider range of what is presented on the Internet.

The most popular meta-search engine in the world is Search.com.

The combined search engine Search.com of CNET, Inc. includes almost two dozen search engines, links to which are replete with the entire Internet.

Using this type of search tools, the user can search for information in a variety of search engines, however, the negative side of these systems can be called their instability.

2.10 People search engines

People search systems are special servers that allow you to search for people on the Internet, the user can specify the full name. person and get his email address and url. It should be noted, however, that people search engines mainly take information about email addresses from open sources such as Usenet newsgroups. Among the most famous people search systems are:

Search for e-mail addresses

in the special columns of the search for contact details (First Name. City, Last Name, Phone number), you can find the information you are interested in.

People search systems are really big servers, their databases contain about 6,000,000 addresses.

3. Conclusion

We examined the main technologies for finding information on the Internet and presented in general terms the search tools that exist at the moment on the Internet, as well as the structure of search queries for the most popular Russian-speaking and English-speaking search engines and, summing up the above, we want to note that a single optimal scheme there is no search for information on the Internet. Depending on the specifics of the information you need, you can use the appropriate search tools and services. And the quality of search results depends on how well the search services are selected.

In the general case, the search for the query phrase is carried out on the Internet pages, and using certain criteria and algorithms, the search results are ranked and presented to the user. The most commonly used search engine ranking criteria are:

- the presence of words from the request in the document, their number, proximity to the beginning of the document, proximity to each other;

- the presence of words from the request in the headings and subheadings of documents;

- the number of references to this document from other documents;

- "respectability" of the referenced documents.

As you can see from the ranking criteria, the real criterion of the relevance of a document - the presence of words from the query (search phrase) - does not significantly affect its rank in the search results. This situation leads to a decrease in the quality of search, as potentially more useful documents are inevitably pushed aside by their “optimized” competitors to the bottom of the list. Indeed, many have come across the fact that really useful resources in search engines are located on the second third page of a search query. This is where the inefficiency of the algorithms for ranking the found documents is manifested. This is largely due to the fact that search queries, on average, consist of only three to five words, that is, there is simply not enough initial information to effectively rank the results.

And here are the problems when searching ...

This is where the 100% efficiency of the algorithms for ranking the found documents is not manifested. Of course, this situation also arises because user searches on average consist of only three to five words. That is, such initial information for search engines is too scarce for effective SERP ranking.

The second problem is how to process such a large amount of information (\u003d "digest", "consider", "highlight the main thing", "weed out unnecessary and useless") for a specific user, taking into account his needs, meaning and topic of the request his previous search history, geographic location, his views on search results, etc. Of course, search engines are actively developing in this direction, but it is obvious that the search engine is far from perfect. Because, today, only a person can evaluate the semantic usefulness, quality, specificity of the information found, etc.

Alternatives to search engines

Therefore, as an alternative, services appear that somehow structure the Internet to facilitate the search for the information the user needs. And at the moment there are already social bookmarks, directories, torrent trackers, forums, specialized search engines, file sharing, etc. All these services, to one degree or another, structure the Internet and "reduce the distance" between the user and the information he needs (be it movies, music, books, answers to questions, etc.). And what is most important, it is mainly the users themselves who "structure the Internet".

No, there is no hint here that search engines are useless or not very effective. I believe that search engines are ideal for finding superficial and most popular information. And to search for deeper information, including useful books, articles, magazines, music, etc. (meaning with the ability to download all of this) the above-mentioned resources "structuring the Internet" are more suitable.

How to avoid getting lost on the Internet?

Briefly:

1.To search for surface information, use search engines, for example http://google.com , http://yandex.ru, http://nigma.ru, http://nibbo.com

2.To search for sites relevant to the topic, use Internet directories, for example,

Finding the information you need on the Internet is often difficult. The Internet is developing chaotically, there is no clearly defined structure. No one can guarantee that on one domain there will be only information of a certain topic, and on the other - information of another, but also clearly defined topic. For example, on the .com domains you can find not only commercial information, but, for example, various documentation on software products or even anecdotes.

If the domain structure were similar to the directory structure, for example, in the ru.comp.os.linux domain (as in the news system) there would be all information about the Linux operating system in Russian and some moderator organization would make sure that other domains did not provide information about Linux, then the search would be much easier. After all, we would know where to look. You open a browser, enter ru.comp.os.linux and you get ... millions of different links to articles, HOWTOs and other information related to Linux in one way or another.

Search efficiency

- Search efficiency depends on many factors:

- From the information itself - there can be a lot of information on one topic, but little on another. Sometimes you can find a lot of information on a given topic, but the efficiency of this search will be close to 0.0%, and you can find only 3-4 links, and this will be just what you need. This also includes the ability of the webmaster to correctly submit information so that the search engines themselves can find it. Suppose that somewhere very far away there is the information you need, but the search engine does not know anything about it. Perhaps the information has just been published, or the webmaster who published the information is simply unaware of the existence of search engines. You are looking for information using a search engine. If she does not "know" the information you need, then, consequently, you will not know anything about her either.

- From a search engine - there are many search engines and they are all different. Even if they are of the same type (we will talk about the types of search engines a little later), undoubtedly, each of them will have its own algorithm. If you cannot find information using one search engine, try searching it using another. Don't get hung up on one search engine, however much you like it.

- A lot depends on the ability to use a search engine - on how you are able to use a search engine. If you don't know how to use a search engine, search is unlikely to be effective.

How to search for information correctly

Since most often you do not select the site you need from the search engine directory, but enter a specific keyword (or several keywords), then you need to specify this keyword as specifically as possible. The more accurately you define the subject of your search, the more accurate the result will be. A search engine cannot guess your thoughts; you need to clearly indicate to it what you are looking for.

Each search engine has its own syntax that you need to know. This chapter will describe the syntax of the Google, Yandex and Rambler search engines. If you want to use another search engine, you can find out its syntax on its own website (usually it is described in detail).

Internet search engines

Now let's talk about the search engines themselves.

On the territory of the former CIS, the following search engines are the most popular, according to SpyLog (Openstat):

- 1. Yandex (www.yandex.ru);

- 2. Google (www.google.com);

- 3. [email protected] (go.mail.ru);

- 3. Rambler (www.rambler.ru);

- 5. Yahoo! (www.yahoo.com);

- 6. AltaVista (www.altavista.com);

- 7. Bing (www.bing.com).

Search engines are listed in descending order of popularity. As you can see, the most popular among us is the Yandex search engine.

Search engine types

- There are two main types of search engines:

- indexed - Google, AltaVista, Rambler, HotBot, Yandex, etc.

- classification (catalog) - Rambler, Yahoo! and etc.

Do not be surprised that the Rambler search engine is listed twice - it was both index and classification at the same time. We will return to this later, but for now let's talk about the differences between these two systems.

How does an index search engine work? The search engine launches a special program that scans the content of web servers, indexing information: it enters into its database the keywords of a particular web page, some information from a web page.

A brief history of Google

Let's start with the title. Google is a slightly modified version of the word googol (it is not for nothing that it is often called "google"). In turn, this word was introduced by Milton Sirota, nephew of the famous mathematician Edward Kasner, and then was popularized in the book of Kasner and Newman "Mathematics and the Imagination". The word "googol" displays a number with one one and 100 zeros. The name "Google" represents an attempt to organize the vast amount of information on the Web.

So let's start from the very beginning. Future Google developers Sergey Brin and Larry Page met in 1999 at Stanford University. Then Larry was 24 years old, and Sergei - 23. Larry at that time was a student at the University of Michigan and came to Stanford for a few days. Sergei was in a group of students that was supposed to acquaint the guests with the university. From the first meeting, Sergey and Larry, to put it mildly, disliked each other - they argued about everything that could be argued about. Although in the end this turned out to be a positive moment, since their different opinions led to the creation of an algorithm for solving one of the most pressing computer problems: finding the necessary information among a huge array of data. In January 1996, Larry and Sergey began work on the BackRub search engine, which was supposed to analyze the "back" links pointing to this website. Work on this server was carried out in a constant lack of funds - after all, at that time Sergey and Larry were graduate students of the university - you yourself understand that graduate students do not have very much funds. By the way, Larry took part in such a serious project for the first time, and before that he was engaged in all sorts of "frivolous", even sometimes anecdotal projects, for example, he built a working printer from a Lego set.

Google search algorithms

Google's interface is striking in its simplicity: an input field and two buttons. As they say, all ingenious is simple.

Google special (extended) syntax

In addition to boolean operators, Google provides you with the search modifiers listed in the table. Search modifiers are called special Google syntax. Take this table seriously: once you try to search for something using modifiers, you will not give up on them.

Google inurl modifier

The inurl modifier is used to search for the specified URL. And unlike the site modifier, which allows you to search for information only on one site or domain, the inurl modifier allows you to search for information in site subdirectories, for example:

inurl: siteskype-zvonim-besplatno

The inurl modifier allows you to use the * character to specify a domain, for example:

inurl: "* .redhat.com"

It's best to use inurl in conjunction with site. The next request will search for information in the gidmir.ru domain, on all its subdomains, except www:

site: gidmir.ru inurl: "* .gidmir" -inurl: "www.gidmir.ru"

Google Search Language

Google allows mixed syntax, i.e. a syntax that uses several special search modifiers in a query. This allows you to achieve the best possible result.

Here's the simplest example of mixed syntax:

site: ru inurl: disc

In this case, the search will be performed on the domain's sites, and the URL must contain the word disc.

Here's another example:

site: ru -inurl: оrg.ua

The search will be performed on the sites of the ru domain, but the search results will not contain pages located on org.ua.

Google searches

For most ordinary Google users, the 10 key limit is not noticeable. But fans of long search terms have probably noticed that Google takes into account only the first 10 keywords, and all the rest are simply ignored.

Why search for long phrases? In most cases, these are excerpts from works. Suppose we are looking for the work "The Master and Margarita". It should be noted that the keyword phrase should look like "Master Margarita", since the words and, or, and, of, or, I, a, the and some others are ignored by the search engine. If you want to force one of these words to be included in the search, precede the word with a "+" sign, for example + the.

Correct query construction allows to overcome the limit of 10 words. The following guidelines will help you not only shorten your query length, but also improve your overall search performance.

Advanced Google Search

We type in the browser input line the address - www.google.ru/advanced_search and go to the advanced Google search.

With an advanced search, you can search for information almost as flexibly as with search modifiers. Why "almost"? The advanced search interface does not provide access to all search modifiers.

Setting Google Search Properties in Browser Cookies

I don't want to boggle your head with technical details, so I will briefly tell you what Cookies are and not, there is nothing to eat them with, but how you need to work with them.

Let's imagine that we are faced with the following task: we need to write an individual visit report for each client of our company's website. That is, so that the user does not see the total number of visits, he knows exactly how many times he has been on our site. For each IP address, you need to keep records in one table, which is likely to be large, and from this it follows that we are wasting CPU time and disk space. It would be much more correct on our part to use this space with greater benefit.

Google search result

A Google search result is not just a collection of links that match specified search terms. This is something more that deserves separate consideration. Enter the word "rusopen" and click on the Google Search button.

At the top, we see the total number of results (883,000,000) and the total time that the search took, namely 0.34 seconds.

- In most cases, the result is presented as:

- page title;

- page description;

- Page URL;

- page size;

- date of the last indexing of the page;

Google image search

Google Images allows you to find various images on the Internet. Although the images themselves cannot be indexed, pages containing those images are indexed. Enter a description of the image and you will get many, many links, as well as the images themselves, presented in the form of a gallery.

- For a more efficient image search, use the following search modifiers:

- intitle: - search in the page title;

- filetype: - allows you to specify the type of picture, you can specify the following types: JPEG and GIF, not BMP, PNG, images of other types are not indexed;

- inurl: - search by the specified URL, for example inurl: www.gidmir.ru;

- site: Searches the specified domain or site, for example site: com.

Google services

Google is a powerful search engine with over 3 billion pages. In addition to regular web pages, Google indexes files in Word, Excel, PowerPoint, PDF, and RTF formats. You can also use Google to search for pictures and phone numbers by Google Images and Phonebook, respectively. In this article, we will talk about Google Special Services.

Google email

Try using Google mail. It should be noted that this is not exactly your regular webmail.

- Among the features of Gmail are the following:

- huge mailbox size - more than 7 GB;

- instead of deleting letters, you can archive them - then you have enough space for a long time, and you can restore letters that were received or sent by you several years ago;

- the ability to search your mailbox with Google efficiency;

- convenient organization of letters and replies to them: all letters and responses form one chain, which is easy to track;

- good spam protection;

- memorable address [email protected];

- user-friendly interface.

Rambler search engine

Rambler history

It all started back in 1991 in the town of Pushchino, Moscow Region. In that distant year, a group of like-minded people gathered, among whom were Dmitry Kryukov, Sergey Lysakov, Viktor Voronkov, Vladimir Samoilov, Yuri Ershov. The general interest of this group was the Internet. Probably, in 1991, none of the future Rambler developers imagined that they would become the creators of one of the largest and most famous search engines on the Runet. After all, before that, they all served radio devices at the Institute of Biochemistry and Physiology of Microorganisms of the Russian Academy of Sciences. In 1992, the "Stack" company was created, headed by Sergei Lysakov. The company's profile is local networks and the Internet. In essence, Stack was an Internet service provider. The firm created an intracity network, then connected Pushchino to Moscow, and through it - to the Internet. By the way, this was the first IP channel to go outside Moscow. And this is in 1992! Now it is quite problematic to lay a channel - there will always be a lot of nuances, but then the cables had to be laid independently, manually, underground, and all this was done in winter.

How Rambler search worked

The Internet is constantly evolving: the number of sites and their sizes are increasing every day. After all, just imagine: large sites are updated every day, even if the volume of updates is 1024 bytes (1 KB), then if we assume that there are 10,000 such sites, every day the search engine has to process (index) 10,000 KB (roughly speaking, 10 MB ) information. The number 10,000 is taken "from the ceiling" - for example. It can be higher or lower - even large sites are not updated every day. The size of the update is also far-fetched. Imagine an information and analytical site where new articles are published almost every day or materials from other sites are reprinted. In this case, the size of updates will be far from 1 KB, but at least 10. Add to all this news and other information and it turns out that with the number of updated sites 10,000, the search engine should index 120 MB of text. And with all this, the search engine must not only accurately display the search results, but also do it as quickly as possible so that the user is comfortable working with it. Who wants to wait 10 minutes for search results? Of course, I'm exaggerating this, but personally I would not wait for search results more than 30 seconds (from the moment you click on the Find button until the first ten results appear). It turns out that the developers of the search engine have to constantly maintain at the proper level not only "hardware", which should be able to process the constantly growing volumes of information, but "mathematics" cannot be taken by hardware alone. It is necessary to constantly improve search algorithms so that with an increase in the volume of the search base, the search time does not increase (meaning a significant increase in time - it makes no difference for the user how long the search will be performed for 2.5 seconds or 2.0555 seconds, since he is not able to estimate this time).

Rambler queries, Rambler syntax

A request to Rambler could consist of one or more words, and the request could contain punctuation marks. Rambler developers have designed their search engine for maximum user convenience. Rambler could be used even by an inexperienced user who is not at all familiar with the query language. All he had to do was enter a query consisting of several words (for example, some phrase) and without punctuation marks - Rambler himself found the necessary documents, and he did it as efficiently as possible. Of course, if the query language was used correctly, the efficiency increased significantly, but even with a complete ignorance of the query language, the search efficiency was at a high level. As already noted, knowledge of the query language is in your best interest, you can simply find the information you need much faster.

Yandex search engine (Яndex)

Historical reference

In the distant 1990, the development of search software began at the Arcadia company, which was headed by Arkady Borkovsky and Arkady Volozh. Six years later, the Yandex website appeared. But what happened in these six years?

In two years, two information retrieval systems were created - "International Classification of Inventions" and "Classifier of Goods and Services". Both systems worked under DOS and allowed searching for a word from a given dictionary using logical operators.

In 1993 Arcadia became a CompTek division. In 1993-1994, search technologies have significantly improved, for example, a dictionary that provides a search taking into account the morphology of the Russian language occupied only 300 KB, which means that it freely fit into RAM, and work with it was very fast. On the basis of this new technology, in 1994, the "Bible Computer Reference Book" was created - an information retrieval system that works with translations of the Old and New Testaments.

Yandex language search

How will the search engine interpret the word you entered?

- Now we'll talk about this:

- Rule 1. It turns out that the system interprets it according to the rules of the Russian language. Example: If you entered the word "car", you will also get results containing the words "cars", "car", etc. The same is with verbs - upon request "to go" you will receive documents containing the words "to go", "is going", "walked", "walked", etc. As you can see, the search engine is more intelligent than you thought - it is not just a means of finding a specific word in a database.

- Rule 2. Particular attention is paid to words written with a capital letter. If a word is capitalized and is not the first in a sentence, only capitalized words will be found. Otherwise, words will be found written with both capital and small letters. Example: on request "Tax A." documents containing both "tax" (fee) and "Tax" (surname) will be found, since the word "Tax" is written with a capital letter, but it is the first in the sentence. But on the request "A. Taxa" documents will be found containing only the word "Taxa", written with a capital letter.

Yandex syntax

By default, Yandex uses the logical operator AND. This means that if you entered the query "Samsung TV", then in the results you will receive documents in which the words "TV" and "Samsung" will be found in the same sentence. If you want to specify the AND operator explicitly, use the ampersand & character. In other words, the query "Samsung TV" is the same as the query "TV & Samsung". You can also use the query "TV + Samsung".

If you want the opposite effect i.e. if you want to get documents in which there is a separate word "TV" and a separate word "Samsung", then you need to use the OR operator (|), for example: "TV | Samsung".

Yandex query syntax

Yandex numbers all words in the text of the document in order. The distance between adjacent words is 1 (not 0!), And the distance between words in reverse order is -1. The same goes for offers.

To indicate the distance between words, a / is placed, followed by a number immediately, which means that this is the distance between words. For example, the query "developer / 2 programs" will find documents that contain the words "developer" and "programs", and the distance between words should be no more than two words and all these words should be in one sentence. In this case, documents containing "application developer", "system software developer", etc. will be found.

If we know exactly the distance and word order, then we can use the syntax / + n. For example, the query "red / + 1 cap" will result in the word "cap" immediately following the word "red". The query "little red riding hood" would lead to the same result.

Yandex search operators

Brackets are used to represent an entire expression in a query. For example, the query "(history | technology | programs) / + 1 Linux" will find documents containing one of the phrases "Linux history", "Linux technology", "Linux programs".

Zones

The zone is where you find the information you need. You can specify the zone in which you want to search - titles (Title zone), links (anchors) or address (Address). You can also use the all zone to search the entire document.

Syntax: $ zone_name query.

For example: the query $ title "(! LANG: Microsoft" найдет все документы, в заголовках которых встречается точная фраза "Microsoft".!}

Additional search capabilities Yandex

The Google search engine allowed limiting the search site to a specific list of servers, or, conversely, excluding some servers from the search list. Exactly the same capabilities are available in the Yandex search engine. You can also search for documents that have links to specific URLs or images. When specifying a mask for a file (for example, a picture), you can use the * symbol, which means all symbols, for example: "audi- *".

The syntax is as follows: # item_name \u003d ”value”.

cVyacheslav Tikhonov, November 2000atomzone.hypermart.net

1. Introduction

2. Search engines

2.1. How search engines work 2.2. Comparative review of search engines

3. Search robots

3.1. Using search robots

3.1.1. Statistical analysis 3.1.2. Serving hypertext 3.1.3. Mirroring 3.1.4. Resource Research 3.1.5. Combined use

3.2. Increased costs and potential dangers when using search robots

3.2.1. Network resource and server load 3.2.2. Updating documents

3.3. Robots / client agents

3.3.1 Bad software implementations of robots

4.1. Determination by the robot what information to include / exclude 4.2. The file format is /robots.txt. 4.3. Records of the file /robots.txt 4.4. Extended format comments. 4.5. Determination of the order of movement on the Web 4.6. Summing up the data

5. Conclusion

1. Introduction

The main protocols used on the Internet (hereinafter also the Network) are not provided with sufficient built-in search functions, not to mention the millions of servers located in it. The HTTP protocol used on the Internet is only good for navigation, which is viewed only as a means of viewing pages, not finding them. The same is true for FTP, which is even more primitive than HTTP. With the rapid growth of information available on the Web, navigational browsing methods are rapidly reaching their limit of functionality, not to mention the limit of their effectiveness. Without specifying specific numbers, we can say that it is no longer possible to obtain the necessary information immediately, since there are billions of documents on the Web now and all of them are at the disposal of Internet users, moreover, today their number is increasing according to an exponential dependence. The number of changes to which this information has been subjected is enormous and, most importantly, they have occurred in a very short period of time. The main problem is that there has never been a single full functional system for updating and recording such a volume of information, simultaneously available to all Internet users around the world. In order to structure the information accumulated on the Internet and provide its users with convenient means of finding the data they need, search engines have been created.

2. Search engines

Search engines usually have three components:

an agent (spider or crawler) who travels around the Web and collects information;

a database that contains all the information that spiders collect;

a search engine that people use as an interface to interact with a database.

2.1 How search engines work

Search and structuring tools, sometimes called search engines, are used to help people find the information they need. Search tools such as agents, spiders, crawlers and robots are used to collect information about documents on the Internet. These are special programs that search for pages on the Web, extract hypertext links on these pages and automatically index the information they find to build a database. Each search engine has its own set of rules governing how to collect documents. Some follow every link on every page they find and then, in turn, explore every link on every new page, and so on. Some people ignore links that lead to graphics and sound files, animation files; others ignore references to resources such as WAIS databases; others are instructed to browse the most popular pages first.

Agents are the most intelligent of search engines. They can do more than just search: they can even execute transactions on your behalf. Already, they can search for sites of a specific topic and return lists of sites sorted by their attendance. Agents can process the content of documents, find and index other types of resources, not just pages. They can also be programmed to retrieve information from pre-existing databases. Regardless of the information that the agents index, they pass it back to the search engine database.

General search for information on the Web is carried out by programs known as spiders. The spiders report the content of the found document, index it and extract the summary information. They also look at headers, some links and send the indexed information to the search engine database.

Crawlers look at the headings and only return the first link.

The robots can be programmed to follow various links of varying nesting depths, index and even check links in a document. Because of their nature, they can get stuck in loops, so they need significant Web resources to follow links. However, there are methods designed to prevent robots from searching on sites whose owners do not want them to be indexed.

Agents retrieve and index various types of information. Some, for example, index every single word in a encountered document, while others index only the most important 100 words in each, index the document's size and number of words, title, headings and subheadings, and so on. The type of index built determines what search can be done by the search engine and how the resulting information will be interpreted.

Agents can also navigate the Internet and find information and then put it into the search engine's database. Search engine administrators can determine which sites or types of sites agents should visit and index. The indexed information is sent to the search engine database in the same way as described above.

People can submit information directly to the index by filling out a specific form for the section in which they would like to submit their information. This data is transferred to the database.

When someone wants to find information available on the Internet, he visits a search engine page and fills out a form detailing the information he needs. Key words, dates and other criteria can be used here. The criteria in the search form must match the criteria used by the agents when indexing the information they find while navigating the Web.

The database retrieves the query subject based on the information provided in the completed form and outputs the corresponding documents prepared by the database. The database uses a ranking algorithm to determine the order in which the list of documents will be displayed. Ideally, documents that are most relevant to the user's query will be placed first in the list. Different search engines use different ranking algorithms, but the basic principles for determining relevance are as follows:

The number of query words in the textual content of the document (i.e. in the html code).

Tags where these words are located.

The location of the search words in the document.

The proportion of words for which relevance is determined in the total number of words in the document.

These principles are applied by all search engines. And the ones presented below are used by some, but quite well-known (like AltaVista, HotBot).

Time - how long the page has been in the search engine database. At first it seems like this is a rather meaningless principle. But, if you think about how many sites exist on the Internet that live a maximum of a month! If the site has existed for quite a long time, this means that the owner is very experienced in this topic and the user is more suitable for a site that has been broadcasting to the world about the rules of table behavior for a couple of years than the one that appeared a week ago with the same topic.

Citation Index - how many links to a given page lead from other pages registered in the search engine base.

The database outputs a similarly ranked list of HTML documents and returns it to the person who made the request. Different search engines also choose different ways to display the resulting list - some only show links; others display links with the first few sentences contained in the document, or the title of the document along with a link.

2.2 Comparative overview of search engines

Lycos ... Lycos uses the following indexing mechanism:

words in title headers take top priority;

words at the beginning of the page;

Like most systems, Lycos allows for a simple query and a more sophisticated search method. In a simple query, a sentence in a natural language is entered as a search criterion, after which Lycos normalizes the query, removing the so-called stop words from it, and only after that starts its execution. Almost immediately, information about the number of documents per word is displayed, and later a list of links to formally relevant documents. The list against each document indicates its closeness measure to the query, the number of words from the query that got into the document, and the estimated closeness measure, which may be more or less formally calculated. While you cannot enter logical operators in a line along with terms, but you can use logic through the Lycos menu system. This opportunity is used to build an advanced request form intended for sophisticated users who have already learned how to work with this mechanism. Thus, it can be seen that Lycos belongs to a system with a query language of the "Like this" type, but it is planned to expand it to other ways of organizing search prescriptions.

AltaVista ... Indexing in this system is carried out by a robot. The robot has the following priorities:

key phrases at the beginning of the page;

key phrases by the number of occurrences of word phrases;

If there are no tags on the page, it uses the first 30 words, which it indexes and shows instead of the description (tag description)

The most interesting feature of AltaVista is its advanced search. It should be noted right away that, unlike many other systems, AltaVista supports the single NOT operator. In addition, there is also the NEAR operator, which implements the contextual search capability when terms should be placed side by side in the text of the document. AltaVista allows searching by key phrases, while it has a fairly large phraseological dictionary. Among other things, when searching in AltaVista, you can specify the name of the field where the word should occur: hypertext link, applet, image name, title, and a number of other fields. Unfortunately, the ranking procedure is not described in detail in the documentation for the system, but it can be seen that ranking is applied both for a simple search and for an extended query. Actually, this system can be classified as a system with an extended boolean search.

Yahoo ... This system was one of the first to appear on the Web, and today Yahoo cooperates with many manufacturers of information retrieval tools, and different software is used on its various servers. Yahoo's language is quite simple: all words must be entered separated by a space, they are connected by a linkage AND or OR. When issuing, the degree of compliance of the document with the request is not indicated, but only the words from the request that are found in the document are underlined. This does not normalize the vocabulary and does not analyze for "common" words. Good search results are obtained only when the user knows that the information is in the Yahoo database for sure. The ranking is based on the number of query terms in the document. Yahoo belongs to the class of simple traditional systems with limited search capabilities.

OpenText ... The OpenText information system is the most commercialized information product on the Web. All descriptions are more like advertisements than informative job guides. The system allows searching using logical connectors, but the size of the request is limited to three terms or phrases. In this case, we are talking about advanced search. When issuing results, the degree of compliance of the document with the request and the size of the document are reported. The system also allows you to improve search results in the style of a traditional boolean search. OpenText could be classified as a traditional information retrieval system if not for the ranking mechanism.

Infoseek ... In this system, a robot creates an index, but it does not index the entire site, but only the specified page. Moreover, the robot has the following priorities:

words in title title have the highest priority;

words in the keywords, description tag and the frequency of occurrences of repetitions in the text itself;

when repeating the same words next to each other, throws them out of the index

Allows up to 1024 characters for the keywords tag, 200 characters for the description tag;

If no tags were used, index the first 200 words on the page and use it as a description;

The Infoseek system has a rather developed information retrieval language, which allows not only to indicate which terms should be found in documents, but also to weigh them in a peculiar way. This is achieved using special signs "+" - the term must be in the document, and "-" - the term must be absent in the document. In addition, Infoseek allows for what is called contextual search. This means that using a special query form, you can require consistent co-occurrence of words. You can also specify that some words should appear together not only in one document, but even in a separate paragraph or heading. It is possible to specify key phrases that represent a single whole, down to word order. Ranking when issuing is carried out by the number of query terms in the document, by the number of query phrases minus common words. All of these factors are used as nested procedures. To summarize briefly, we can say that Infoseek belongs to the traditional systems with an element of weighting of terms when searching.

WAIS ... WAIS is one of the most sophisticated search engines on the Internet. Only fuzzy set search and probabilistic search are not implemented in it. Unlike many search engines, the system allows not only building nested boolean queries, calculating formal relevance by various measures of proximity, weighing query and document terms, but also correcting the query by relevance. The system also allows you to use term truncation, splitting documents into fields, and maintaining distributed indexes. It is no coincidence that this particular system was chosen as the main search engine for the implementation of the Britannica encyclopedia on the Internet.

3. Search robots

In recent years, the World Wide Web has become so popular that now the Internet is one of the main means of publishing information. As the Web grew from a few servers and a small number of documents to enormous limits, it became clear that manually navigating a large part of the hypertext link structure was no longer possible, let alone an efficient method of resource exploration.

This problem has prompted Internet researchers to experiment with automated Web navigation called "robots." A web robot is a program that navigates the hypertext structure of the Web, requests a document, and recursively returns all documents to which the document refers. These programs are also sometimes called "spiders", "wanderers", or "worms" and these names are perhaps more attractive, however, they can be misleading, since the terms "spider" and "wanderer" give the false impression that the robot moves itself and the term "worm" might imply that the robot also reproduces like an Internet worm virus. In reality, robots are implemented as a simple software system that requests information from remote sites on the Internet using standard network protocols.

3.1 Using search robots

Robots can be used to perform a variety of useful tasks such as statistical analysis, hypertext maintenance, resource exploration, or page mirroring. Let's consider these tasks in more detail.

3.1.1 Statistical Analysis

The first robot was created in order to detect and count the number of web servers on the web. Other statistical calculations may include the average number of documents per server on the web, the proportions of certain types of files on the server, the average page size, the degree of linkage, and so on.

3.1.2 Serving Hypertext

One of the main difficulties in maintaining a hypertext structure is that links to other pages can become "dead links" when a page is transferred to another server or deleted altogether. To date, there is no general mechanism that could notify the service personnel of the server, which contains a document with links to such a page, that it has changed or has been removed altogether. Some servers, such as CERN HTTPD, will log failed requests caused by dead links along with a recommendation for the page where the dead link was found, with the expectation that this problem will be resolved manually. This is not very practical, and in reality document authors find that their documents contain dead links only when they are notified directly, or, which is very rare, when the user himself notifies them by email.

A robot like MOMSPIDER that checks links can help the document author find such dead links, and can also help maintain the hypertext structure. Robots can also help maintain the content and structure itself by checking the relevant HTML document, its compliance with accepted rules, regular updates, etc., but this is not usually used. Perhaps this functionality should be built in when writing the HTML document environment, since these checks can be repeated whenever the document changes, and any problems can be resolved immediately.

3.1.3 Mirroring

Mirroring is a popular mechanism for maintaining FTP archives. The mirror recursively copies the entire directory tree via FTP, and then regularly re-quests those documents that have changed. This allows you to distribute the download across multiple servers, successfully handle server failures, and provide faster and cheaper local access, as well as offline access to archives. On the Internet, mirroring can be done using a robot, but at the time of this writing, no sophisticated means existed for this. Of course, there are several robots out there that rebuild the page subtree and save it on the local server, but they don't have the means to update exactly the pages that have changed. The second problem is page uniqueness, which is that links in copied pages must be overwritten where they link to pages that have also been mirrored and may need updating. They should be changed to copies, and where relative links point to pages that have not been mirrored, they should be expanded to absolute links. The need for mirroring mechanisms for performance reasons is greatly reduced by the use of sophisticated caching servers that offer selective upgrades that can ensure that the cached document is not updated and is largely self-serving. However, it is expected that mirroring facilities will develop properly in the future.

3.1.4 Resource Research

Perhaps the most exciting use of robots is in resource exploration. Where people cannot deal with a huge amount of information, it is quite possible to transfer all the work to the computer looks quite attractive. There are several robots that collect information from most of the Internet and feed the results to a database. This means that a user who previously relied solely on manual web navigation can now combine search with page browsing to find the information they want. Even if the database does not contain exactly what he needs, chances are high that this search will find many links to pages, which, in turn, can link to the subject of his search.

The second advantage is that these databases can be automatically updated over a period of time so that dead links in the database are found and removed, as opposed to manual document maintenance, where checking is often spontaneous and incomplete. The use of robots to explore resources will be discussed below.

3.1.5 Combined use

A simple robot can perform more than one of the above tasks. For example, the RBSE Spider robot performs statistical analysis of requested documents and maintains a resource database. However, such combined use is unfortunately very rare.

3.2 Increased costs and potential dangers when using search robots

Robots can be expensive, especially when used remotely on the Internet. In this section, we will see that robots can be dangerous because they place too high demands on the Web.

Robots require significant server bandwidth. First, robots work continuously for long periods of time, often even for months. To speed up operations, many robots make parallel page requests from the server, which subsequently lead to increased use of the server's bandwidth. Even remote parts of the Web can feel the network load on a resource if the robot makes a large number of requests in a short period of time. This can lead to a temporary lack of server bandwidth for other users, especially on servers with low bandwidth, since the Internet does not have any means of load balancing depending on the protocol used.

Traditionally, the Internet was perceived as "free" because individual users did not have to pay to use it. However, this is now called into question, as especially corporate users pay for the costs associated with using the Web. The company may feel that its services to (potential) customers are worth the paid money, but the pages automatically transferred to the robots are not.

In addition to making demands on the Web, the robot also makes additional demands on the server itself. Depending on the frequency with which it requests documents from the server, this can lead to a significant load on the entire server and a decrease in the access speed of other users accessing the server. In addition, if the host computer is used for other purposes as well, this may not be acceptable at all. As an experiment, the author ran a simulation of 20 concurrent requests from his server acting as a Plexus server on a Sun 4/330. For several minutes, the machine, slowed down by the use of the spider, was generally impossible to use. This effect can be felt even by consistently requesting pages.

All this shows that you need to avoid situations with simultaneous page requests. Unfortunately, even modern browsers (like Netscape) create this problem by simultaneously requesting images in the document. The web protocol HTTP has proven ineffective for such transfers, and new protocols are being developed to combat these effects.

3.2.2 Updating documents

As already mentioned, the databases created by the robots can be automatically updated. Unfortunately, there are still no effective mechanisms for monitoring changes taking place on the Web. Moreover, there is not even a simple query that could determine which of the links has been removed, moved or changed. The HTTP protocol provides an "If-Modified-Since" mechanism whereby a user agent can determine when a cached document is modified at the same time as requesting the document itself. If the document has been modified, then the server will only transfer its content, since this document has already been cached.

This tool can only be used by the robot if it maintains the relationship between the totals that are retrieved from the document: it is the link itself and the timestamp when the document was requested. This introduces additional requirements for the size and complexity of the database and is not widely used.

3.3 Robots / client agents

Web loading is a particular problem with the application of the category of robots that are used by end users and implemented as part of a general purpose web client (eg Fish Search and tkWWW robot). One of the features that are common among these robots is the ability to transmit discovered information to search engines as they navigate the Web. This is touted as an improvement in resource exploration methods, as multiple remote databases are automatically queried. However, according to the author, this is unacceptable for two reasons. First, the search operation leads to a greater load on the server than even a simple document query, so the average user can experience significant inconvenience when working on several servers at greater overhead than usual. Second, it is a mistake to assume that the same search keywords are equally relevant, syntactically correct, not to mention optimality for different databases, and the range of databases is completely hidden from the user. For example, a request for "Ford and Garage" might be sent to a database storing 17th century literature, a database that does not support boolean operators, or a database that specifies that queries for cars must begin with the word "car:". And the user doesn't even know it.

Another dangerous aspect of using a client robot is that once it has been distributed over the Web, no errors can be fixed, no knowledge of problem areas can be added, and no new effective properties can improve it, just as not every user can subsequently will upgrade this robot with the latest version.

The most dangerous aspect, however, is the large number of potential robot users. Some people are likely to use such a device sensibly, that is, to be limited to some maximum of links in a known area of \u200b\u200bthe Web and for a short period of time, but there are also people who abuse it out of ignorance or arrogance. According to the author, remote robots should not be shared with end users, and fortunately, so far, it has been possible to convince at least some robot authors not to distribute them openly.

Even without considering the potential danger of client robots, an ethical question arises: where the use of robots can be useful to the entire Internet community to combine all the available data, and where it cannot be applied, since it will benefit only one user.

"Intelligent agents" and "digital assistants" for use by the end user looking for information on the Internet are currently a popular research topic in computer science, and are often seen as the future of the Web. At the same time, this may indeed be the case, and it is already clear that automation is invaluable for resource research, although more research is needed to make them effective. Simple user-controlled robots are very far from intelligent network agents: the agent must have some idea of \u200b\u200bwhere to find certain information (that is, what services to use), instead of blindly searching for it. Consider a situation where a person is looking for a bookstore; he uses the Yellow Pages for the area in which he lives, finds a list of stores, selects one or more of them, and visits them. A customer robot would walk to all stores in the area asking for books. On the web, as in real life, this is ineffective on a small scale, and should be prohibited on a large scale.

3.3.1 Bad software implementations of robots

The load on the network and servers is sometimes increased by poor software implementation, especially of recently written robots. Even if the protocol and links sent by the robot are correct, and the robot handles the returned protocol correctly (including other features like reassignment), there are some less obvious problems.

The author has watched several similar robots control a call to his server. While in some cases the negative effects were caused by people using their site for trials (instead of a local server), in other cases it became apparent that they were caused by the poor writing of the robot itself. In this case, repeated page requests can occur if there are no records of already requested links (which is unforgivable), or when the robot does not recognize when several links are syntactically equivalent, for example, where DNS aliases for the same IP address differ , or where links cannot be processed by a robot, eg "foo / bar / baz.html" is equivalent to "foo / baz.html".

Some robots sometimes ask for GIF and PS documents that they cannot process and therefore ignore.

Another danger is that some areas of the Web are nearly endless. For example, consider a script that returns a page with a link down one level. It will start, for example, with "/ cgi-bin / pit /", and continue with "/ cgi-bin / pit / a /", "/ cgi-bin / pit / a / a /", etc. Because such links can lure a robot into a trap, they are often referred to as "black holes".

4. Problems in cataloging information

It is undeniable that robotic databases are popular. The author directly regularly uses such databases to find the resources he needs. However, there are several problems that limit the use of robots to explore resources on the Web. One of them is that there are too many documents here, and they are all dynamically changing all the time.

One measure of the effectiveness of an information retrieval approach is a "recall", which contains information about all relevant documents that have been found. Brian Pinkerton argues that revocation in Internet indexing systems is a perfectly acceptable approach, as finding sufficiently relevant documents is not a problem. However, if we compare all the information available on the Internet with the information in the database created by the robot, then the feedback cannot be too accurate, since the amount of information is huge and it changes very often. So, in practice, the database may not contain a specific resource that is available on the Internet at the moment, and there will be many such documents, since the Web is constantly growing.

4.1. Determination by the robot what information to include / exclude

The robot cannot automatically determine whether a given web page has been included in its index. In addition, web servers on the Internet can contain documents that are only relevant to the local context, documents that exist temporarily, etc. In practice, robots retain almost all information about where they have been. Note that even if the robot was able to determine if the specified page should be excluded from its database, it has already incurred the overhead of requesting the file itself, and the robot that chooses to ignore a large percentage of documents is very wasteful. In an effort to remedy this situation, the Internet community has adopted the Robot Exception Standard. This standard describes the use of a simple structured text file available at a known location on the server ("/robots.txt") and used to determine which part of their links should be ignored by robots. This tool can also be used to alert robots to black holes. Each type of robot can be given specific commands if it is known that this robot specializes in a specific area. This standard is free, but very simple to implement and has significant pressure on robots to comply.

4.2. The file format is /robots.txt.

The /robots.txt file is intended to instruct all search robots to index information servers as defined in this file, i.e. only those directories and server files that are NOT described in /robots.txt. This file should contain 0 or more records that are associated with one or another robot (as determined by the value of the agent_id field), and indicate for each robot or for all at once what exactly they DO NOT need to index. The person who writes the /robots.txt file must specify the Product Token substring of the User-Agent field, which each robot issues to the HTTP request of the indexed server. For example, the current Lycos robot responds to such a request as the User-Agent field:

Lycos_Spider_ (Rex) /1.0 libwww / 3.1

If the Lycos robot did not find its description in /robots.txt, it does what it sees fit. Another factor to consider when creating the /robots.txt file is file size. Since each file is described that should not be indexed, and even for many types of robots separately, with a large number of files that should not be indexed, the size of /robots.txt becomes too large. In this case, you should use one or more of the following methods to reduce the size of /robots.txt:

specify a directory that should not be indexed, and, accordingly, files that should not be indexed should be located in it

create a server structure taking into account the simplification of the description of exceptions in /robots.txt

specify one indexing method for all agent_id

specify masks for directories and files

4.3. The records of the /robots.txt file

General description of the recording format.

[# comment string NL] *

User-Agent: [[WS] + agent_id] + [[WS] * # comment string]? NL

[# comment string NL] *

# comment string NL

Disallow: [[WS] + path_root] * [[WS] * # comment string]? NL

Options

Description of parameters used in /robots.txt entries

[...] + Square brackets followed by a + sign indicate that one or more terms must be specified as parameters. For example, after "User-Agent:" one or more agent_id can be specified after a space.

[...] * Square brackets followed by a * sign indicate that zero or more terms can be specified as parameters. For example, you can write or not write comments.

[...]? Square brackets followed by a character? means that zero or one terms can be specified as parameters. For example, a comment can be written after "User-Agent: agent_id".

.. | .. means either what is before the line, or what is after.

WS one of the characters - space (011) or tab (040)

NL one of the characters - end of line (015), carriage return (012), or both (Enter)

User-Agent: keyword (capital and uppercase letters do not matter). The parameters are the agent_id of search robots.

Disallow: Keyword (no upper and lower case letters). Parameters are full paths to non-indexed files or directories.

# the beginning of the comment line, comment string - the actual comment body.

agent_id is any number of characters, not including WS and NL, that define the agent_id of various crawlers. The * sign identifies all robots at once.

path_root is any number of characters, not including WS and NL, that define files and directories that should not be indexed.

4.4. Extended format comments.

Each record begins with the User-Agent line, which describes which or which search robot this record is intended for. Next line: Disallow. The paths and files that cannot be indexed are described here. EVERY entry MUST have at least these two lines. All other lines are options. A post can contain any number of comment lines. Each comment line must begin with a # character. Comment lines can be placed at the end of the User-Agent and Disallow lines. The # at the end of these lines is sometimes added to indicate to the crawler that the long agent_id or path_root string has ended. If several agent_ids are specified in the User-Agent line, then the path_root condition in the Disallow line will be fulfilled equally for all. There are no restrictions on the length of the User-Agent and Disallow strings. If the crawler has not found its agent_id in the /robots.txt file, then it ignores /robots.txt.

If you do not take into account the specifics of the work of each search robot, you can specify exceptions for all robots at once. This is achieved by specifying the line

If the search robot finds several records in the /robots.txt file with a matching agent_id value, then the robot is free to choose any of them.