Universal Data Exchange XML Processing

The "Universal data exchange in XML format" processing is intended for exporting data to a file and importing data from a file in any configuration implemented on the 1C: Enterprise 8 platform.

Operating modes

When a managed form is used, the processing has two operating modes:

1. On the client. In this mode, the rules file and the file of data to be loaded are transferred from the client to the server, and the file of unloaded data is transferred from the server to the client. The paths to these files on the client must be specified in a dialog box immediately before the action is performed.

2. On the server. In this mode, files are not transferred to the client, and their paths must be specified on the server.

Note: The external processing file and the exchange protocol files must always be located on the server, regardless of the operating mode.


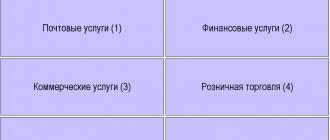

Processing has four tabs

Uploading data

To export (unload) data, specify the name of the file to which the data will be written and select the exchange rules file. Exchange rules for any configuration can be set up in the specialized configuration "Data conversion, edition 2".

To export documents and records of independent periodic information registers, specify the period with "Start date" and "End date". The resulting data file can be compressed.

On the "Data uploading rules" tab, you can select the types of objects to export, set up filters for selecting objects, or specify the data exchange node for which data is to be exported.

On the "Upload Parameters" tab, you can specify additional export parameters.

On the "Comment" tab, you can enter arbitrary comment text to be included in the exchange file.

Loading data

To load (import) data, specify the name of the file from which the data will be loaded.

Loading can be split into transactions. To do this, select the "Use transactions" checkbox and specify the number of items per transaction.

"Load data in exchange mode (DataExchange.Load = True)" - if the checkbox is selected, objects are loaded with the load flag set. This means that all platform and application checks are disabled when the objects are written to the database. The exception is documents written in posting or posting-cancellation mode: posting and cancellation of posting are always performed without the load flag, i.e. the checks are performed.

Additional settings

This tab is used for detailed configuration of data export and import.

"Debug mode" - a flag that enables exchange debug mode. If this flag is set, the exchange process is not stopped when an error occurs. The exchange runs to completion, and debug messages are written to the exchange protocol file. This mode is recommended when debugging exchange rules.

"Output of information messages to the message window" - if the flag is set, the protocol of the data exchange process is displayed in the message window.

"Number of processed objects to update the status" - the number of items that must be processed before the loading / unloading status line is updated.

"Data upload settings" - let you define the number of items processed in one transaction when exporting data, export only those objects for which you have access rights, and configure how change registration is handled for objects exported through exchange plans.

"Use optimized format for data exchange (V8 - V8, processing version not lower than 2.0.18)" - the optimized exchange message format assumes that the message header contains the DataTypeInformation node, which holds information about data types. This speeds up loading.

"Use transactions when uploading for exchange plans" - the flag determines whether transactions are used when data is exported by selecting changes on exchange plan nodes. If the flag is set, the export is performed in a transaction.

"Number of items per transaction" - defines the maximum number of data items placed in a message within one database transaction. If the value is 0 (the default), all data is placed within one transaction. This mode is recommended because it guarantees the consistency of the data placed in the message. However, when a message is created in multi-user mode, lock conflicts may occur between the transaction that places the data in the message and transactions performed by other users. To reduce the likelihood of such conflicts, set this parameter to a non-default value. The lower the value, the lower the likelihood of a lock conflict, but the higher the probability of placing inconsistent data in the message.

"Unload objects for which there are access rights" - if the checkbox is selected, infobase objects are selected taking into account the access rights of the current user. This means that the ALLOWED keyword is used in the query text that retrieves the data.

"Automatically remove invalid characters from strings for writing to XML" - if the flag is set, invalid characters are removed when data is written to an exchange message. Characters are checked against the XML 1.0 recommendation.

"Registration changes for exchange nodes after unloading" - the field defines how change registration is handled after the data export completes. Possible values:

- Do not delete registration - after the data is exported, change registration on the node is not deleted.

- Completely delete registration for an exchange node - after the data is exported, change registration on the node is deleted completely.

- Delete registration only for uploaded metadata - after the data is exported, change registration on the node is deleted only for the metadata objects that were selected for export.
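The three registration modes above can be pictured with a small Python sketch. It is only an illustration, not the code of the processing: the `RegistrationCleanup` enum, the `cleanup_registration` function, and the dictionary layout are hypothetical names chosen for this example.

```python
from enum import Enum


class RegistrationCleanup(Enum):
    # Hypothetical names for the three values described above.
    KEEP = "do not delete registration"
    DELETE_ALL = "completely delete registration for the exchange node"
    DELETE_EXPORTED = "delete registration only for exported metadata"


def cleanup_registration(registered, exported_types, mode):
    """Return the change registrations that remain on the node after export.

    registered: dict mapping a metadata type name to a set of object ids.
    exported_types: metadata type names that were selected for export.
    """
    if mode is RegistrationCleanup.KEEP:
        return dict(registered)
    if mode is RegistrationCleanup.DELETE_ALL:
        return {}
    # DELETE_EXPORTED: keep registrations only for types that were not exported.
    return {
        type_name: ids
        for type_name, ids in registered.items()
        if type_name not in exported_types
    }
```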

"Exchange protocol" - allows you to configure the output of information messages to the message window, maintaining and writing to a separate file of the exchange protocol.

"File name, exchange protocol" - the name of the file to display the protocol of the data exchange process.

"Boot protocol (for COM - connection)" - the name of the file for outputting the protocol of the data exchange process in the receiver base when exchanging through the COM connection. Important: the path to the file must be accessible from the computer on which the target base is installed.

"Append data to exchange protocol" - if the flag is set, the contents of the exchange protocol file are saved if the protocol file already exists.

"Output to the protocol of information messages" - if the flag is set, then messages of an informative nature will be output to the exchange protocol, in addition to messages about exchange errors.

"Open exchange protocol files after performing operations" - if the flag is set, then after data exchange the exchange protocol files will be automatically opened for viewing.

Deleting data

This tab is needed only by developers of exchange rules. It lets you delete arbitrary objects from the infobase.

Debugging data upload and download

Processing allows you to debug event handlers and generate a debug module from a rules file or data file.

The debugging mode for unloading handlers is enabled on the "Data unloading" tab by setting the "Unloading handlers debugging mode" checkbox. Accordingly, on the "Data loading" tab, the loading debugging mode is enabled by setting the "Load handlers debugging mode" checkbox.

After setting the debug mode of the handlers, the debug settings button will become available. By clicking on this button, the settings window will open.

Debugging handlers is configured in four steps:

Step 1: Selecting Algorithm Debugging Mode

At the first step, you need to decide on the mode for debugging algorithms:

- No algorithm debugging

- Call algorithms as procedures

- Substitute Algorithm Code at Call Place

The first mode is convenient when you know for certain that the error in the handler is not related to the code of any algorithm. In this mode, the algorithm code is not written to the debug module. The algorithms are executed in the context of the "Execute()" statement, and their code is not available for debugging.

The second mode should be used when the error is in the algorithm code. In this mode, algorithms are written to the debug module as separate procedures. When a handler calls an algorithm, the corresponding procedure of the processing is called. This mode is convenient when the "Parameters" global variable is used to pass parameters to algorithms. Its limitation is that, during debugging, the algorithm has no access to the local variables of the handler that calls it.

The third mode is used, like the second, for debugging algorithm code, in cases where the second mode is not suitable. In this mode, algorithms are written to the debug module as code integrated into the handlers. That is, the complete algorithm code, including nested algorithms, is inserted in place of the algorithm call. This mode places no restrictions on the use of local handler variables, but it is restricted when debugging recursively called algorithms.
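The third mode, and one plausible reason for its restriction on recursion, can be illustrated with a short Python sketch. It is not how the processing generates the debug module: the `CALL <name>` line convention and the `inline_algorithms` function are assumptions made up for this example. The point is that substituting a body for each call never terminates if an algorithm calls itself.

```python
def inline_algorithms(lines, algorithms, _stack=()):
    """Replace every 'CALL <name>' line with the body of that algorithm.

    Nested calls are expanded too. A recursive algorithm cannot be expanded
    into a finite body, so it raises ValueError.
    """
    result = []
    for line in lines:
        if line.startswith("CALL "):
            name = line[len("CALL "):].strip()
            if name in _stack:
                raise ValueError(f"recursive algorithm: {name}")
            body = algorithms[name]
            # Expand the body itself, remembering which algorithms are open.
            result.extend(inline_algorithms(body, algorithms, _stack + (name,)))
        else:
            result.append(line)
    return result
```

Because the inlined code becomes part of the handler, it sees the handler's local variables, which matches the trade-off between the second and third modes described above.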

Step 2: Building the debug module

At the second step, export the handlers by clicking the "Generate unloading (loading) debug module" button. The generated handlers and algorithms are displayed in a separate window for viewing. Copy the contents of the debug module to the clipboard by clicking the "Copy to Clipboard" button.

Step 3: Create External Processing

At this step, launch the configurator and create a new external processing. Paste the contents of the clipboard (the debug module) into the processing module and save the processing under any name.

Step 4: Connect external processing

At the fourth and final step, specify the name of the external processing file in the input field. The program then checks the creation (modification) time of the processing file. If the processing is older than the debug module file, a warning is displayed and the settings form is not closed.
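The time check in step 4 amounts to comparing two file timestamps. A minimal Python sketch of such a comparison follows; the function name and the use of modification time are assumptions for illustration, since the text does not show how the processing performs the check.

```python
import os


def processing_is_stale(processing_path, debug_module_path):
    """Return True if the processing file is older than the debug module file."""
    return os.path.getmtime(processing_path) < os.path.getmtime(debug_module_path)
```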

Note: The ability to debug the global conversion handler "After loading the exchange rules" is not supported.

Almost all 1C 8 configurations include predefined exchanges with other standard configurations, for example "1C Trade Management 8", "1C ZUP 8", and "1C Retail 8". But what if you need to exchange data between configurations with completely different metadata structures? In this case the "Universal data exchange in XML format" processing helps; it can be downloaded free of charge.

To work with this processing we need a rules file in XML format. It describes exactly how data is transferred from one infobase to another. It is created with the specialized configuration "Data Conversion", which is supplied on disk or on the ITS website. We will look at how to create it in the next article; for now, let's assume we already have it. The processing has 4 tabs. Let's consider them in order:

Uploading data

- First, specify the name of the rules file on which the export will be based.

- Specify the name of the data file in which all the information will be saved.

- Optionally, select the checkbox to compress the resulting file.

After the rules file has been specified, the metadata objects whose data will be saved are displayed on the "Uploaded data" tab. Here you can also specify the period for which data is selected. Additional values can be specified on the "Upload parameters" tab. The comment tab speaks for itself.

Loading data

On this tab, only the data file is specified, since all the rules are already contained in the exported file along with the data. Here you can set the number of items loaded in one transaction. The form also has additional boolean options that control how loading is performed. If you want all the built-in checks to be disabled during loading, select "Load data in exchange mode". The "Configure automatic data loading" item speaks for itself.
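The effect of the items-per-transaction setting can be pictured with a short Python sketch. It is an illustration only: the `split_into_transactions` function is a hypothetical name, and treating 0 as "everything in one transaction" follows the description of the transaction setting elsewhere in this text.

```python
def split_into_transactions(items, items_per_transaction=0):
    """Yield lists of items, one list per database transaction.

    A value of 0 (the default) puts every item into a single transaction.
    """
    items = list(items)
    if items_per_transaction <= 0:
        if items:
            yield items
        return
    for start in range(0, len(items), items_per_transaction):
        yield items[start:start + items_per_transaction]
```

Smaller batches keep each transaction short, at the cost of no longer writing the whole data set atomically.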

Additional settings

The advanced settings tab lets you fine-tune the processing:

- Debug mode - the export or import procedure is not stopped when an unexpected error occurs. After the operation completes, a detailed report is displayed.

- To monitor the exchange process, select the "Display information messages" checkbox.

- The number of processed objects to update the status - the number of processed items after which the information in the information window is updated.

- "Use the optimized format for data exchange (V8 - V8, processing version not lower than 2.0.18)" - a specialized format that assumes the message header contains the "InformationOnDataTypes" tag, which speeds up execution.

- Use transactions when uploading for exchange plans - when this flag is set, the export is performed in one transaction (an indivisible, logically connected sequence of operations).

- The number of items in a transaction - the number of items exported / imported in one transaction. If set to 0, the whole procedure is performed in one transaction. This option is recommended because it preserves the logical consistency of the data.

- Unload objects for which you have access rights - when this flag is set, only the objects for which the current user has access rights are exported.

- Automatically remove invalid characters from strings for writing to XML - when this item is selected, all entries in the message are checked for XML 1.0 validity and invalid characters are removed.

- Registration changes for exchange nodes after unloading - determines how change registration is handled after the data export finishes (do not delete registration, completely delete registration, delete registration only for uploaded metadata).

- Exchange protocol file name - the name of the file in which the exchange log is kept.

- Download protocol (for COM - connection) - the name of the log file used when exchanging over a COM connection.

- Append data to the exchange protocol - when this flag is set, new entries are appended to the log file instead of overwriting it.

- Outputting information messages to the log file - informational messages are added to the log file along with error information.

- Open exchange protocol files after performing operations - the flag speaks for itself.
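The removal of invalid characters mentioned in the list above can be sketched in Python. This is not the code of the processing; the function names are hypothetical. The character ranges are those of the Char production in the XML 1.0 recommendation.

```python
def is_valid_xml_char(ch):
    """Return True if ch matches the XML 1.0 Char production."""
    code = ord(ch)
    return (
        code in (0x9, 0xA, 0xD)          # tab, line feed, carriage return
        or 0x20 <= code <= 0xD7FF
        or 0xE000 <= code <= 0xFFFD
        or 0x10000 <= code <= 0x10FFFF
    )


def remove_invalid_xml_chars(text):
    """Drop every character that XML 1.0 does not allow in a document."""
    return "".join(ch for ch in text if is_valid_xml_char(ch))
```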


In most cases, automated control systems consist of separate databases and often have a geographically distributed structure. Correctly implemented data exchange is a necessary condition for such systems to operate effectively.

The initial exchange setup may require a number of actions, not only programming but also consulting, even when the sources are homogeneous, as is the case with products on the 1C: Enterprise platform. In this article we consider why setting up a 1C exchange (also called data synchronization in 1C 8.3) can become the most time-consuming and expensive task of an integration project.

Data exchange in 1C environment allows:

- Exclude double entry of documents;

- Automate related business processes;

- Optimize communication between distributed units;

- Promptly update data for the work of specialists from different departments;

- "Delimit" different types of accounting. *

* When the data of one type of accounting differs significantly from another, information must be kept confidential and information flows must be "delimited". For example, data exchange between 1C UT and 1C Accounting does not require exporting management data to the regulated accounting database, i.e. synchronization in 1C is incomplete here.

If we represent the standard process of implementing the primary data exchange, when at least one of its objects is a 1C product, then the following stages can be distinguished:

- Coordination of the composition of the exchange;

- Definition of transport (exchange protocols);

- Setting the rules;

- Scheduling.

Determining the composition of the 1C exchange

The objects of exchange can be conditionally divided into "source" and "receiver". An object can play both roles at once, which is called a two-way exchange. The source and the receiver are determined logically, depending on the need or on the functionality of the system. *

* For example, when integrating "WA: Financier", a solution for financial accounting and treasury process management developed on "1C: Enterprise", WiseAdvice experts recommend it as the master system. This is because it has control tools for enforcing the rules of the application policy and thus for ensuring the effectiveness of the solution.

Next, based on the requirements collected from users, a list of data to exchange is drawn up, its volume and the required exchange frequency are determined, and the process for handling errors and exceptions (collisions) is defined.

At the same stage, depending on the set of existing systems and the structure of the enterprise, the exchange format is determined:

Distributed information base

- A distributed infobase (RIB) implies an exchange between identical configurations of 1C databases, with a clear master-slave control structure for each exchange pair. As an element of the technological platform, RIB can transfer, in addition to data, configuration changes and administrative information of the database (but only from the master to the slave).

Universal data exchange in 1C

- A mechanism that lets you set up exchange between 1C databases and both configurations on the 1C: Enterprise platform and third-party systems. The exchange is carried out by converting data into a universal XML format in accordance with the "Exchange plans".

EnterpriseData

- The latest 1C development, designed for data exchange in XML format between products created on the 1C: Enterprise platform and any automation systems. Using EnterpriseData simplifies exchange-related modifications. Previously, when a new configuration was added to the system, an import and export mechanism had to be implemented both for it and for the existing systems. Now systems that support EnterpriseData need no modifications, since they have a single "entry-exit" point.

Definition of transport (exchange protocols)

Systems based on the 1C: Enterprise 8 platform offer a wide range of options for organizing exchange with any information resources through generally accepted universal standards (XML, text files, Excel, ADO connection, etc.). Therefore, when choosing a transport for exchange data, proceed from the capabilities of the third-party system's database.

Synchronization of directories

The basic principle of effective directory synchronization is a single point of entry. But when working with directories that were historically filled in according to different rules, the synchronization fields must be clearly defined to bring the exchange to a "common denominator". *

* At this stage, the reference data may need to be normalized on the side of the data source. Depending on the state and volume of the directories, the process of matching items, recognizing and identifying errors and duplicates, filling in missing fields, and assigning synchronization fields may require a whole group of experts, both from the integrator (the owner of the reference data standardization methodology) and from the customer.

Setting rules

Whether data from source systems is displayed correctly in the receivers depends on correctly specified exchange rules. The rules, presented in XML format, define how the key attributes of source objects correspond to those of receiver objects. The 1C: Data Conversion solution is designed to automate the creation of rules for both one-time and ongoing exchange.
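The idea of a rule as a correspondence between source and receiver attributes can be shown with a minimal Python sketch. It does not reproduce the format of real exchange rules; the `convert_object` function and the dictionary representation of a rule are assumptions made for illustration.

```python
def convert_object(source_object, rule):
    """Build a receiver object from a source object using one rule.

    rule maps each receiver attribute name to a source attribute name.
    Source attributes not mentioned in the rule are not transferred.
    """
    return {dest: source_object[src] for dest, src in rule.items()}
```

For example, a hypothetical rule `{"Name": "Description", "Code": "SKU"}` would copy the source attribute "Description" into the receiver attribute "Name" and "SKU" into "Code".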

The Exchange Plan ensures that no data is lost during exchange. It is an integral part of any configuration on the 1C: Enterprise platform and fully describes the 1C exchange procedure: the data composition (documents with "identifying" attributes) and the nodes (the infobases of transmitters and receivers), as well as the activation of RIB for the selected exchange directions.

Any change to data included in the Exchange Plan is recorded and marked with a "changed" flag. Until the changed data matches in the transmitter and receiver nodes, the flag is not cleared, and the system sends control messages to both nodes. After the data has been exported and its full match in both systems confirmed, the flag is cleared.
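The change-flag mechanism described above can be modelled with a small Python sketch. It is a simplified illustration, not the platform's implementation; the `ChangeRegistry` class and its method names are hypothetical.

```python
class ChangeRegistry:
    """Tracks which changed objects still have to be delivered to which node."""

    def __init__(self, nodes):
        self._pending = {node: set() for node in nodes}

    def register_change(self, object_id):
        # A changed object is flagged for every node of the plan.
        for pending in self._pending.values():
            pending.add(object_id)

    def pending_for(self, node):
        # Return a copy so callers cannot modify the registry by accident.
        return set(self._pending[node])

    def confirm_received(self, node, object_ids):
        # The flag is cleared only after the node confirms receipt.
        self._pending[node] -= set(object_ids)
```

Keeping the flag until receipt is confirmed is what allows an interrupted exchange to be repeated without losing changes.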

Exchange schedule in 1C

To automate the regular exchange, the frequency of data upload is set. The exchange frequency depends on the need and technical capabilities. Also, configurations on the 1C: Enterprise platform allow you to set up data exchange when an event occurs.

Having considered the standard exchange implementation process, let us look at the factors that require modifications at different stages:

- Non-standard, heavily modified database configurations;

- Different versions of the 1C: Enterprise platform;

- Outdated configuration versions that have not been updated for a long time;

- Objects of exchange that were previously modified;

- The need for non-standard exchange rules;

- Widely differing sets of attributes in the existing directories.

Since even the standard steps of implementing the primary data exchange require expert knowledge, it is recommended to carry them out with the participation of 1C specialists. Only after completing all the steps above should you proceed to setting up the exchange in the configuration. Let's consider database integration using the example of "1C: UPP" and "1C: Retail" (the exchange with "1C: UT" is set up in the same way). Standard synchronization also includes the UPP - UPP exchange, which is typical for large-scale automation systems at the largest industrial enterprises.

In the "Service" submenu, select "Data exchange with products on the platform ..." (choosing direct exchange with "Retail" often leads to errors at the level of COM objects). Note the service message "This feature is not available".

To solve this problem, select "Communication settings"

... and select the checkbox. Then ignore the error message.

In the data synchronization settings, select "Create an exchange with "Retail" ...".

Before configuring the connection through a local or network directory, make sure there is enough disk space for the directory. As a rule it takes no more than 30-50 MB, but in exceptional cases it may require up to 600 MB. The required directory can be created directly from the configurator.

When connecting through a network directory, ignore the suggestions to set up a connection via an FTP address and by e-mail, and click "Next".

In the settings, manually enter the prefixes, i.e. the symbols for the bases (as a rule, BP, UPP, RO), and set the rules and the start date for data export. The prefix appears in the name of the documents to indicate the base in which they were created. If the export rules are not edited, data is exported by default for all available parameters.

Create an exchange settings file for "Retail" so that the steps do not have to be repeated. If data must be sent immediately after synchronization is set up, select the checkbox.

To automate the exchange process, set up a schedule.

Retail menu.

Select the checkbox and choose "Synchronization".

Make the "reverse" setting by choosing Manufacturing Enterprise Management.

Load the settings file created in UPP.

Select the checkbox; the system picks up the address automatically.

Proceed in the same way as in UPP.

Verification data comparison (manual data comparison is recommended at the preparatory stage, since this work can become the most time-consuming part of implementing the exchange). The mapping window is opened by double-clicking.

If a synchronization error occurs, "Details ..." is replaced by "Never ...".

"Details ..." opens the registration log with updated information on the exchange.

Done.

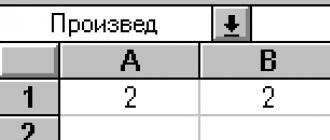

XML data exchange

A DBMS can support XML data exchange in a very simple way: by outputting query results in XML format and accepting XML input for an INSERT statement. However, this requires the user or programmer to design the format of the generated query results carefully so that it exactly matches the format expected by the INSERT statement in the receiving database. XML data exchange becomes truly useful when the DBMS supports it more explicitly.

Several commercial products today can batch export tables (or query results) to an external file formatted as an XML document. They also offer a matching ability to batch import data from a file of the same type into a DBMS table. This scheme makes XML the standard format for representing table contents for data interchange.
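A batch export and import of this kind can be sketched with Python's standard `sqlite3` and `xml.etree.ElementTree` modules. This is a minimal illustration, not the mechanism of any particular commercial DBMS: the `<table>` / `<row>` layout and both function names are assumptions, and all values are treated as text.

```python
import sqlite3
import xml.etree.ElementTree as ET


def export_table(conn, table, columns):
    """Serialize the given columns of a table to an XML string."""
    # Table and column names are interpolated into SQL, so they must be trusted.
    root = ET.Element("table", name=table)
    query = f"SELECT {', '.join(columns)} FROM {table}"
    for row in conn.execute(query):
        row_el = ET.SubElement(root, "row")
        for column, value in zip(columns, row):
            ET.SubElement(row_el, column).text = str(value)
    return ET.tostring(root, encoding="unicode")


def import_table(conn, xml_text, table, columns):
    """Insert every <row> of the XML document into the target table."""
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"
    for row_el in ET.fromstring(xml_text).findall("row"):
        conn.execute(sql, [row_el.findtext(column) for column in columns])
```

Both sides have to agree on the element names and column order, which is the format-matching burden the text describes.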

Note that the XML import / export capabilities offered by a DBMS are not limited to exchange between databases.
