The php-cgi processes keep gobbling up memory and do not want to die even after the FcgidMaxRequestsPerProcess limit has been reached. php-cgi then actively starts pushing everything into swap, and the server starts returning "502 Bad Gateway".

Setting FcgidMaxRequestsPerProcess alone is not enough to limit the number of forked php-cgi processes: after that many requests the processes are supposed to exit, but they do not always do so voluntarily.

The situation is painfully familiar: php-cgi processes (children) eat away memory, but you can't make them die; they want to live! :) It resembles the problem of the Earth's overpopulation, doesn't it? ;)

The eternal imbalance between parents and children can be resolved by limiting the number of php-cgi children and their lifetime ("genocide") and by controlling how actively they reproduce ("contraception").

Php-cgi process limit for mod_fcgid

The directives below probably play the most important role in limiting the number of php-cgi processes, and in most cases the default values given here are poorly suited to servers with less than 5-10 GB of RAM:

- FcgidMaxProcesses 1000 - the maximum number of processes that can be active at the same time;

- FcgidMaxProcessesPerClass 100 - the maximum number of processes in one class (segment), i.e. the maximum number of processes that may be spawned through the same wrapper;

- FcgidMinProcessesPerClass 3 - the minimum number of processes in one class (segment), i.e. the minimum number of processes launched through the same wrapper that are kept available after all requests have been completed;

- FcgidMaxRequestsPerProcess 0 - the number of requests after which a FastCGI process should "kick the bucket" (exit); 0 means no limit.

What number of php-cgi processes is optimal? To determine it, take into account the total amount of RAM and the memory_limit set for PHP (php.ini), which is how much memory each php-cgi process may consume while running a PHP script. For example, if we have 512 MB, of which 150-200 MB are reserved for the OS itself and another 50-100 MB for the database server, mail MTA, etc., and memory_limit = 64M, then with the remaining 200-250 MB of RAM we can safely run 3-4 php-cgi processes simultaneously.
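The arithmetic above can be written as a small helper. This is a sketch under the article's own assumption that every php-cgi process may grow up to memory_limit (the worst case); the function name is hypothetical and the figures are the ones from the example.

```python
def max_php_cgi_processes(total_mb, os_reserved_mb, services_reserved_mb, memory_limit_mb):
    """Estimate how many php-cgi processes fit into RAM, assuming each one
    may grow up to PHP's memory_limit (worst case)."""
    free_mb = total_mb - os_reserved_mb - services_reserved_mb
    if free_mb <= 0:
        return 0
    # Integer division: a process that only partly fits would push the box into swap
    return free_mb // memory_limit_mb


# The example: 512 MB total, 150-200 MB for the OS, 50-100 MB for DB/MTA, memory_limit = 64M
pessimistic = max_php_cgi_processes(512, 200, 100, 64)  # 212 MB left
optimistic = max_php_cgi_processes(512, 150, 50, 64)    # 312 MB left
print(pessimistic, optimistic)  # 3 4
```

The result is only a candidate value for the process limits listed above; real php-cgi processes often stay well below memory_limit, so measure before tightening further.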

Php-cgi process life timeout settings

As php-cgi children actively reproduce and eat RAM, they can live almost forever, which is fraught with cataclysms. Below is a list of directives that help trim the lifetime of php-cgi processes and release the resources they occupy in time:

- FcgidIOTimeout 40 - the maximum time (in seconds) mod_fcgid will wait while trying to read from or write to the FastCGI application (the running script);

- FcgidProcessLifeTime 3600 - if a process has existed longer than this time (in seconds), it will be marked for destruction during the next process scan, whose interval is set by the FcgidIdleScanInterval directive;

- FcgidIdleTimeout 300 - if the number of processes exceeds FcgidMinProcessesPerClass, a process that has not handled any requests for this time (in seconds) will be marked for killing during the next process scan, whose interval is set by the FcgidIdleScanInterval directive;

- FcgidIdleScanInterval 120 - the interval (in seconds) at which mod_fcgid looks for processes that have exceeded the FcgidIdleTimeout or FcgidProcessLifeTime limits;

- FcgidBusyTimeout 300 - if a process has been busy handling a request for longer than this time (in seconds), it will be marked for killing during the next scan, whose interval is set by FcgidBusyScanInterval;

- FcgidBusyScanInterval 120 - the interval (in seconds) at which mod_fcgid scans for busy processes that have exceeded the FcgidBusyTimeout limit;

- FcgidErrorScanInterval 3 - the interval (in seconds) at which mod_fcgid kills processes awaiting termination, including those that have exceeded FcgidIdleTimeout or FcgidProcessLifeTime. A process is killed by sending it SIGTERM, and if it is still alive after that, it is killed with SIGKILL.

Keep in mind that a process that has exceeded FcgidIdleTimeout or FcgidBusyTimeout can survive up to another FcgidIdleScanInterval or FcgidBusyScanInterval, respectively, before it is marked for destruction.
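Adding up the values from the directive list gives a rough worst case for how long an idle process may linger: the timeout itself, up to one more FcgidIdleScanInterval until it is marked, and up to one FcgidErrorScanInterval until the SIGTERM/SIGKILL pass. This is a simplified model (it ignores how quickly the process reacts to SIGTERM), and the function name is hypothetical.

```python
def worst_case_idle_lifetime(idle_timeout_s, idle_scan_interval_s, error_scan_interval_s):
    """Rough upper bound (seconds) from the moment a process goes idle until
    mod_fcgid kills it: the timeout may expire just after a scan, so the
    process waits a full scan interval to be marked, then up to one
    error-scan interval for the kill pass."""
    return idle_timeout_s + idle_scan_interval_s + error_scan_interval_s


# FcgidIdleTimeout 300, FcgidIdleScanInterval 120, FcgidErrorScanInterval 3
print(worst_case_idle_lifetime(300, 120, 3))  # 423 seconds, about 7 minutes
```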

It is better to set the scan intervals a few seconds apart, for example FcgidIdleScanInterval 120 and FcgidBusyScanInterval 117, so that the two scans do not run at the same time.
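Why offsetting helps can be seen with a little arithmetic. Assuming both scans start at the same moment and then repeat strictly on schedule (a simplification of mod_fcgid's real timing), they coincide again every least common multiple of the two intervals:

```python
from math import gcd


def coincidence_period(interval_a_s, interval_b_s):
    """Seconds until two periodic scans that started together fire at the
    same moment again (least common multiple of the intervals)."""
    return interval_a_s * interval_b_s // gcd(interval_a_s, interval_b_s)


print(coincidence_period(120, 120))  # 120: equal intervals collide on every scan
print(coincidence_period(120, 117))  # 4680: offset intervals collide about every 78 minutes
```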

php-cgi process spawning activity

If none of the above helped, which would be surprising, you can still try adjusting how actively php-cgi processes are spawned...

In addition to the limits on the number of requests, the number of php-cgi processes and their lifetime, there is the activity of spawning child processes, which can be regulated with the FcgidSpawnScore, FcgidTerminationScore, FcgidTimeScore and FcgidSpawnScoreUpLimit directives. Here is my translation of their descriptions from English, which I think is correct (default values indicated):

- FcgidSpawnScore 1 - each spawned process adds this value to the spawn score of its class;

- FcgidTerminationScore 2 - each terminated process adds this value to the score;

- FcgidTimeScore 1 - this value is subtracted from the score every second;

- FcgidSpawnScoreUpLimit 10 - if the score exceeds FcgidSpawnScoreUpLimit, no child processes of the application will be spawned, and all spawn requests will have to wait until an existing process becomes free or until the score falls below this limit.

If my translation and understanding of the above parameters are correct, then to reduce how actively php-cgi processes are spawned, lower FcgidSpawnScoreUpLimit or increase FcgidSpawnScore and FcgidTerminationScore.
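To see how these scores interact, here is a toy simulation. It is a simplified model built only from the directive descriptions (terminations are left out, and real mod_fcgid bookkeeping may differ in details such as when the score decays); the function name and the demand of 5 spawn requests per second are hypothetical.

```python
def simulate_spawns(seconds, demand_per_second, spawn_score=1, time_score=1, up_limit=10):
    """Count processes spawned in a toy model of the mod_fcgid spawn score.

    Each second, up to demand_per_second spawns are requested. A spawn is
    allowed only while the score does not exceed up_limit, and each spawn
    adds spawn_score. At the end of every second the score decays by
    time_score (never below zero). Terminations are not modelled.
    """
    score = 0
    spawned = 0
    for _second in range(seconds):
        for _request in range(demand_per_second):
            if score > up_limit:
                break  # over the limit: remaining requests wait for a free process
            score += spawn_score
            spawned += 1
        score = max(0, score - time_score)
    return spawned


defaults = simulate_spawns(60, 5)                        # FcgidSpawnScoreUpLimit 10
lower_limit = simulate_spawns(60, 5, up_limit=5)         # lowered limit
costlier_spawn = simulate_spawns(60, 5, spawn_score=2)   # raised FcgidSpawnScore
print(defaults, lower_limit, costlier_spawn)  # 70 65 35
```

In this model the sustained rate settles at time_score / spawn_score processes per second, while the upper limit mainly caps the initial burst, which agrees with the advice above.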

Summary

I hope I have listed and explained in detail most of the mod_fcgid directives that help limit the number of php-cgi processes and their lifetime, as well as reduce resource consumption. The following is the complete mod_fcgid configuration of a server running successfully with a 2500 MHz processor and 512 MB of RAM:

Oleg Golovskiy

In general, if you can do without Apache, do. Consider whether lighttpd or thttpd can handle the tasks you need. These web servers are very useful in situations where there are not enough system resources to go around but everything still has to work. I repeat: we are talking about situations where the functionality of these products is enough for the task (by the way, lighttpd can work with PHP). Where you really cannot do without Apache, you can usually still free up a lot of system resources by redirecting requests for static content (JavaScript, images) from Apache to a lightweight HTTP server. Apache's biggest problem is its large appetite for RAM. In this article, I will discuss methods that help speed it up and reduce the amount of memory it uses:

Loading fewer modules

The first step is to stop loading unnecessary modules. Look through the configuration files and determine which modules you load. Do you need all of them? Find the ones you don't use and turn them off for good; this will save some memory.
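On many systems `apache2ctl -M` lists the loaded modules directly. If you would rather audit the configuration text itself, a small sketch like the one below pulls the module names out of active (uncommented) LoadModule lines. The function name is hypothetical; on Debian the LoadModule lines are spread across the *.load files in mods-enabled, so you would feed it those files.

```python
import re

# An active LoadModule line: optional indentation, then "LoadModule name path"
LOAD_MODULE = re.compile(r"^\s*LoadModule\s+(\S+)\s+(\S+)", re.IGNORECASE | re.MULTILINE)


def loaded_modules(config_text):
    """Return the module names loaded by a piece of Apache configuration;
    commented-out lines (starting with #) are ignored."""
    return [match.group(1) for match in LOAD_MODULE.finditer(config_text)]


sample = """
LoadModule rewrite_module modules/mod_rewrite.so
#LoadModule status_module modules/mod_status.so
  LoadModule php5_module modules/libphp5.so
"""
print(loaded_modules(sample))  # ['rewrite_module', 'php5_module']
```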

Handling fewer concurrent requests

The more Apache processes are allowed to run simultaneously, the more simultaneous requests it can handle. But by increasing this number, you also increase the amount of memory Apache uses. With top you can see how much memory each Apache process takes; keep in mind that part of it is shared libraries, which are counted for every process but occupy memory only once. In Debian 5 with Apache 2, the default configuration is:

StartServers 5
MinSpareServers 5
MaxSpareServers 10
MaxClients 20
MaxRequestsPerChild 0

The StartServers directive sets the number of server processes started right after the server starts. The MinSpareServers and MaxSpareServers directives set the minimum and maximum number of spare Apache child processes. Such processes wait for incoming requests and are not unloaded, which speeds up the server's response to new requests. The MaxClients directive sets the maximum number of requests the server processes simultaneously. When the number of simultaneous connections exceeds this number, new connections are queued. In effect, MaxClients determines the maximum number of Apache child processes running at the same time. The MaxRequestsPerChild directive sets the number of requests an Apache child process handles before it exits. If this directive is set to zero, the process never "expires".
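Since MaxClients caps the number of children, it also caps their memory. A minimal sketch, assuming a hypothetical 15 MB of private (non-shared) memory per child; measure your own figure, because top's per-process numbers include shared libraries:

```python
def prefork_worst_case_mb(max_clients, private_mb_per_child):
    """RAM used by Apache children if all MaxClients processes are running."""
    return max_clients * private_mb_per_child


def max_clients_for_budget(budget_mb, private_mb_per_child):
    """Largest MaxClients whose children still fit into budget_mb."""
    return budget_mb // private_mb_per_child


print(prefork_worst_case_mb(20, 15))    # MaxClients 20 from the default config: 300
print(prefork_worst_case_mb(5, 15))     # MaxClients 5: 75
print(max_clients_for_budget(100, 15))  # a 100 MB budget allows MaxClients 6
```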

For my home server, with its correspondingly modest needs, I changed the configuration to the following:

StartServers 1
MinSpareServers 1
MaxSpareServers 1
MaxClients 5
MaxRequestsPerChild 300

Of course, this configuration is completely unsuitable for heavily loaded servers, but for home use, in my opinion, it is just right.

Process recycling

As you can see, I changed the value of the MaxRequestsPerChild directive. By limiting the lifetime of child processes to a number of handled requests in this way, you can contain memory leaks caused by badly written scripts.

Using a short KeepAlive timeout

KeepAlive is a way of keeping a persistent connection between the client and the server. Originally, HTTP was designed around non-persistent connections: when a web page was sent to the client, each of its parts (images, frames, JavaScript) was transferred over a separately established connection. With KeepAlive, browsers can request a persistent connection and, once it is established, download data over that single connection. This gives a significant performance boost. However, by default Apache uses a rather long timeout of 15 seconds before closing the connection. This means that after all the content has been sent to a client that requested KeepAlive, the child process waits another 15 seconds for further requests. That is too much; it is better to reduce this timeout to 2-3 seconds.

KeepAliveTimeout 2
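A rough way to see why the timeout matters is Little's law: the average number of children sitting idle in KeepAlive wait is about (new KeepAlive connections per second) × KeepAliveTimeout, assuming each connection waits out the full timeout after its last request. The rate of 2 connections per second is hypothetical.

```python
def idle_keepalive_children(new_connections_per_s, keepalive_timeout_s):
    """Little's law estimate of children idling in KeepAlive wait,
    assuming every connection waits the full timeout after its last request."""
    return new_connections_per_s * keepalive_timeout_s


print(idle_keepalive_children(2, 15))  # 30 children doing nothing
print(idle_keepalive_children(2, 2))   # 4 children
```

With MaxClients 20 from the default configuration above, 30 idle children would already exceed the limit, so new visitors would end up waiting in the queue.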

Timeout reduction

You can also adjust the Timeout directive, which sets how long the server waits for certain events while handling a request before failing it. By default it is 300 seconds; in your case it may make sense to reduce or increase this value. I personally left it as is.

Reducing logging intensity

To improve server performance, you can also try reducing logging intensity. Modules such as mod_rewrite can write debugging information to the log; if you do not need it, turn that output off.

Disabling host name resolution

In my opinion, there is no need to reverse-resolve IP addresses into host names. If you really need them when analyzing the logs, you can resolve them at the analysis stage rather than while the server is running. Host name resolution is controlled by the HostnameLookups directive, which is in fact set to Off by default; still, check it if you agree that resolution should be disabled.
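Resolving at the analysis stage can be as simple as post-processing the access log (Apache also ships a logresolve utility for this). A minimal Python sketch, assuming the common log format where the client IP is the first field; the function name is hypothetical, and the demo uses a stand-in resolver so it makes no DNS queries.

```python
import socket


def resolve_log_ips(lines, resolver=socket.gethostbyaddr):
    """Replace the client IP at the start of each access-log line with its
    host name. Each address is looked up only once; addresses that cannot
    be resolved are left as they are."""
    cache = {}
    resolved = []
    for line in lines:
        ip, sep, rest = line.partition(" ")
        if ip not in cache:
            try:
                cache[ip] = resolver(ip)[0]  # gethostbyaddr returns (name, aliases, addresses)
            except OSError:  # socket.herror and socket.gaierror are OSError subclasses
                cache[ip] = ip
        resolved.append(cache[ip] + sep + rest)
    return resolved


# Offline demo with a stand-in resolver
def fake_resolver(ip):
    if ip == "192.0.2.1":
        return ("client.example.com", [], [ip])
    raise socket.herror(1, "Unknown host")


demo = resolve_log_ips([
    '192.0.2.1 - - [17/Dec/2010:10:00:00 +0300] "GET / HTTP/1.1" 200 512',
    '198.51.100.7 - - [17/Dec/2010:10:00:01 +0300] "GET /a.css HTTP/1.1" 304 0',
], resolver=fake_resolver)
print(demo[0].split()[0], demo[1].split()[0])  # client.example.com 198.51.100.7
```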

HostnameLookups Off

Disabling .htaccess usage

Apache processes .htaccess files on every request. Apache not only has to look for and read these files (in every directory along the path to the requested file where overrides are allowed), it also spends a lot of time and resources parsing them. Take a look at your web server and reconsider whether you need .htaccess files. If you need different settings for different directories, perhaps they can be moved into the main server configuration file? .htaccess processing can be disabled with the AllowOverride None directive in the server configuration.
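To make the per-request cost concrete, the sketch below lists the .htaccess files Apache may check for one request. It assumes overrides are allowed from the document root downward (not from the filesystem root); the function name is hypothetical.

```python
import posixpath


def htaccess_candidates(document_root, requested_file):
    """List the .htaccess files that may be checked for requested_file,
    from document_root down to the file's own directory."""
    relative_dir = posixpath.relpath(posixpath.dirname(requested_file), document_root)
    directories = [document_root]
    if relative_dir != ".":
        current = document_root
        for part in relative_dir.split("/"):
            current = posixpath.join(current, part)
            directories.append(current)
    return [posixpath.join(d, ".htaccess") for d in directories]


candidates = htaccess_candidates("/var/www/site", "/var/www/site/blog/2010/index.php")
for path in candidates:
    print(path)
```

Every one of these lookups is repeated on every request, which is exactly what AllowOverride None avoids.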

About four months ago, Mozilla began the first tests of its multi-process architecture. In Firefox 48 it was enabled for a small share of users, and then the test sample was increased by a factor of one and a half. According to Mozilla's official blog, almost all Firefox users without extensions installed were among the testers.

The results of the experiment were very good: overall browser responsiveness improved by 400%, and responsiveness while loading pages by up to 700%.

Not much time is left until the end of testing, after which multiprocessing will become a standard built-in Firefox feature, so that Firefox will catch up with (or overtake) Chrome, Edge, Safari and Internet Explorer, which have long used a multi-process architecture, in stability, security and speed.

Multiprocessing means that Firefox can distribute tasks among several processes. In practice, in a browser this means isolating the rendering engine from the browser's user interface. A problem rendering a web page then no longer freezes the entire browser, which improves overall stability. If a separate isolated rendering process is launched for each tab, a freeze is limited to just that tab. In addition, isolating rendering in a separate sandbox improves security: vulnerabilities in the rendering engine become harder to exploit.

Chrome and other browsers have used multiprocessing for many years. Chrome has had it since its very first version, released in 2008; in a sense, it was a technological breakthrough. Interestingly, Google hired several Firefox developers to build that first version of Chrome, and they did not disappoint.

Mozilla's own effort, however, stalled for a while. Development of the multi-process architecture, code-named Electrolysis (e10s), began in 2009, but between 2011 and 2013 it was suspended "due to a change in priorities."

The separate Add-on Compatibility Reporter add-on lets you check whether your add-ons are compatible with Firefox's multi-process mode.

Only the last few steps remain. The browser must work properly in Firefox builds with extensions that do not yet support multiprocessing. According to the plan, Firefox 51 will support extensions that are not explicitly marked as incompatible with multiprocessing.

After that, Mozilla will refine the architecture itself. Besides moving the rendering engine into a separate process, it needs to support several content processes. This will give the largest possible performance gain and minimize the risk that a freeze in one tab affects the stability of the whole browser.

First, a second content process was implemented on the Nightly branch. Developers are now using it to check how many separate processes Electrolysis can handle and to catch bugs.

The second big task is to implement a security sandbox whose child processes have restricted rights. In Firefox 50, the sandbox first appeared in the Windows release. This is still an early experimental version that does not yet provide proper protection, the developers warn. In future versions of Firefox, the sandbox will be added on Mac and Linux.

Although several years late, Firefox is finally introducing multiprocessing. And this is great news for all users of this wonderful browser. Better late than never. And it’s better to do everything right, and not to rush. Early tests showed that Electrolysis provides a notable boost in performance, stability, and security.

Applies to: System Center 2012 R2 Operations Manager, System Center 2012 - Operations Manager, System Center 2012 SP1 - Operations Manager

The Process Monitoring template lets you track whether a particular process is running on a computer. With this template you can implement two main scenarios: you may need a process to be running for a specific application and want an alert if it is not, or you may want a notification when an unwanted process turns out to be running. In addition to checking whether the application is running, you can collect data on the processor time and memory used by the process.

Scenarios

Use the Process Monitoring template in scenarios where you need to monitor a process running on an agent-managed computer with Windows. The template can monitor the following kinds of processes.

Critical process

A process that should be running at all times. Use the Process Monitoring template to make sure that this process is running on the computers where it is installed and to measure its performance.

Unwanted process

A process that should not be running. It may be a known rogue process that can cause damage, or a process that starts automatically when an error occurs in an application. The Process Monitoring template can watch for this process and send an alert if it is found running.

Long-running process

A process that should run only for a short time. If it runs too long, that may indicate a problem. The Process Monitoring template can track how long this process runs and send an alert if its run time exceeds a specified duration.

Monitoring performed by the Process Monitoring template

Depending on the options selected in the wizard, the monitors and rules it creates can perform any of the following monitoring.

| | Description | When enabled |
|---|---|---|
| Monitors | Number of desired processes | Enabled when a process to monitor is selected on the Process to Monitor page and the number-of-processes alert is enabled on the Running Processes page. |
| | Process run time | Enabled when a process to monitor is selected on the Process to Monitor page and the run-time alert is enabled on the Running Processes page. |
| | Unwanted process running | Enabled when the unwanted-process monitoring scenario is selected. |
| | Process CPU usage | Enabled when a process to monitor is selected on the Process to Monitor page and the CPU alert is enabled on the Performance Data page. |
| | Process memory usage | Enabled when a process to monitor is selected on the Process to Monitor page and the memory alert is enabled on the Performance Data page. |
| Data collection rules | Process CPU usage collection | Enabled when a process to monitor is selected on the Process to Monitor page and the CPU alert is enabled on the Performance Data page. |
| | Process memory usage collection | Enabled when a process to monitor is selected on the Process to Monitor page and the memory alert is enabled on the Performance Data page. |

View Monitoring Data

All data collected by the Process Monitoring template is available in the process state view in the Windows Service and Process Monitoring folder. This view shows an object for each agent in the selected group. Even if the process is not running on an agent, that agent is still listed, and the monitor shows the state of a process that is not running.

You can view the state of the individual process monitors by opening Health Explorer for the process object. To view performance data, open the performance view for the process object.

The same process objects listed in the process state view are also included in Health Explorer for the computer on which the process runs. The health state of the process monitors rolls up to the health of that computer.

Wizard Options

When you run the Process Monitoring template, you must set the parameter values in the following tables. Each table represents a separate wizard page.

General properties

Common parameters wizard page.

Process to Monitor

The following options are available on the Process to Monitor wizard page.

| Parameter | Description |
|---|---|
| Monitoring scenario | The type of monitoring to perform. Select the scenario for a desired process to set the monitor to a critical state when the process is not running. Select the scenario for an unwanted process to set the monitor to a critical state when the process starts. |
| Process name | The full name of the process as it appears in Task Manager. It should not include the path to the executable. You can type the name or click the ellipsis button (...) to browse for the file name. |
| Target group | The process is monitored on all computers that belong to the specified group. |

Running Processes

The following options are available on the Running Processes wizard page.

| Parameter | Description |
|---|---|
| Generate an alert if the number of processes is below the minimum or above the maximum for longer than the specified duration | If the check box is selected, the monitor is set to a critical state and an alert is generated when the number of instances of the specified process stays below the minimum or above the maximum for longer than the specified duration. To make sure that exactly one instance of the process is running, set both the minimum and the maximum to 1. |
| Minimum number of processes | The minimum number of processes that must be running. |
| Maximum number of processes | The maximum number of processes that may be running. |
| Duration | How long the number of running processes must stay outside the specified range before the monitor is set to a critical state. Do not set this value to less than 1 minute. |
| Generate an alert if the process runs longer than the specified duration | If the check box is selected, the monitor is set to a critical state and an alert is generated when any single instance of the process runs longer than the specified duration. |
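The count-outside-range-for-a-duration rule can be modelled as follows. This is not the Operations Manager implementation, only a sketch of the rule as worded above; the sample format (timestamp in seconds, process count) and the function name are assumptions.

```python
def count_alert(samples, minimum, maximum, duration_s):
    """Return True if the process count stays outside [minimum, maximum]
    without interruption for at least duration_s seconds.

    samples: list of (timestamp_in_seconds, process_count), oldest first.
    """
    out_of_range_since = None
    for timestamp, count in samples:
        if minimum <= count <= maximum:
            out_of_range_since = None  # back in range: reset the timer
            continue
        if out_of_range_since is None:
            out_of_range_since = timestamp
        if timestamp - out_of_range_since >= duration_s:
            return True
    return False


# Exactly one instance expected (minimum = maximum = 1), checked once a minute
samples = [(0, 1), (60, 0), (120, 0), (180, 0)]
print(count_alert(samples, 1, 1, 120))  # True: zero instances from t=60 to t=180
print(count_alert(samples, 1, 1, 180))  # False: only 120 s out of range so far
```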

Performance Data

The following options are available on the Performance Data wizard page.

| Parameter | Description |
|---|---|
| Generate an alert if CPU usage exceeds the specified threshold | Whether the process's CPU usage should be monitored. A monitor is created that sets the object to an error state and generates an alert when the specified threshold is exceeded. A rule is created to collect CPU usage data for analysis and reporting. |
| CPU (percent) | If CPU usage is monitored, this parameter sets the threshold. If the percentage of total CPU usage exceeds the threshold, the object is set to an error state and an alert is generated. |
| Generate an alert if memory usage exceeds the specified threshold | Whether the memory used by the process should be monitored. A monitor is created that sets the object to an error state and generates an alert when the specified threshold is exceeded. A rule is created to collect memory usage data for analysis and reporting. |
| Memory (MB) | If memory usage is monitored, this parameter sets the threshold. If the memory used by the process, in megabytes (MB), exceeds the threshold, the object is set to an error state and an alert is generated. |
| Number of samples | If CPU or memory usage is monitored, the number of consecutive performance samples that must exceed the threshold before the object is set to an error state and an alert is generated. A value greater than 1 reduces monitoring noise, because no alert is generated when the process exceeds the threshold only briefly. The higher the value, the longer it takes before you are notified of a problem. The typical value is 2 or 3. |
| Sampling interval | If CPU or memory usage is monitored, the time between performance samples. A lower value shortens the time needed to detect a problem but increases the load on the agent and the amount of data collected for reports. The typical value is 5 to 15 minutes. |
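The interplay of "Number of samples" and "Sampling interval" can be illustrated with a short sketch of the consecutive-samples rule. It models the behaviour described in the table, not the product's code; the function name and the CPU values are hypothetical.

```python
def first_alert_sample(values, threshold, samples_required):
    """Return the index of the sample at which an alert would fire, i.e. the
    samples_required-th consecutive value above threshold, or None."""
    streak = 0
    for index, value in enumerate(values):
        streak = streak + 1 if value > threshold else 0
        if streak >= samples_required:
            return index
    return None


cpu = [20, 95, 30, 92, 97, 99]  # hypothetical CPU % samples
print(first_alert_sample(cpu, 90, 1))  # 1: the single spike already alerts
print(first_alert_sample(cpu, 90, 3))  # 5: the spike is ignored, the sustained load is not
```

With a 10-minute sampling interval, the three-sample setting here fires two intervals (20 minutes) after the first sample of the sustained run, which is the detection-time trade-off the table describes.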

Additional monitoring features

In addition to the monitoring described above, the Process Monitoring template creates a target class that can be used for additional monitors and workflows. A monitor or rule that targets this class runs on every agent-managed computer in the group specified in the template. For example, if the process writes Windows events that indicate an error, you can create a monitor or rule that detects a specific event and targets the process class.

17 dec 2010


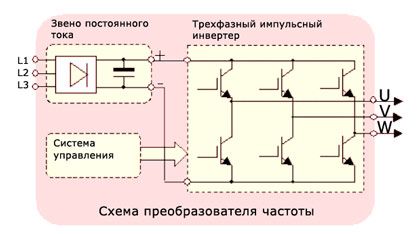

In this article, I will describe the fundamental differences between Apache and Nginx, the frontend/backend architecture, and setting up Apache as the backend with Nginx as the frontend. I will also describe a technique that speeds up a web server: gzip_static + YUI Compressor.

Nginx is a lightweight server: it starts a specified number of processes (usually equal to the number of CPU cores), and each process in a loop accepts new connections and handles the current ones. This model lets it serve a large number of clients with low resource usage. However, with this model you cannot perform lengthy operations while handling a request (as mod_php does, for example), because that essentially blocks the whole worker process. In each loop iteration, a process essentially performs two operations: read a block of data from somewhere and write it somewhere else. The source and destination can be a client connection, a connection to another web server or FastCGI process, the file system, or a buffer in memory. The processing model is configured with two main parameters:

worker_processes - the number of processes to start. Usually set equal to the number of processor cores.

worker_connections - the maximum number of connections handled by one process. It is directly limited by the maximum number of open file descriptors per process (1024 by default on Linux).
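Multiplying the two parameters gives a rough ceiling on simultaneous clients. When nginx proxies a request it holds one connection to the client and one to the backend, so proxied clients count twice. A minimal sketch of that estimate (the function name is hypothetical; real limits also depend on the OS):

```python
def nginx_max_clients(worker_processes, worker_connections, proxying=False):
    """Rough upper bound on simultaneous clients.

    Each worker can hold worker_connections open connections. A proxied
    request needs a client connection plus a backend connection, so the
    number of clients served that way is halved.
    """
    total_connections = worker_processes * worker_connections
    return total_connections // 2 if proxying else total_connections


# Two cores, worker_connections at the default 1024 open files per process
print(nginx_max_clients(2, 1024))                 # 2048
print(nginx_max_clients(2, 1024, proxying=True))  # 1024
```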

Apache is a heavy server (it should be noted that it can be slimmed down considerably if desired, but that does not change its architecture). It has two main processing models, prefork and worker.

In the prefork model, each request is handled by a separate single-threaded process (processes are started in advance and reused), and that process does all the work: it accepts the request, generates the content and sends it to the user. This model is configured with the following parameters:

MinSpareServers - the minimum number of idle processes, kept so that an incoming request can be handled faster. The web server starts additional processes to keep at least this many idle.

MaxSpareServers - the maximum number of idle processes, so as not to waste memory. The web server kills surplus idle processes.

MaxClients - the maximum number of concurrently served clients. The web server will not start more than the specified number of processes.

MaxRequestsPerChild - the maximum number of requests a process handles, after which the web server kills it. Again, this saves memory, because memory in processes gradually "leaks".

This was the only model supported by Apache 1.3. It is stable and does not require multithreading support from the system, but it consumes a lot of resources and is slightly slower than the worker model.

In the worker model, Apache creates several processes with several threads in each, and each request is handled entirely in a separate thread. It is slightly less stable than prefork, since a crashing thread can bring down the whole process, but it runs a little faster and consumes fewer resources. This model is configured with the following parameters:

StartServers - sets the number of processes to start when the web server starts.

MinSpareThreads - the minimum number of idle threads, counted across all processes.

MaxSpareThreads - the maximum number of idle threads, counted across all processes.

ThreadsPerChild - the number of threads each child process creates when it starts.

MaxClients - the maximum number of concurrently served clients. In this case, sets the total number of threads in all processes.

MaxRequestsPerChild - the maximum number of requests that the process will process, after which the web server will kill it.
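Because MaxClients in the worker model counts threads, the number of child processes at full load follows from dividing it by ThreadsPerChild. A minimal sketch with illustrative values (the function name is hypothetical):

```python
def worker_children_needed(max_clients, threads_per_child):
    """Child processes the worker model needs so that MaxClients threads
    can run at once (ceiling division: a partial process counts as whole)."""
    return -(-max_clients // threads_per_child)


print(worker_children_needed(150, 25))  # 6
print(worker_children_needed(100, 30))  # 4
```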

Frontend/backend

Apache's main problem is that each request gets a separate process (or at least a thread), which is loaded with various modules and consumes a lot of resources. Moreover, this process stays in memory until it has sent all the content to the client. If the client has a slow connection and the content is large, this can take a long time. For example, the server may generate content in 0.1 seconds and then spend 10 seconds sending it to the client, occupying system resources all the while.
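The example above (0.1 s to generate, 10 s to deliver) can be turned into a rough count of busy Apache processes with Little's law: average concurrency = request rate × time each request holds a process. The rate of 10 requests per second is hypothetical, and the hand-off to a buffering frontend is treated as instant.

```python
def busy_apache_processes(requests_per_s, generate_s, deliver_s, behind_frontend):
    """Little's law estimate of Apache processes busy at the same time.

    Without a frontend, a process is held while generating the page and while
    trickling it to the client. Behind a buffering frontend it is released
    right after generating.
    """
    held_s = generate_s if behind_frontend else generate_s + deliver_s
    return requests_per_s * held_s


direct = busy_apache_processes(10, 0.1, 10, behind_frontend=False)
proxied = busy_apache_processes(10, 0.1, 10, behind_frontend=True)
print(round(direct), round(proxied))  # 101 1
```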

To solve this problem, the frontend/backend architecture is used. Its essence is that the client's request reaches a lightweight server with an architecture like Nginx's (the frontend), which proxies the request to a heavy server (the backend). The backend generates the content, hands it to the frontend very quickly and frees its system resources. The frontend stores the backend's result in its buffer and can then take as long as needed to deliver it to the client, while consuming far fewer resources than the backend. In addition, the frontend can itself handle requests for static files (CSS, JS, images, etc.), control access, check authorization, and so on.

Configuring the Nginx (frontend) + Apache (backend) bundle

It is assumed that Nginx and Apache are already installed. Configure the servers to listen on different ports. If both servers run on the same machine, it is better to bind the backend only to the loopback interface (127.0.0.1). In Nginx, this is configured with the listen directive:

In Apache, this is configured with the Listen directive:

Listen 127.0.0.1:81

Next, you need to tell Nginx to proxy requests to the backend. This is done with the directive proxy_pass http://127.0.0.1:81;. That is the entire minimal configuration. However, as mentioned above, it is better to let Nginx serve static files itself. Suppose we have a typical PHP site. Then we need to proxy only requests for .php files to Apache and handle everything else in Nginx (if your site uses mod_rewrite, you can do the rewrites in Nginx as well and simply get rid of the .htaccess files). We also need to keep in mind that the client's request arrives at Nginx and the request to Apache is made by Nginx, so by default Apache will not receive the client's original Host HTTP header and will see the client address (REMOTE_ADDR) as 127.0.0.1. The Host header is easy to substitute, but Apache determines REMOTE_ADDR itself. This problem is solved with mod_rpaf for Apache. It works as follows: Nginx knows the client's IP and adds an HTTP header (for example, X-Real-IP) containing this IP. mod_rpaf reads this header and writes its contents into Apache's REMOTE_ADDR variable. As a result, PHP scripts run by Apache see the client's real IP.
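The idea behind mod_rpaf can be modelled in a few lines. This is not mod_rpaf's code, only a sketch of its logic with a hypothetical function name: the header is trusted only when the connection comes from an address listed in RPAFproxy_ips, otherwise any client could spoof its IP by sending X-Real-IP itself.

```python
def effective_remote_addr(peer_ip, headers, trusted_proxies=("127.0.0.1",), header="X-Real-IP"):
    """Return the address Apache scripts would see as REMOTE_ADDR.

    If the TCP peer is a trusted proxy (RPAFproxy_ips) and it sent the
    configured header (RPAFheader), use the header value; otherwise keep
    the peer address.
    """
    # HTTP header names are case-insensitive
    by_name = {name.lower(): value for name, value in headers.items()}
    forwarded = by_name.get(header.lower())
    if peer_ip in trusted_proxies and forwarded:
        return forwarded.strip()
    return peer_ip


print(effective_remote_addr("127.0.0.1", {"X-Real-IP": "203.0.113.5"}))     # 203.0.113.5
print(effective_remote_addr("198.51.100.9", {"X-Real-IP": "203.0.113.5"}))  # 198.51.100.9
print(effective_remote_addr("127.0.0.1", {}))                               # 127.0.0.1
```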

Now the configuration gets more involved. First, make sure that Nginx and Apache have the same virtual host with the same root. Example for Nginx:

server {
    listen 80;
    server_name site;
    root /var/www/site/;
}

Example for Apache:

ServerName site

Now we set the settings for the above scheme:

Nginx:

server {
    listen 80;
    server_name site;

    location / {
        root /var/www/site/;
        index index.php;
    }

    location ~ \.php($|\/) {
        proxy_pass http://127.0.0.1:81;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Host $host;
    }
}

Apache:

# mod_rpaf settings
RPAFenable On
RPAFproxy_ips 127.0.0.1
RPAFheader X-Real-IP

DocumentRoot "/var/www/site/"
ServerName site

The regular expression \.php($|\/) covers two cases: a request for *.php and a request for *.php/foo/bar. The second case is needed by many CMSs. A request for the site root (/) will be internally redirected to /index.php (since we defined the index file) and will also be proxied to Apache.
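The behaviour of this location can be checked with Python's re module, whose syntax agrees with nginx's PCRE for a simple pattern like this one. Note that nginx matches locations against the URI path without the query string, so the helper (a hypothetical name) expects a path such as /index.php rather than /index.php?id=1.

```python
import re

# The same pattern as in the nginx location block
PHP_LOCATION = re.compile(r"\.php($|\/)")


def goes_to_apache(uri_path):
    """True if nginx would pass this URI path (no query string) to Apache."""
    return PHP_LOCATION.search(uri_path) is not None


for path in ["/index.php", "/index.php/foo/bar", "/style.css", "/x.php5", "/page.phtml"]:
    print(path, goes_to_apache(path))
```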

Speeding up: gzip_static + yuicompressor

Gzip on the web is a good thing: text files compress very well, traffic is saved, and content reaches the user faster. Nginx can compress on the fly, so there are no problems there. However, compression takes time, including CPU time. This is where the Nginx gzip_static directive comes to the rescue. It works as follows: if, when a file is requested, Nginx finds a file with the same name plus the extension ".gz" (for example, style.css and style.css.gz), then instead of compressing style.css it reads the already compressed style.css.gz from disk and sends it to clients that accept gzip as the compressed style.css.
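The choice gzip_static makes can be sketched as follows. It is a simplified model: real Accept-Encoding handling also considers q-values (for example gzip;q=0), which this sketch ignores, and the function name is hypothetical.

```python
import os
import tempfile


def file_to_serve(path, accept_encoding):
    """Pick the file a gzip_static-style server would send: path + ".gz" if
    the client accepts gzip and the precompressed file exists, else path."""
    gz_path = path + ".gz"
    accepts_gzip = "gzip" in accept_encoding.lower()
    if accepts_gzip and os.path.isfile(gz_path):
        return gz_path
    return path


# Demo in a throwaway directory
with tempfile.TemporaryDirectory() as root:
    css = os.path.join(root, "style.css")
    for name in (css, css + ".gz"):
        with open(name, "wb") as f:
            f.write(b"placeholder")
    chosen_gzip = os.path.basename(file_to_serve(css, "gzip, deflate"))
    chosen_plain = os.path.basename(file_to_serve(css, "identity"))
print(chosen_gzip, chosen_plain)  # style.css.gz style.css
```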

Nginx settings will look like this:

http {
    ...
    gzip_static on;
    gzip on;
    gzip_comp_level 9;
    gzip_types application/x-javascript text/css;
    ...
}

Now the .gz file only has to be generated once, and Nginx will serve it many times. In addition, we will compress CSS and JS with YUI Compressor. This utility minifies CSS and JS files as much as possible by removing whitespace, shortening names, etc.

To have all of this compressed, and even updated, automatically, you can use cron and a small script. Add the following command to cron to run once a day:

/usr/bin/find /var/www -type f -mmin -1500 \( -iname "*.js" -o -iname "*.css" \) -print0 | xargs -0 -n 1 -P 2 packfile.sh

In the -P 2 parameter, specify the number of your processor's cores; don't forget to write the full path to packfile.sh and replace /var/www with your web directory.
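The contents of packfile.sh are not shown in this part of the article, so here is an independent Python sketch of just the gzip step (no YUI Compressor minification). It mirrors the find filter above (*.js and *.css files modified within the last 1500 minutes) and writes a level-9 NAME.gz next to each file, which is what gzip_static looks for. The function name and its defaults are my own choices.

```python
import gzip
import os
import shutil
import tempfile
import time


def precompress(web_root, max_age_minutes=1500, extensions=(".js", ".css")):
    """Write NAME.gz (level 9) next to every .js/.css file under web_root
    modified within the last max_age_minutes; return the .gz paths written."""
    cutoff = time.time() - max_age_minutes * 60
    written = []
    for dirpath, _dirnames, filenames in os.walk(web_root):
        for name in filenames:
            if not name.lower().endswith(extensions):
                continue  # also skips existing .gz files
            src = os.path.join(dirpath, name)
            if os.path.getmtime(src) < cutoff:
                continue  # unchanged since the previous run
            dst = src + ".gz"
            with open(src, "rb") as fin, gzip.open(dst, "wb", compresslevel=9) as fout:
                shutil.copyfileobj(fin, fout)
            shutil.copystat(src, dst)  # give the .gz the same mtime as the original
            written.append(dst)
    return written


# Demo in a throwaway directory
with tempfile.TemporaryDirectory() as root:
    files = [("style.css", b"body { color: red; }\n"), ("app.js", b"alert(1);\n"), ("logo.png", b"\x89PNG")]
    for name, data in files:
        with open(os.path.join(root, name), "wb") as f:
            f.write(data)
    written = sorted(os.path.basename(p) for p in precompress(root))
    with gzip.open(os.path.join(root, "style.css.gz"), "rb") as f:
        roundtrip = f.read()
print(written)  # ['app.js.gz', 'style.css.gz']
```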

In the packfile.sh file, write.