Posted on http://www.allbest.ru/

1. The principles of the exchange of information between hierarchical levels of the memory subsystem.

2. The main functions and basic subsystems of a computing system.

3. The principle of the associative cache.

4. The implementation of interrupts from peripheral devices connected via a serial interface.

5. What are protection rings?

6. What is RAM segmentation?

7. The main features of video data. Matrix and graphic representation of video information.

8. What is a file? Differences between file and directory.

9. The main stages of the pipeline of modern processors.

10. What is register renaming?

Question No. 1. The principles of information exchange between hierarchical levels of the memory subsystem

The memory hierarchy of modern computers rests on two principles: locality of reference and the cost/performance ratio. The principle of locality of reference states that most programs, fortunately, do not access all of their instructions and data with equal probability, but favor a certain part of their address space.

The memory hierarchy of modern computers is built from several levels; a higher level is smaller, faster, and more expensive per byte than a lower level. The levels are interconnected: all data at one level can also be found at the level below it, all data at that lower level can be found at the next lower level, and so on down to the base of the hierarchy.

The memory hierarchy usually consists of many levels, but at any given moment we deal with only two adjacent levels. The smallest unit of information that can be either present or absent in a two-level hierarchy is called a block. The block size can be fixed or variable. If it is fixed, the memory capacity is a multiple of the block size.

A successful or unsuccessful access to the higher level is called a hit or a miss, respectively. A hit is an access to an object in memory that is found at the higher level, while a miss means that the object is not found at that level. The hit rate (hit ratio) is the fraction of accesses found at the higher level; it is often expressed as a percentage. The miss rate is the fraction of accesses not found at the higher level.

Since improved performance is the main reason a memory hierarchy exists, the hit and miss rates are important characteristics. Hit time is the time to access the higher level of the hierarchy, which includes, in particular, the time needed to determine whether the access is a hit or a miss. Miss penalty is the time to replace a block at the higher level with a block from the lower level, plus the time to deliver this block to the requesting device (usually the processor). The miss penalty has two components: access time, the time to reach the first word of the block on a miss, and transfer time, the additional time to transfer the remaining words of the block. Access time is determined by the latency of the lower-level memory, while transfer time is determined by the bandwidth of the channel between the memory devices of the two adjacent levels.
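The quantities defined above are commonly combined into an average access time: hit time plus miss rate times miss penalty. The sketch below illustrates that relationship; the function names and the numbers are illustrative assumptions, not values from the text.

```python
def miss_penalty(access_time_ns, transfer_time_ns):
    """Miss penalty = time to reach the first word + time to move the rest of the block."""
    return access_time_ns + transfer_time_ns


def average_access_time(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average access time = hit time + miss rate * miss penalty."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be a fraction between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


# Illustrative numbers only (assumed, not taken from the text).
penalty = miss_penalty(access_time_ns=40.0, transfer_time_ns=10.0)
print(average_access_time(hit_time_ns=1.0, miss_rate=0.05, miss_penalty_ns=penalty))
```

Even a small miss rate dominates the result when the miss penalty is large, which is why both rates and times matter when comparing hierarchies.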

Question No. 2. The main functions and basic subsystems of a computing system

An operating system (OS) is a set of interrelated programs designed to increase the efficiency of computer hardware by managing its resources rationally, and to make the computer convenient for the user by presenting an extended virtual machine. The main resources managed by the OS include processors, main memory, timers, data sets, disks, tape drives, printers, network devices, and some others. Resources are shared among processes. Different operating systems use different algorithms to manage resources, and the features of these algorithms ultimately determine the character of the OS. The most important OS subsystems are the process, memory, file, and external device management subsystems, as well as the user interface, data protection, and administration subsystems.

Main functions:

* Performing, at the request of programs, the fairly elementary (low-level) actions that are common to most programs (data input and output, starting and stopping other programs, allocating and releasing additional memory, etc.).

* Standardized access to peripheral devices (input-output devices).

* Management of random access memory (allocation among processes, organization of virtual memory).

* Controlling access to data on non-volatile media (such as hard disks and optical disks), organized in a particular file system.

* Providing a user interface.

* Network operations, support for the stack of network protocols.

Additional functions:

* Parallel or pseudo-parallel execution of tasks (multitasking).

* Efficient distribution of computing system resources among processes.

* Separation of access by different processes to resources.

* Interaction between processes: data exchange, mutual synchronization.

A process is a dynamic object that arises in the operating system after the user or the operating system itself decides to run a program, that is, to create a new unit of computational work. A user is a person or organization that uses the system to perform a specific function. A file is a named area of external memory to which data can be written and from which data can be read. The main purposes of files are long-term, reliable storage of information and shared access to data.

Question No. 3. The principle of the associative cache

In associative memory, elements are selected not by address but by content. Let us explain this in more detail. For address-organized memory, the concept of a minimum addressable unit (MAU) was introduced as a piece of data with an individual address. We introduce a similar concept for associative memory and call this minimum unit of storage an associative memory line (AML). Each line contains two fields: a tag field and a data field. A read request to associative memory can be put into words as follows: select the line (or lines) whose tag is equal to the specified value.

We emphasize that with such a request one of three results is possible:

1. there is exactly one line with the given tag;

2. there are several lines with the given tag;

3. there are no lines with the given tag.

Searching for a record by an attribute is typical of database access, and a database search is often an associative search. To perform such a search, one must look through all the records and compare the given tag with the tag of each record. This can be done with ordinary addressable memory (and it will clearly take a long time, in proportion to the number of stored records). We speak of associative memory when associative retrieval of data is supported by hardware. When writing to associative memory, the data element is placed in a line together with the tag that belongs to it. Any free line can be used for this.

Initially the cache is empty. When the first instruction is fetched, its code, together with several neighboring bytes of program code, is transferred (slowly) into one of the cache lines, and at the same time the high-order part of the address is written to the corresponding tag. This is how a cache line is filled.

If subsequent fetches fall within this region, they are served from the cache (quickly); this is a cache hit. If the required element is not in the cache, it is a cache miss. In that case the access goes to RAM (slowly), and the next cache line is filled at the same time.

A cache access proceeds as follows. After the effective address is formed, its most significant bits, which form the tag, are compared in hardware (quickly) and simultaneously with the tags of all cache lines. Only two of the three situations listed above are possible here: either all comparisons give a negative result (cache miss), or a positive result is registered for exactly one line (cache hit).

When reading, if a cache hit is detected, the low-order bits of the address determine the position in the cache line from which bytes are selected, and the type of operation determines the number of bytes. Obviously, if a data element is longer than one byte, it may be split across two (or more) cache lines, and fetching it then takes longer. This can be counteracted by aligning operands and instructions on cache line boundaries, which is taken into account in optimizing compilers and in manual code optimization.

If a cache miss occurs and the cache has no free lines, one cache line must be replaced with another.

The main goal of the replacement strategy is to keep in the cache the lines that are most likely to be accessed in the near future, and to replace lines that will be accessed later or not at all. Obviously, the optimal algorithm is the one that replaces the line whose next access lies further in the future than that of any other cache line.
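The behavior described in this section can be sketched as a small software model of a fully associative cache. Several things here are assumptions made for illustration: the class and method names are hypothetical, a dictionary lookup stands in for the parallel hardware tag comparison, and least-recently-used (LRU) replacement is used as a practical stand-in for the optimal policy, which would require knowledge of future accesses.

```python
from collections import OrderedDict


class FullyAssociativeCache:
    """Toy model: any memory block may occupy any cache line."""

    def __init__(self, num_lines, line_size):
        self.num_lines = num_lines
        self.line_size = line_size
        self.lines = OrderedDict()  # tag -> line data, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, address, memory):
        # High-order bits form the tag, low-order bits the position in the line.
        tag, offset = divmod(address, self.line_size)
        if tag in self.lines:
            self.hits += 1
            self.lines.move_to_end(tag)  # mark as most recently used
        else:
            self.misses += 1
            if len(self.lines) >= self.num_lines:
                self.lines.popitem(last=False)  # no free line: evict the LRU line
            start = tag * self.line_size
            self.lines[tag] = memory[start:start + self.line_size]
        return self.lines[tag][offset]


memory = bytes(range(64))
cache = FullyAssociativeCache(num_lines=2, line_size=8)
for address in (0, 3, 8, 16, 0):
    cache.read(address, memory)
print(cache.hits, cache.misses)
```

In this run only the second access (address 3) hits, because it falls in the line filled by the first access; the final access to address 0 misses again because that line was evicted to make room.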

Question No. 4. Implementation of interrupts from peripheral devices connected via a serial interface

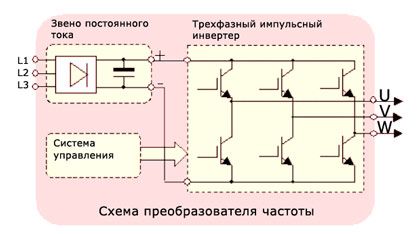

A serial interface transmits data in one direction over a single signal line, along which the information bits are sent one after another. This transmission method gives the interface, and the port that implements it, their names (serial interface and serial port). Serial data transmission can be carried out in synchronous or asynchronous mode.

In asynchronous transmission, each byte is preceded by a start bit, which signals to the receiver that a new frame is beginning. It is followed by the data bits and, optionally, a parity bit (parity check). A stop bit ends the frame.

The asynchronous frame format makes it possible to detect transmission errors.

Synchronous transmission mode assumes that the communication channel is constantly active. A transmission begins with a sync byte, followed immediately by a stream of information bits. If the transmitter has no data to send, it fills the pause by continuously sending synchronization bytes. When large amounts of data are transferred, the synchronization overhead in this mode is lower than in asynchronous mode.
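As a minimal sketch of the asynchronous frame just described, the code below builds and checks a frame. The concrete layout is an assumption chosen for illustration (start bit 0, eight data bits sent least significant bit first, one parity bit, stop bit 1), and the function names are hypothetical.

```python
def _parity_bit(data_bits, parity):
    """Parity bit that makes the count of ones even ('even') or odd ('odd')."""
    ones = sum(data_bits) % 2
    return ones if parity == "even" else 1 - ones


def build_frame(byte, parity="even"):
    """Return the list of line bits for one byte: start, data, parity, stop."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must be in range 0..255")
    data_bits = [(byte >> i) & 1 for i in range(8)]  # LSB first (assumed order)
    return [0] + data_bits + [_parity_bit(data_bits, parity)] + [1]


def parse_frame(frame, parity="even"):
    """Recover the byte, raising ValueError on a framing or parity error."""
    if len(frame) != 11 or frame[0] != 0 or frame[-1] != 1:
        raise ValueError("framing error")
    data_bits, received_parity = frame[1:9], frame[9]
    if received_parity != _parity_bit(data_bits, parity):
        raise ValueError("parity error")
    return sum(bit << i for i, bit in enumerate(data_bits))


frame = build_frame(0x41)
print(frame, hex(parse_frame(frame)))
```

A single flipped data bit changes the parity and is reported as an error, while a missing start or stop bit is reported as a framing error; two flipped bits would go unnoticed by parity alone.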

An interrupt is a signal that informs the processor that some event has occurred. Execution of the current instruction sequence is suspended, and control is transferred to the interrupt handler, which processes the event and returns control to the interrupted code. Types of interrupts:

Hardware (IRQ, Interrupt Request): events from peripheral devices (for example, keystrokes, mouse movements, or a signal from a timer, network card, or disk drive) are external interrupts; events inside the microprocessor (for example, division by zero) are internal interrupts.

Software: initiated by the executing program, that is, synchronously rather than asynchronously. Software interrupts can be used to call operating system services.

An interrupt requires suspending the current instruction stream (with its state saved) and starting the interrupt service routine (ISR). This routine must first identify the source of the interrupt (there may be several) and then perform the actions that respond to the event. If the event should trigger some action in an application program, the interrupt handler should only send a signal (through the OS) that starts or wakes up the instruction stream performing that action. The ISR itself should be optimized for execution time. Servicing interrupts, especially in protected mode, involves significant overhead on PC-compatible x86 computers, so designers try to reduce the number of interrupts. Identifying the interrupt source is a considerable burden: the PC-compatible architecture uses traditional but inefficient mechanisms for it. In some cases, device interrupts are replaced by polling, a program-controlled check of device status. Often the status of many devices is polled on a timer interrupt.
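The two duties of an ISR described above, identifying the source and handing the real work to another instruction stream, can be modeled in a few lines. This is a software simulation only: the `Device` class, its `interrupt_pending` flag, and the queue used as the wake-up signal are hypothetical stand-ins for device status registers and OS signalling.

```python
import queue


class Device:
    """Hypothetical peripheral sharing one interrupt line with other devices."""

    def __init__(self, name):
        self.name = name
        self.interrupt_pending = False
        self.data = None

    def raise_interrupt(self, data):
        self.data = data
        self.interrupt_pending = True


def interrupt_service_routine(devices, work_queue):
    """Find which devices requested service, acknowledge them, defer the work."""
    for device in devices:
        if device.interrupt_pending:          # identify the source
            device.interrupt_pending = False  # acknowledge the request
            work_queue.put((device.name, device.data))  # signal the worker only


serial_port = Device("serial")
timer = Device("timer")
pending_work = queue.Queue()

serial_port.raise_interrupt(b"A")
interrupt_service_routine([serial_port, timer], pending_work)
print(pending_work.get_nowait())
```

Keeping the routine this short reflects the advice in the text: the handler does the minimum and leaves the lengthy processing to code that runs outside interrupt context.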

Question No. 5. What are protection rings?

Protection rings are an architecture for information security and functional fault tolerance that implements hardware separation of system and user privilege levels. The privilege structure can be pictured as several concentric circles. The system mode (supervisor mode, or ring 0), which provides maximum access to resources, is the innermost circle, while the user mode with restricted access is the outermost. Traditionally, the x86 microprocessor family provides 4 protection rings.

Support for multiple protection rings was one of the revolutionary concepts included in the Multics operating system, the forerunner of today's UNIX-like operating systems.

The original Multics system had 8 protection rings, but many modern systems tend to have fewer. The processor always knows in which ring the code is executing, thanks to special machine registers.

The ring mechanism strictly limits the ways in which control can be transferred from one ring to another. A special instruction transfers control from a less privileged ring to a more privileged one (with a lower number). This mechanism is designed to limit the possibility of an accidental or intentional security breach.
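A toy model can make the two rules concrete: lower ring numbers mean more privilege, and control enters a more privileged ring only through predefined entry points. Everything named here (`can_access`, `enter_through_gate`, the `gates` table and its entries) is a hypothetical illustration, not the interface of any real processor or operating system.

```python
KERNEL_RING = 0  # innermost ring, maximum access
USER_RING = 3    # outermost ring on a four-ring design


def can_access(current_ring, required_ring):
    """Code may touch a resource only if it runs in the required ring or a lower one."""
    return current_ring <= required_ring


def enter_through_gate(entry_point, gates):
    """Return the ring the entry point runs in; unknown entry points are refused."""
    if entry_point not in gates:
        raise PermissionError(f"no gate defined for {entry_point!r}")
    return gates[entry_point]


# Hypothetical table of permitted entry points into ring 0.
gates = {"write_file": KERNEL_RING, "read_clock": KERNEL_RING}

print(can_access(USER_RING, KERNEL_RING))       # user code touching kernel data
print(enter_through_gate("write_file", gates))  # controlled transfer to ring 0
```

The point of the gate table is that user code cannot jump to an arbitrary address in a more privileged ring; it can only request one of the services the system has chosen to expose.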

Making effective use of the ring protection architecture requires close interaction between the hardware and the operating system. Operating systems designed so that they work on a large number of platforms can have a different implementation of the ring mechanism on each platform.

Question No. 6. What is RAM segmentation?

Segmentation is a technique for organizing programs in which the address structure of a program reflects its logical division. With segmentation, the address space of each program is divided into segments of different lengths that correspond to logically distinct parts of the program. For example, a segment may be a procedure or a data region. An address then consists of the segment name and an offset within the segment. Since program segments are addressed by name, segments can be placed in non-contiguous areas of memory; moreover, not all segments need to be in main memory at the same time: some can reside in external memory and be brought into main memory as needed.

As already noted, in a system with segmentation each address is a pair (s, d), where s is the segment name and d is the offset. Each program has a segment table that is always present in memory and contains one entry for each segment of the process. Using this table, the system maps program addresses to real main memory addresses. The address of the table is stored in a hardware register called the segment table register.

The address is calculated as follows. Before the system can calculate the address, the hardware checks the flag indicating whether the segment is present in main memory. If the segment is present, the segment table register is used to access the s-th row of the segment table, which holds the address of the segment in memory. Since segments have different lengths, the segment boundary must also be known in order to prevent accesses outside the segment.

If the system needs to switch to another process, it simply replaces the contents of the segment table register with the address of another segment table, after which references of the form (s, d) are interpreted according to the new table.

Address space segmentation has many advantages over absolute addressing, the main one being efficient use of RAM. If main memory does not have enough room for all segments of a program, some of them can reside temporarily in auxiliary memory. If a program needs to bring a new segment into main memory, the system can move any segment from main memory to auxiliary memory. The evicted segment need not belong to the program for which the new segment is being loaded. It does not matter which segment table the evicted segment belongs to; what matters is that when the segment is moved to auxiliary memory, the presence flag in the corresponding segment table entry is updated.
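The address calculation above can be sketched as a lookup in a per-process table. The entry layout (`base`, `limit`, `present`) and the exception names are assumptions introduced for this example; a plain Python list plays the role of the table that the segment table register points to.

```python
from dataclasses import dataclass


@dataclass
class SegmentEntry:
    base: int      # address of the segment in main memory
    limit: int     # segment length, used for the boundary check
    present: bool  # presence flag: is the segment in main memory?


class SegmentNotPresent(Exception):
    """The segment must first be brought in from external memory."""


class SegmentLimitViolation(Exception):
    """The offset points outside the segment."""


def translate(segment_table, s, d):
    """Map the pair (s, d) to a main memory address."""
    entry = segment_table[s]
    if not entry.present:
        raise SegmentNotPresent(f"segment {s} is not in main memory")
    if not 0 <= d < entry.limit:
        raise SegmentLimitViolation(f"offset {d} is outside segment {s}")
    return entry.base + d


# Hypothetical table for one process; switching processes means switching tables.
table = [
    SegmentEntry(base=0x4000, limit=0x0800, present=True),   # code
    SegmentEntry(base=0x9000, limit=0x0100, present=True),   # data
    SegmentEntry(base=0x0000, limit=0x2000, present=False),  # swapped out
]
print(hex(translate(table, 1, 0x20)))
```

Raising `SegmentNotPresent` corresponds to the point at which a real system would load the segment from external memory and retry the access.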

Question No. 7. The main features of video data. Matrix and graphic representation of video information

Video information can be either static or dynamic. Static video information includes text, figures, graphs, drawings, tables, etc. Drawings are further divided into flat (two-dimensional) and volumetric (three-dimensional).

Dynamic video information is video and animation used to convey moving images. It is based on displaying individual frames on the screen in sequence, in real time, according to a scenario.

Animated films and slide films are shown according to different principles. Animated films are shown so that the human visual system cannot distinguish individual frames. For high-quality animation, frames should change about 30 times per second. In a slide film, each frame stays on the screen for as long as human perception requires (usually from 30 s to 1 min). Slide films can be classed as static video information.

In computing there are two ways to represent graphic images: matrix (raster) and vector. Matrix (bitmap) formats are well suited to images with a complex range of colors, shades, and shapes, such as photographs, drawings, and scanned data. Vector formats are better suited to technical drawings and images with simple shapes, shading, and coloring.

In matrix formats, the image is represented by a rectangular matrix of points, or pixels (picture elements), whose positions in the matrix correspond to the coordinates of points on the screen. Besides its coordinates, each pixel is characterized by its color, background color, or brightness level. The number of bits allocated to the color of a pixel depends on the format. In high-quality images, the color of a pixel is described by 24 bits, which gives about 16 million colors. The main disadvantage of matrix (raster) graphics is the large amount of memory required to store an image, which is why various data compression methods are used. There are currently many graphic file formats, which differ in compression algorithm, in the way the matrix image is represented, and in their area of application.
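The arithmetic behind these figures is simple to check. The sketch below computes the number of representable colors for a given bit depth and the memory needed for an uncompressed raster image; the function names and the 1920 x 1080 example are illustrative assumptions.

```python
def color_count(bits_per_pixel):
    """Number of distinct colors a pixel can take at the given bit depth."""
    return 2 ** bits_per_pixel


def raster_size_bytes(width, height, bits_per_pixel):
    """Memory for an uncompressed raster image, rounded up to whole bytes."""
    total_bits = width * height * bits_per_pixel
    return (total_bits + 7) // 8


print(color_count(24))                    # 16777216, i.e. about 16 million
print(raster_size_bytes(1920, 1080, 24))  # 6220800 bytes, about 6 MB
```

A single uncompressed frame of this size already takes several megabytes, which illustrates why raster formats rely on compression.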

The vector representation, unlike matrix graphics, defines the image description not in pixels, but in curves - splines. A spline is a smooth curve that passes through two or more reference points that control the shape of a spline.

The main advantage of vector graphics is that the description of the object is simple and takes up little memory. In addition, vector graphics in comparison with matrix graphics have the following advantages:

Ease of scaling an image without compromising its quality;

Independence of the memory capacity required for storing images from the selected color model.

The disadvantage of vector images is their certain artificiality, which consists in the fact that any image must be divided into a finite set of primitives that comprise it. As with matrix graphics, there are several formats for graphic vector files.

Matrix and vector graphics do not exist apart from each other. So, vector drawings can include matrix images. In addition, vector and matrix images can be converted into each other. Graphic formats that allow you to combine matrix and vector image descriptions are called metafiles. Metafiles provide sufficient file compactness while maintaining high image quality.

The considered forms of representing static video information are used, in particular, for individual frames that make up animated films. Various methods of compressing information are used to store animated films, most of which are standardized.

Question No. 8. What is a file? Differences between file and directory


A file (from the English file, a folder for papers) is a concept in computing: an entity that provides access to some resource of a computer system and has the following properties:

A fixed name (a sequence of characters, a number or something else that uniquely characterizes a file);

A specific logical representation and its corresponding read / write operations.

A file can be anything, from a sequence of bits (although we read it in bytes, or rather in words, that is, groups of four, eight, or sixteen bytes) to a database with an arbitrary organization, or any intermediate variant, such as a strictly ordered multidimensional database.

The first case corresponds to stream and/or array read/write operations (that is, sequential access or access by index), the second to DBMS commands. The intermediate variants involve reading and parsing all kinds of file formats.

A file is a named collection of bytes of arbitrary length located on a storage medium, and a directory is a named place on a disk in which files are stored. The full file name may include directories, as in C:\papka\file.txt, or may not, as in C:\file.txt; the directory is the place where the files are located: C:\papka. A program cannot open a directory to write information into it or read from it as it does with a file; a directory exists to hold files, whereas a file can be opened and edited.
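The difference can be observed directly from a program. The sketch below creates a temporary directory named papka containing file.txt (names borrowed from the example above), then shows that the directory can be listed but not opened as a byte stream. The helper names `kind` and `demo` are hypothetical; the exact exception raised when opening a directory depends on the platform, so the code catches the common base class `OSError`.

```python
import tempfile
from pathlib import Path


def kind(path):
    """Classify a path as 'directory', 'file', or 'missing'."""
    path = Path(path)
    if path.is_dir():
        return "directory"
    if path.is_file():
        return "file"
    return "missing"


def demo():
    with tempfile.TemporaryDirectory() as root:
        folder = Path(root) / "papka"
        folder.mkdir()
        file_path = folder / "file.txt"
        file_path.write_text("hello")

        listing = [entry.name for entry in folder.iterdir()]  # a directory holds names
        content = file_path.read_text()                       # a file holds data
        try:
            with open(folder) as handle:  # a directory is not a byte stream
                handle.read()
            opened_as_file = True
        except OSError:
            opened_as_file = False
        return kind(folder), listing, kind(file_path), content, opened_as_file


print(demo())
```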

Question No. 9. The main stages of the pipeline of modern processors

The main task of the processor is to execute the instructions of a program, and to do so as quickly as possible. The simplest approach, raising the processor clock frequency, quickly runs into technological limits. Other ways of increasing performance therefore have to be found. It was a set of architectural innovations that allowed the Pentium to improve on the performance of 486 processors. The most important of them is the pipeline.

The execution of an instruction consists of a number of stages:

1) reading a command from memory,

2) determination of length,

3) determining the address of the memory cell, if used,

4) command execution,

5) saving the result.

In early processors, each instruction went through all of these stages completely before the next one started. The pipeline sped the process up: as soon as an instruction finished one stage and moved on to the next, processing of the following instruction began. This solution was used in the 486 generation (for example, in the AMD 5x86-133). The Pentium was the first to introduce a dual pipeline. Instructions could be executed in parallel (except for floating-point and branch instructions). This increased performance by about 30-35%.
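The gain from overlapping stages can be estimated with a simple cycle count. The sketch below assumes an idealized single pipeline in which each of the five stages listed above takes one cycle and there are no stalls; the stage labels and function names are illustrative.

```python
STAGES = ("fetch", "length decode", "address calculation", "execute", "write-back")


def sequential_cycles(num_instructions, num_stages=len(STAGES)):
    """Each instruction passes through every stage before the next one starts."""
    return num_instructions * num_stages


def pipelined_cycles(num_instructions, num_stages=len(STAGES)):
    """The first instruction fills the pipeline; each later one finishes a cycle apart."""
    if num_instructions == 0:
        return 0
    return num_stages + num_instructions - 1


def schedule(num_instructions):
    """Map each cycle to the (instruction index, stage) pairs active in that cycle."""
    table = {}
    for i in range(num_instructions):
        for s, stage in enumerate(STAGES):
            table.setdefault(i + s, []).append((i, stage))
    return table


print(sequential_cycles(10), pipelined_cycles(10))  # 50 versus 14 cycles
```

Real pipelines fall short of this ideal because dependencies and branches introduce stalls, which is consistent with the more modest gains reported for actual processors.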

Question No. 10. What is register renaming?

Register renaming is a method of weakening the interdependence of instructions, used in processors that execute instructions out of order.

When two or more instructions must write to the same register, they cannot be correctly executed out of order, even if there is no true data dependence between them. Such interdependencies are often called false dependencies.

Since the number of architectural registers is usually limited, the likelihood of false interdependencies is high enough, which can lead to reduced processor performance.

Register renaming converts program references to architectural registers into references to physical registers. It weakens the effect of false dependencies by using a large number of physical registers in place of a limited number of architectural ones. The processor keeps track of which physical registers hold the state of the architectural ones, and results are committed in the order prescribed by the program.
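A minimal sketch of the idea follows. It assumes three-operand instructions written as (destination, source 1, source 2) and an unlimited supply of physical registers named p0, p1, and so on; a real processor has a finite pool and reclaims registers, which this example ignores. The function name `rename` is hypothetical.

```python
def rename(instructions):
    """Rewrite architectural register names as physical ones.

    Each instruction is a (dest, src1, src2) tuple of architectural names.
    Every write gets a fresh physical register, which removes false
    dependencies between instructions that merely reuse the same name.
    """
    mapping = {}   # architectural name -> current physical name
    next_free = 0

    def allocate():
        nonlocal next_free
        name = f"p{next_free}"
        next_free += 1
        return name

    def current(reg):
        if reg not in mapping:       # first use: value comes from initial state
            mapping[reg] = allocate()
        return mapping[reg]

    renamed = []
    for dest, src1, src2 in instructions:
        s1, s2 = current(src1), current(src2)  # read sources under the old mapping
        mapping[dest] = allocate()             # fresh register for every write
        renamed.append((mapping[dest], s1, s2))
    return renamed


program = [
    ("r1", "r2", "r3"),  # r1 = r2 op r3
    ("r4", "r1", "r5"),  # reads r1: a true dependence on the first instruction
    ("r1", "r6", "r7"),  # writes r1 again: only a false dependence
]
for line in rename(program):
    print(line)
```

After renaming, the third instruction writes p7 rather than the p2 used by the first two, so it no longer has to wait for them, while the true dependence of the second instruction on p2 is preserved.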



| No. | Type of memory | Access time (1985) | Typical capacity (1985) | Price per byte (1985) | Access time (2000) | Typical capacity (2000) | Price per byte (2000) |
|---|---|---|---|---|---|---|---|
| 1 | Superoperative memory (registers) | 0.2 - 5 ns | 16/32 bit | $3 - 100 | 0.01 - 1 ns | 32/64/128 bit | $0.1 - 10 |
| 2 | Fast buffer memory (cache) | 20 - 100 ns | 8 KB - 64 KB | ~ $10 | 0.5 - 2 ns | 32 KB - 1 MB | $0.1 - 0.5 |
| 3 | Operational (main) memory | ~ 0.5 ms | 1 MB - 256 MB | $0.02 - 1 | 2 - 20 ns | 128 MB - 4 GB | $0.01 - 0.1 |
| 4 | External memory (mass memory) | 10 - 100 ms | 1 MB - 1 GB | $0.002 - 0.04 | 5 - 20 ms | 1 GB - 0.5 TB | $0.001 - 0.01 |

The processor registers make up its context and store the data used by the processor instructions being executed at a given moment. Processor registers are usually accessed by their mnemonic names in processor instructions.

The cache is used to match the speeds of the CPU and main memory. Computing systems use a multi-level cache: level 1 cache (L1), level 2 cache (L2), and so on. Desktop systems typically use a two-level cache, while server systems use a three-level cache. The cache stores instructions or data that are likely to be needed by the processor in the near future. The cache is transparent to software, so it is usually not directly accessible to programs.

RAM usually stores functionally complete program modules (the operating system kernel, executable programs and their libraries, drivers of the devices in use, etc.) and the data directly involved in the work of those programs. It is also used to hold the results of calculations or other data processing before they are transferred to an external memory device, to an output device, or to communication interfaces.

Each cell of random access memory is assigned a unique address. Memory organization methods allow programmers to use the whole computer system efficiently. These methods include the flat memory model and the segmented memory model. With the flat model, the program works with a single continuous, linear address space in which memory cells are numbered sequentially from 0 to 2^n - 1, where n is the address width of the CPU. With the segmented model, memory appears to the program as a group of independent address blocks called segments. To address a byte of memory, the program must use a logical address consisting of a segment selector and an offset. The segment selector selects a specific segment, and the offset points to a specific cell in the address space of the selected segment.

Theme 3.1 Organization of computing in computing systems

The purpose and characteristics of computing systems (CS). Organization of computing in computing systems. Parallel computers, the concepts of instruction stream and data stream. Associative systems. Matrix systems. Pipelined computing. Instruction pipeline, data pipeline. Superscalar processing.

The student must know:

The concept of a flow of commands;

The concept of data flow;

Types of computing systems;

Architectural features of computing systems

Computing Systems

Computing system (CS) - a set of interconnected and interacting processors or computers, peripheral equipment, and software, designed to collect, store, process, and distribute information.

A CS is created with the following main objectives:

· Increasing system performance by speeding up data processing;

· Increasing the reliability and correctness of calculations;

· Providing the user with additional services, etc.

Theme 3.2

Classification of CS by the number of instruction and data streams: OKOD (SISD), OKMD (SIMD), MKOD (MISD), MKMD (MIMD).

Classification of multiprocessor CS by the way shared memory is implemented: UMA, NUMA, COMA. Comparative characteristics, hardware and software features.

Classification of multi-machine CS: MPP, NOW, and COW. Purpose, characteristics, features.

Examples of CS of various types. Advantages and disadvantages of various types of computing systems.

Classification of Computing Systems

A distinctive feature of a computing system compared with a classical computer is that it contains several processing units that work in parallel.

Parallel execution of operations significantly improves system performance. It can also significantly increase reliability (if one component of the system fails, another can take over its function) and the trustworthiness of results, if operations are duplicated and the results are compared.

Computing systems can be divided into two groups:

· multi-machine;

· multiprocessor.

A multi-machine computing system consists of several separate computers. Each computer in such a system has a classical architecture, and systems of this kind are widely used. However, a multi-machine system pays off only for problems with a special structure: the problem must divide into as many loosely coupled subtasks as there are computers in the system.

A multiprocessor architecture assumes several processors in one computer, so many data streams and many command streams can be organized in parallel. Thus, several fragments of the same task can be executed simultaneously. This gives multiprocessor computing systems a performance advantage over single-processor ones.

The disadvantage is the possibility of conflict situations when multiple processors are accessing the same memory area.

A feature of multiprocessor computing systems is the presence of a common RAM as a shared resource (Figure 11).

Figure 11 - Architecture of a multiprocessor computing system

Flynn's classification

Of all the classifications of computing systems, the most widely used is the one proposed in 1966 by M. Flynn. It is based on the concept of a stream, meaning a sequence of commands or data items processed by the processor. Depending on the number of command streams and data streams, Flynn identifies four classes of architectures:

· OKOD (SISD, single instruction - single data) - single command stream, single data stream. This class includes classic von Neumann machines. Pipelining does not matter here, so both the CDC 6600 with scalar functional units and the CDC 7600 with pipelined ones fall into this class.

· MKOD (MISD, multiple instruction - single data) - multiple command streams, single data stream. In this architecture, several processors process the same data stream. An example would be a computing system whose processors all receive the same distorted signal, each processing it with its own filtering algorithm. Nevertheless, neither Flynn nor other computer architecture specialists have so far been able to point to a real computing system built on this principle. Some researchers assign pipelined systems to this class, but this view has not gained general acceptance. The existence of an empty class should not be considered a flaw of Flynn's classification: such classes may prove useful in developing new concepts in the theory and practice of building computing systems.

· OKMD (SIMD, single instruction - multiple data) - single command stream, multiple data streams. Commands are issued by one control processor and executed simultaneously by all processing elements, each on its own local data.

· MKMD (MIMD, multiple instruction - multiple data) - multiple command streams, multiple data streams. A set of computers, each running its own program on its own input data.

Flynn's classification is the one most often used for an initial assessment of a computing system, since it immediately shows the basic principle on which the system operates. However, it has clear drawbacks: some architectures cannot be assigned unambiguously to one class, and the MIMD class is overcrowded.

Existing computing systems of the MIMD class form three subclasses: symmetric multiprocessors (SMP), clusters, and massively parallel systems (MPP). This classification is based on a structural and functional approach.

Symmetric multiprocessors consist of a set of processors that have identical access to memory and external devices and run under the control of a single operating system (OS). A single-processor computer is a special case of SMP. All processors of an SMP share one memory with a single address space.

Using SMP provides the following features:

· Scaling applications at low initial cost by running them without modification on new, more powerful hardware;

· Creating applications in familiar software environments;

· Identical access time to all memory;

· The ability to forward messages with high bandwidth;

· Support for coherence across the caches and main memory blocks, together with indivisible synchronization and locking operations.

A cluster system is formed from modules connected by a communication system or by shared external storage devices, for example disk arrays.

The cluster size varies from several modules to several dozen modules.

Within the framework of both shared and distributed memory, several models of memory system architectures are implemented. Figure 12 shows the classification of such models used in computing systems of the MIMD class (also true for the SIMD class).

Figure 12 - Classification of models of memory architectures of computing systems

In systems with shared memory, all processors have equal access to a single address space. The shared memory can be built as a single block or from modules; the modular option is the usual one.

Shared-memory computing systems in which every processor accesses memory in the same way and in the same time are called systems with uniform memory access, UMA (Uniform Memory Access). This is the most common memory architecture for parallel computing systems with shared memory.

Technically, UMA systems assume a node that connects each of the p processors with each of the t memory modules. The simplest way to build such a system is to connect several processors (P_i) to a single memory (M_P) through a common bus, as shown in Figure 13a. In this case, however, only one processor can use the bus at any moment, so the processors must compete for bus access. While processor P_i fetches a command from memory, the other processors P_j (i ≠ j) must wait until the bus is free. If the system has only two processors, they can run at close to maximum performance, because their bus accesses can alternate: while one processor decodes and executes a command, the other can use the bus to fetch its next command from memory. When a third processor is added, however, performance begins to drop. With ten processors on the bus, the performance curve (Figure 13b) becomes horizontal, so adding an eleventh processor no longer improves performance. The lower curve in that figure illustrates the fact that the memory and the bus have a fixed bandwidth, determined by the memory cycle time and the bus protocol, and in a common-bus multiprocessor this bandwidth is divided among the processors. If the processor cycle time is longer than the memory cycle time, many processors can be connected to the bus. In practice, however, the processor is usually much faster than memory, so this scheme is not widely used.
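The saturation of a common bus can be approximated with a very rough model. It assumes (an assumption made here for illustration, not a figure from the text) that each processor needs the bus for a fixed fraction of its running time, 10% in this sketch, so the bus is fully occupied once ten processors share it. The model ignores the gradual loss of performance before saturation.

```python
def bus_speedup(n_processors, bus_fraction=0.1):
    """Approximate speedup of a shared-bus multiprocessor.

    Assumption: every processor occupies the bus for `bus_fraction` of its
    running time, so at most 1 / bus_fraction processors can be kept busy.
    """
    saturation_point = 1.0 / bus_fraction
    return min(float(n_processors), saturation_point)


# Speedup grows with the number of processors until the bus is saturated.
for n in (1, 2, 5, 10, 11, 20):
    print(n, bus_speedup(n))
```

Under this assumption, going from ten to eleven processors gives no further gain, because the fixed bus bandwidth is already fully used.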

An alternative way to build a UMA multiprocessor computing system with shared memory is shown in Figure 13c. Here the bus is replaced by a switch that routes processor requests to one of several memory modules. Although there are several memory modules, they all belong to a single virtual address space. The advantage of this approach is that the switch can serve several requests in parallel. Each processor can be connected to its own memory module and access it at the maximum permissible speed. Contention between processors can arise when they try to access the same memory module simultaneously. In that case only one processor gets access, and the others are blocked.

Unfortunately, the UMA architecture does not scale well. The most common systems contain 4-8 processors, and systems with 32-64 processors are much rarer. In addition, such systems are not fault tolerant, since the failure of one processor or memory module brings down the entire computing system.

Figure 13 - Shared memory:

a) combining processors using the bus and a system with local caches;

b) system performance as a function of the number of processors on the bus;

c) multiprocessor computing system with shared memory consisting of separate modules

Another approach to building a shared-memory computing system is non-uniform memory access, NUMA (Non-Uniform Memory Access). As before, there is a single address space, but each processor has local memory. A processor accesses its own local memory directly, which is much faster than accessing remote memory through a switch or network. Such a system can be supplemented with global memory, in which case the local memories act as fast caches for the global memory. This scheme improves performance but cannot postpone indefinitely the point at which the performance curve flattens. If each processor has a local cache (Figure 13a), there is a high probability (p > 0.9) that the required command or data item is already in local memory. A high hit probability in local memory significantly reduces the number of processor accesses to global memory and thus increases efficiency. The break point of the performance curve (upper curve in Figure 13b), up to which adding processors remains effective, now moves to around 20 processors, and the point where the curve becomes horizontal moves to around 30 processors.

Within the NUMA concept, several different approaches are implemented, denoted by the abbreviations COMA, CC-NUMA and NCC-NUMA.

In the cache-only architecture (COMA, Cache Only Memory Architecture), the local memory of each processor is built as a large cache for fast access by its own processor. The caches of all processors together are treated as the global memory of the system; there is no separate global memory. The fundamental feature of the COMA concept is its dynamic nature. Data is not statically bound to a specific memory module and has no unique address that stays fixed for the lifetime of a variable. In the COMA architecture, data is moved to the cache of the processor that requested it last, so a variable is not tied to a unique address and can reside in any physical cell at any time. Moving data from one local cache to another does not involve the operating system, but it requires complex and expensive memory management hardware. So-called cache directories are used to organize this mode of operation. Note also that the last copy of a data item is never removed from the cache.

Since in the COMA architecture data is moved to the local cache of the owning processor, such systems have a significant performance advantage over other NUMA architectures. On the other hand, if a single variable, or two different variables stored in the same cache line, are needed by two processors, that cache line must be moved back and forth between the processors on every access. Such effects can depend on the details of memory allocation and lead to unpredictable behavior.

The cache-coherent non-uniform memory access model (CC-NUMA, Cache Coherent Non-Uniform Memory Architecture) is fundamentally different from the COMA model. A CC-NUMA system does not use cache memory as its main memory but ordinary physically distributed memory. No pages or data are copied between memory locations, and there is no software message passing. There is simply one memory map, with its parts physically connected by copper cable, and smart hardware. Hardware cache coherence means that no software is needed to keep multiple copies of updated data or to transfer them; the hardware handles all of this. Local memory modules in different nodes of the system can be accessed simultaneously, and access to them is faster than to remote memory modules.

The non-cache-coherent non-uniform memory access model (NCC-NUMA, Non-Cache Coherent Non-Uniform Memory Architecture) differs from CC-NUMA in the way its name suggests. The memory architecture assumes a single address space but does not ensure the consistency of global data at the hardware level. Managing the use of such data is left entirely to software (applications or compilers). Although this looks like a drawback, the architecture proves very useful for increasing the performance of computing systems with a DSM-type memory architecture, which is discussed in the section "Distributed memory architecture models".

In general, NUMA-based shared-memory computing systems are called virtual shared memory architectures. This type of architecture, CC-NUMA in particular, has recently come to be regarded as an independent and rather promising type of MIMD computing system.

Models of distributed memory architectures. In a system with distributed memory, each processor has its own memory and can address only that memory. Some authors call such systems multi-machine computing systems or multicomputers, emphasizing that the blocks from which the system is built are themselves small computing systems with a processor and memory. Distributed memory architecture models are usually denoted NORMA (No Remote Memory Access), that is, architectures without direct access to remote memory. The name reflects the fact that each processor has access only to its own local memory. Remote memory (the local memory of another processor) can be accessed only by exchanging messages with the processor that owns it.

This organization has a number of advantages. First, there is no contention for a bus or switches when accessing data: each processor can use the full bandwidth of the path to its own local memory. Second, the absence of a common bus removes the associated limit on the number of processors: the size of the system is limited only by the network that connects the processors. Third, the cache coherence problem disappears. Each processor may change its data independently, without having to keep copies in its local cache consistent with the caches of other processors.

Student must

know:

Classification of computing systems;

Examples of computing systems of various types.

be able to:

- choose the type of computing system in accordance with the task at hand.


Page Created Date: 2016-07-22

Classification of MKMD systems

In an MKMD (MIMD) system, each processor element (PE) executes its program largely independently of the other PEs. At the same time, the processor elements must interact with each other in some way. The method of interaction determines the conventional division of MKMD systems into systems with shared memory and systems with distributed memory (Fig. 5.7).

Systems with shared memory, which are characterized as tightly coupled, have a common data and instruction memory available to all processor elements through a common bus or an interconnection network. Such systems are called multiprocessors. This type includes symmetric multiprocessors (UMA, or SMP, Symmetric Multiprocessor), systems with non-uniform memory access (NUMA, Non-Uniform Memory Access) and systems in which local memory is used as a cache (COMA, Cache Only Memory Access).

If all processors have equal access to all memory modules and all input / output devices and each processor is interchangeable with other processors, then such a system is called an SMP system. In systems with shared memory, all processors have equal access to a single address space. A single memory can be built as a single block or on a modular basis, but usually the second option is practiced.

SMP systems belong to the UMA architecture. Shared-memory computing systems in which every processor accesses memory in the same way and in the same time are called systems with uniform memory access, UMA (Uniform Memory Access).

In terms of memory levels in the UMA architecture, three options for building a multiprocessor are considered:

Classical (only with shared main memory);

With an additional local cache for each processor;

With additional local buffer memory for each processor (Fig. 5.8).

From the point of view of how the processors interact with shared resources (memory and I/O devices), the following types of UMA architectures are generally distinguished:

With common bus and time division (7.9);

With a crossbar (coordinate) switch;

Based on multi-stage networks.

Using a single bus limits the size of a UMA multiprocessor to 16 or 32 processors. A larger system requires a different type of interconnection network. The simplest scheme is the crossbar (coordinate) switch (Fig. 5.10). Crossbar switches have been used for many decades to connect a group of incoming lines to a set of outgoing lines in an arbitrary way.

The crossbar switch is a non-blocking network: a processor can always be connected to the required memory block, even if some line or node is already in use. Moreover, no advance planning is required.

Crossbar switches are well suited to medium-sized systems (Fig. 5.11).

Multi-stage networks can be built from 2x2 switches. One possible variant is the omega network (Fig. 5.12). For n processors and n memory modules, it requires log2 n stages with n/2 switches per stage, for a total of (n/2) log2 n switches. This is much better than the n^2 nodes (crosspoints) of a crossbar switch, especially for large n.
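The difference in hardware cost can be checked with a short calculation. The sketch below assumes that n is a power of two, as is usual for an omega network; the function names are illustrative.

```python
def crossbar_crosspoints(n):
    """Number of crosspoints in an n x n crossbar switch."""
    return n * n


def omega_network_size(n):
    """Return (stages, switches) for an omega network built from 2x2 switches.

    Assumption: n is a power of two, so log2(n) is an integer.
    """
    if n < 2 or n & (n - 1) != 0:
        raise ValueError("n must be a power of two, at least 2")
    stages = n.bit_length() - 1      # log2(n)
    switches = (n // 2) * stages     # n/2 switches in each stage
    return stages, switches


for n in (8, 64, 1024):
    stages, switches = omega_network_size(n)
    print(n, stages, switches, crossbar_crosspoints(n))
```

For 1024 processors this gives 10 stages and 5120 switches, against 1048576 crosspoints in a crossbar.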

The size of single-bus UMA multiprocessors is usually limited to a few dozen processors, while crossbar and switch-based multiprocessors require expensive hardware and are not much larger. To go beyond 100 processors, memory access must be organized differently.

For greater scalability, multiprocessors adopt an architecture with non-uniform memory access, NUMA (Non-Uniform Memory Access). Like UMA multiprocessors, NUMA machines provide a single address space for all processors, but unlike UMA machines, access to local memory modules is faster than access to remote ones.

The NUMA concept implements approaches denoted by the abbreviations NC-NUMA and CC-NUMA.

If the access time to the remote memory is not hidden (since there is no cache), then such a system is called NC-NUMA (No Caching NUMA - NUMA without caching) (Fig. 5.13).

If there are consistent caches, then the system is called CC-NUMA (Coherent Cache Non-Uniform Memory Architecture - NUMA with consistent cache) (7.14).

Since the creation of the von Neumann computer, main memory in a computer system has been organized as a linear (one-dimensional) address space consisting of a sequence of words and, later, bytes. External memory is organized in the same way. Although this organization reflects the hardware used, it does not match the way programs are usually written. Most programs are organized as modules, some of which are unchangeable (read-only, execute-only), while others contain data that can be modified.

If the operating system and hardware can work effectively with user programs and data represented as modules, this provides a number of advantages.

Modules can be created and compiled independently of each other, and all links from one module to another are resolved by the system while the program is running.

Different modules can be given different degrees of protection (read-only, execute-only) at very moderate overhead.

It is possible to use a mechanism that provides the joint use of modules by different processes (for the case of cooperation of processes in work on one task).

A paraphrase of Parkinson's law states: "Programs expand to fill all the memory available to hold them" (this was said about operating systems). Ideally, programmers would like memory of unlimited size and speed that is also non-volatile, i.e. keeps its contents when the power is turned off, and inexpensive. No such memory exists yet. At the same time, at any stage in the development of storage technology, the following fairly stable relations hold:

the shorter the access time, the more expensive the bit;

the higher the capacity, the lower the cost of a bit;

the higher the capacity, the longer the access time.

Moving down the memory hierarchy (Fig. 6.1), the following happens:

bit cost is reduced;

capacity increases;

access time increases;

the frequency of processor accesses to memory is reduced.

Fig. 6.1. Memory hierarchy

Suppose the CPU has access to memory of two levels. The first level contains E_1 words and has an access time T_1 = 1 ns. The CPU can access this level directly. However, if the required word is on the second level, it must first be transferred to the first level. Moreover, not just the required word is transferred but the whole data block containing it. Because the addresses the CPU accesses tend to cluster in groups (loops, subroutines), the CPU keeps referring to a small, repeating set of commands. The processor will therefore work with a newly loaded block of memory for a fairly long time.

Let T_2 = 10 ns denote the access time of the second memory level, and P the ratio of the number of times the required word is found in fast memory to the total number of accesses. Let P = 0.95 in our example (i.e. 95% of accesses go to fast memory, which is quite realistic). The average memory access time can then be written as:

T_avg = 0.95 * 1 ns + 0.05 * (1 ns + 10 ns) = 1.5 ns
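The same calculation can be written as a small function, which makes it easy to see how the average access time reacts to the hit ratio. The function and parameter names are illustrative.

```python
def average_access_time(hit_ratio, t1, t2):
    """Average access time of a two-level memory.

    A hit costs t1; a miss costs t1 + t2, because the block is first moved
    from the second level to the first and then read from there.
    """
    return hit_ratio * t1 + (1 - hit_ratio) * (t1 + t2)


# Values from the example: T1 = 1 ns, T2 = 10 ns, hit ratio 0.95.
print(f"{average_access_time(0.95, 1.0, 10.0):.2f} ns")

# A lower hit ratio quickly pushes the average towards the slower level.
for p in (0.99, 0.95, 0.80, 0.50):
    print(p, round(average_access_time(p, 1.0, 10.0), 2))
```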

This principle applies not only to memory with two levels, and in practice it is applied more widely. The amount of RAM significantly affects the course of the computing process, since it limits the number of programs that can run simultaneously, i.e. the level of multiprogramming. If a process spends a fraction p of its time waiting for I/O operations to complete, then in the ideal case the CPU utilization Z is given by

Z = 1 - p^n, where n is the number of processes.
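The dependence of CPU utilization on the number of processes can be tabulated directly from the formula Z = 1 - p^n. This is a minimal sketch; it inherits the idealized assumption that the processes wait for I/O independently of each other.

```python
def cpu_utilization(io_wait_fraction, n_processes):
    """Ideal CPU utilization Z = 1 - p**n for n independent processes."""
    return 1 - io_wait_fraction ** n_processes


# I/O wait fractions of 20%, 50% and 80%, for 1 to 5 processes.
for p in (0.2, 0.5, 0.8):
    row = [round(cpu_utilization(p, n), 2) for n in range(1, 6)]
    print(p, row)
```

With an 80% I/O wait, even four processes keep the CPU busy only about 59% of the time, which is why a high level of multiprogramming, and hence a lot of memory, is needed.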

Figure 6.2 shows Z as a function of the number of processes n for various I/O wait fractions (20%, 50% and 80%). The large number of tasks needed for high processor utilization requires a large amount of RAM. For cases where the available memory is insufficient to provide an acceptable level of multiprogramming, a method of organizing the computing process was proposed in which the images of some processes are temporarily unloaded to disk, in whole or in part.

Obviously, it makes sense to temporarily unload inactive processes that are waiting for some resource, including the next CPU time slice. When the turn of an unloaded process comes, its image is returned from disk to RAM. If it turns out that there is not enough free space in RAM, another process is unloaded to disk.

This substitution (virtualization) of RAM by disk memory raises the level of multiprogramming, since the amount of RAM no longer limits the number of simultaneously executing processes so severely. The total amount of memory occupied by process images can then significantly exceed the available RAM.

In this case, a virtual RAM is provided whose size far exceeds the real memory of the system and is limited only by the addressing capability of the processor used (2^32 bytes = 4 GB in a Pentium-based PC). In general, a resource is called virtual (apparent) if it has properties (here, a large amount of RAM) that it does not actually possess.

RAM virtualization is carried out automatically by a combination of hardware and software (processor circuitry and the operating system), without the programmer's involvement, and does not affect the logic of the application.

Memory virtualization can be based on two approaches:

swapping - process images are unloaded to disk and returned to RAM in their entirety;

virtual memory - parts of process images (segments, pages, blocks, etc.) are moved between RAM and disk.

Swapping has the following drawbacks:

redundancy of the data moved, and hence system slowdown and inefficient use of memory;

the inability to load a process whose virtual space exceeds the available free memory.

Virtual memory does not have these drawbacks, but its key problem is translating virtual addresses into physical ones (why this is a problem will become clear below; for now, note that the translation takes significant time unless special measures are taken).

Virtual memory concept

In a computing system with virtual memory, the address space of a process (the process image) is kept in external memory during execution and is loaded into real memory dynamically, in parts, as needed, into any free area of real memory. The program knows nothing about this: it is written and executed as if it resided entirely in real memory.

Virtual memory is a simulation of RAM in external memory.

The mechanism that maps virtual addresses to real ones establishes a correspondence between them and is called dynamic address translation (DAT).

The computer here acts as a logical device rather than a physical machine with unique characteristics. Dynamic address translation is supported at the firmware level. In Intel microprocessors, virtual memory is supported starting with the 386 processor.

This procedure is implemented in ES computers of Series 2 and higher, in the SM 1700, and in IBM PCs with the i386 and higher.

With virtual memory, adjacent virtual addresses are not necessarily adjacent real addresses (artificial contiguity). The programmer no longer has to consider where procedures and data are placed in real memory and can write programs in the most natural way, working out only the details of the algorithm and the program structure and ignoring the specifics of the hardware.

The dynamic address translation mechanism maintains tables showing which virtual memory cells are currently in real memory and where exactly. Mapping individual items (word by word or byte by byte) makes no sense, since the mapping tables would need more real memory than the processes themselves, so addresses are mapped at the level of memory blocks.

Figure 1. Dynamic address translation

Problem: which part of each process to keep in main memory, evicting some sections from real memory and loading others at certain moments.

Another question to be settled: what block size to choose?

Increasing the block size shrinks the block mapping table but increases the transfer time; conversely, decreasing the block size enlarges the tables and shortens the time of exchange with external memory.
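The effect of block size on the mapping table can be estimated with a short calculation. The 32-bit virtual address space and the 4-byte table entry used below are assumptions made for illustration only.

```python
ENTRY_SIZE = 4  # assumed size of one mapping table entry, in bytes


def mapping_table_entries(address_bits, block_size):
    """Number of blocks, and hence table entries, in the address space."""
    return 2 ** address_bits // block_size


# Assumed 32-bit virtual address space with three candidate block sizes.
for block_size in (512, 4096, 65536):
    entries = mapping_table_entries(32, block_size)
    print(block_size, entries, entries * ENTRY_SIZE)
```

With 4096-byte blocks the table has 1048576 entries; with 512-byte blocks it is eight times larger, while each individual transfer is eight times smaller.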

Blocks can be of fixed size (pages) or variable size (segments). Accordingly, there are four ways to organize virtual memory:

1. Dynamic page organization.

2. Segment organization.

3. The combined segment-page organization.

4. Two-level page organization.

Virtual addresses in page and segment systems have two components and form an ordered pair (p, d), where p is the number of the block (page or segment) containing the item and d is the offset from the start address of that block. A virtual address V = (p, d) is translated into a real memory address r as follows. When a process is activated, the address of its block mapping table is loaded into a special processor register. The block number p is used to read an entry from the block mapping table. For pages loaded into RAM, the entry gives the correspondence between virtual and physical page numbers; otherwise it notes that the virtual page has been unloaded to disk. The page table also contains control information, such as a modified flag, a non-swappable flag (unloading of some pages may be prohibited), a page access flag (used to count the number of accesses over a period of time), and other data generated and used by the virtual memory mechanism. The offset d is added to the physical start address of the selected block that was read from the table, giving the required real address.
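The translation steps can be sketched for the paged case. The page size, the contents of the page table and the names used here are hypothetical; a real table entry would also hold the control flags mentioned above.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


class PageFault(Exception):
    """Raised when the referenced virtual page is not in real memory."""


# Hypothetical page table: virtual page number -> (present, physical frame).
PAGE_TABLE = {
    0: (True, 5),
    1: (False, None),  # this page has been unloaded to disk
    2: (True, 9),
}


def translate(virtual_address, table=PAGE_TABLE, page_size=PAGE_SIZE):
    """Translate a virtual address V = (p, d) into a real address r."""
    p, d = divmod(virtual_address, page_size)      # split into (p, d)
    present, frame = table.get(p, (False, None))   # read the table entry
    if not present:
        raise PageFault(f"virtual page {p} is not in real memory")
    return frame * page_size + d                   # block start + offset


print(translate(2 * PAGE_SIZE + 100))  # page 2 maps to frame 9
```

In a real system the page fault would be handled by the operating system, which loads the missing page and restarts the access.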

Figure 2. Translation of a virtual address into a real memory address

What strategies are used to manage virtual memory? As with real memory management, there are three categories of virtual memory management strategies, whose goal is to reduce page waiting time and to keep only the blocks in use in real memory.

Fetch (push-in) strategy, which determines when a page or segment should be copied from external memory into main memory.

a) demand fetching - the system waits for the running process to reference a page / segment (an interrupt on a missing page);

arguments for:

it is impossible to predict the course of program execution;

guarantee the location in the OP only the necessary pages;

the overhead of determining the required pages is minimal;

argument against: fetching one block at a time increases the total waiting time.

b) anticipatory fetching - blocks are loaded before they are referenced. Advantage: reduced waiting time.

Hardware performance is now increasing, so non-optimal decisions do not noticeably reduce the efficiency of computing systems.

Placement strategy, which determines where to place an incoming page / segment. In page systems the choice is trivial: any free block will do, since pages have a fixed size. Segmented systems use the same strategies as for real memory (first fit, best fit, worst fit).
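The three placement strategies for segments can be compared on a small list of free areas. The representation of free areas as (start, length) pairs and the function name are illustrative assumptions.

```python
def choose_free_area(free_areas, size, strategy):
    """Return the index of the free area chosen for a segment, or None.

    free_areas is a list of (start, length) pairs; strategy is one of
    "first", "best" (smallest area that fits) or "worst" (largest area).
    """
    candidates = [
        (index, length)
        for index, (_start, length) in enumerate(free_areas)
        if length >= size
    ]
    if not candidates:
        return None
    if strategy == "first":
        return candidates[0][0]
    if strategy == "best":
        return min(candidates, key=lambda item: item[1])[0]
    if strategy == "worst":
        return max(candidates, key=lambda item: item[1])[0]
    raise ValueError(f"unknown strategy: {strategy}")


free_areas = [(0, 300), (1000, 120), (2000, 500)]
for strategy in ("first", "best", "worst"):
    print(strategy, choose_free_area(free_areas, 100, strategy))
```

For a 100-byte segment, first fit picks the area at index 0, best fit the 120-byte area at index 1, and worst fit the 500-byte area at index 2.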

Replacement (push-out) strategy, which determines which page / segment to remove from main memory to make room for the incoming page.

The main problem here is thrashing, in which a page that has just been pushed out must be brought back into real memory at the very next moment.

Consider the procedures for choosing the blocks to push out of main memory.

a) random page replacement - not used in real systems;

b) replacing the page that arrived first (FIFO queue). To implement it, pages must be timestamped when they are loaded.

Argument: the page has already had its chance to be used.

In practice: actively used pages are likely to be replaced, since a page may have been in memory for a long time precisely because it is constantly in use, for example the pages of a text editor in use.

c) replacing the least recently used page (LRU).

Implementation requires timestamps that are updated on every access. Heuristic argument: the recent past is a good guide to the future.

The disadvantage is significant overhead: timestamps must be updated constantly.

d) replacing the least frequently used page - requires per-page access counters (less costly than updated timestamps). Intuitively justified, but may also be irrational.

e) replacing a page not used recently - the most common algorithm, with low overhead. It is implemented with two hardware bits per page:

1. Reference bit: 0 - the page was not referenced; 1 - the page was referenced.

2. Modification bit: 0 - the page is unchanged; 1 - the page was changed.

The following combinations are possible: {00, 10, 01, 11}. If the page has not been modified, it can simply be overwritten without being saved to disk.
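The FIFO and least-recently-used procedures can be compared by counting page faults on a sample reference string. The sketch below is illustrative: the reference string and the number of frames are arbitrary, and the last function assumes the convention that a bit value of 1 means "referenced" or "modified".

```python
from collections import OrderedDict, deque


def fifo_faults(references, n_frames):
    """Count page faults when the page loaded first is replaced first."""
    frames = deque()
    faults = 0
    for page in references:
        if page not in frames:
            faults += 1
            if len(frames) == n_frames:
                frames.popleft()          # evict the oldest loaded page
            frames.append(page)
    return faults


def lru_faults(references, n_frames):
    """Count page faults when the least recently used page is replaced."""
    frames = OrderedDict()                # ordered from oldest to newest use
    faults = 0
    for page in references:
        if page in frames:
            frames.move_to_end(page)      # refresh the "timestamp"
        else:
            faults += 1
            if len(frames) == n_frames:
                frames.popitem(last=False)
            frames[page] = True
    return faults


def nru_class(referenced, modified):
    """Replacement class from the two bits; lower classes are evicted first."""
    return 2 * referenced + modified


refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
print(fifo_faults(refs, 3), lru_faults(refs, 3))  # 15 12
```

On this reference string with three frames, FIFO causes 15 page faults and LRU 12, which illustrates the heuristic that the recent past is a good guide to the future. The price is that LRU must update its ordering on every access, while the two-bit scheme only needs the hardware to set bits.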